An Introduction to Storage for Docker Enterprise


Introduction

The Docker Enterprise platform delivers a secure, managed application environment for developers and operations personnel to build, ship, and run enterprise applications and custom business processes. This platform often requires storage across the different phases of the Software Delivery Supply Chain.

A variety of storage solutions exist for enterprise use and a rapidly growing container ecosystem continues to provide many more storage solutions for future consideration. The growing ecosystem of new storage options combined with a need to utilize existing storage investments brings forth the critical requirement that storage must be highly adaptable and configurable to achieve the optimal platform for containerized workloads.

The Docker Enterprise platform provides a default prescriptive storage configuration ‘out of the box.’ This allows consumers to begin building, shipping, and running containerized applications quickly.

However, and perhaps most importantly, Docker Enterprise provides a pluggable “batteries included, but replaceable” architecture that allows for the implementation and configuration of storage solutions that best meet your requirements across the entire Software Delivery Supply Chain. This pluggable architecture approach for implementing the storage of choice also includes the ability to interchange other critical enterprise infrastructure services in a pluggable fashion such as networking, logging, authentication, authorization, and monitoring.

Overview

Docker separates storage use cases within the Docker Enterprise Platform into three categories:

Docker image run storage (storage drivers)

Storage used for reading image filesystem layers for a running container typically requires high IOPS, which in turn drives the underlying storage requirements. These higher-performance disk requirements often bring higher costs and reduced scalability, so features such as redundancy or resiliency are sometimes traded off to manage the storage economics.

Persistent data container storage (volumes)

Containers often require persistent storage for using, capturing, or saving data beyond a specific container’s life cycle. Volume storage is selected to keep data for future use or to permit shared consumption by other containers or services. The many volume storage solutions available provide features such as high availability, scalable performance, shared filesystems, and reliable read/write filesystem protocols that are supported by Docker, OS vendors, and other storage vendors.

Registry “at rest” image storage (registry)

When images are stored at rest on disk for cataloging and e-discovery purposes, as is the case for Mirantis Secure Registry (MSR), key storage service metrics will likely revolve around scalability, costs, redundancy, and resiliency. In the case of Mirantis Secure Registry, attention will likely focus on lower cost per terabyte and higher scalability. Lower IOPS and perhaps fewer filesystem protocols are often accepted in exchange for lower costs.

Each storage tier has specific storage requirements to achieve expected service levels across the different stages of the Software Delivery Supply Chain of a Docker Enterprise platform. Speed, scalability, high availability, recoverability, and costs are just a few of the many storage metrics that can help determine the optimal storage choice for each phase where storage is consumed.

This document will explore each of these three distinct storage tiers — local image storage, volume storage, and registry storage — in further detail.

Image Storage

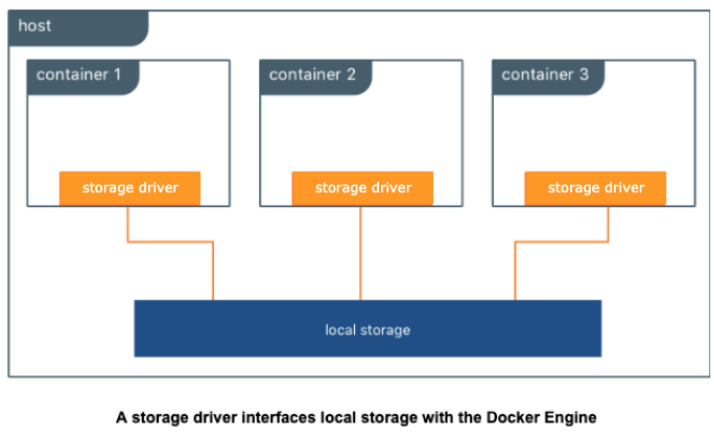

On each node of the Docker Enterprise cluster, storage drivers (previously known as graph drivers) interface local storage with the Mirantis Container Runtime. Performance is almost always considered the key metric for image storage. OS support and resiliency are typical requirements as well.

Storage drivers must be able to act as a local registry to store and retrieve copies of image layers that make up full images. Storage drivers also act as a caching mechanism to improve storage efficiency and download times for images within the local registry. They provide a Copy on Write (CoW) filesystem which is appended to a set of read-only image layers that constitute a running container. Within acceptable I/O time frames, storage drivers must also be able to reliably read into memory the sets of image layers that make up a running container on the Mirantis Container Runtime. Storage drivers are arguably the workhorse of all storage used within the Docker Enterprise platform. Choosing an incorrect storage driver, or misconfiguring the chosen one, can significantly impact expected service levels of the entire Software Delivery Supply Chain.

Storage drivers also supply a writable Copy on Write (CoW) layer on top of the read-only filesystem layers of an image when it is started as a running container. The CoW layer created at runtime is assigned a unique filesystem layer ID. This layer is ephemeral: it does not persist or stay with the original image from one run of that image to the next, because the container runtime does not automatically save the CoW layer as part of the original image. You can save a running container, together with its unique CoW layer, as a new image; the CoW layer then becomes an additional read-only layer on top of the original read-only image layers. While it is possible to save the CoW contents of a running container this way, doing so as a means to persist data isn’t scalable or practical. The CoW filesystem is most successfully used to iterate on a current image, adding the components or code that a service requires and producing a repeatable and reusable image.
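The layering behavior described above can be illustrated with a small model. This is only a sketch under simplifying assumptions: layers are plain dictionaries mapping paths to contents, and the `Container` class and its method names are hypothetical, not part of the Mirantis Container Runtime or any storage driver.

```python
from collections import ChainMap


class Container:
    """Toy model: read-only image layers plus one writable CoW layer."""

    def __init__(self, image_layers):
        # Image layers are copied and never modified; lowest layer first.
        self.image_layers = tuple(dict(layer) for layer in image_layers)
        # The CoW layer is created when the container starts and is ephemeral.
        self.cow_layer = {}

    def _view(self):
        # ChainMap searches its first mapping first, so the CoW layer
        # shadows the image layers and upper layers shadow lower ones.
        return ChainMap(self.cow_layer, *reversed(self.image_layers))

    def read(self, path):
        return self._view()[path]

    def write(self, path, data):
        # Writes land in the CoW layer only.
        self.cow_layer[path] = data

    def commit(self):
        """Return a new image whose top layer is a copy of the CoW layer."""
        return self.image_layers + (dict(self.cow_layer),)


base_image = [{"/etc/os-release": "distro"}, {"/app/server": "v1"}]

first = Container(base_image)
first.write("/app/server", "v2")
print(first.read("/app/server"))    # served from the CoW layer

second = Container(base_image)
print(second.read("/app/server"))   # the first container's write is not visible

new_image = first.commit()
print(len(new_image))               # original layers plus the committed one
```

A second container started from the same image never sees the first container's writes, which is why the CoW layer is a poor place to keep data that must outlive a container.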

Currently, the only supported storage drivers available are built into the Mirantis Container Runtime. Thus, the host OS has some influence on available, supported storage drivers. There is growing interest in experimental support using pluggable volumes, but none are currently recommended or available.

Selecting Storage Drivers

In previous versions, several factors influenced the selection of a storage driver and different Linux distributions had different preferred storage drivers. The promotion of the overlay2 storage driver as the default storage driver for all Linux distributions has made choosing a storage driver much easier. The majority of supported Linux distributions default to using the overlay2 storage driver for Mirantis Container Runtime.

It was chosen as the default storage driver due to its mainline kernel support, speed, capabilities, and ease of setup compared to alternate storage drivers.

For the most stable and hassle-free Docker experience use the overlay2 storage driver. When Docker is installed and started for the first time, a storage driver is selected based on your operating system and filesystem’s capabilities. Straying from this default may increase your chances of encountering bugs and other issues. Follow the configuration specified in the Compatibility Matrix. Alternate storage drivers may be available for your Linux distribution but their use may be deprecated in future releases in favor of standardizing on the overlay2 driver.
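If you want the driver choice to be explicit rather than auto-selected, it can be pinned with the `storage-driver` key of the daemon configuration file, which is `/etc/docker/daemon.json` on a default Linux installation. The following is a minimal sketch of merging that key into an existing configuration. The helper name `with_storage_driver` and the sample configuration are hypothetical, and the sketch only produces the text rather than writing the file or restarting the daemon.

```python
import json


def with_storage_driver(existing_config_text, driver="overlay2"):
    """Return daemon.json text with the storage-driver key set."""
    # An empty or missing file is treated as an empty configuration.
    config = json.loads(existing_config_text) if existing_config_text.strip() else {}
    config["storage-driver"] = driver
    return json.dumps(config, indent=2, sort_keys=True)


# Hypothetical existing configuration; a real file may hold other keys.
current = '{"log-driver": "json-file"}'
updated = with_storage_driver(current)
print(updated)
```

Changing the storage driver on a host that already holds images and containers makes them inaccessible until the previous driver is restored, so a change like this is best made before workloads are deployed. After a daemon restart, `docker info` reports the driver in use.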

Two OverlayFS drivers are available: overlay and overlay2. The overlay storage driver has been deprecated in favor of overlay2. The overlay driver has known, documented issues with inode exhaustion and commit performance, and overlay2 does not suffer from the same inode exhaustion issues. To use overlay2, you need version 4.0 or higher of the Linux kernel, or RHEL or CentOS using kernel version 3.10.0-514 or above. For more details on how Docker utilizes storage drivers, go to the storage drivers documentation page in the additional resources section.
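The kernel requirements above can be turned into a quick pre-flight check. The following is a minimal sketch, not an official compatibility test: the function name `kernel_supports_overlay2` is hypothetical, and it assumes that any `3.10.0-<build>` release string belongs to a RHEL or CentOS kernel, which the string alone cannot prove. The Compatibility Matrix remains the authoritative source.

```python
import re


def kernel_supports_overlay2(release):
    """Check a kernel release string against the minimums stated above.

    Returns True for kernels 4.0 or newer, and for 3.10.0 kernels
    whose build number is 514 or higher.
    """
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?(?:-(\d+))?", release)
    if not match:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    # Missing patch or build components are treated as zero.
    major, minor, patch, build = (int(g) if g else 0 for g in match.groups())
    if major >= 4:
        return True
    # Assumption: a 3.10.0-<build> release is a RHEL or CentOS kernel.
    return (major, minor, patch) == (3, 10, 0) and build >= 514


for sample in ("5.15.0-91-generic", "3.10.0-1160.el7.x86_64", "3.10.0-327.el7.x86_64"):
    print(sample, kernel_supports_overlay2(sample))
```

On a Linux host, the release string can be obtained with `platform.release()` from the Python standard library or with `uname -r`.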

Volume Storage

Volume storage is an extremely versatile storage solution that can be used to do many things. Generally, volume storage provides ways for an application or user to store data generated by a running container. That data persists beyond the life or boundaries of the container that created it. This storage use case is commonly referred to as persistent storage. Persistent storage is an extremely important use case, especially for things like databases, image files, file and folder sharing, and big data collection activities. Volume storage can also be used to do other interesting things such as provide easy access to secrets (for example, backed by Keywhiz) or provide configuration data to a container from a key/value store. No matter where the data comes from, the volume driver plugin translates it from the backend into a filesystem that can be accessed by the normal tools meant to interact with a filesystem.

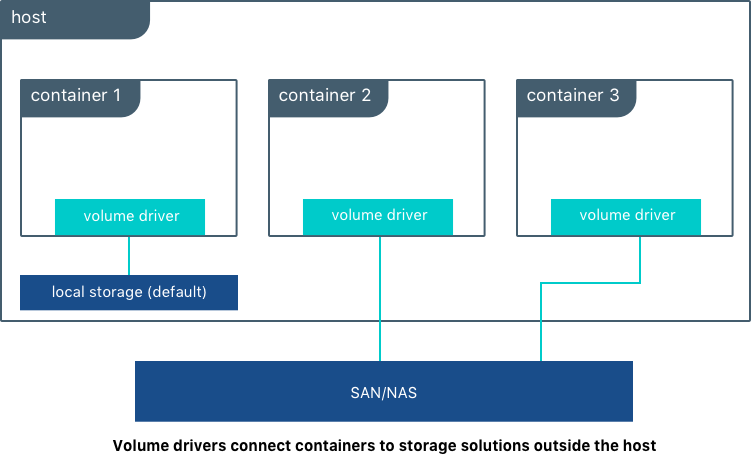
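As a concrete illustration of the persistent storage use case, the sketch below builds the two Docker CLI invocations involved: creating a named volume and starting a container that mounts it. It only constructs and prints the commands and does not run them. The helper functions, the volume name `app-data`, and the image name `example/app:1.0` are hypothetical, and `local` is the built-in default driver, so a plugin-provided driver would be named in its place.

```python
import shlex


def volume_create_cmd(name, driver="local", opts=None):
    """Build the argv for `docker volume create`."""
    cmd = ["docker", "volume", "create", "--driver", driver]
    # Driver-specific options are passed as repeated --opt key=value pairs.
    for key, value in sorted((opts or {}).items()):
        cmd += ["--opt", f"{key}={value}"]
    return cmd + [name]


def run_with_volume_cmd(image, volume, target):
    """Build the argv for a detached container that mounts the named volume."""
    mount = f"type=volume,source={volume},target={target}"
    return ["docker", "run", "--detach", "--mount", mount, image]


create = volume_create_cmd("app-data")
run = run_with_volume_cmd("example/app:1.0", "app-data", "/data")
print(shlex.join(create))
print(shlex.join(run))
```

Because the volume is created and named independently of any container, removing the container leaves the data in place for the next container that mounts `app-data`.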

Many enterprises consume storage from various storage systems such as SAN and NAS arrays. These solutions often provide increased performance and availability as well as advanced storage features such as thin provisioning, replication, deduplication, encryption, and compression. They usually offer storage monitoring and management as well.

Volume drivers are used to connect storage solutions to the Docker Enterprise platform. You can use existing drivers or write your own to allow the underlying storage to interface with the APIs of the Docker Enterprise platform. A variety of volume driver solutions exist and can be plugged into and consumed within the Docker Enterprise platform. There are volume storage projects from the open source community, and there are commercially supported volume drivers available from storage vendors. Many volume driver plugins available today are software-defined and provide feature sets that are agnostic to the underlying physical storage.

For a list of volume plugins, go to the volume plugins documentation page in the additional resources section.

Docker references a list of tested and certified volume storage plugins from partners on Docker Hub. Among this list, we can highlight:

- Trident from NetApp

- HPE Nimble

- Nutanix DVP

- Pure Storage

- vSphere Storage

- DataCore SDS

- NexentaEdge

- BlockBridge

For the full list of Docker Certified Storage plugins, go to the link in the additional resources section.

Docker Kubernetes Service also brings the best of the Kubernetes world to provide containers with persistent storage:

- Static and Dynamic Persistent Volume provisioning

- Block Level-access with iSCSI

- FlexVolume and CSI (Container Storage Interface) drivers

- File and block storage from various cloud providers, including Amazon EBS and EFS, and Azure Disks and File Storage.

- NFS shares

For a list of storage options available through Docker Kubernetes Service, go to the Kubernetes storage options documentation page in the additional resources section.
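To make dynamic provisioning from the list above more concrete, the sketch below builds a Kubernetes PersistentVolumeClaim manifest and prints it as JSON, a format that `kubectl apply -f` accepts alongside YAML. The helper name, the claim name `app-data`, and the StorageClass name `fast-ssd` are hypothetical, and which StorageClasses exist depends entirely on how the cluster is configured.

```python
import json


def persistent_volume_claim(name, size, storage_class=None, access_mode="ReadWriteOnce"):
    """Build a PersistentVolumeClaim manifest as a plain dictionary."""
    spec = {
        "accessModes": [access_mode],
        "resources": {"requests": {"storage": size}},
    }
    if storage_class is not None:
        # Naming a StorageClass asks its provisioner to create the volume.
        spec["storageClassName"] = storage_class
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": spec,
    }


claim = persistent_volume_claim("app-data", "10Gi", storage_class="fast-ssd")
print(json.dumps(claim, indent=2))
```

A pod then refers to the claim by name, which keeps the workload definition independent of the storage backend that satisfies it.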

Registry Storage

Registry storage is the backing storage for a running image registry instance such as Mirantis Secure Registry or Docker Hub. Mirantis Secure Registry is an on-premises image registry service within the Docker Enterprise platform. Docker Hub is the public SaaS image registry provided by Docker. Registry storage, regardless of location, does not typically require high I/O performance, but it almost always requires resiliency, scalability, and low-cost economics to meet expected SLAs. The public Docker Hub image registry service is a specific example where these metric choices are clearly identifiable. For Docker Hub, faster push and pull speeds are secondary to metrics such as scalability, resiliency, and economics. Combined, these three metrics enable Docker to efficiently manage and support the most popular Docker container registry in the industry.

Mirantis Secure Registry supports several registry storage backends, such as NFSv4, NFSv3, Amazon S3, S3-compliant alternatives, Azure Storage (Blob), Google Cloud Storage, OpenStack Swift, and the local filesystem. For an up-to-date list of backing storage options for Mirantis Secure Registry, refer to the Compatibility Matrix. These storage options can provide the same registry SLAs required by large-scale operations like Docker Hub. Local storage is the “out of the box” default for the Docker Enterprise platform. Ultimately, however, local filesystem storage cannot match the service levels that object storage provides, due to filesystem restrictions such as inode limits or filesystem protocol restrictions.

Docker images are immutable, read-only, and carry attached metadata. These characteristics map very well to the object storage features offered by S3, Azure, and similar services. Object storage also provides many additional management features that can enhance the overall image storage experience beyond what Mirantis Secure Registry provides for managing your application images. For example, object storage can provide additional service catalog items such as multi-datacenter or multi-region image replication to support Disaster Recovery and Continuous Availability designs, built-in native redundancy for enhanced image availability, backup and restore solutions, and common API capabilities. It can also provide encryption at rest and client-side encryption. Because of these additional features and advantages, it’s recommended that Mirantis Secure Registry be configured to use an object storage backing solution for highly available installations. Object storage also improves image pull performance because Mirantis Secure Registry serves the contents directly from the object store by default. If HA is not required, a single local filesystem is prepared as the default backing storage configuration for Mirantis Secure Registry, but this configuration can be changed to use a backing storage solution of your choice.
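One reason immutable image content suits object storage is that image layers are addressed by a digest of their content. The sketch below models that idea with an in-memory dictionary standing in for a bucket. It is a toy illustration only: the `BlobStore` class is hypothetical and is not how Mirantis Secure Registry or any object storage service is implemented.

```python
import hashlib


class BlobStore:
    """Toy in-memory stand-in for an object storage bucket."""

    def __init__(self):
        self._objects = {}

    def put(self, data):
        # The key is derived from the content, so identical layers are
        # stored once and a stored object never needs to change.
        digest = "sha256:" + hashlib.sha256(data).hexdigest()
        self._objects.setdefault(digest, bytes(data))
        return digest

    def get(self, digest):
        data = self._objects[digest]
        # Re-hashing on read detects corruption in the backend.
        if "sha256:" + hashlib.sha256(data).hexdigest() != digest:
            raise ValueError(f"content does not match {digest}")
        return data

    def __len__(self):
        return len(self._objects)


store = BlobStore()
first = store.put(b"layer contents")
second = store.put(b"layer contents")   # same layer pushed by another image
print(first == second, len(store))
```

Because a key never maps to different content over time, such objects can be replicated across regions or cached without coordination, which is the property the replication and redundancy features mentioned above rely on.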

Often NFS or NFS-like file share solutions are used as an alternative backing storage solution to Object Storage. These file share based solutions can also fulfill the backing storage requirement for High Availability of Mirantis Secure Registry. Because many enterprises are very familiar with NFS or similar shared filesystem storage solutions, there are natural tendencies to use shared filesystems over object storage solutions. There are disadvantages of using NFS or comparable shared filesystems as the backing storage for your Docker images:

- NFS makes many guarantees that erode performance through unnecessary synchronization.

- NFS essentially mimics a filesystem, often masking low level errors because it has no way to mitigate them.

- NFSv3 has no native security model.

Plugin storage options are not currently supported for registry storage, but there are a number of on-premises, S3 compliant backing storage options that are also a good fit for Mirantis Secure Registry. Many partners leverage built-in S3-compliant API compatibility support as a way to have their storage service supported without having to write new code.

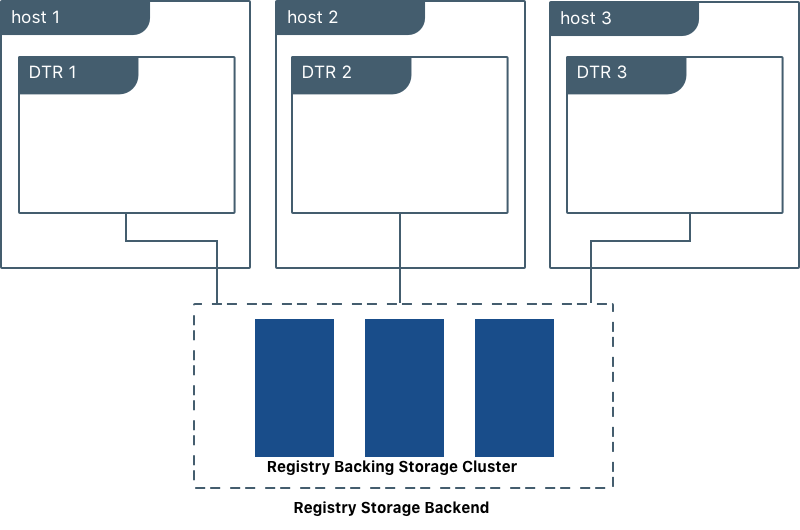

When planning a production-grade installation of Mirantis Secure Registry on-premises, it’s best to configure the image registry service as a highly available and redundant service, which makes the ability to change the backing storage an important feature of Mirantis Secure Registry. All highly available Mirantis Secure Registry configurations require a backing storage solution that can support a clustered set of containers issuing parallel, asynchronous write requests to the physical storage itself. Mirantis Secure Registry does not assume, manage, or control any write-locking mechanisms that take place for image or filesystem data being written to the underlying physical storage. Therefore, writes must be managed independently by the storage protocol of the backing storage itself.

Conclusion

When choosing storage solutions for the Docker Enterprise Platform, consider the following:

- Typically, containers are described and categorized to run as one of two separate, distinct states — stateful and stateless. A stateful service holds requirements for capturing and storing persistent data during a container runtime. For example, a database that presents database tables on physical storage within a container for the purpose of writing or updating data is considered a stateful service. Stateless containers do not possess the same requirements for data persistence within a container runtime. Any data captured or generated during runtime does not exist or does not have to be recorded. When planning for image builds, it is recommended that you determine the state of each application or service within a container. Then, determine what the storage requirements are as well as the consumption possibilities for each container within the application stack. Also determine what storage options are available or have to be purchased to provide the best results.

- Consider implementing a service catalog that captures all labels, filters, and constraints assigned to your storage hosts. Capturing all of these definitions will help eliminate overlap or isolate resource gaps in your Docker Platform environment and allow you to model and plan out the most efficient and informed orchestration methods available across the entire Software Delivery Supply Chain.

- Regardless of container state, every container possesses a writable Copy on Write (CoW) filesystem that is presented by each Docker host. As previously covered, storage drivers are not designed to accommodate data persistence and sharing, thus misinterpretation and misuse of this space as a means to persist and reuse data can lead to unexpected results and data loss. Strongly consider early in your planning stages that applications should not use this writeable space as a means to persist data beyond the container life cycle.
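The service catalog suggested in the list above can start as a simple mapping from nodes to their storage labels. The sketch below is one possible shape for it, with hypothetical node names, label keys, and helper functions. It finds the nodes that satisfy a set of label constraints and reports constraints that no node can satisfy, which is the kind of resource gap the catalog is meant to expose.

```python
# Hypothetical catalog: node name -> labels assigned to that node.
catalog = {
    "worker-1": {"storage": "ssd", "zone": "a"},
    "worker-2": {"storage": "nfs", "zone": "a"},
    "worker-3": {"storage": "ssd", "zone": "b"},
}


def nodes_matching(catalog, constraints):
    """Return the sorted names of nodes whose labels satisfy every constraint."""
    return sorted(
        node
        for node, labels in catalog.items()
        if all(labels.get(key) == value for key, value in constraints.items())
    )


def resource_gaps(catalog, service_constraints):
    """Return the services whose constraints match no node in the catalog."""
    return sorted(
        service
        for service, constraints in service_constraints.items()
        if not nodes_matching(catalog, constraints)
    )


# Hypothetical services and the storage labels they expect.
services = {
    "database": {"storage": "ssd", "zone": "b"},
    "archive": {"storage": "object"},
}

print(nodes_matching(catalog, {"storage": "ssd"}))
print(resource_gaps(catalog, services))
```

In a Swarm deployment, a matching label would typically be expressed as a placement constraint of the form `node.labels.storage==ssd` when the service is created.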