Docker Enterprise products v2.1 documentation

Docker Enterprise¶

Docker Enterprise¶

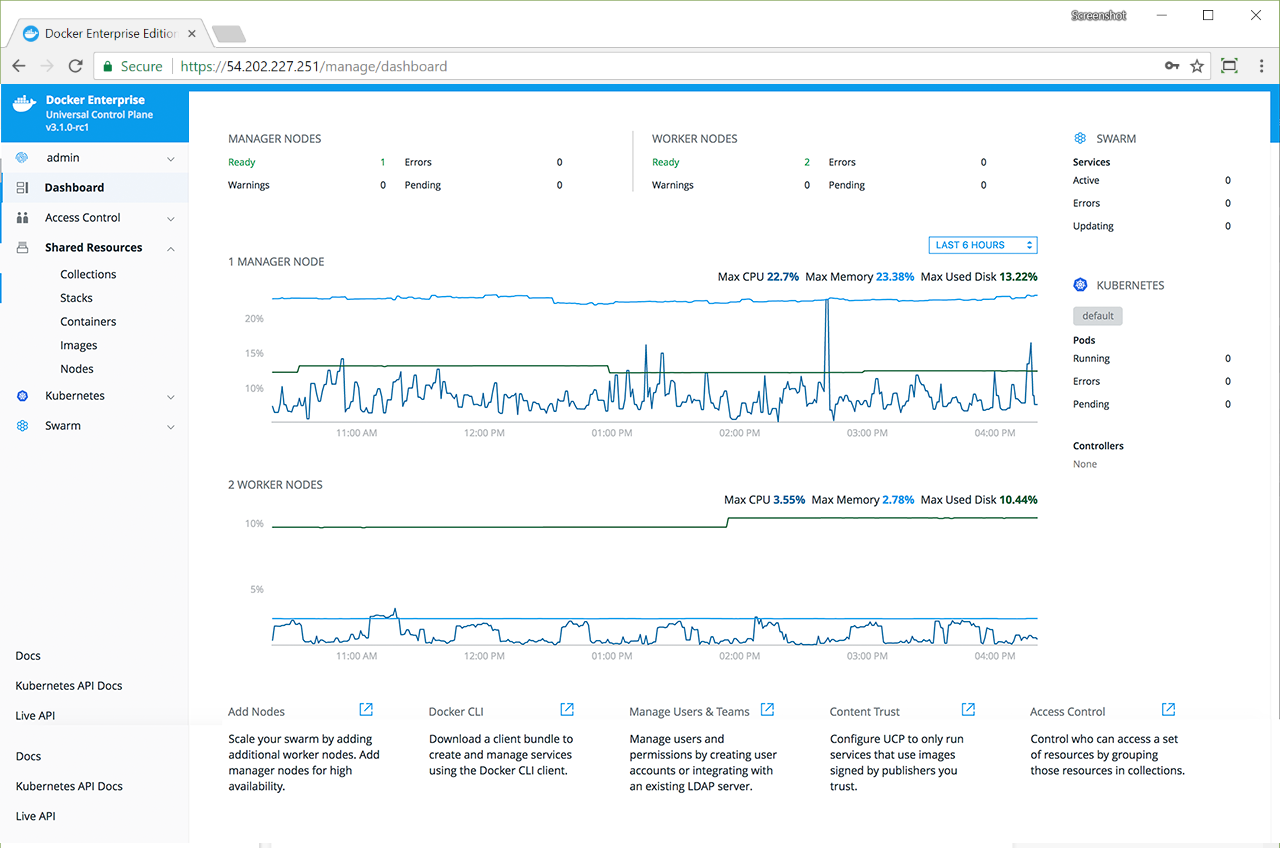

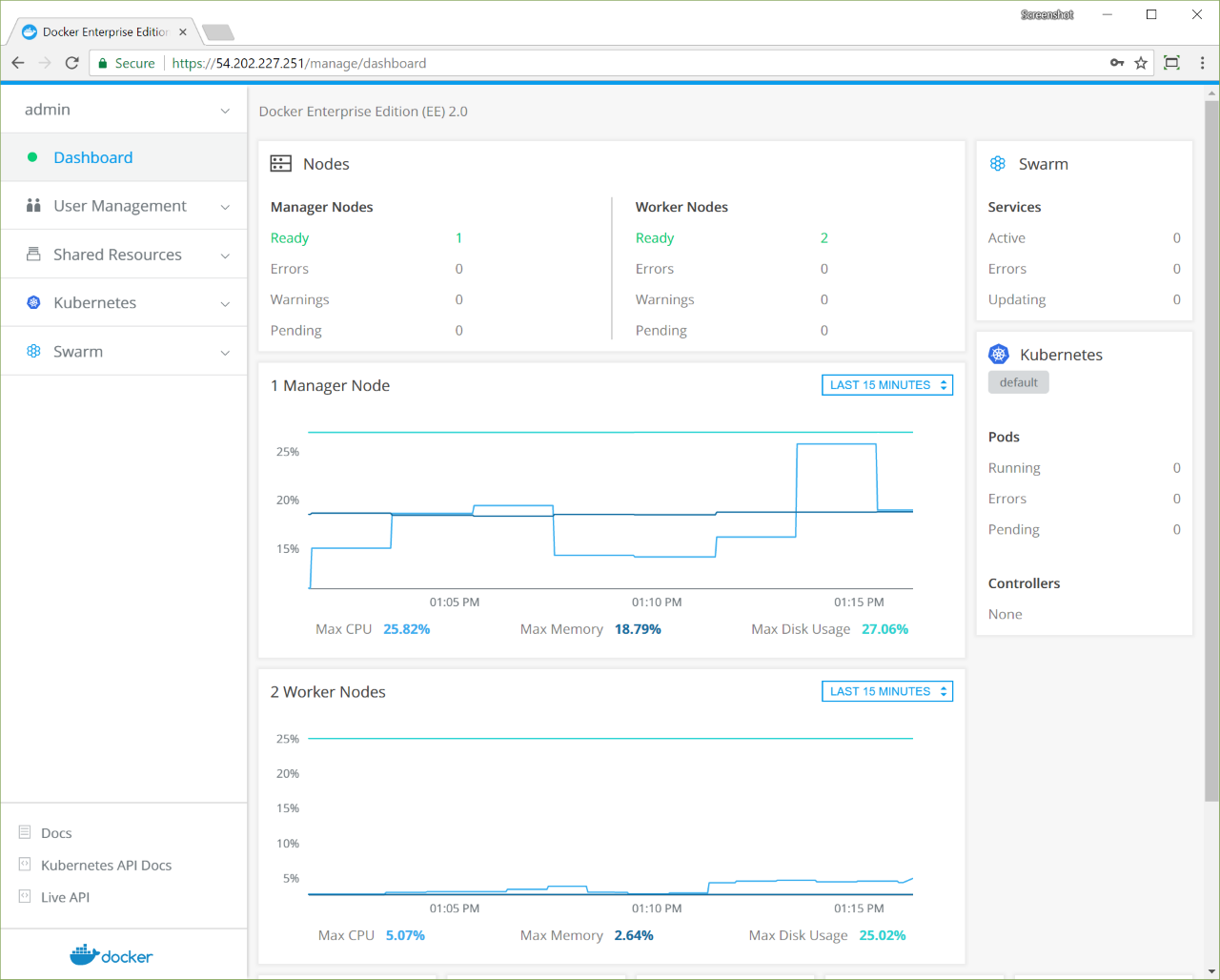

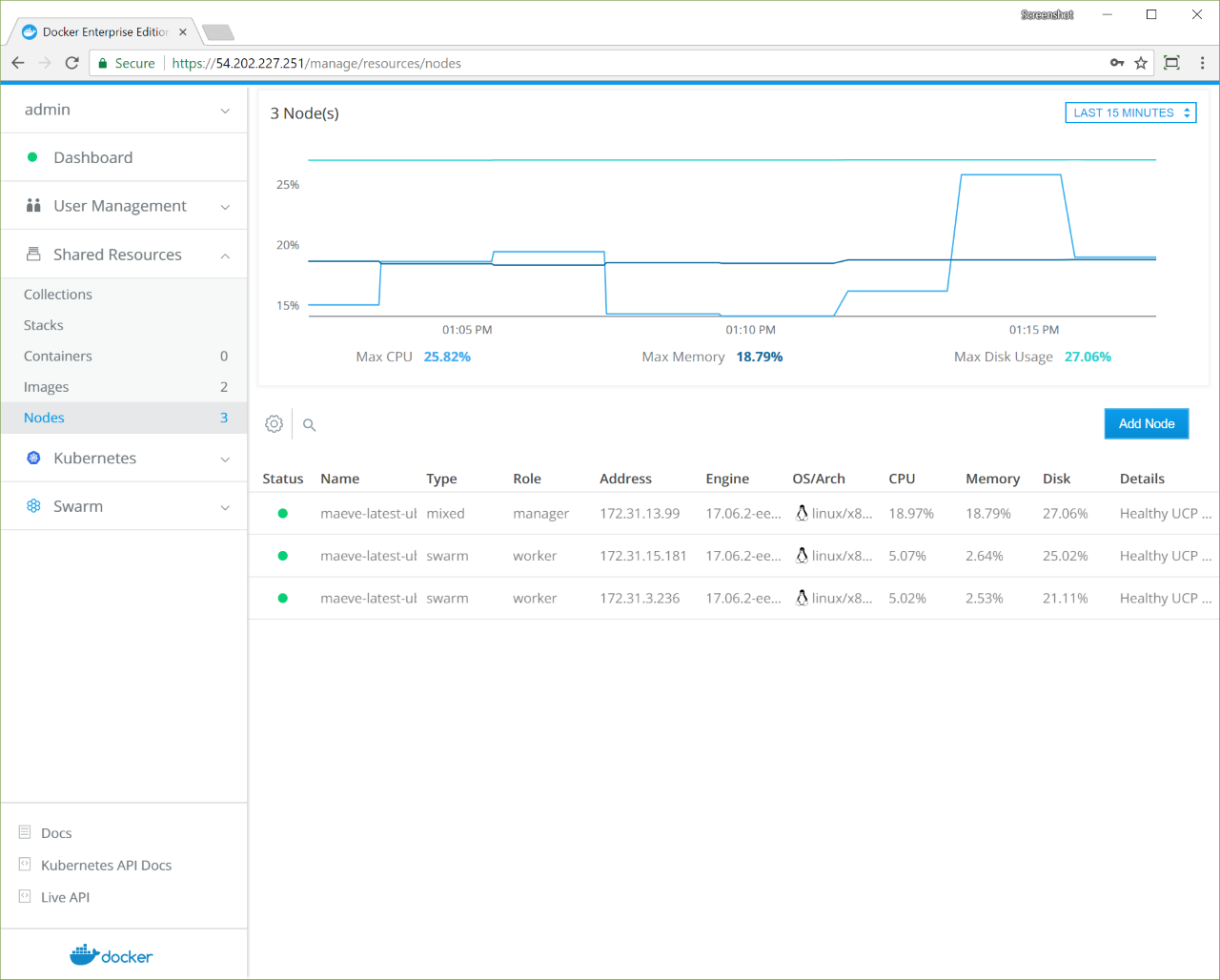

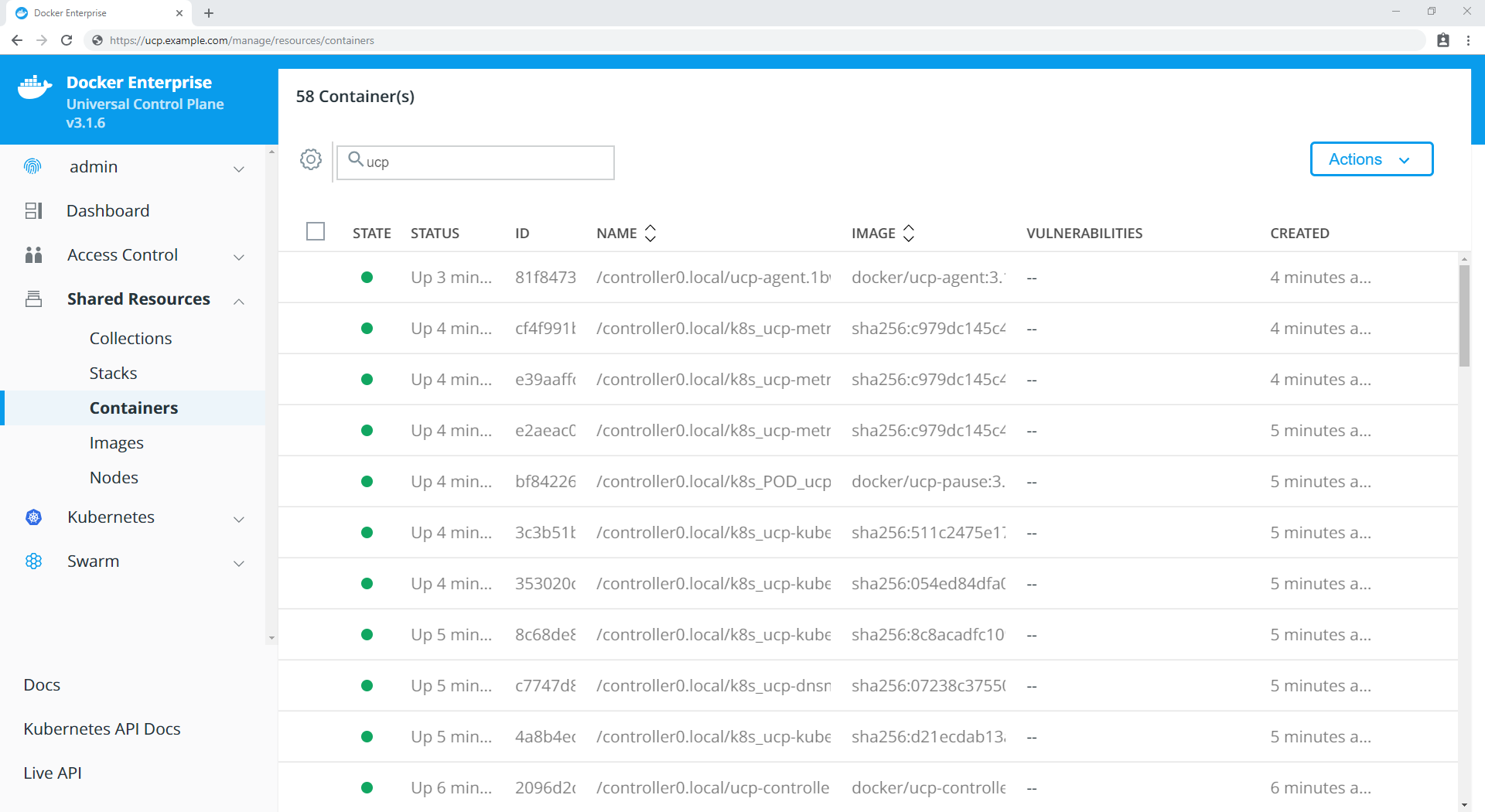

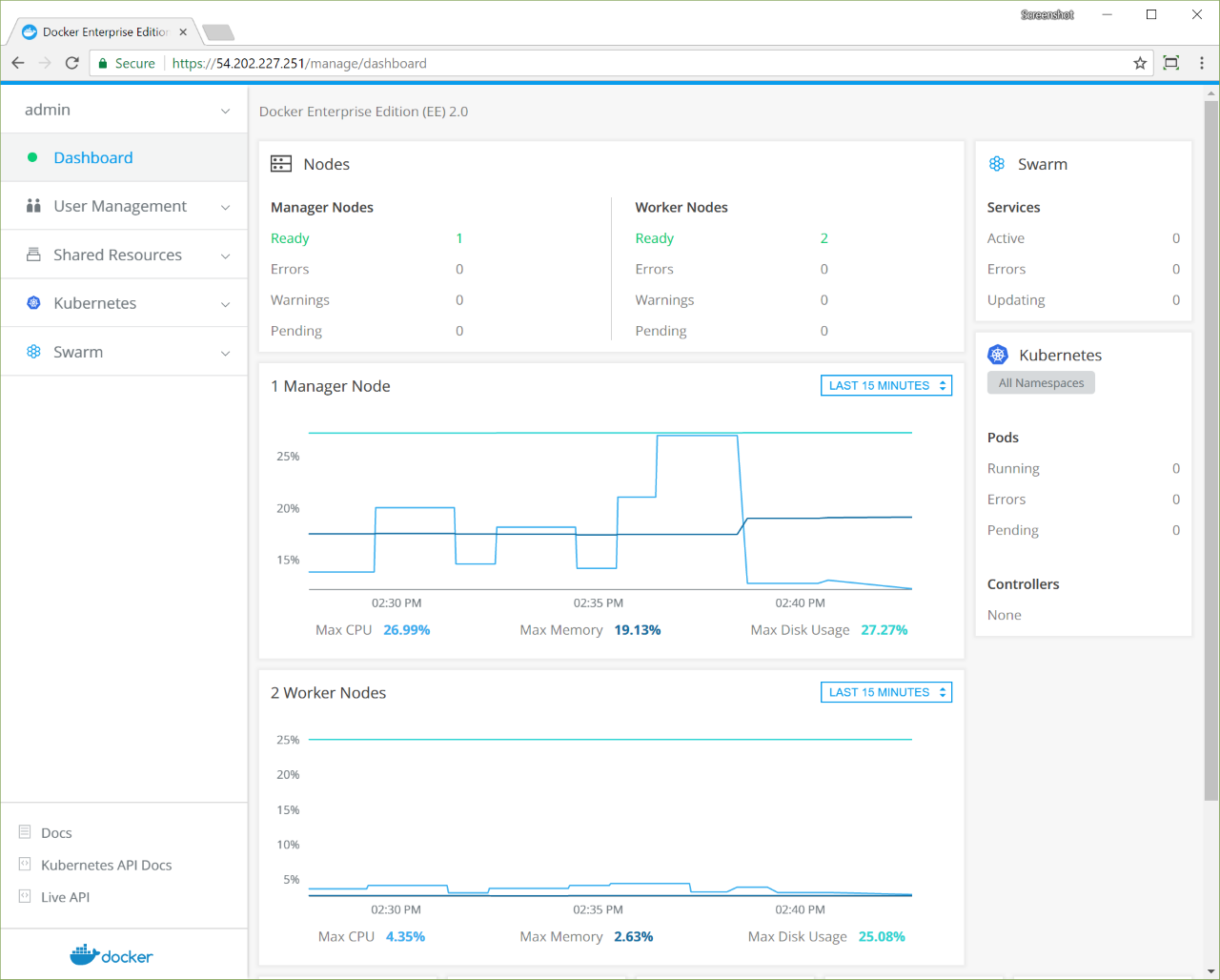

Docker Enterprise is a standards-based container platform for development and delivery of modern applications. Docker Enterprise is designed for application developers and IT teams who build, share, and run business-critical applications at scale in production. Docker Enterprise provides a consistent and secure end-to-end application pipeline, choice of tools and languages, and globally consistent Kubernetes environments that run in any cloud.

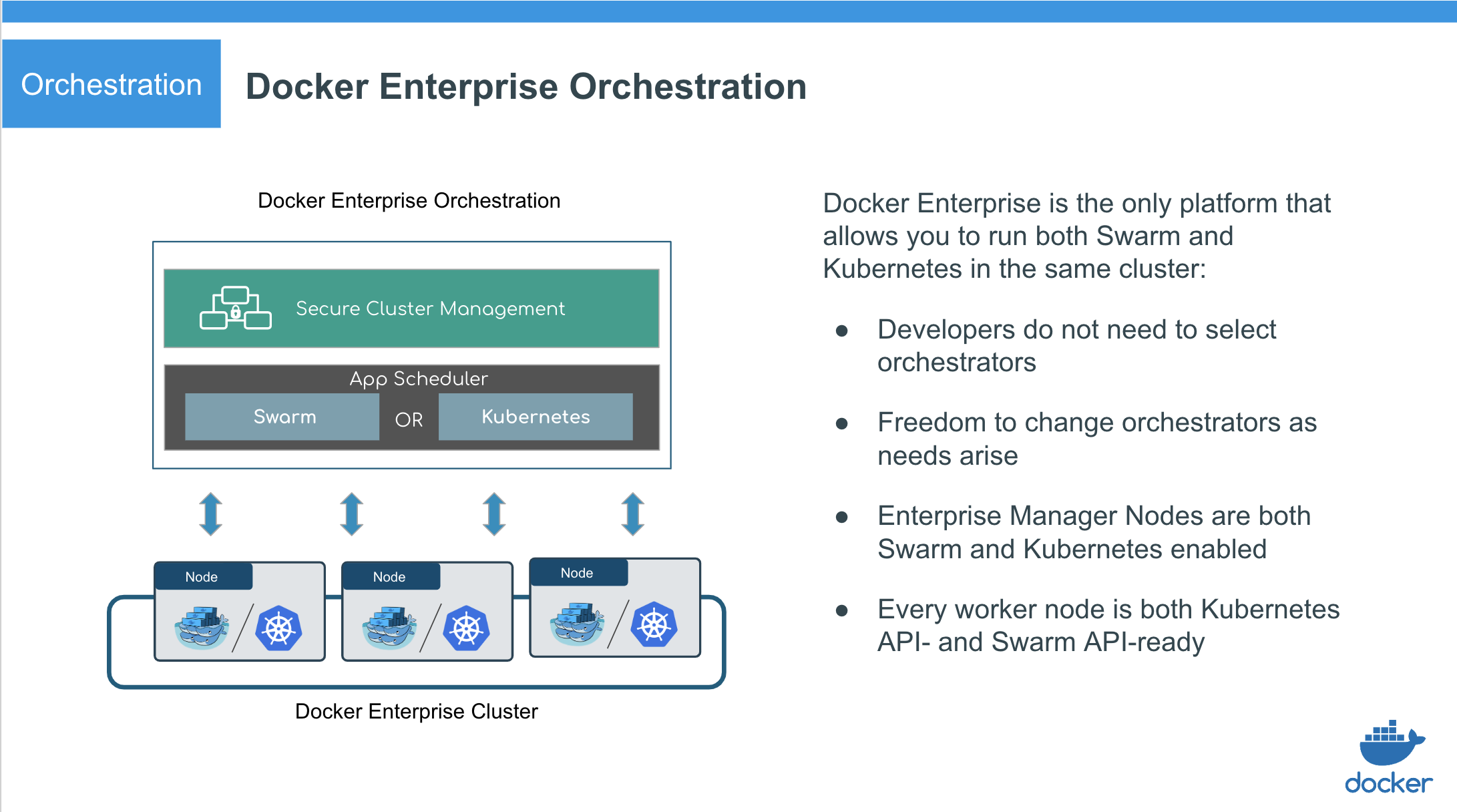

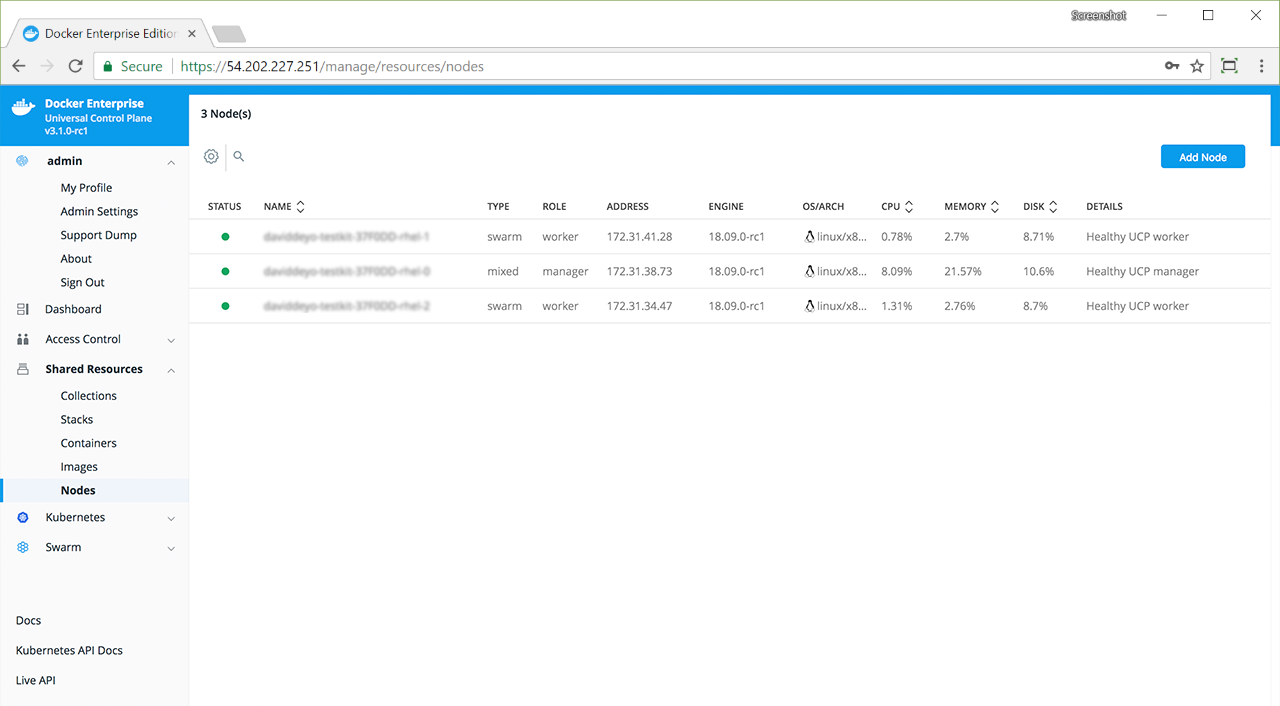

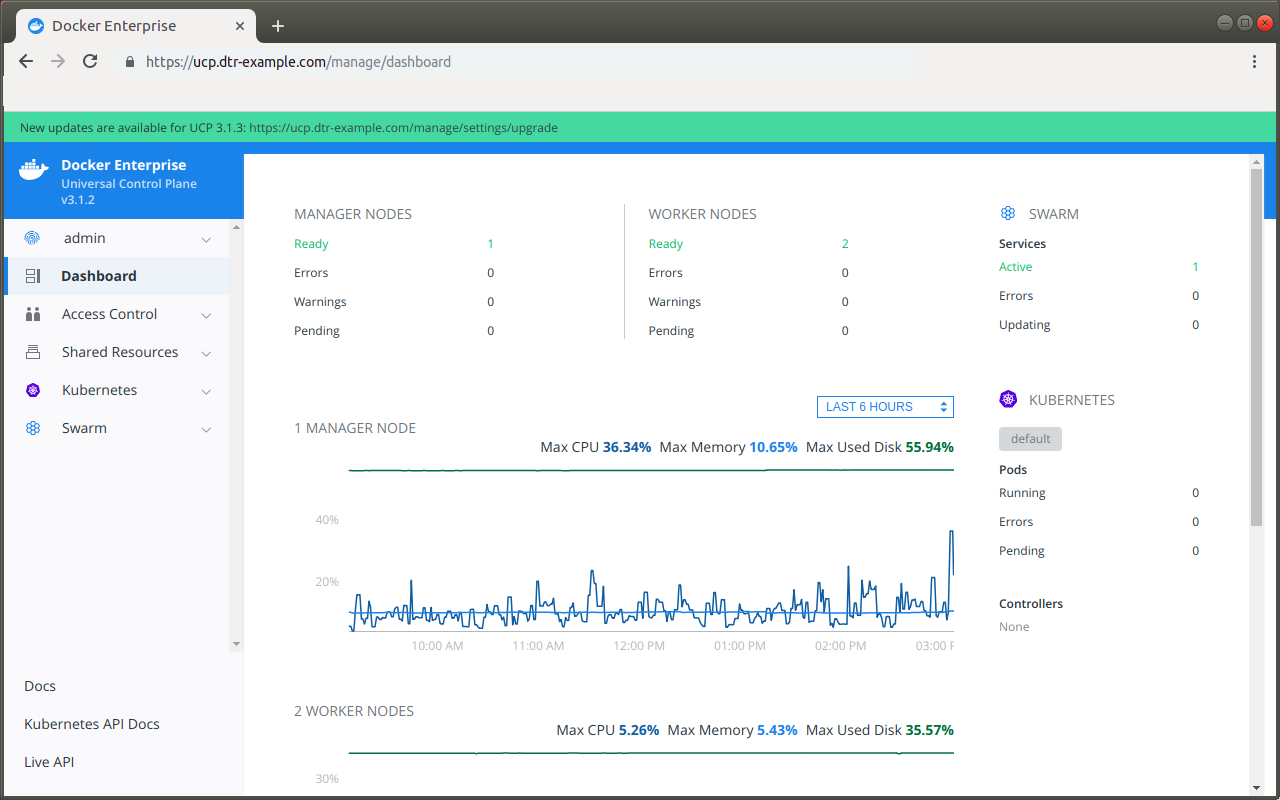

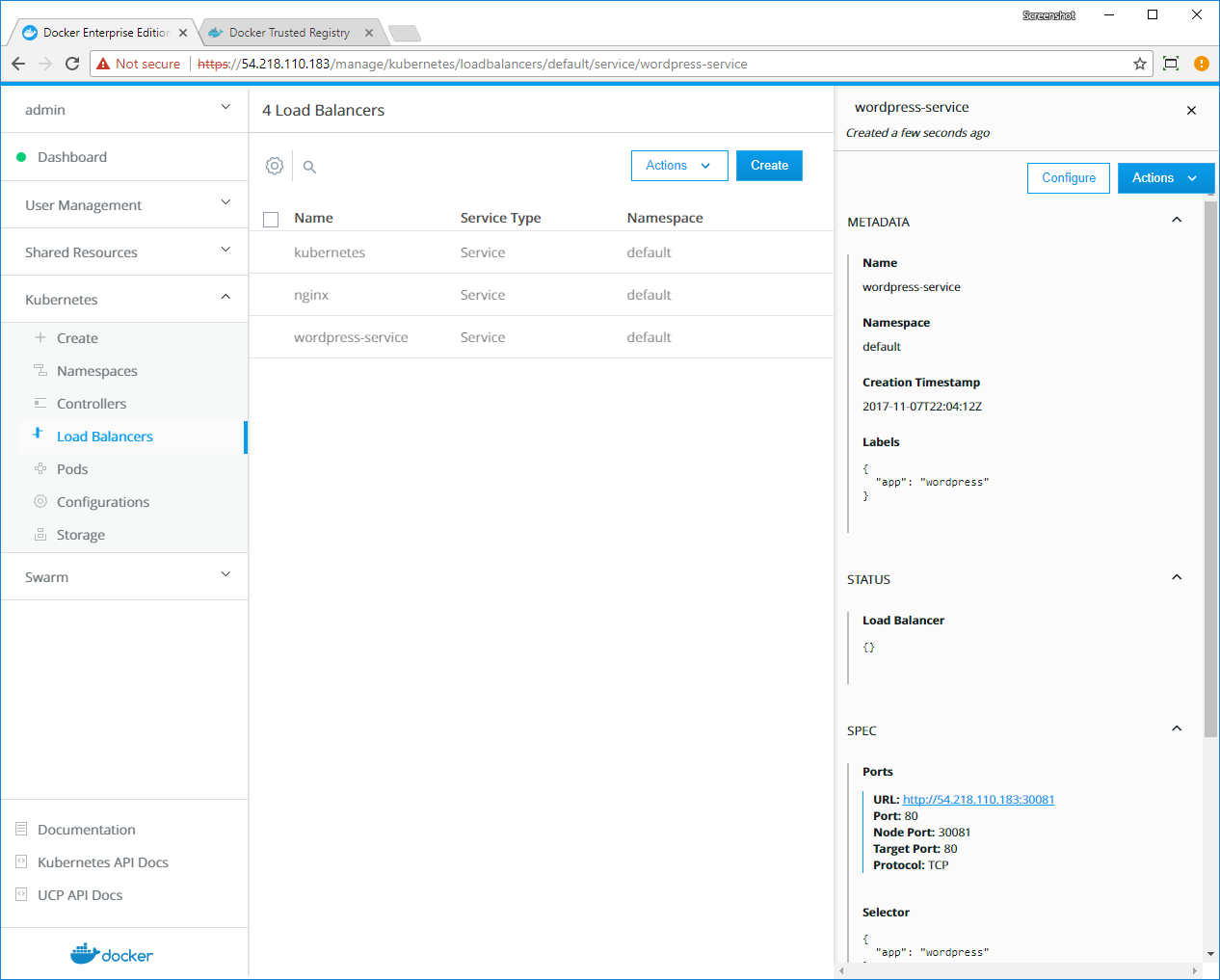

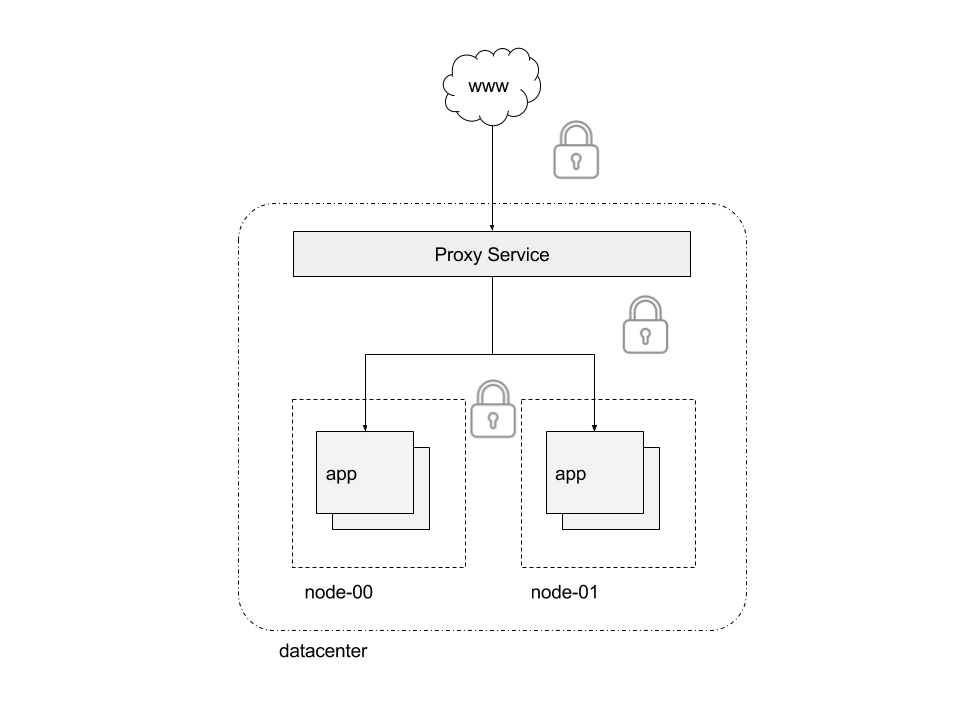

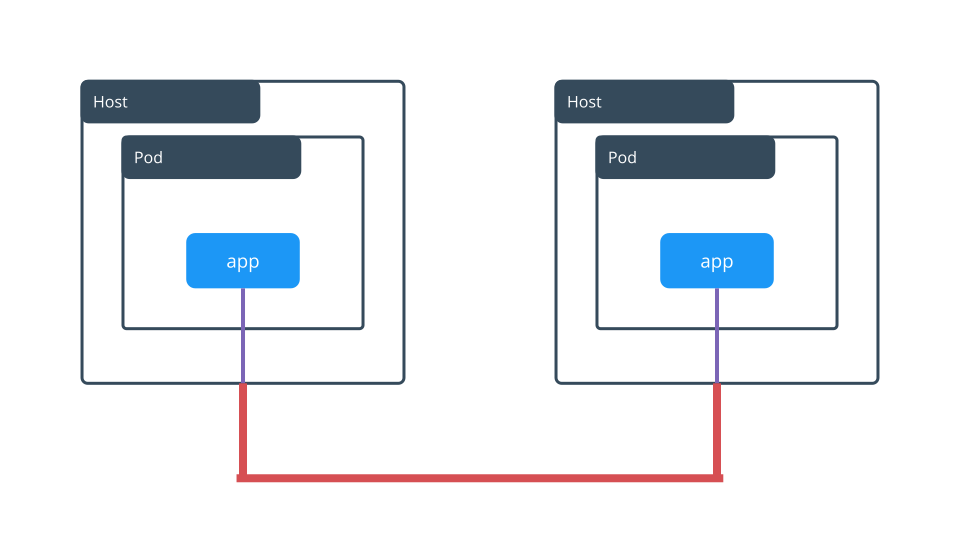

Docker Enterprise enables deploying highly available workloads using either the Docker Kubernetes Service or Docker Swarm. You can join thousands of physical or virtual machines together to create a cluster, allowing you to deploy your applications at scale and to manage your clusters from a centralized place.

Docker Enterprise automates many of the tasks that orchestration requires, like provisioning pods, containers, and cluster resources. Self-healing components ensure that Docker Enterprise clusters remain highly available.

Docker Kubernetes service¶

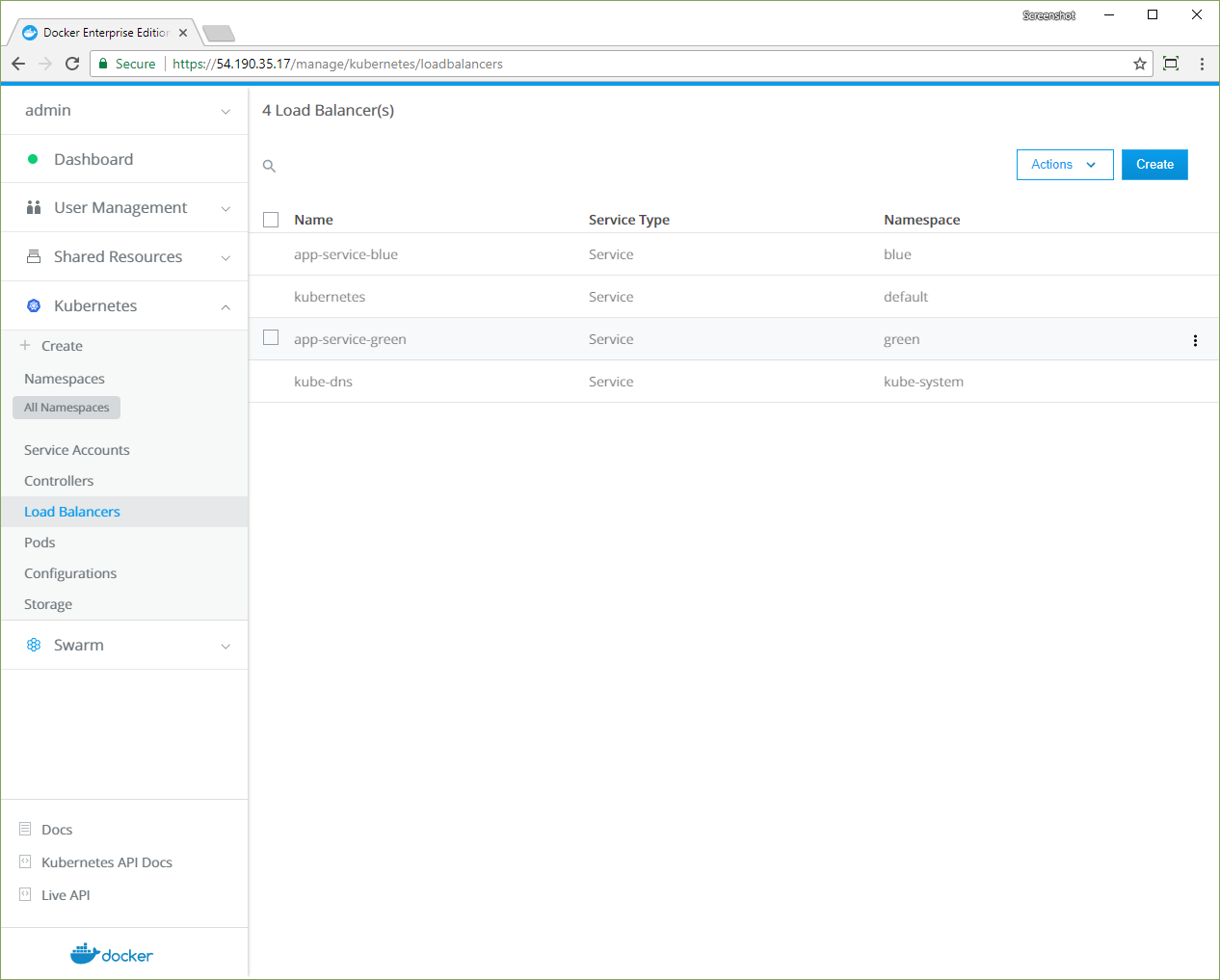

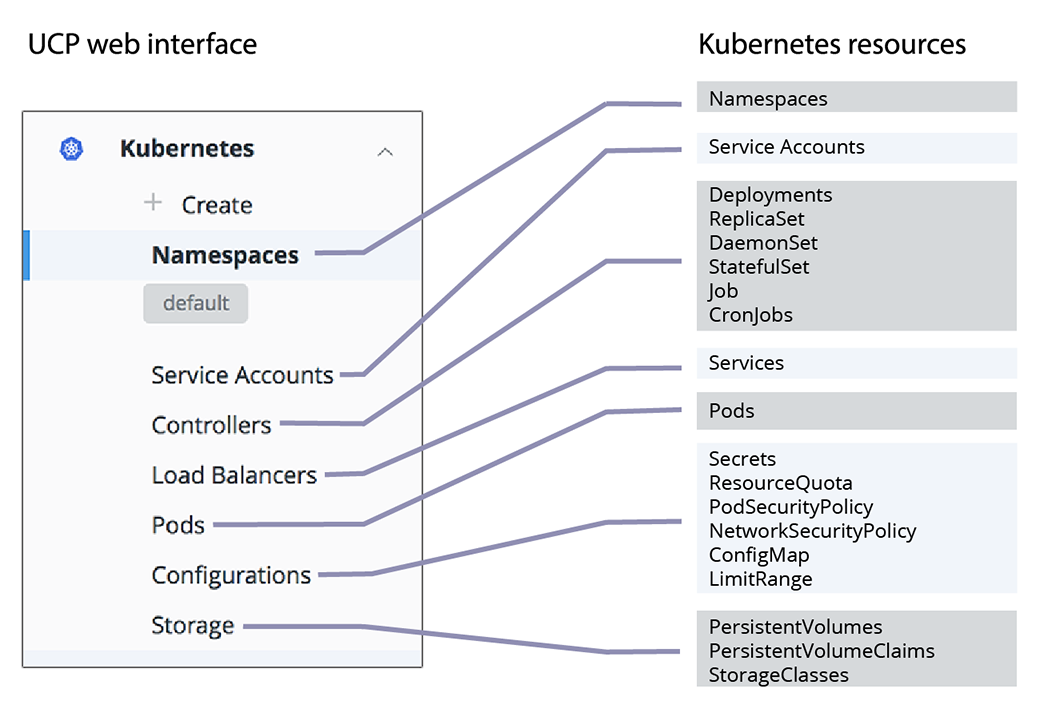

The Docker Kubernetes Service fully supports all Docker Enterprise features, including role-based access control, LDAP/AD integration, image scanning and signing enforcement policies, and security policies.

Docker Kubernetes Services features include:

- Kubernetes orchestration full feature set

- CNCF Certified Kubernetes conformance

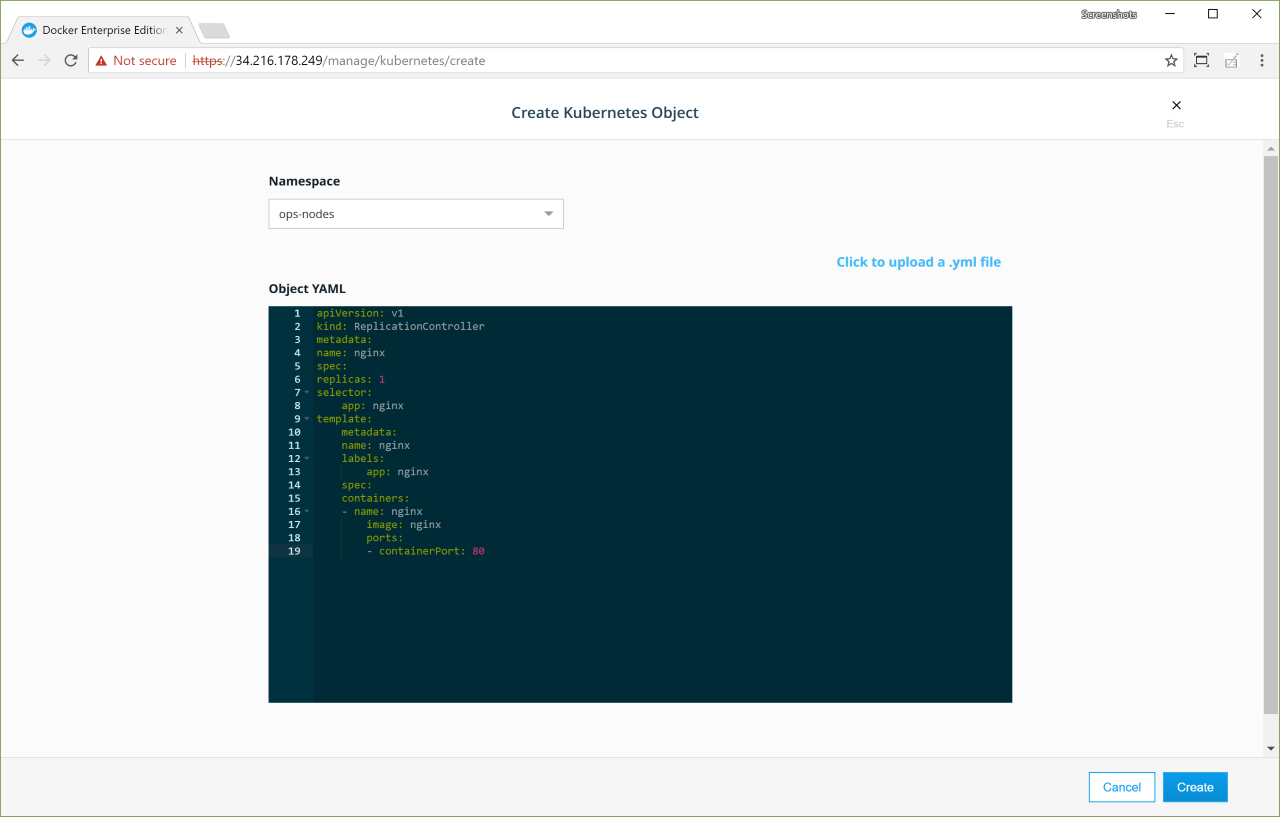

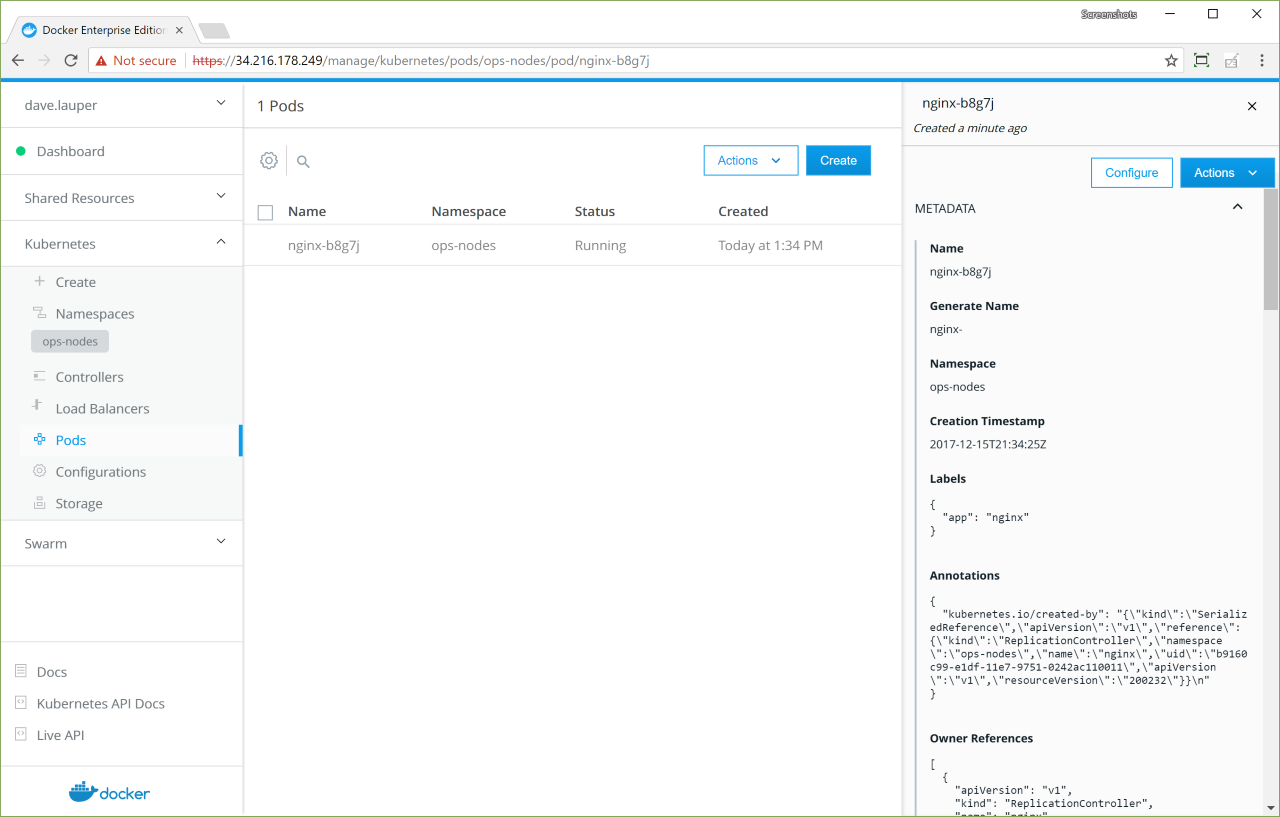

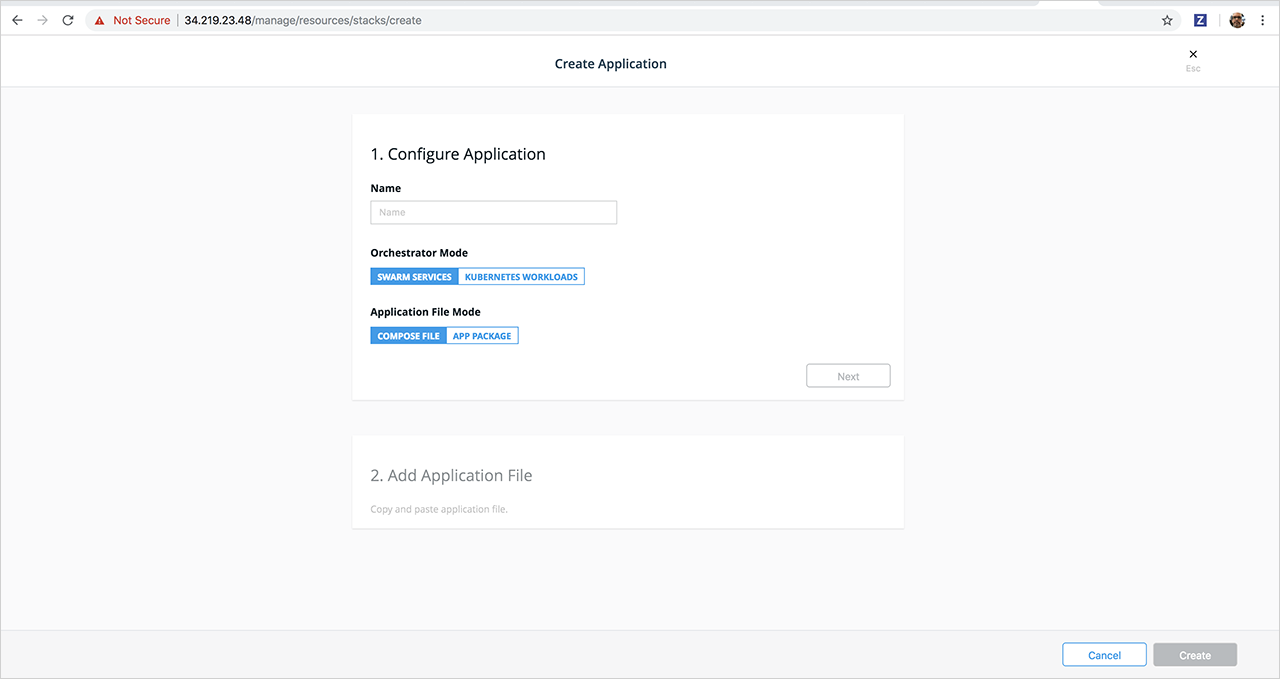

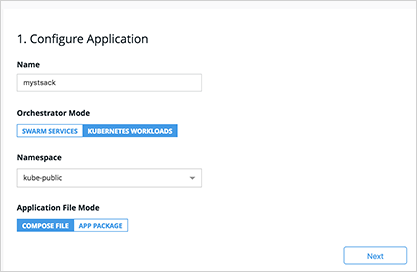

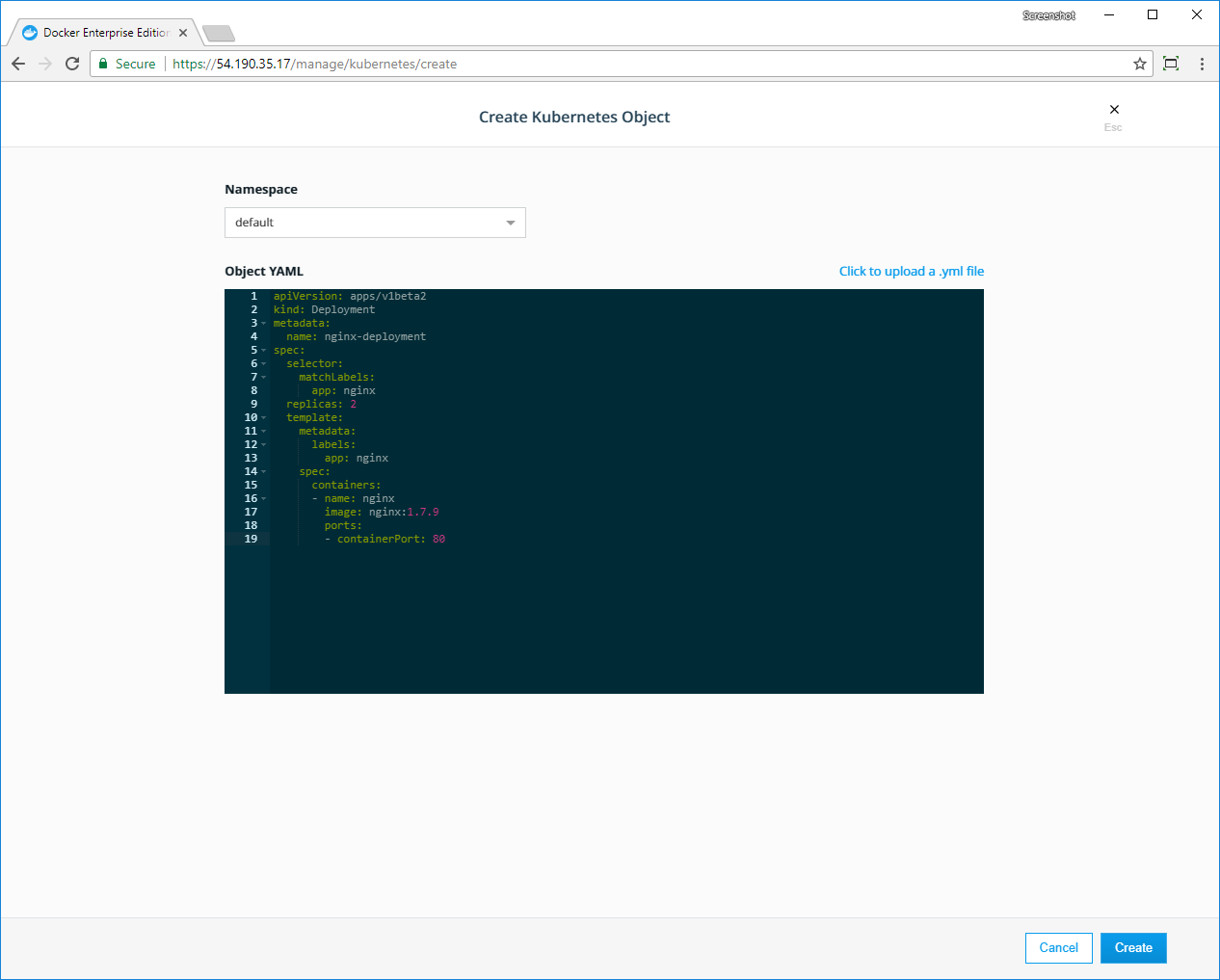

- Kubernetes app deployment via UCP web UI or CLI (kubectl)

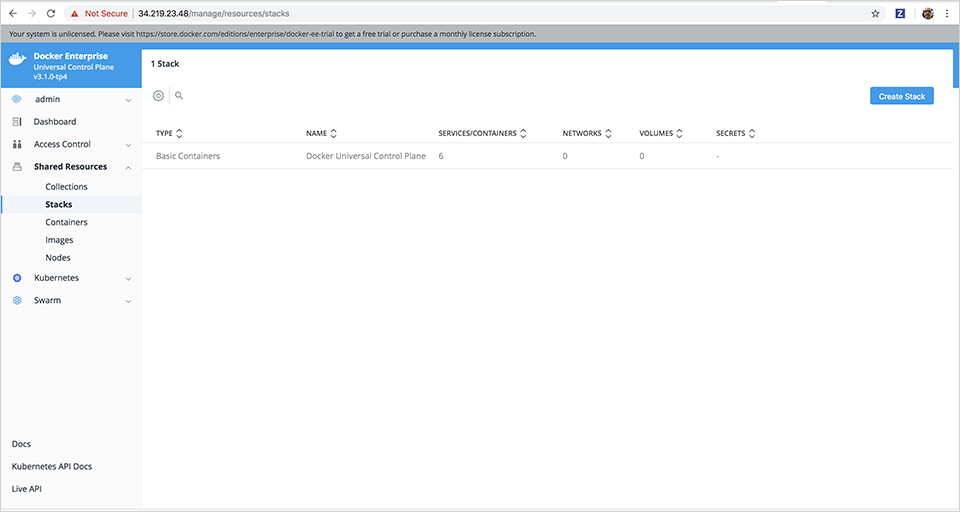

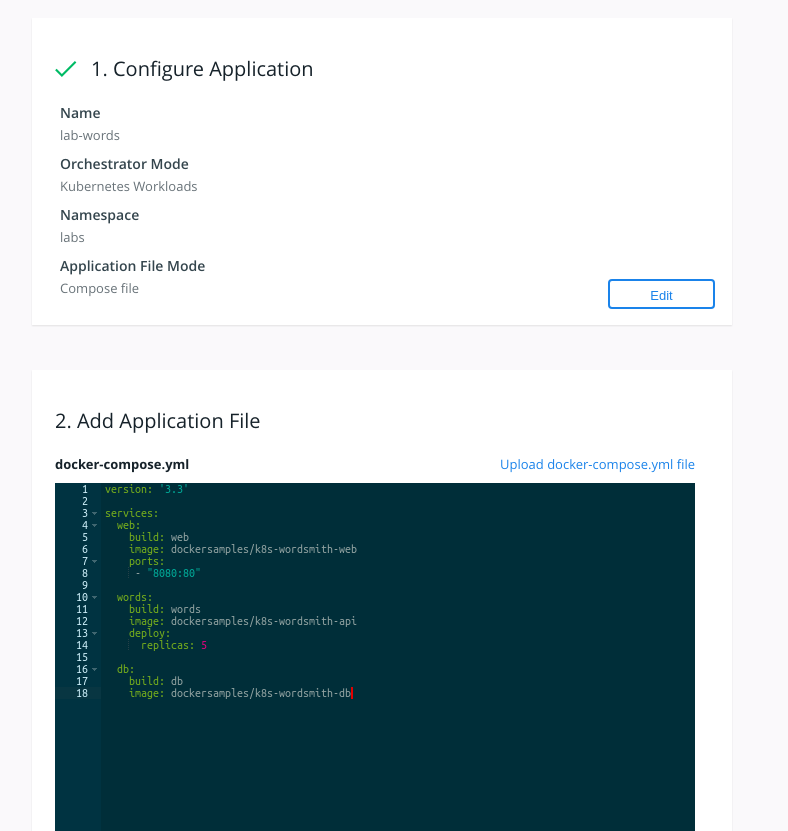

- Compose stack deployment for Swarm and Kubernetes apps (docker stack deploy)

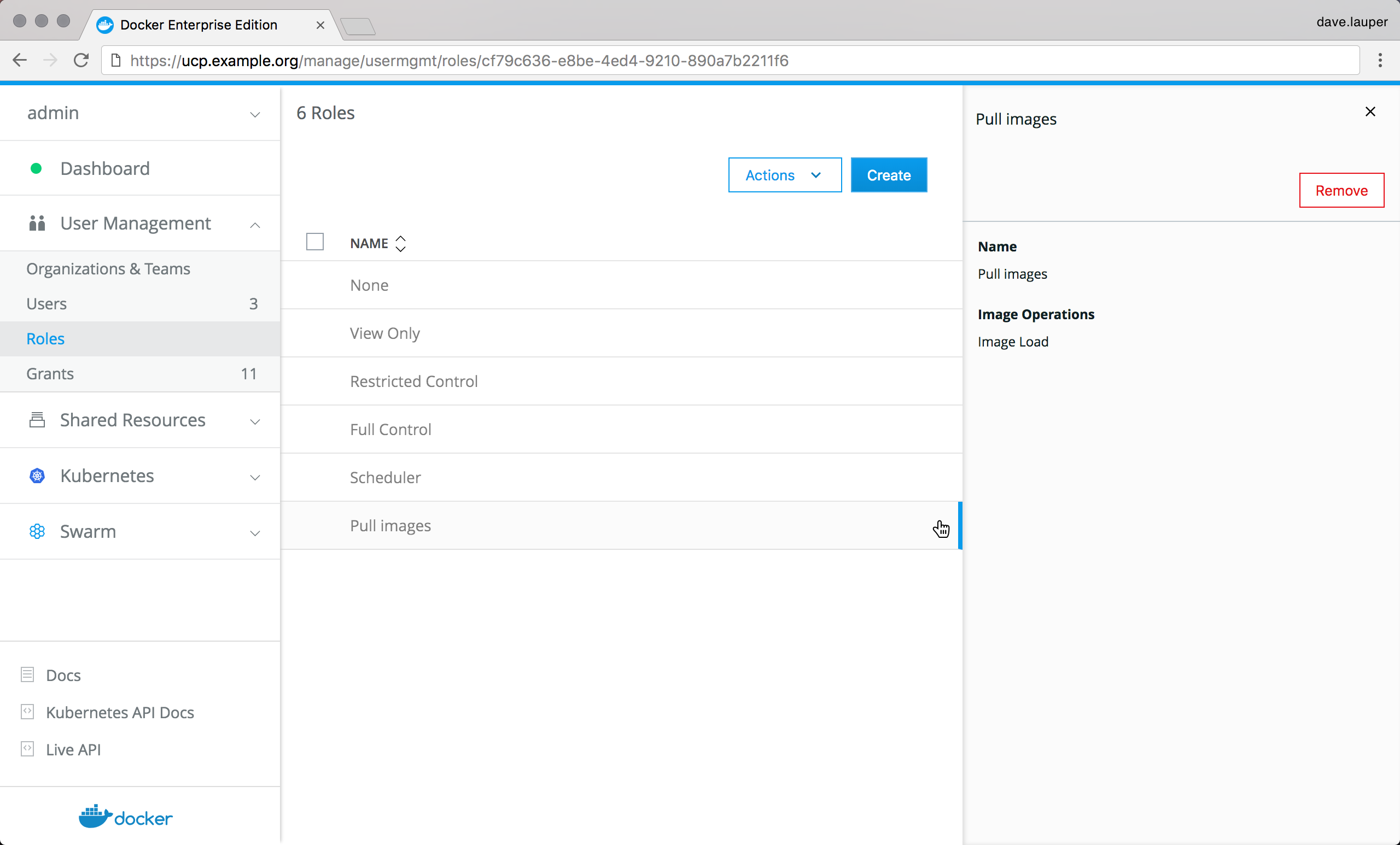

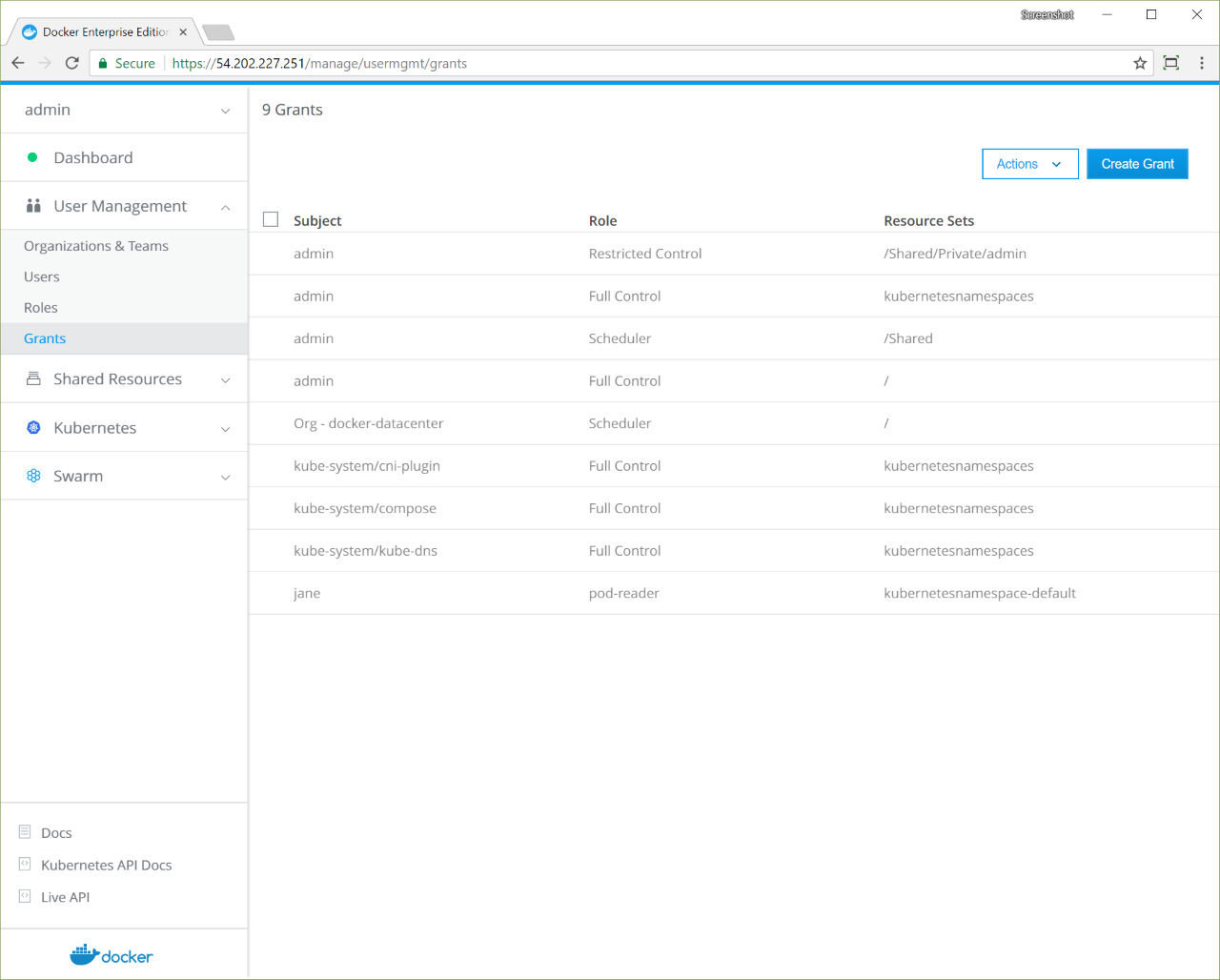

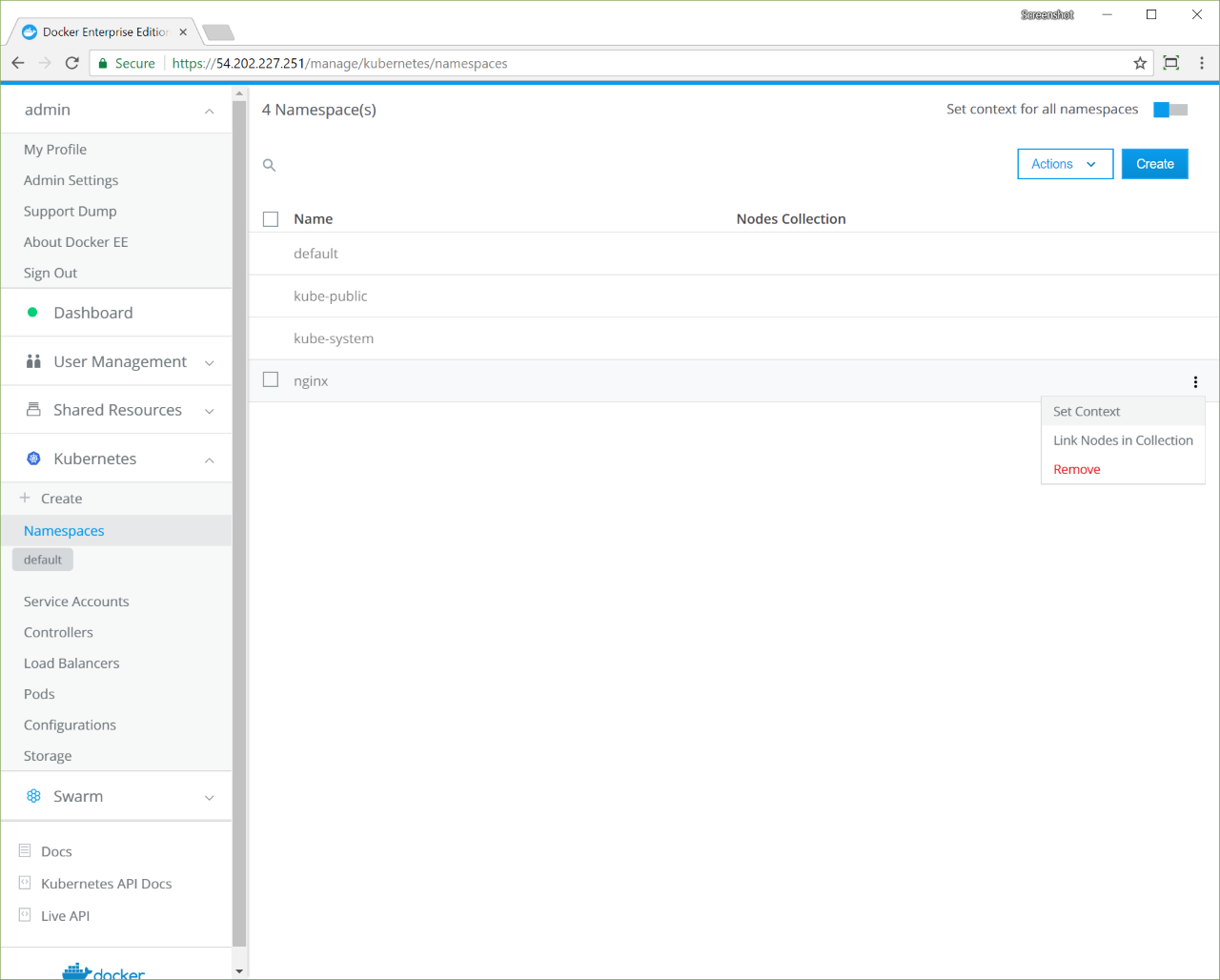

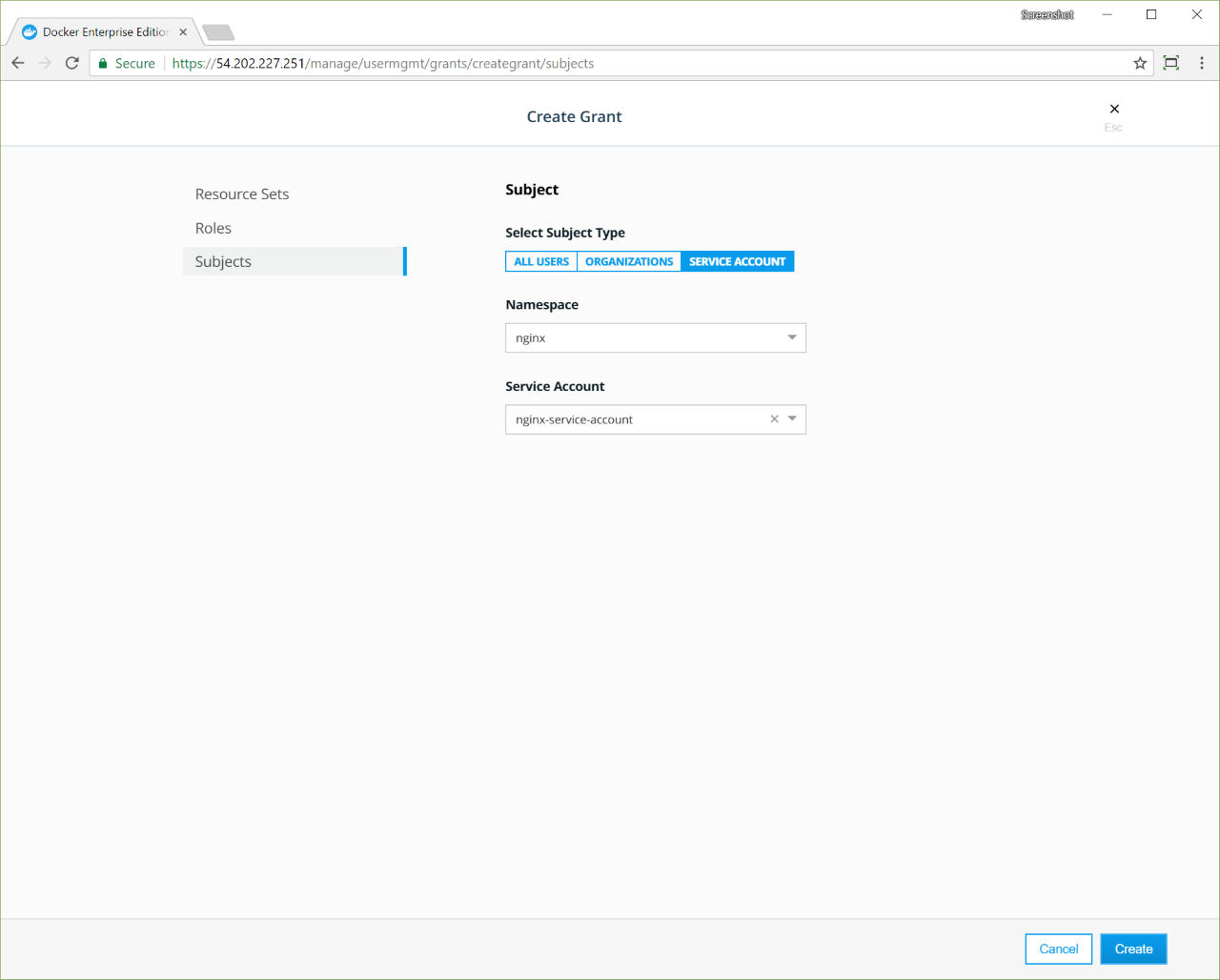

- Role-based access control for Kubernetes workloads

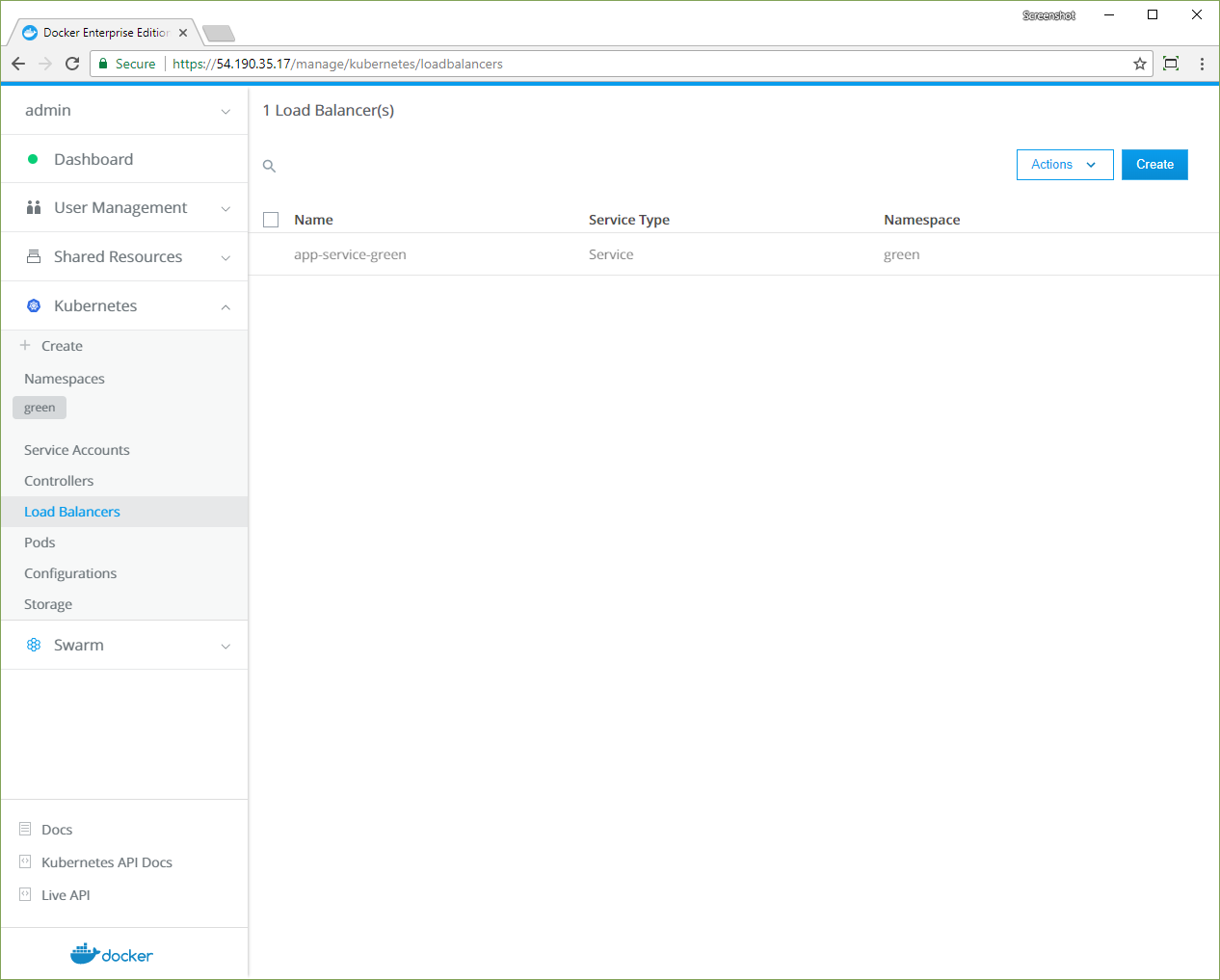

- Ingress Controllers with Kubernetes L7 routing

- Pod Security Policies to define a set of conditions that a pod must run with in order to be accepted into the system

- Container Storage Interface (CSI) support

- iSCSI support for Kubernetes

- Kubernetes-native ingress (Istio)

In addition, UCP integrates with Kubernetes by using admission controllers, which enable:

- Authenticating user client bundle certificates when communicating directly with the Kubernetes API server

- Authorizing requests via the UCP role-based access control model

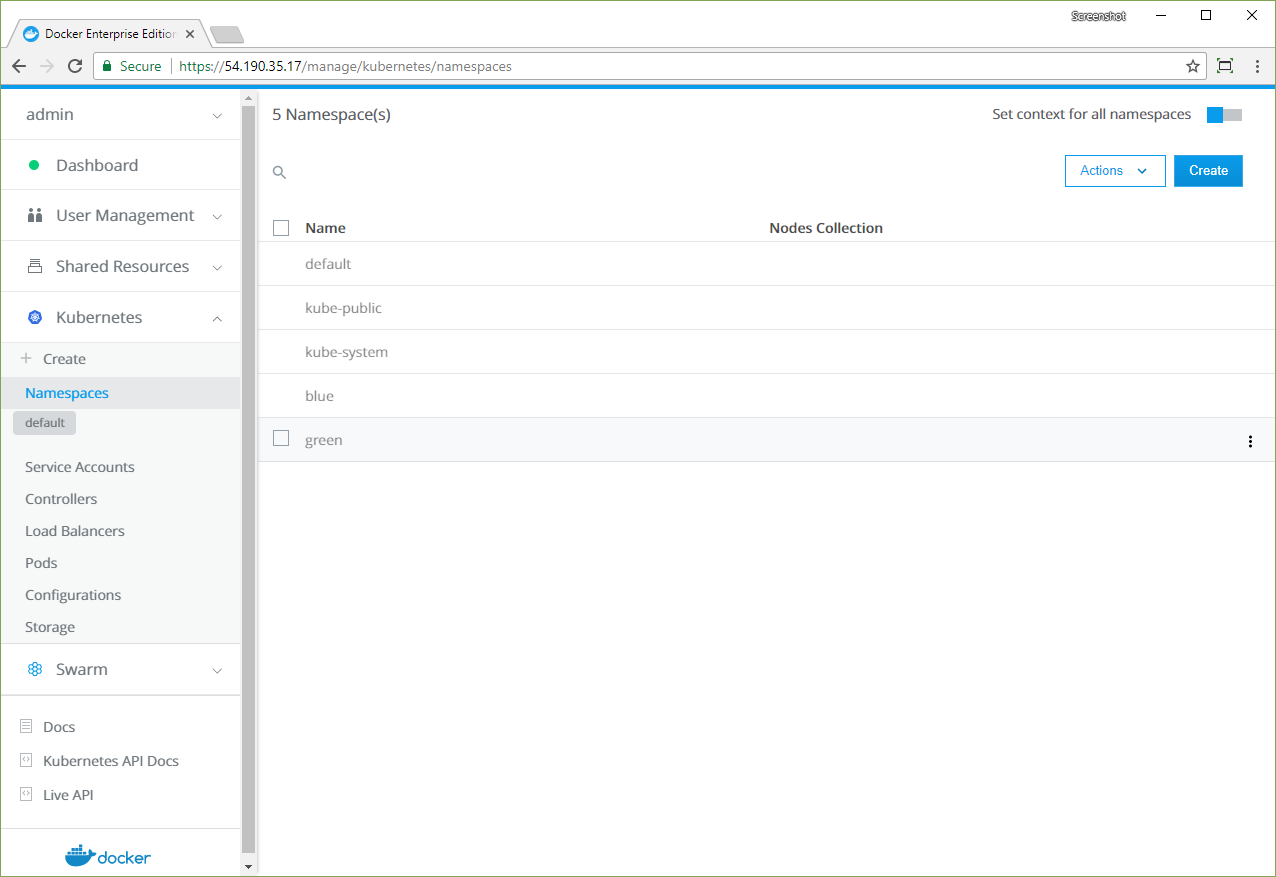

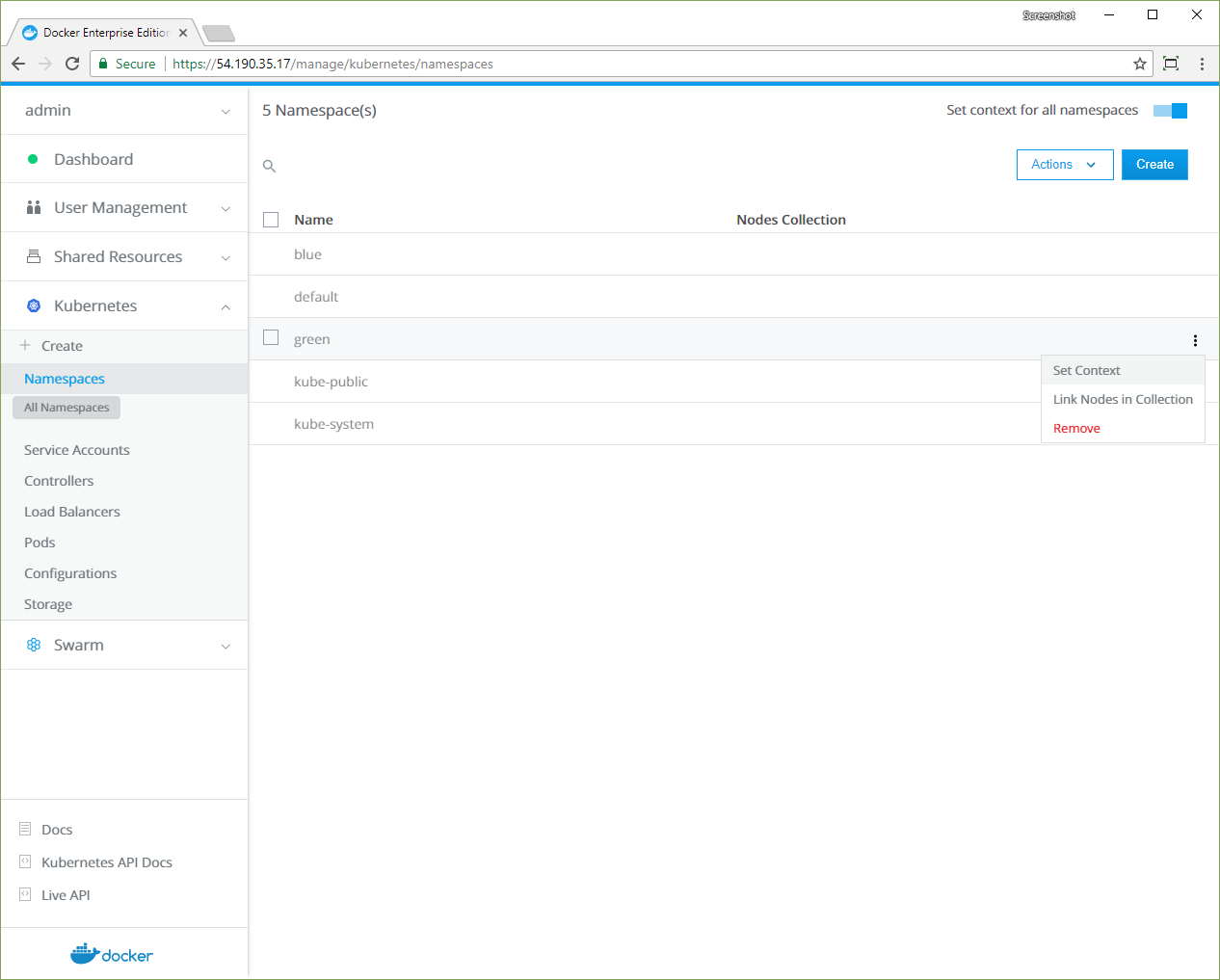

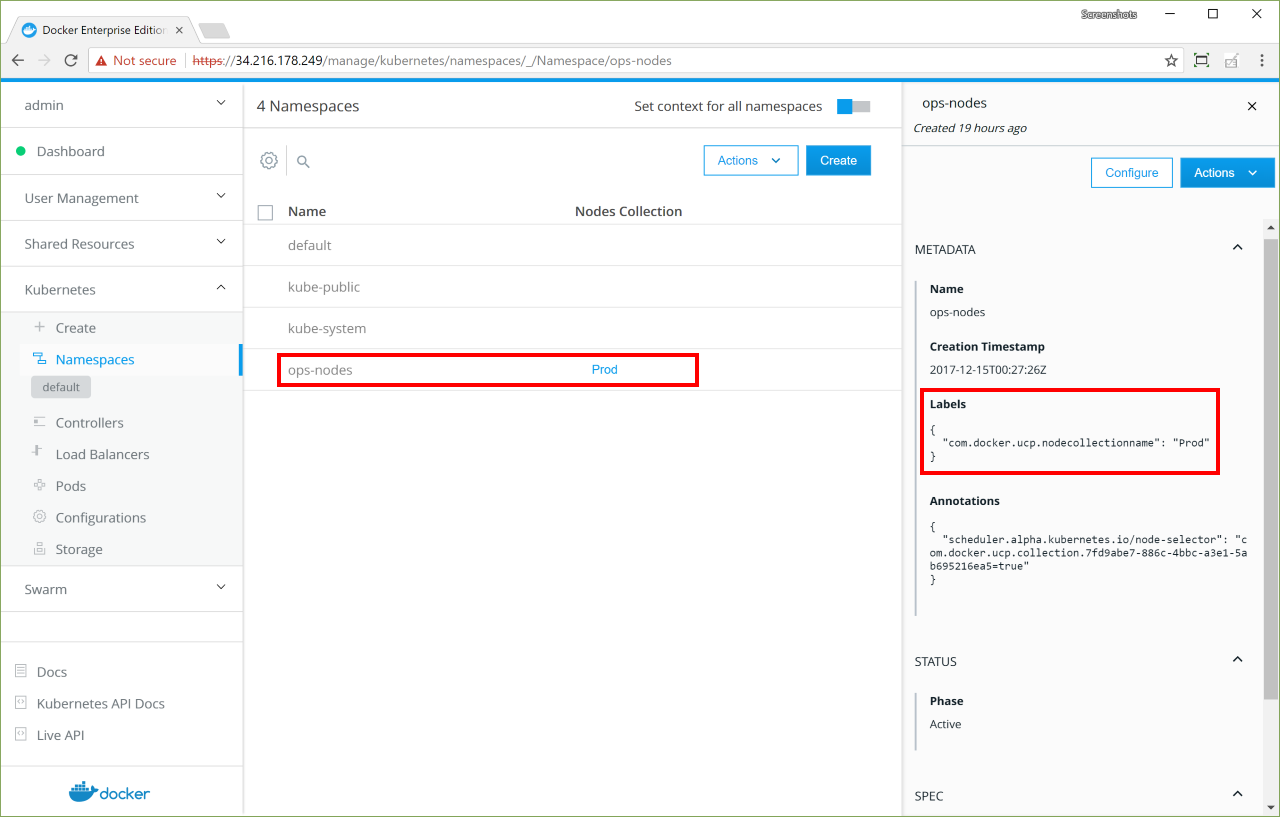

- Assigning nodes to a namespace by injecting a

NodeSelectorautomatically to workloads via admission control - Keeping all nodes in both Kubernetes and Swarm orchestrator inventories

- Fine-grained access control and privilege escalation prevention without

the

PodSecurityPolicyadmission controller - Resolving images of deployed workloads automatically, and accepting or rejecting images based on UCP’s signing-policy feature

The default Docker Enterprise installation includes both Kubernetes and Swarm components across the cluster, so every newly joined worker node is ready to schedule Kubernetes or Swarm workloads.

Kubernetes CLI¶

Docker Enterprise exposes the standard Kubernetes API, so you can use kubectl to manage your Kubernetes workloads:

kubectl cluster-info

Which produces output similar to the following:

Kubernetes master is running at https://54.200.115.43:6443

KubeDNS is running at https://54.200.115.43:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info

dump'.

Orchestration platform features¶

- Docker Enterprise manager nodes are both Swarm managers and Kubernetes masters, to enable high availability

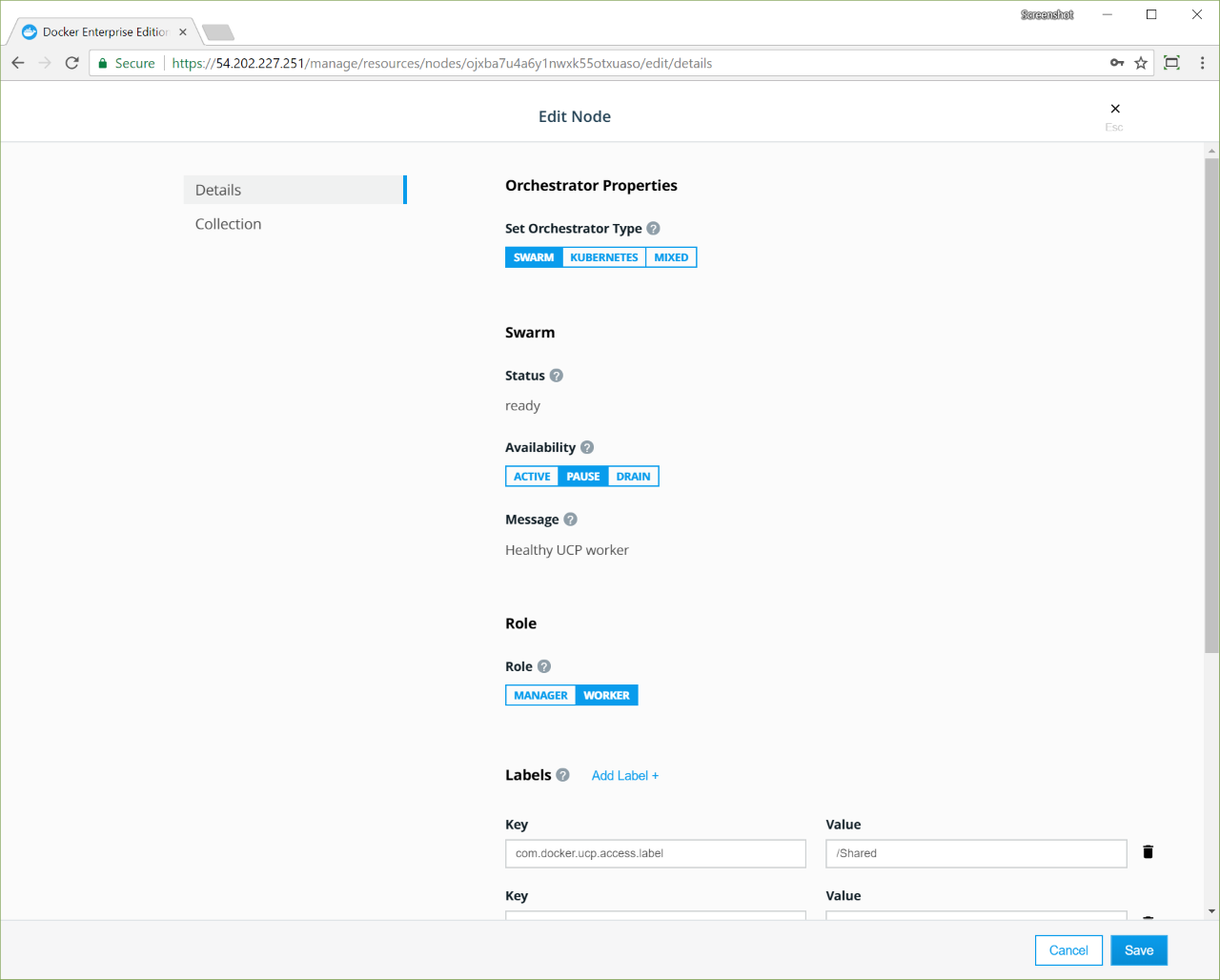

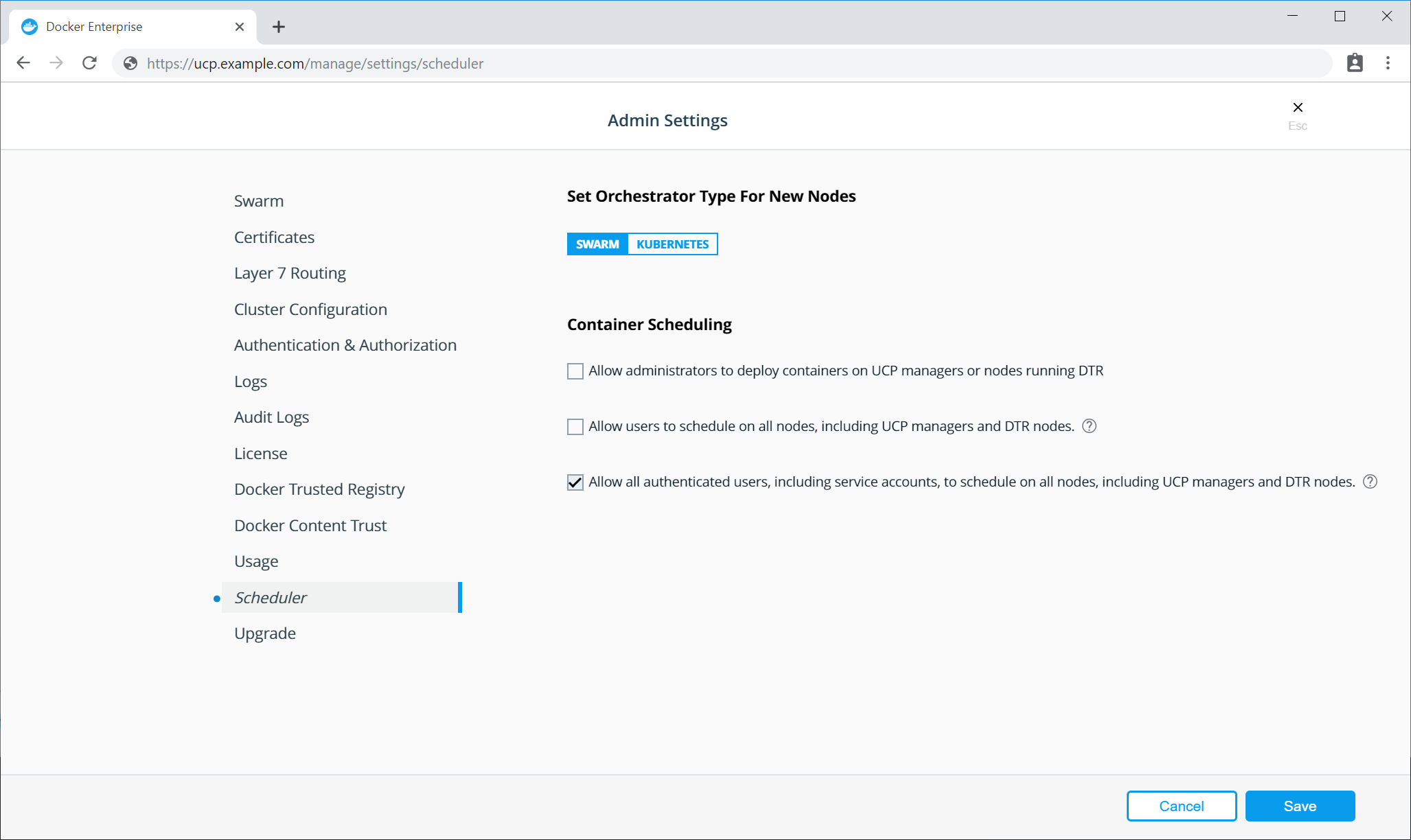

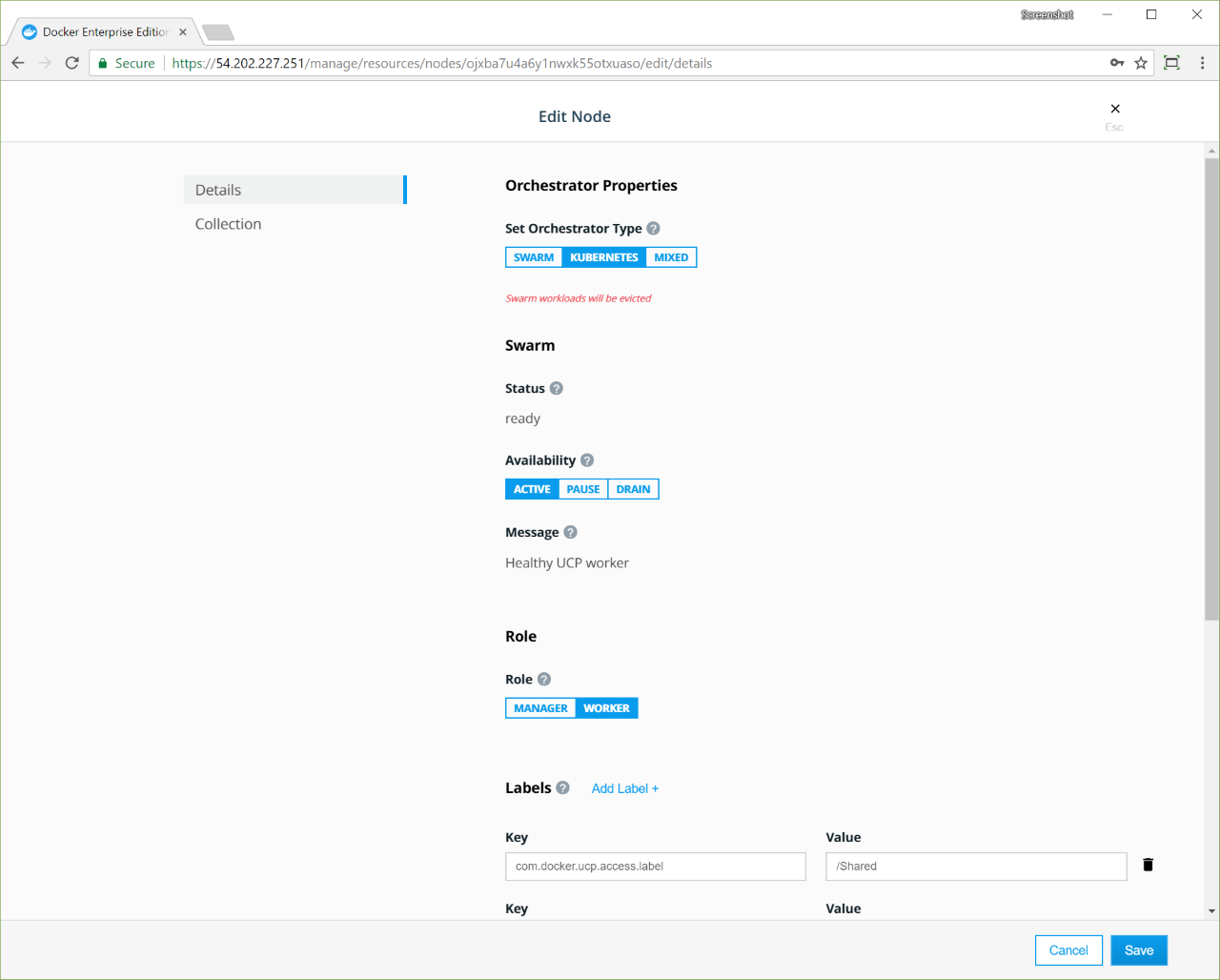

- Allocate worker nodes for Swarm or Kubernetes workloads (or both)

- Single pane of glass for monitoring apps

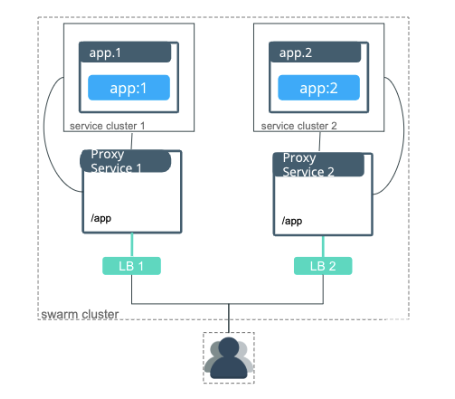

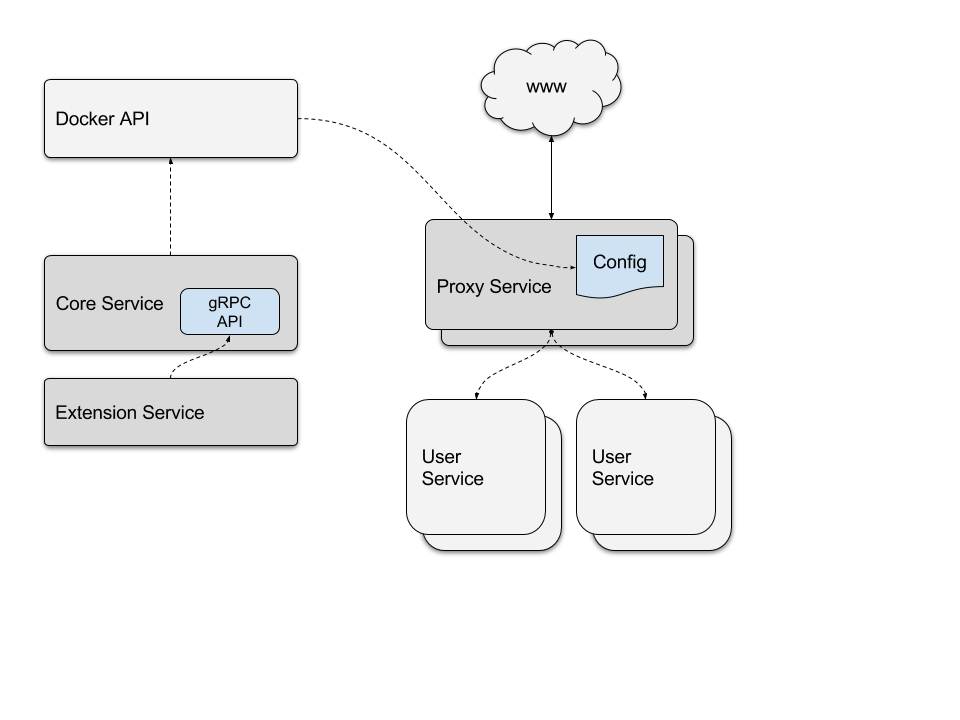

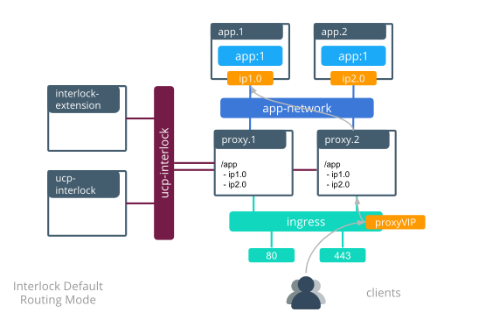

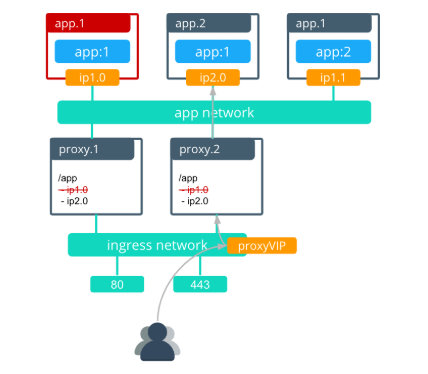

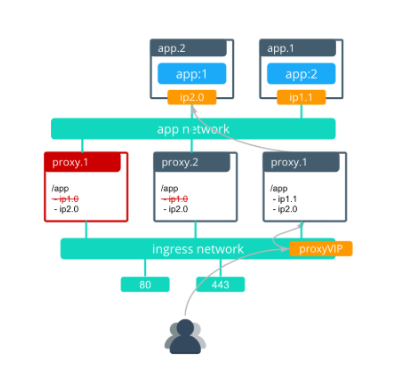

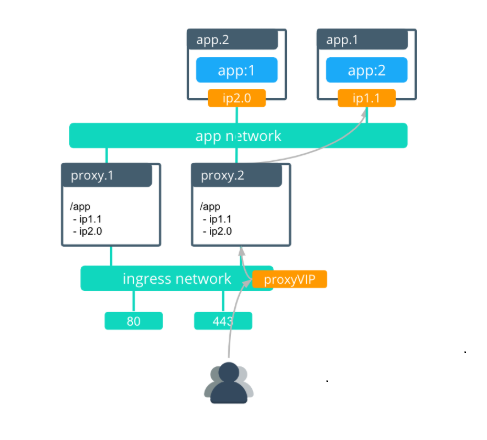

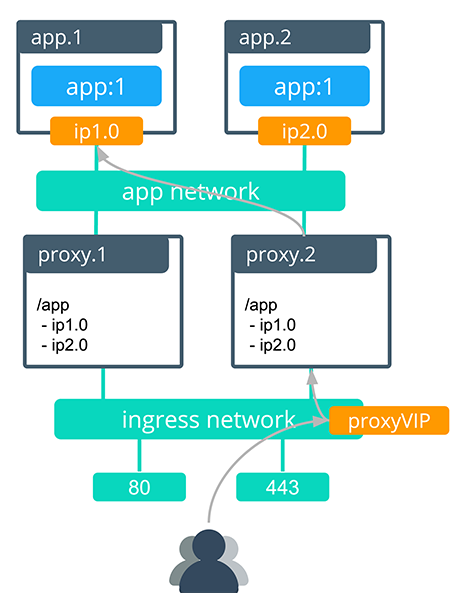

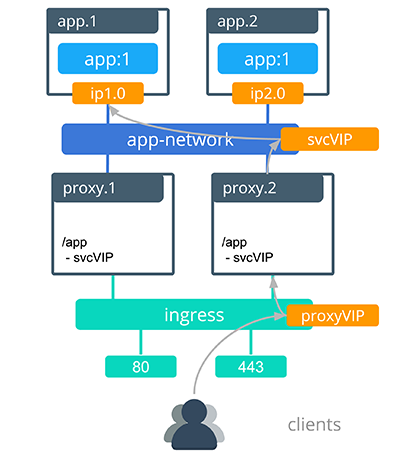

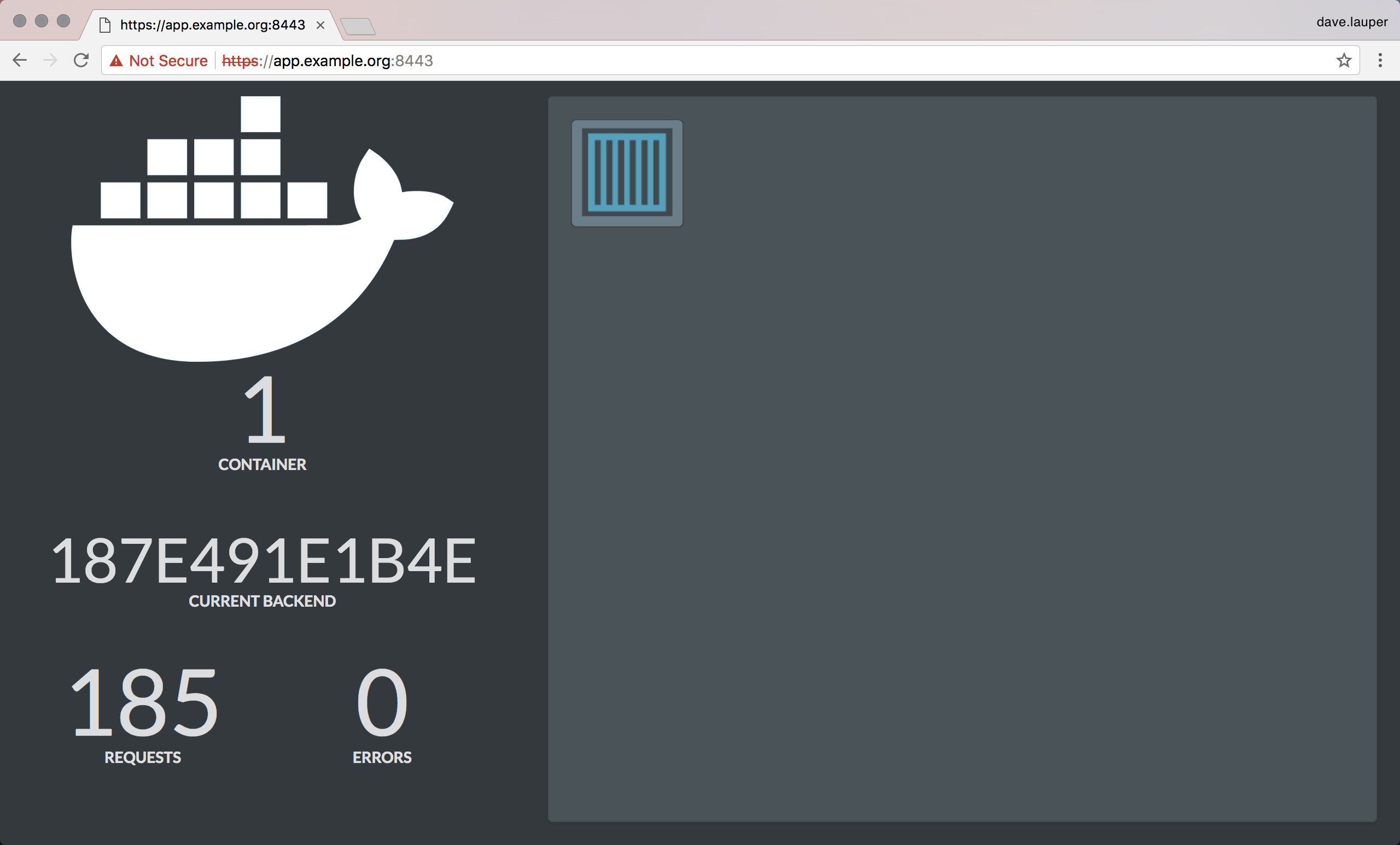

- Enhanced Swarm hostname routing mesh with Interlock 2.0

- One platform-wide management plane: secure software supply chain, secure multi-tenancy, and secure and highly available node management

Security and access control¶

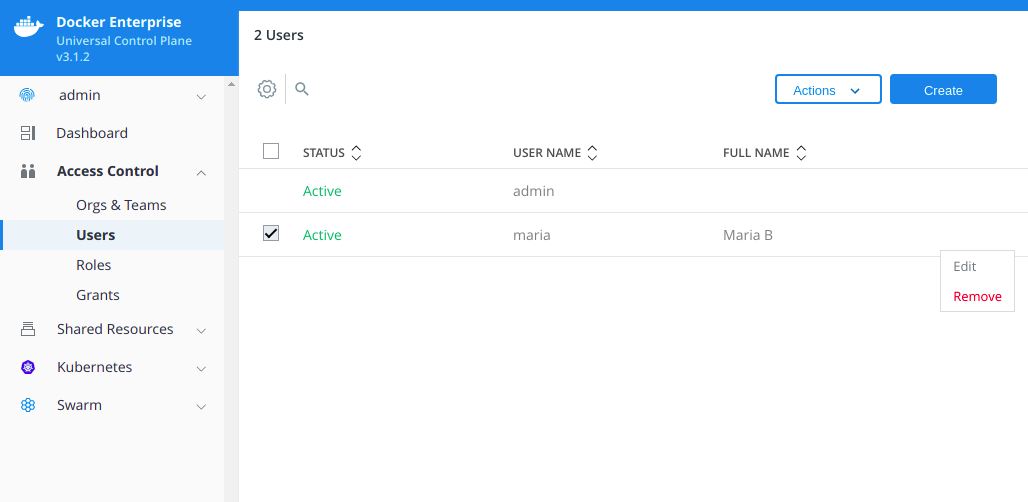

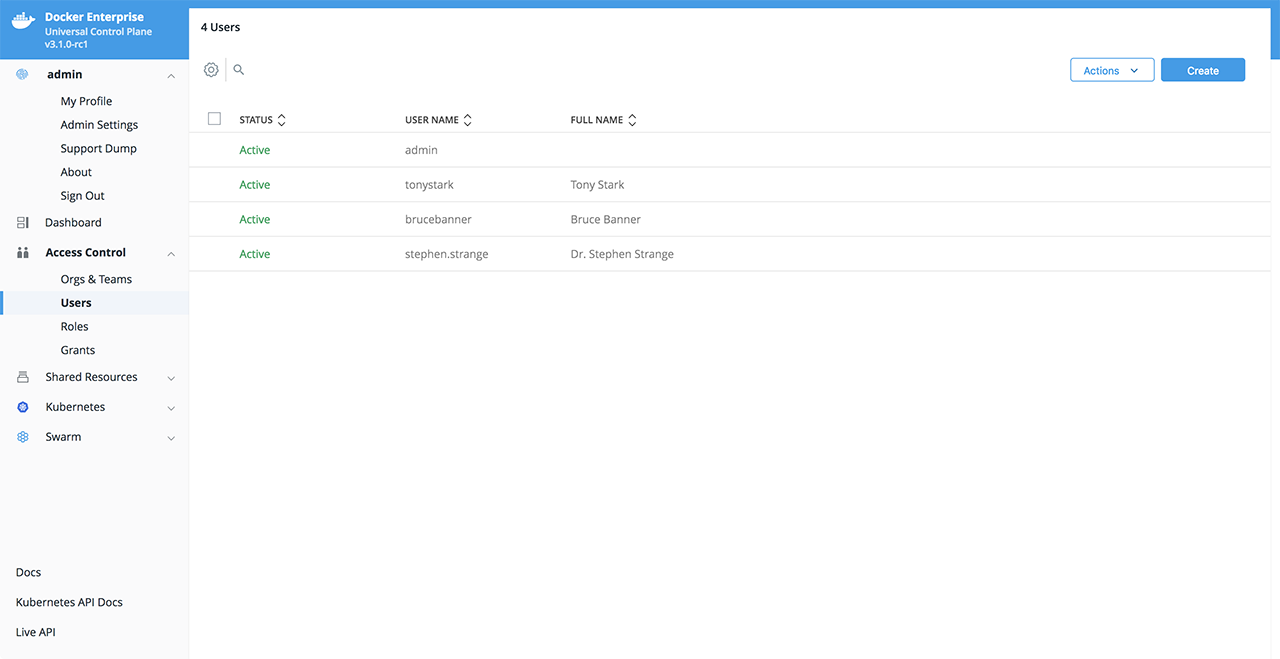

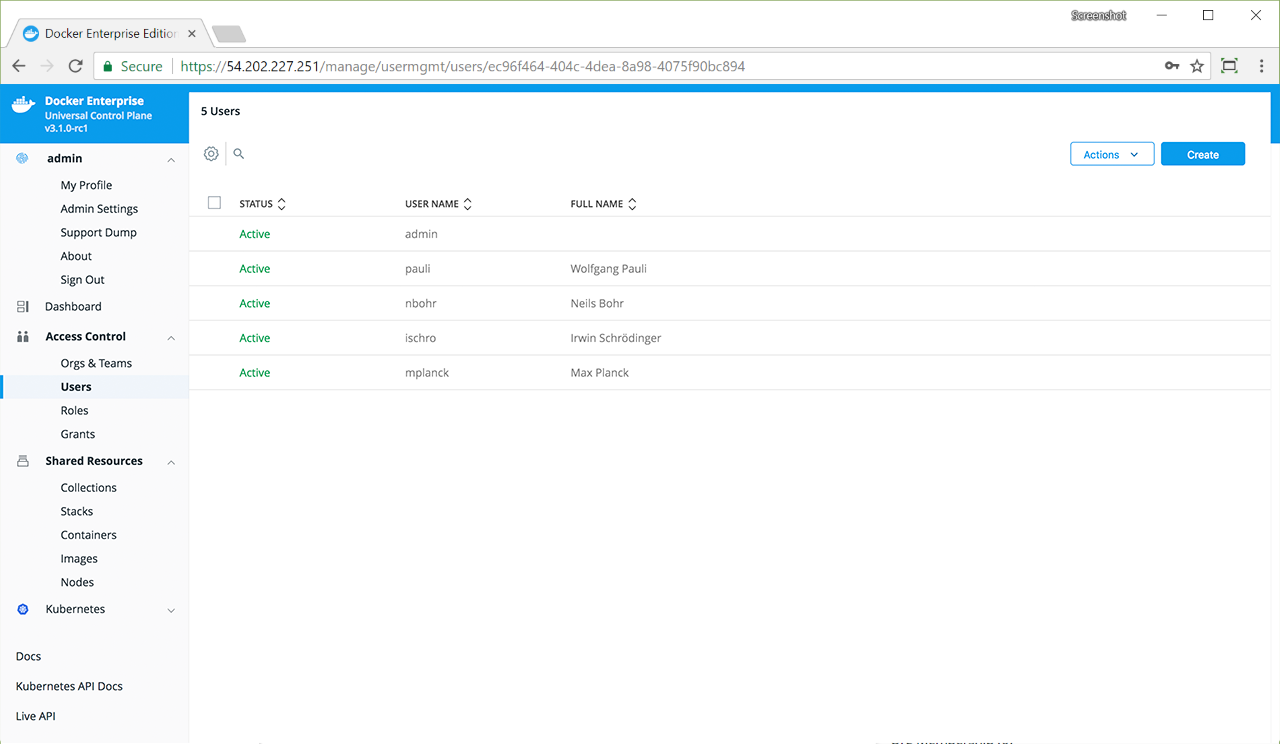

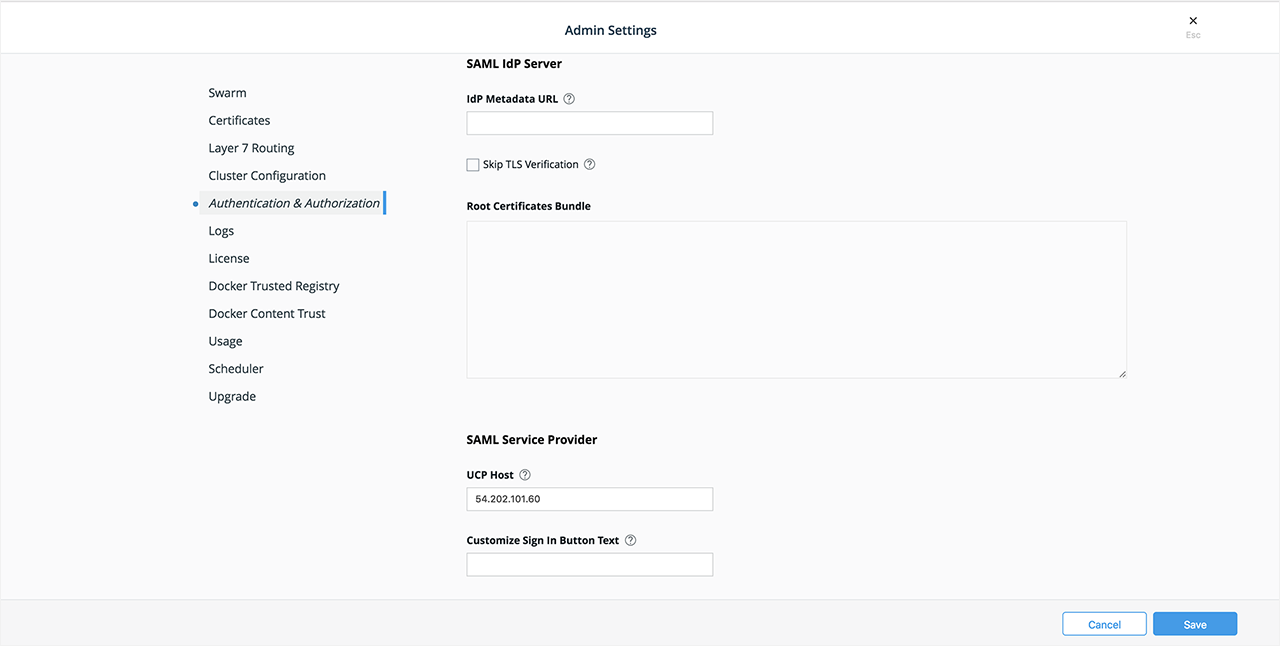

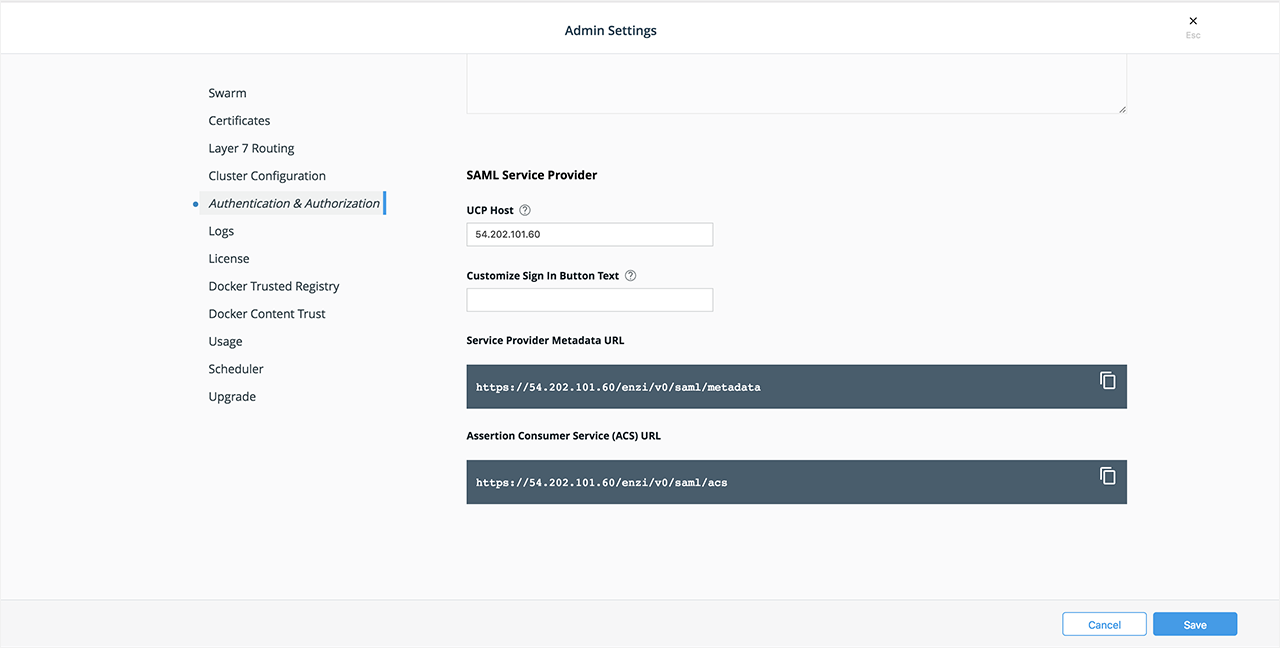

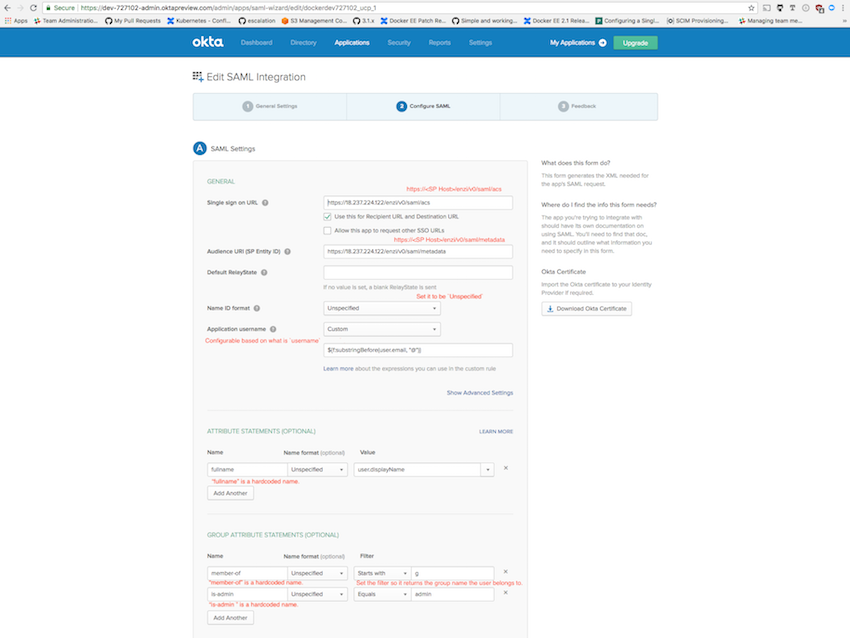

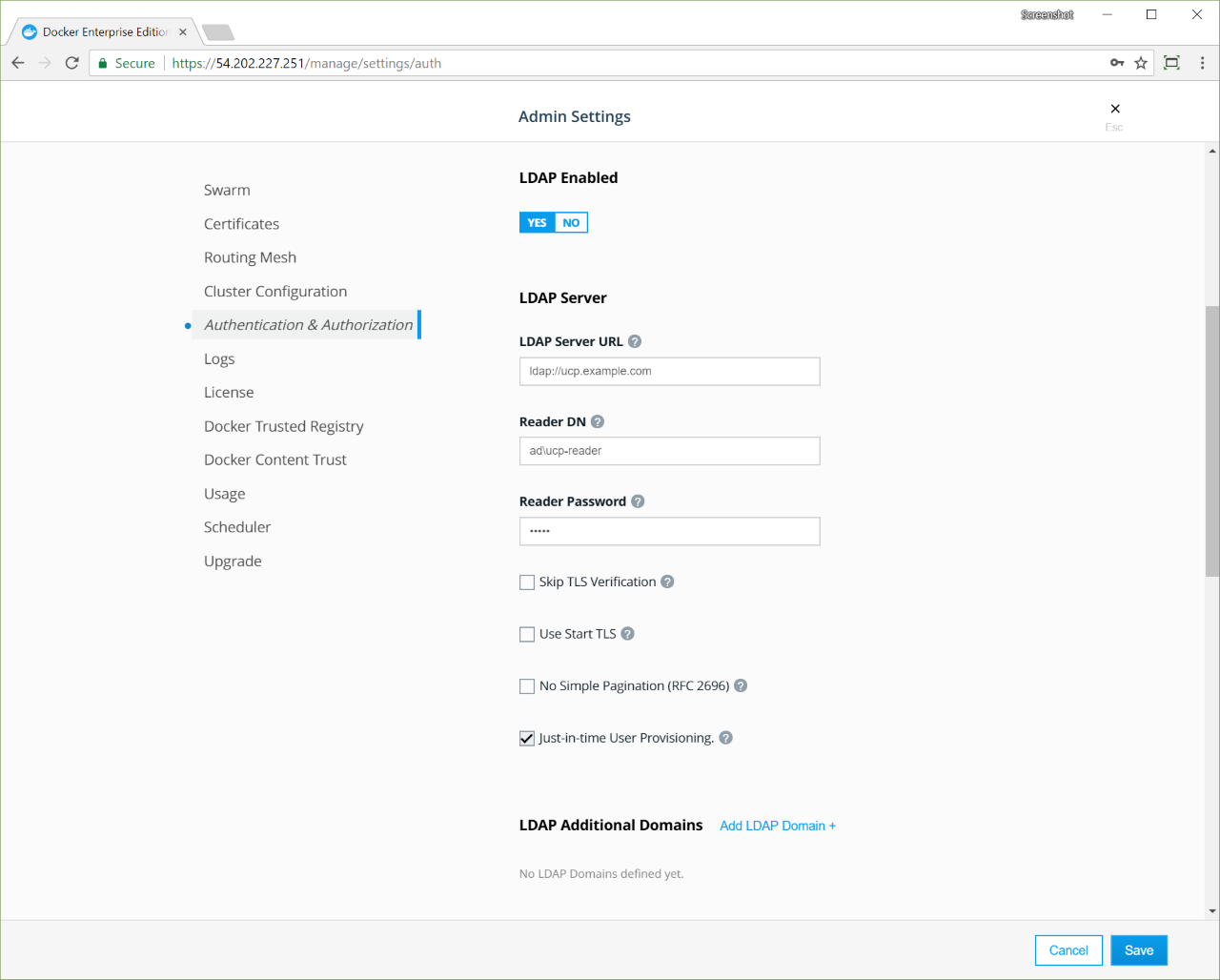

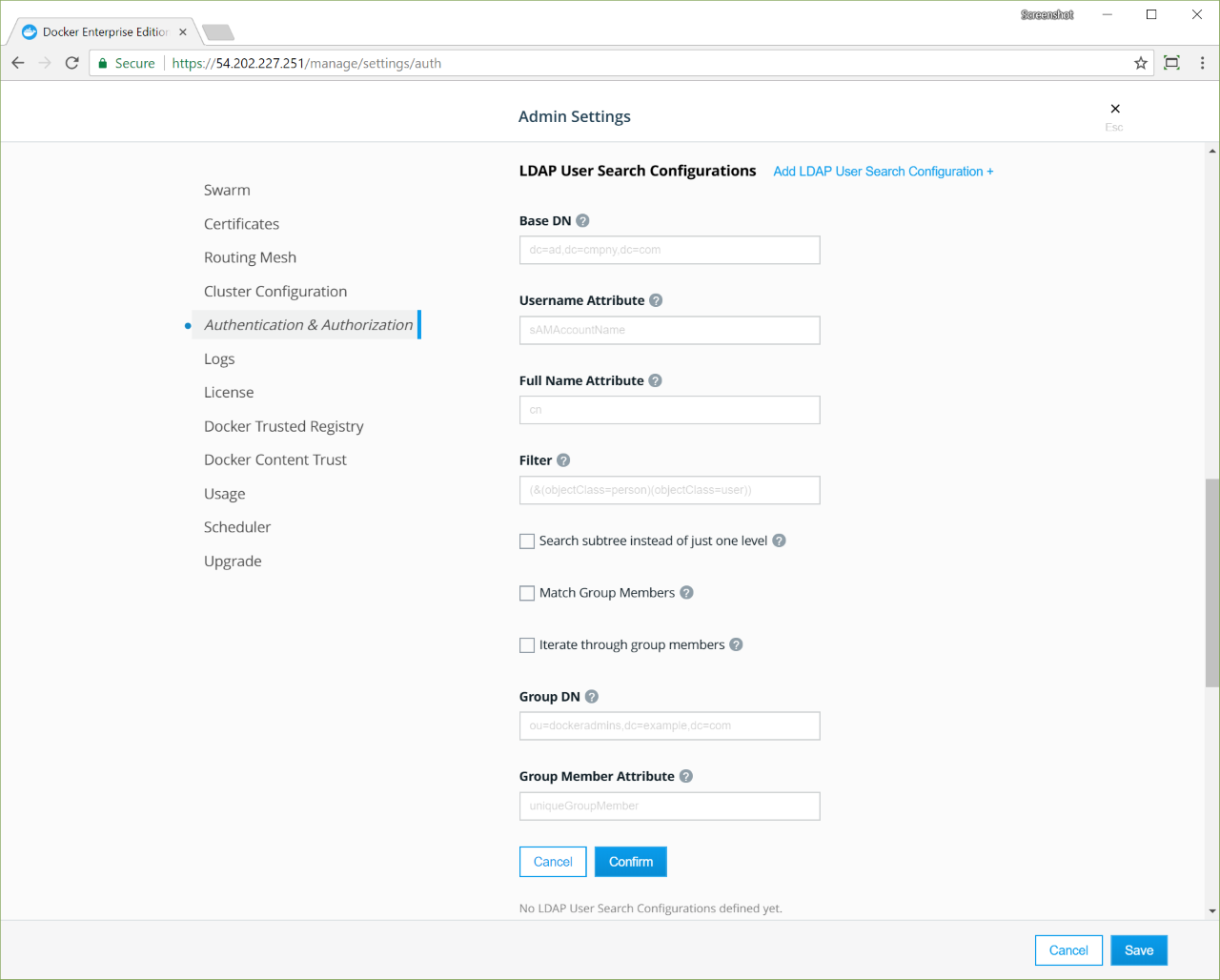

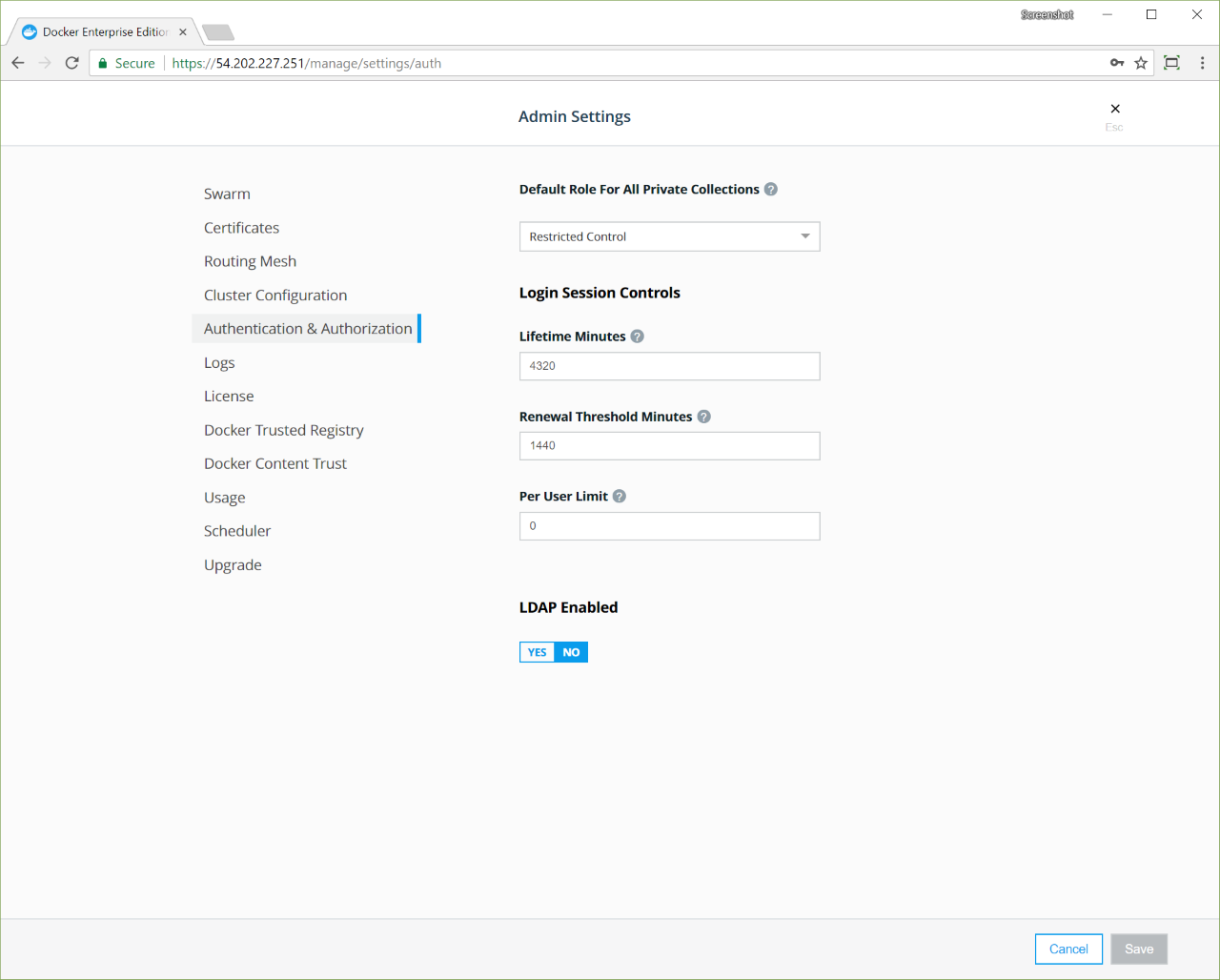

Docker Enterprise has its own built-in authentication mechanism with role-based access control (RBAC), so that you can control who can access and make changes to your cluster and applications. Also, Docker Enterprise authentication integrates with LDAP services and supports SAML SCIM to proactively synchronize with authentication providers. You can also opt to enable the PKI authenticati onto use client certificates, rather than username and password.

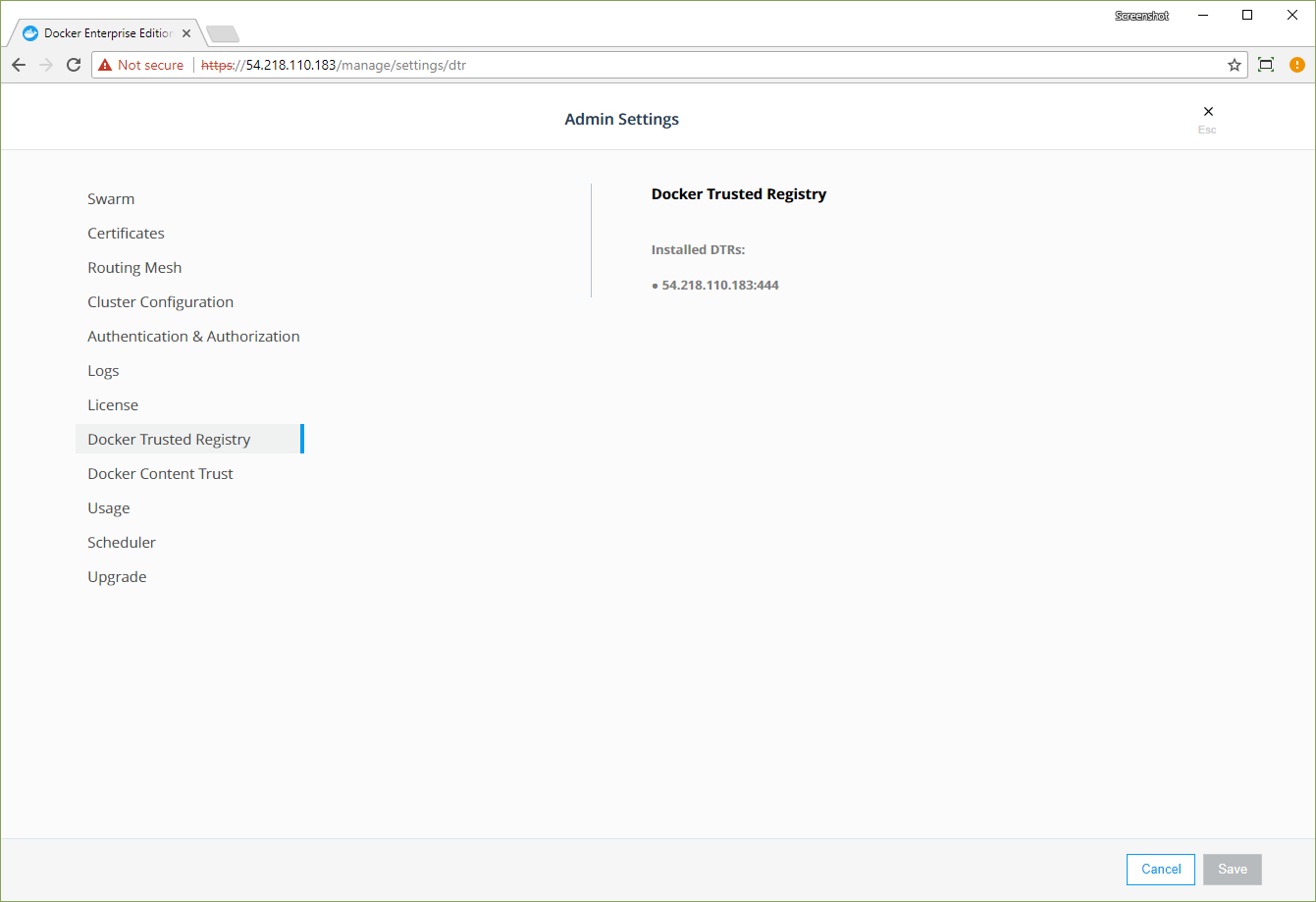

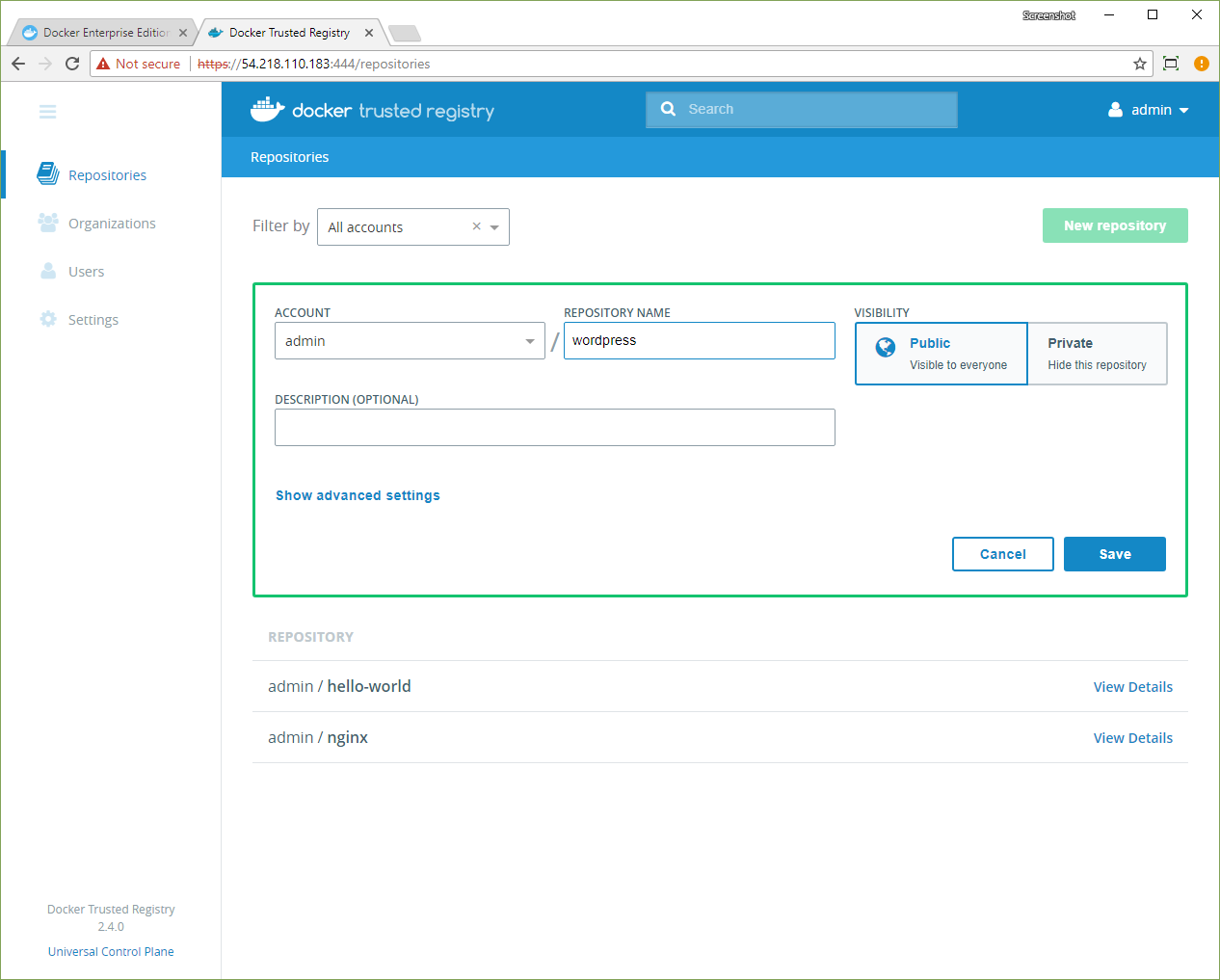

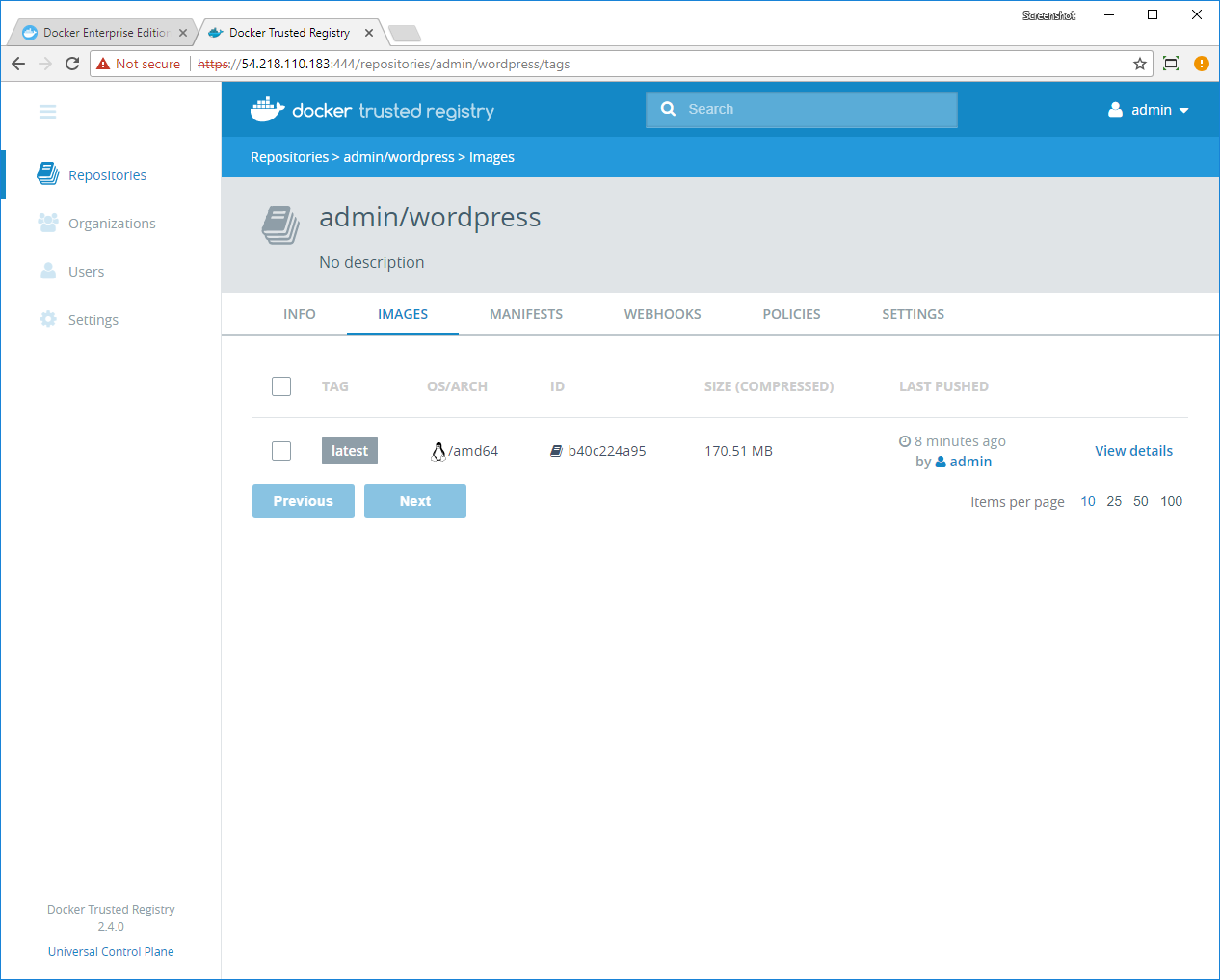

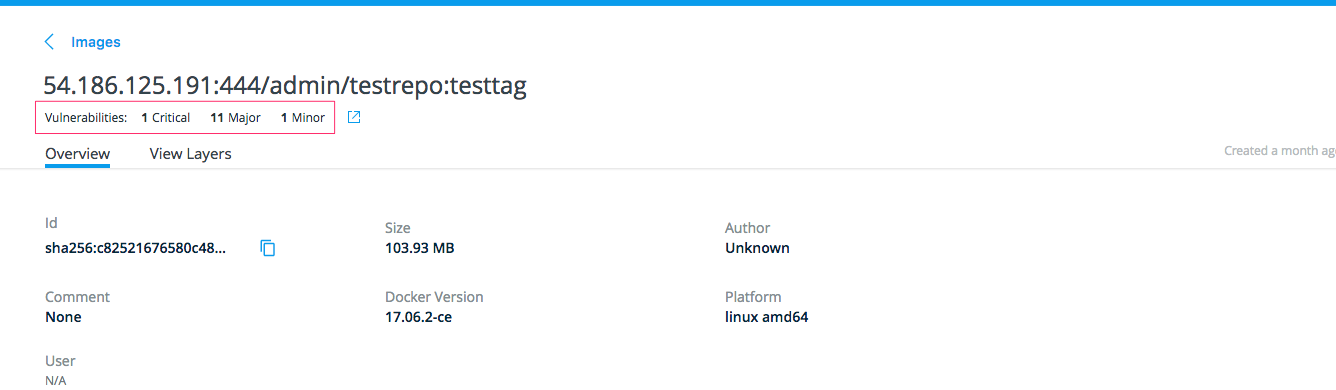

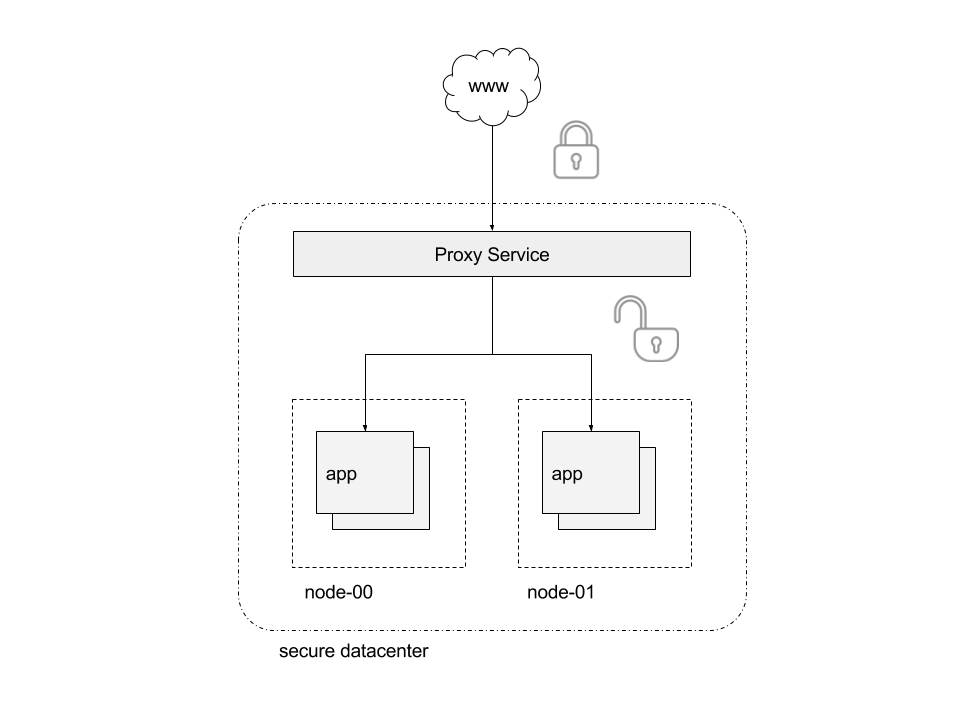

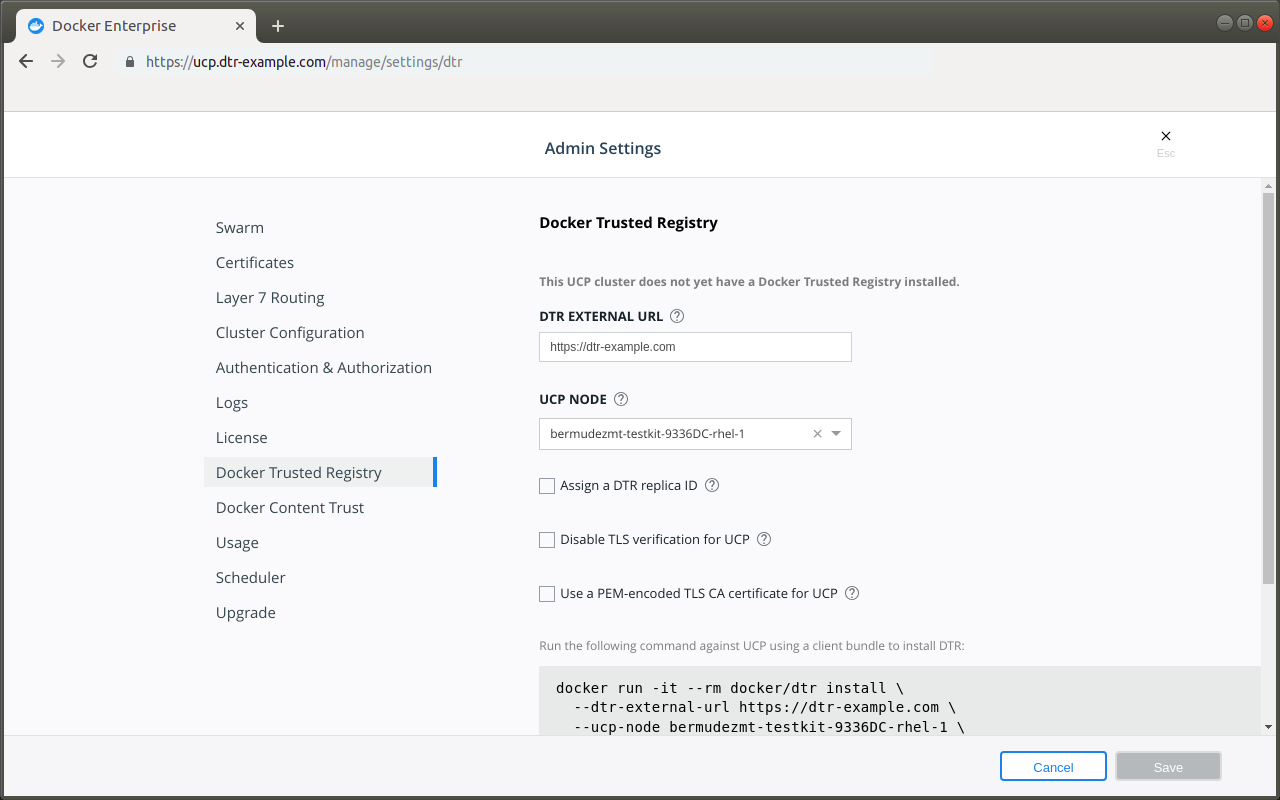

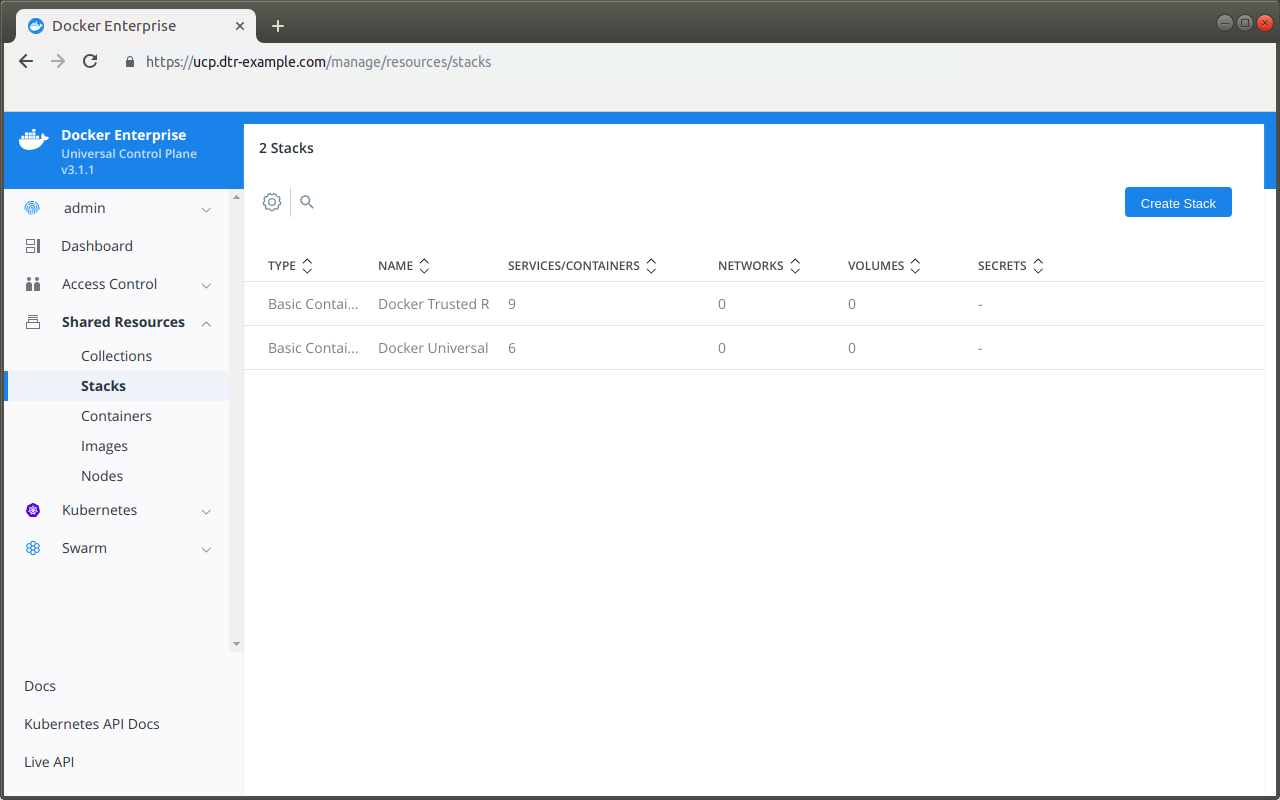

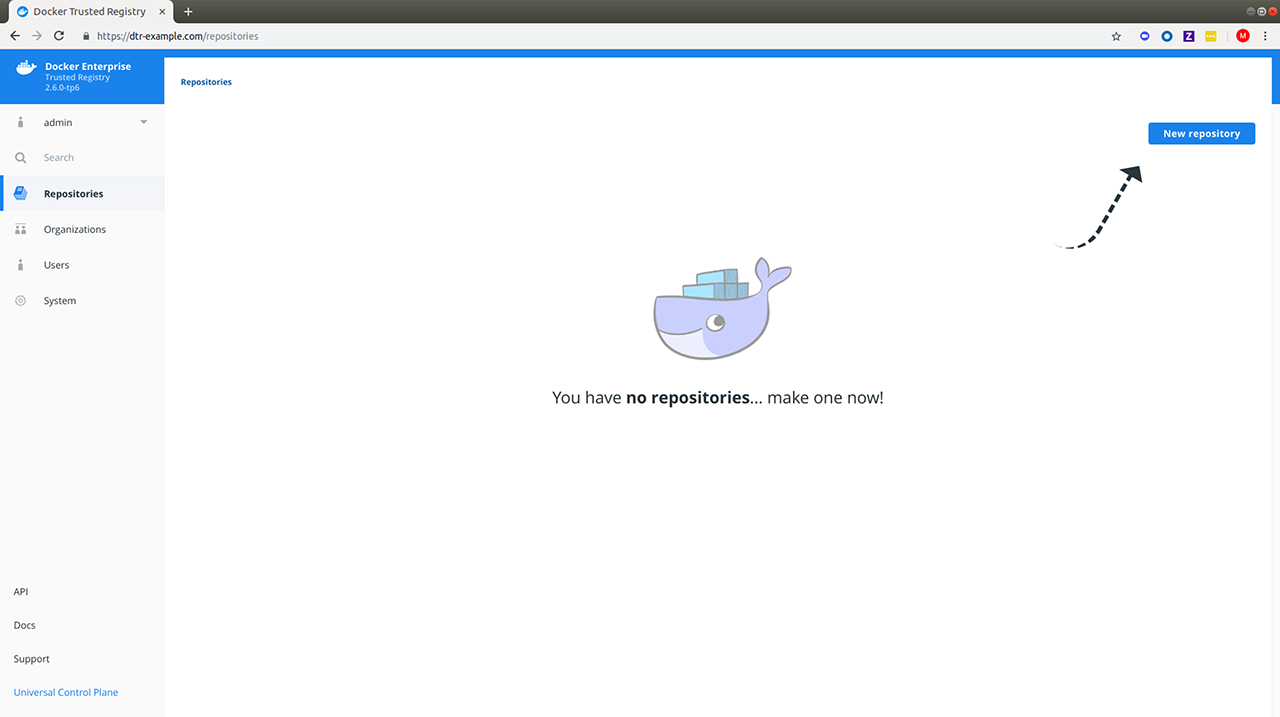

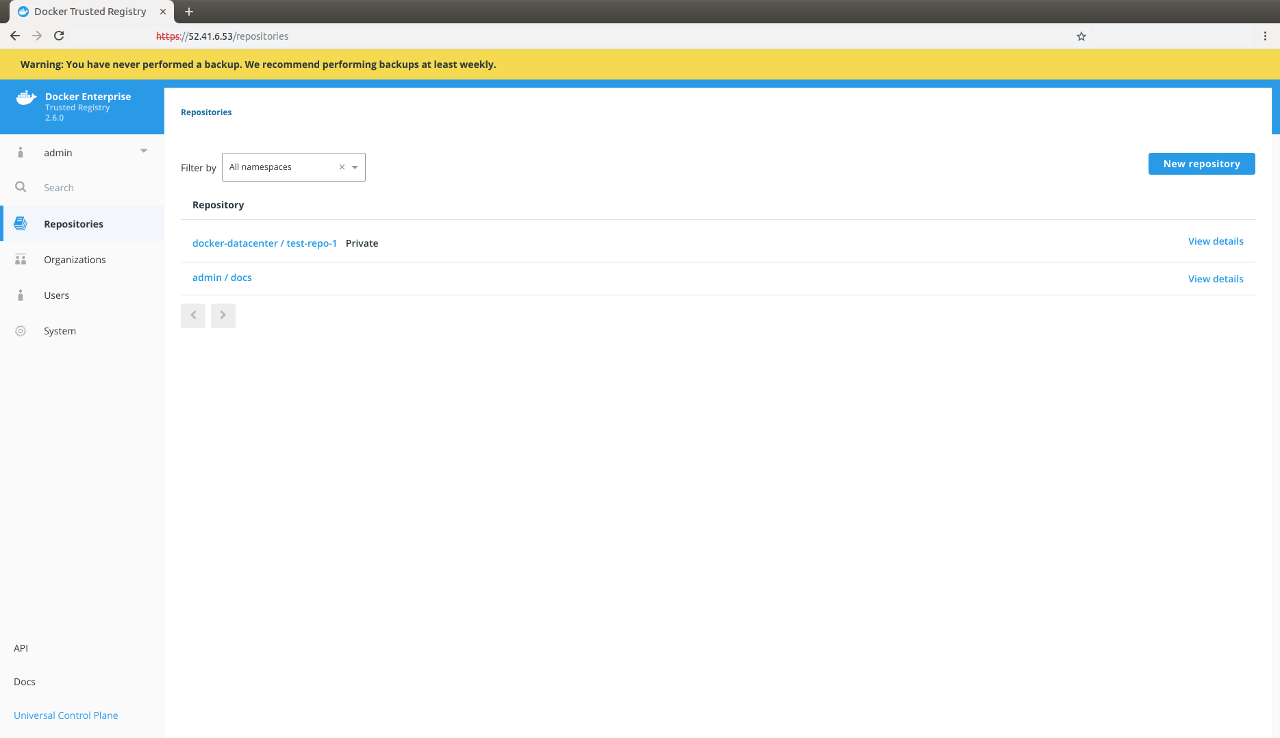

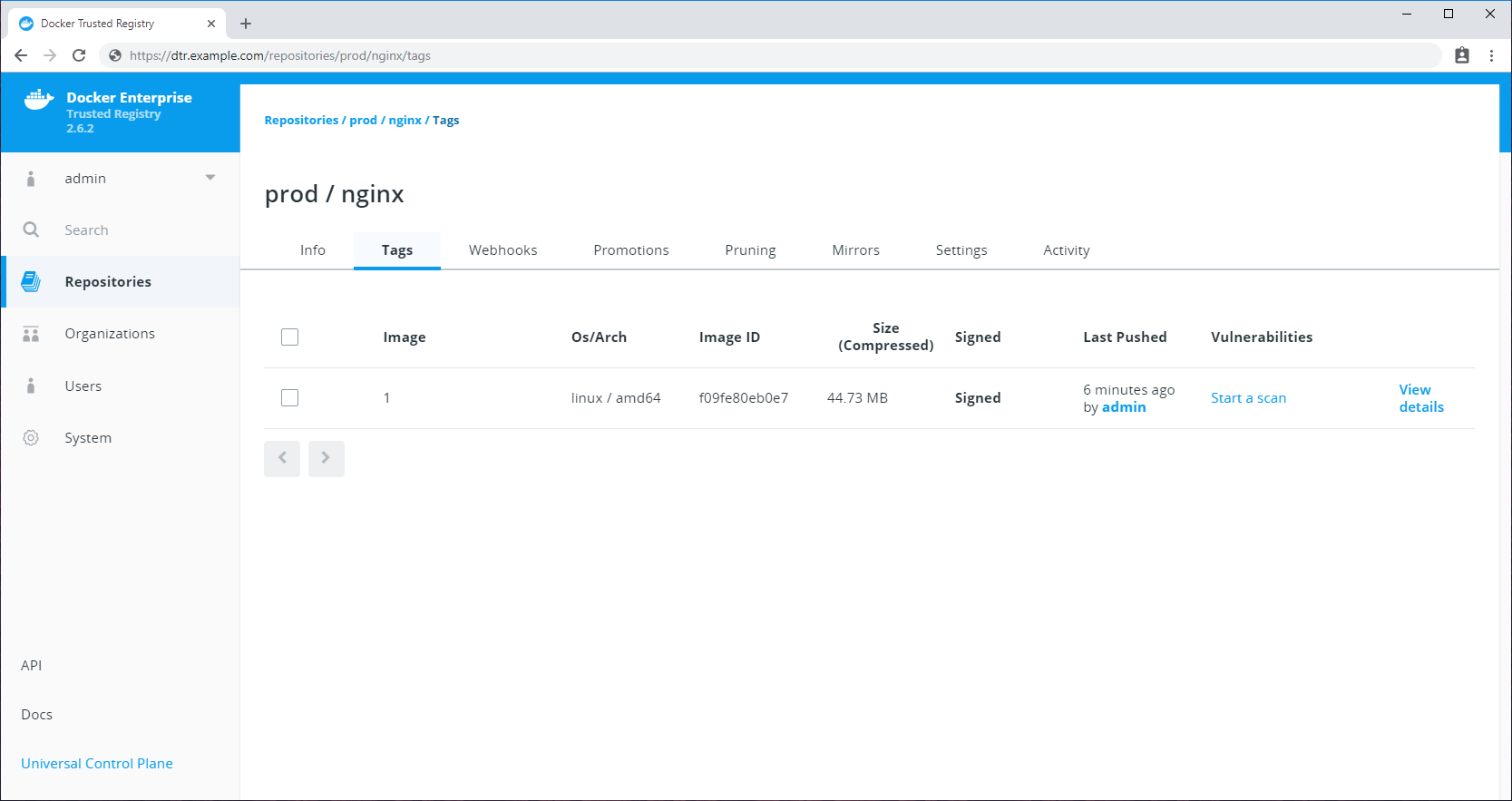

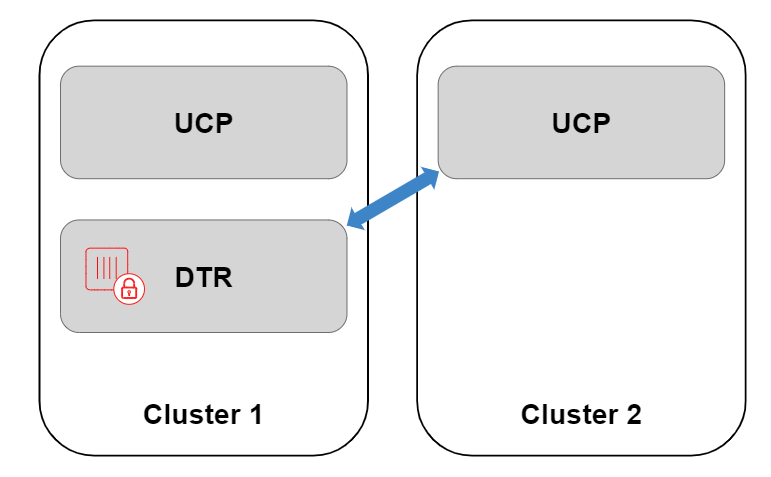

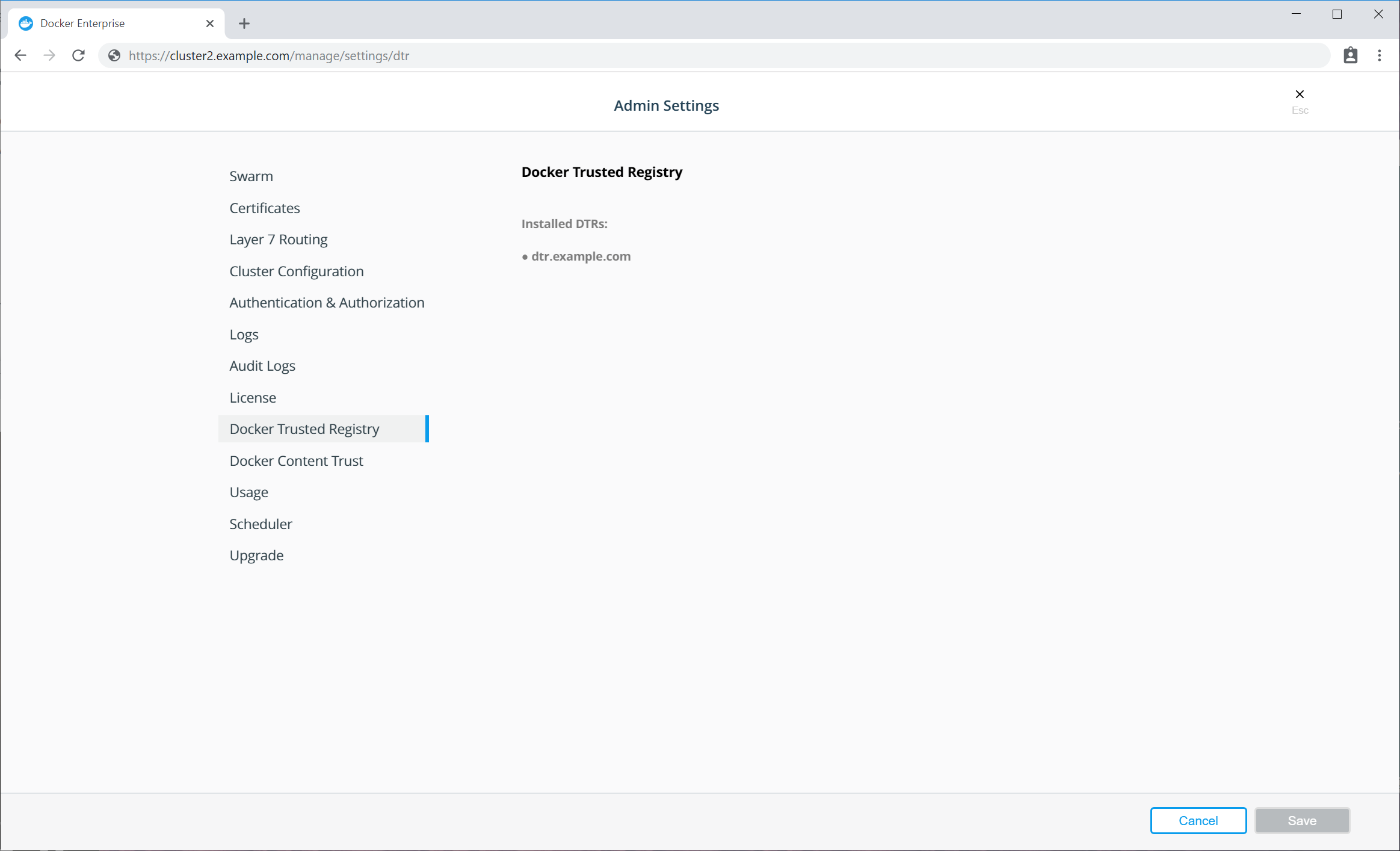

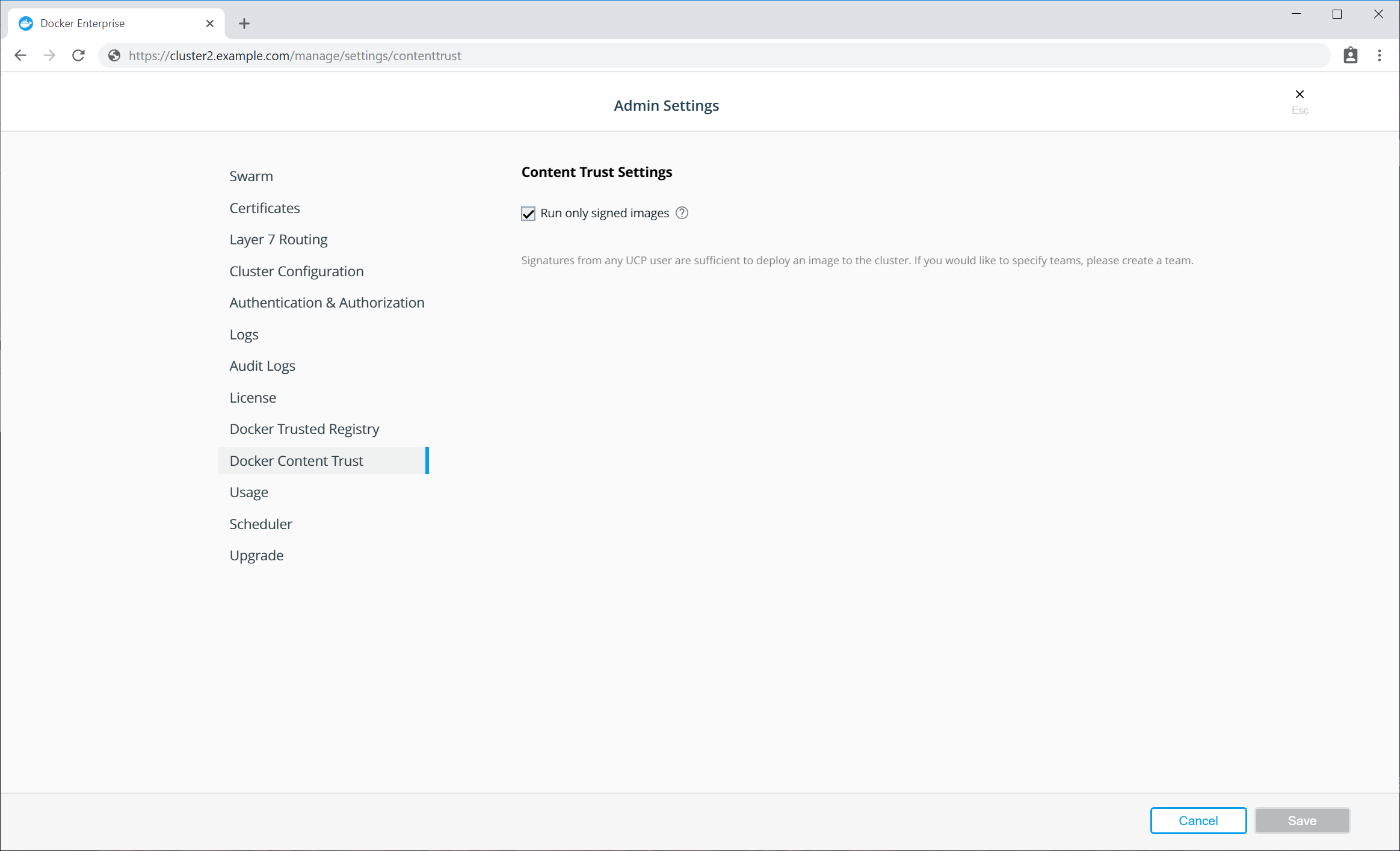

Docker Enterprise integrates with Docker Trusted Registry so that you can keep the Docker images you use for your applications behind your firewall, where they are safe and can’t be tampered with. You can also enforce security policies and only allow running applications that use Docker images you know and trust.

Windows application security¶

Windows applications typically require Active Directory authentication in order to communicate with other services on the network. Container-based applications use Group Managed Service Accounts (gMSA) to provide this authentication. Docker Swarm fully supports the use of gMSAs with Windows containers.

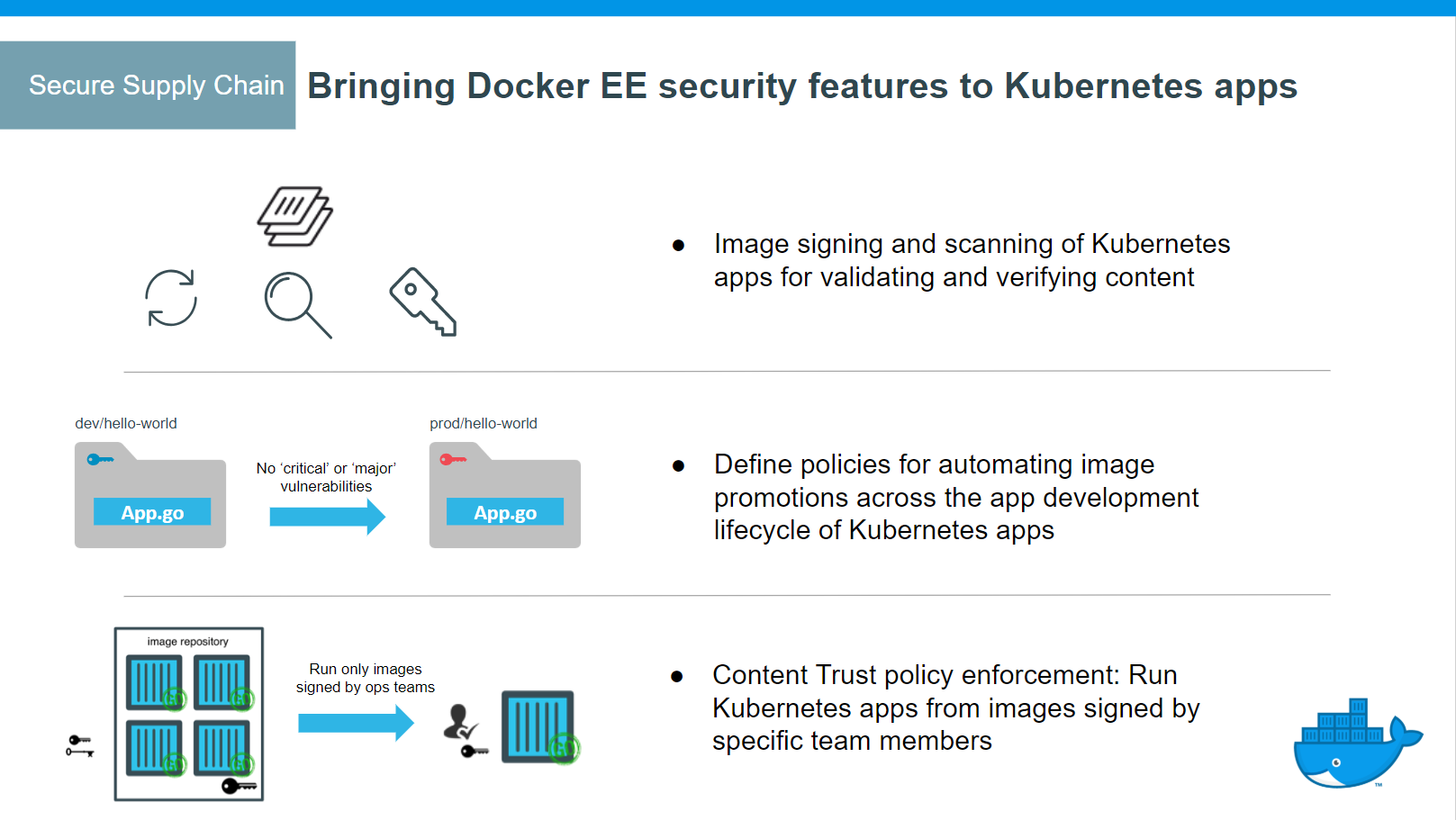

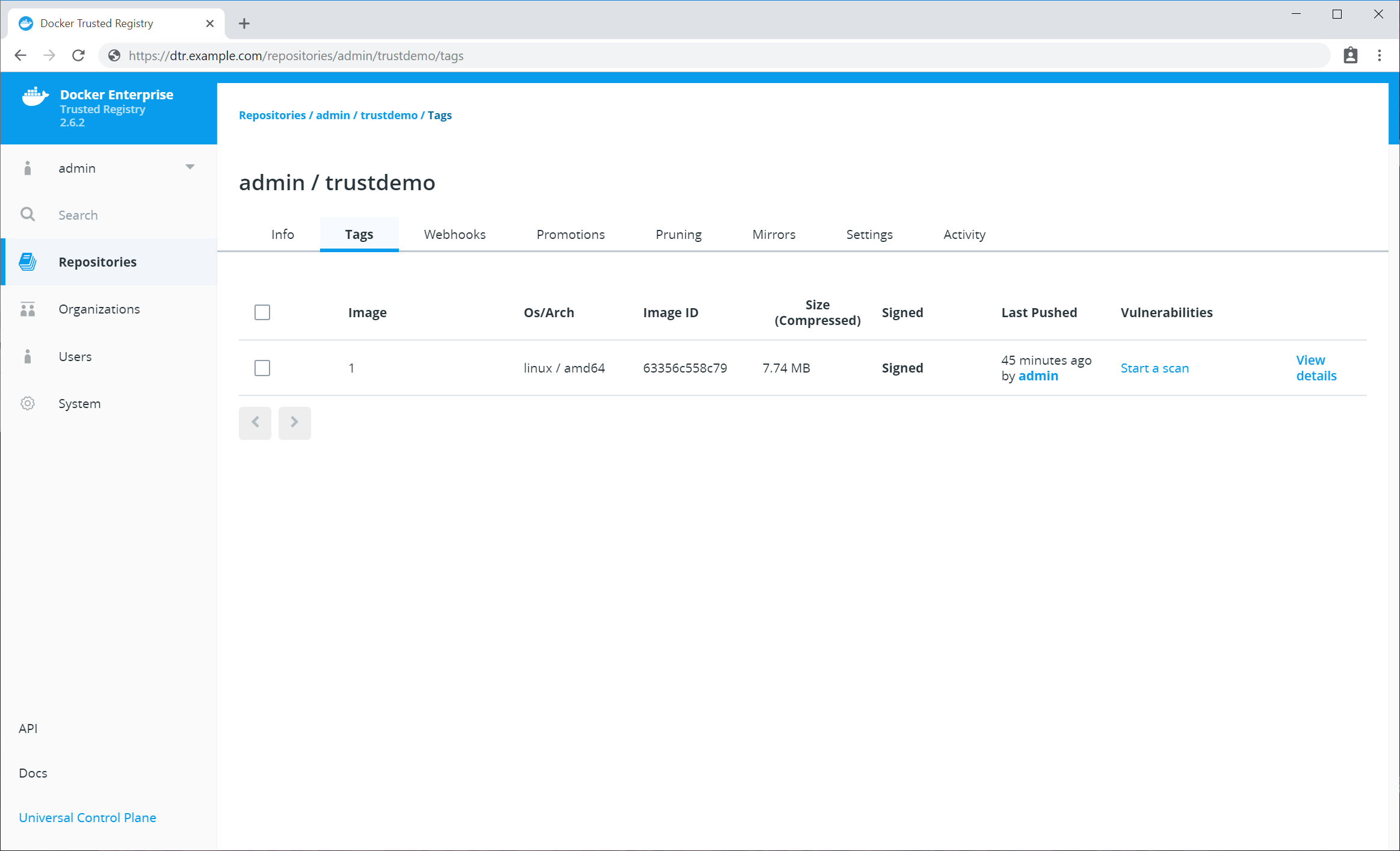

Secure supply chain¶

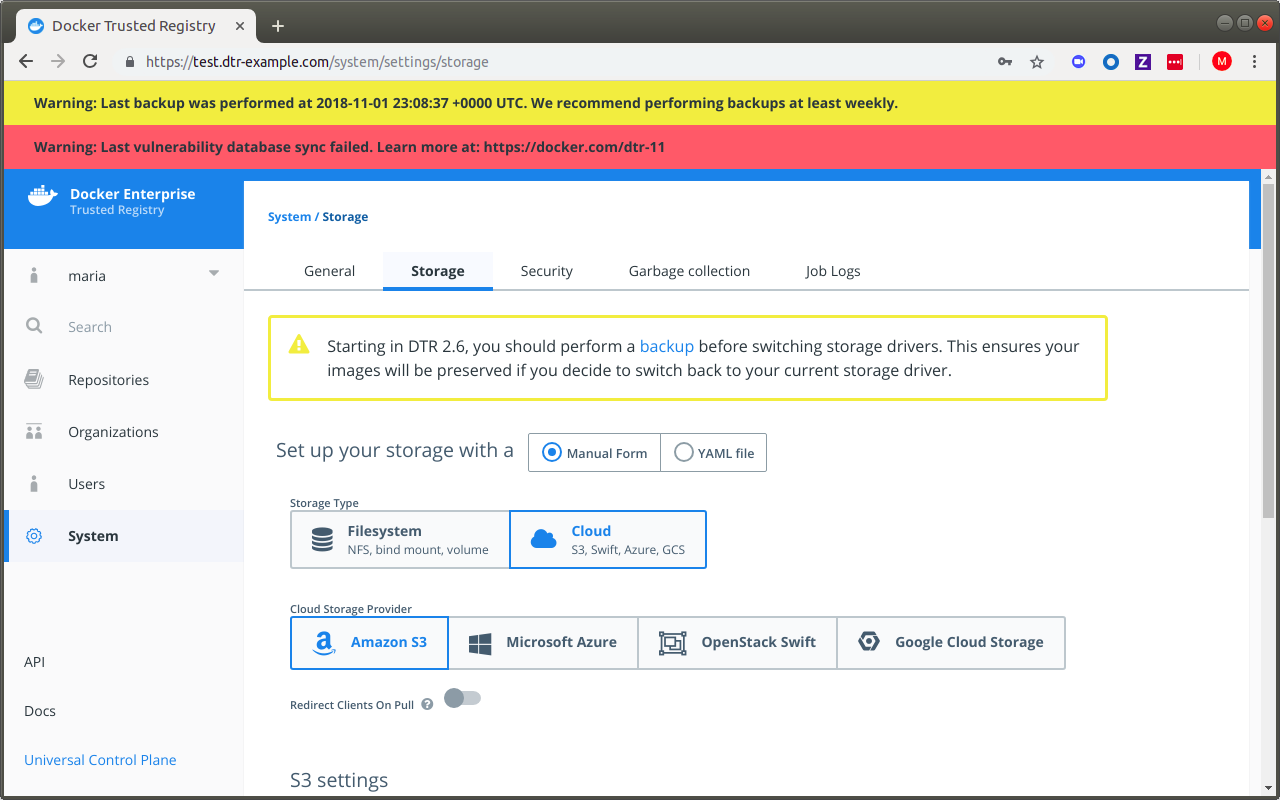

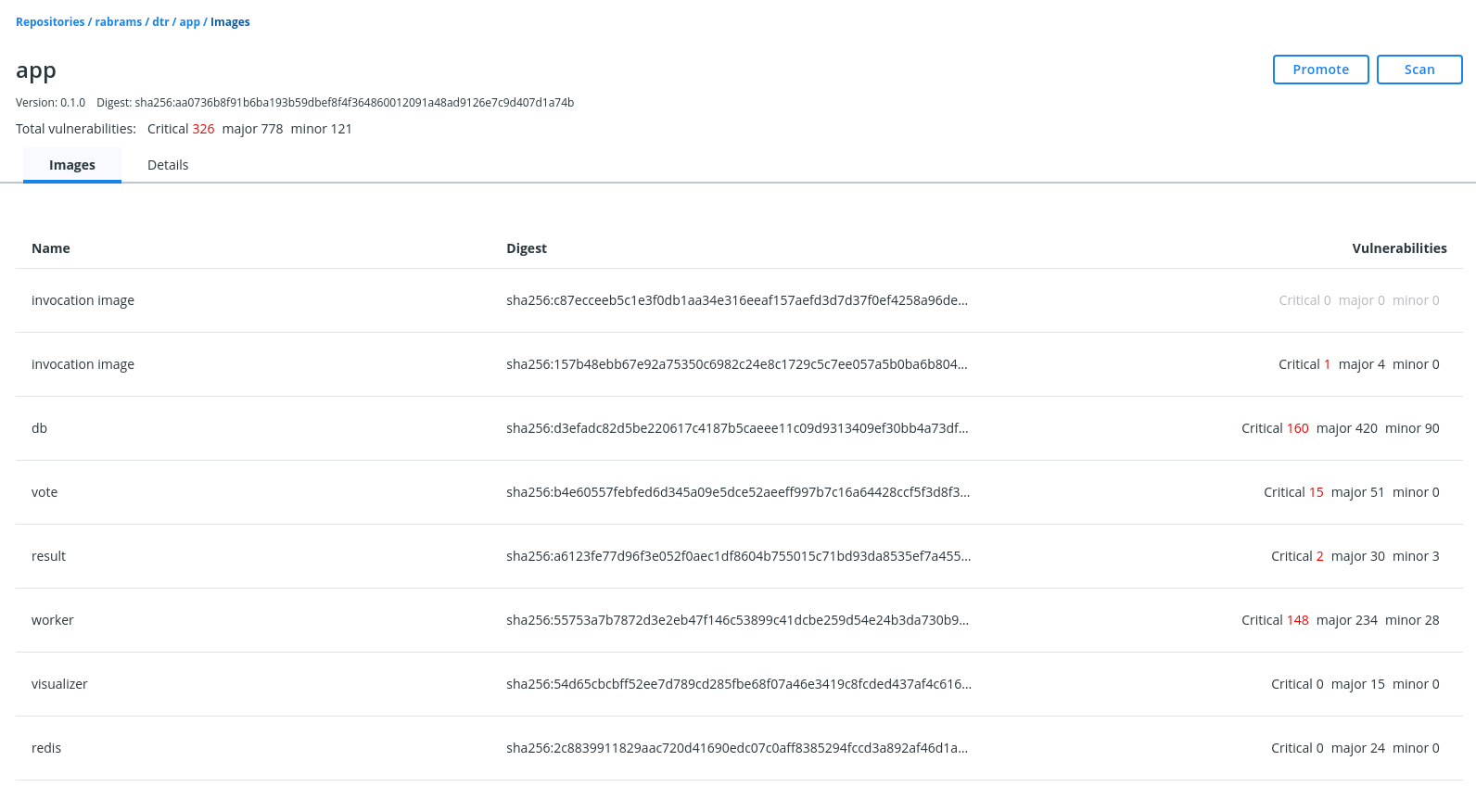

- DTR support for the Docker App format, based on the CNAB specification

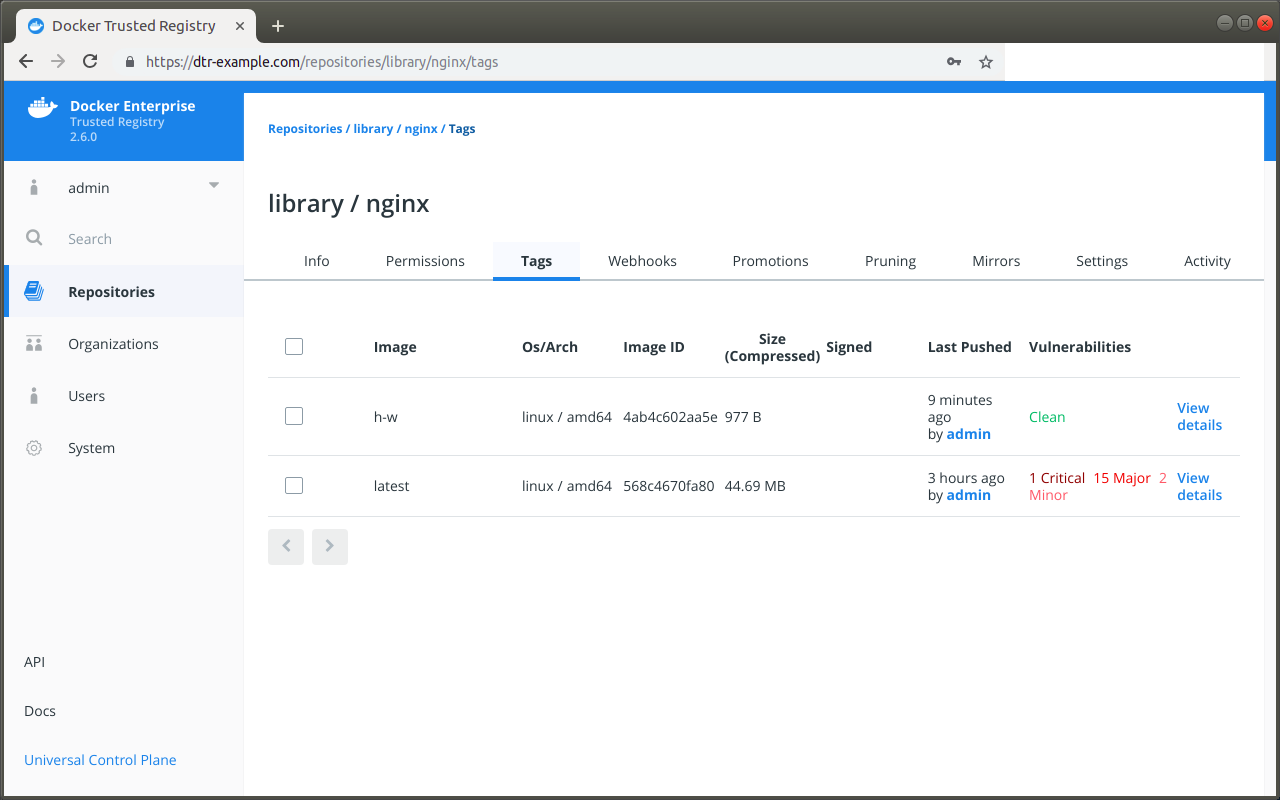

- Image signing and scanning of Kubernetes and Swarm images and Docker Apps for validating and verifying content

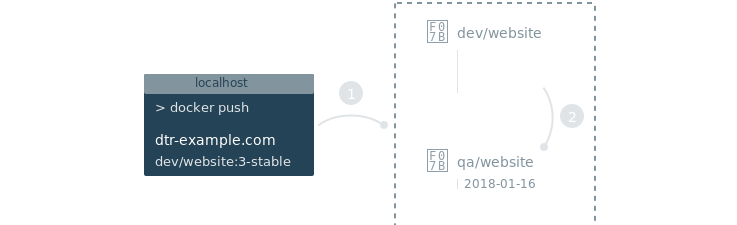

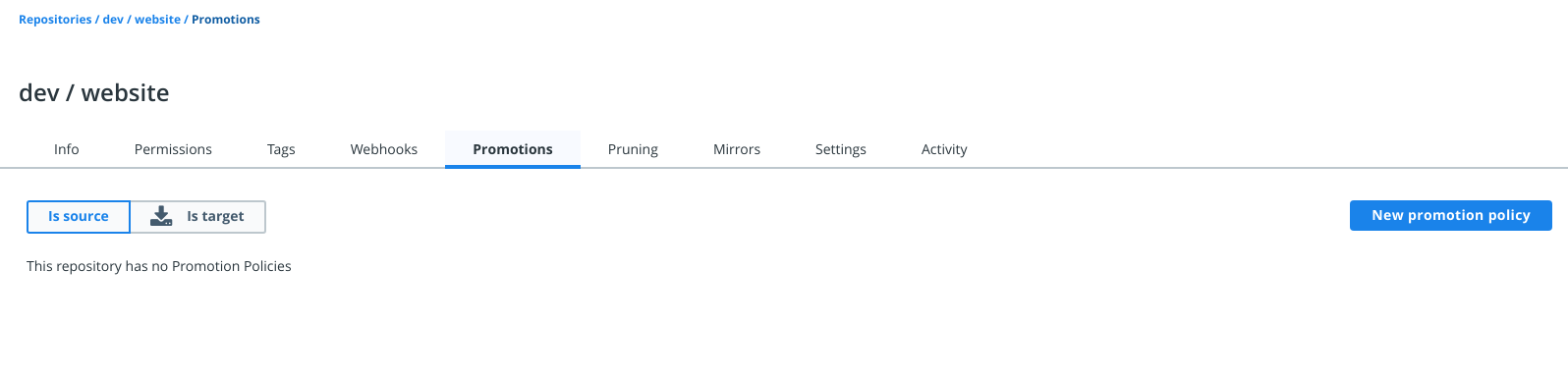

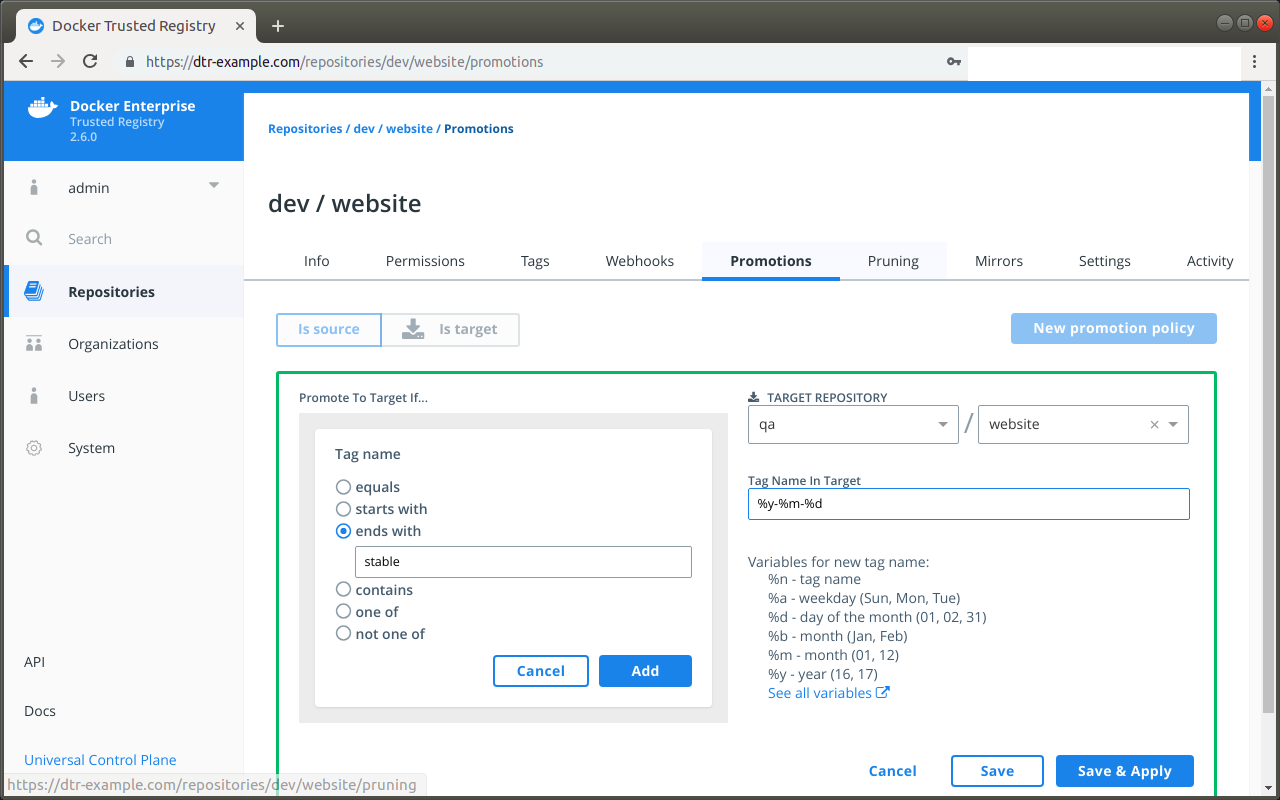

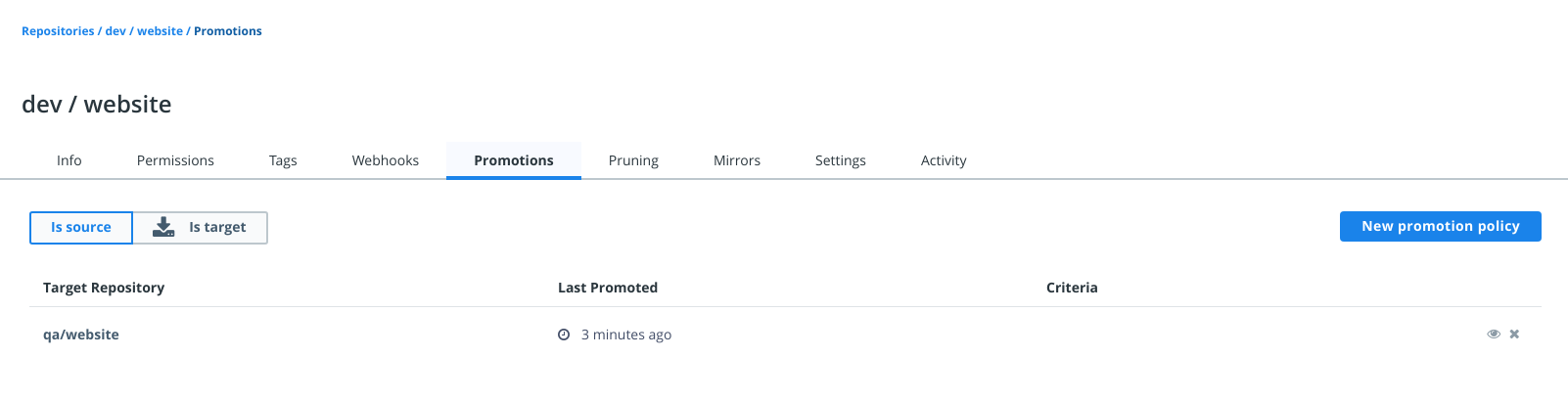

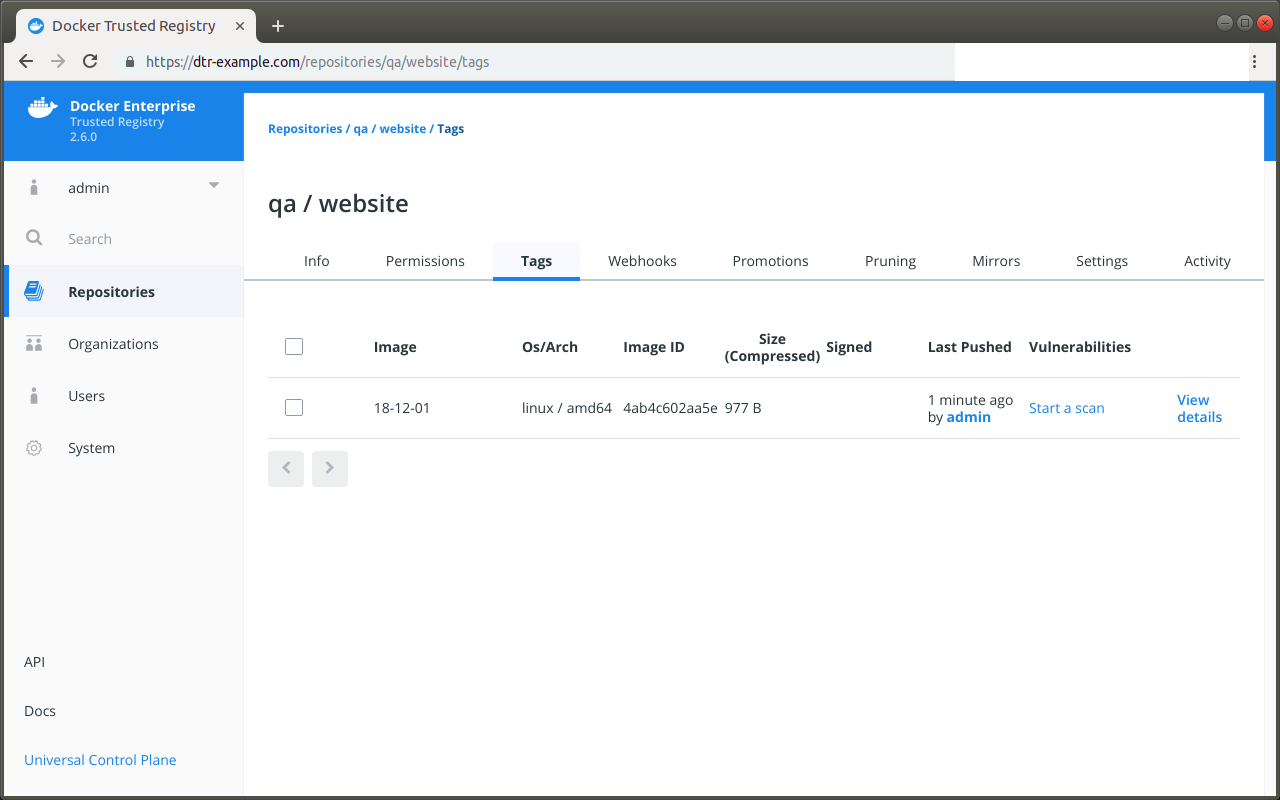

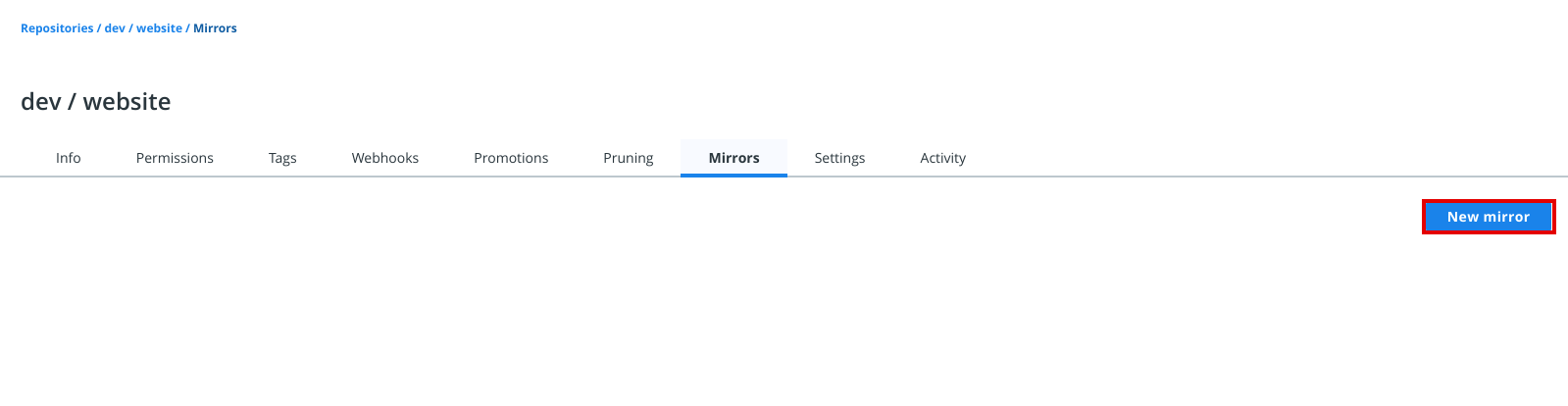

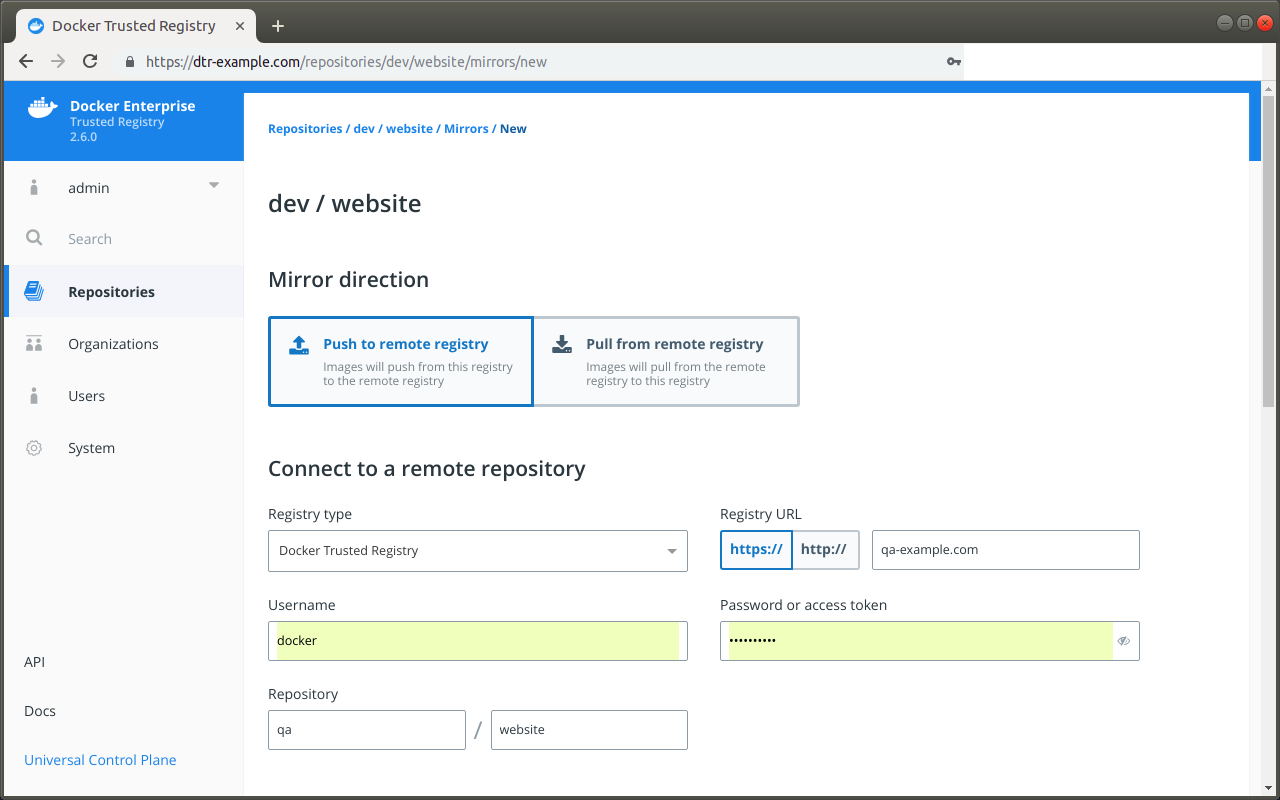

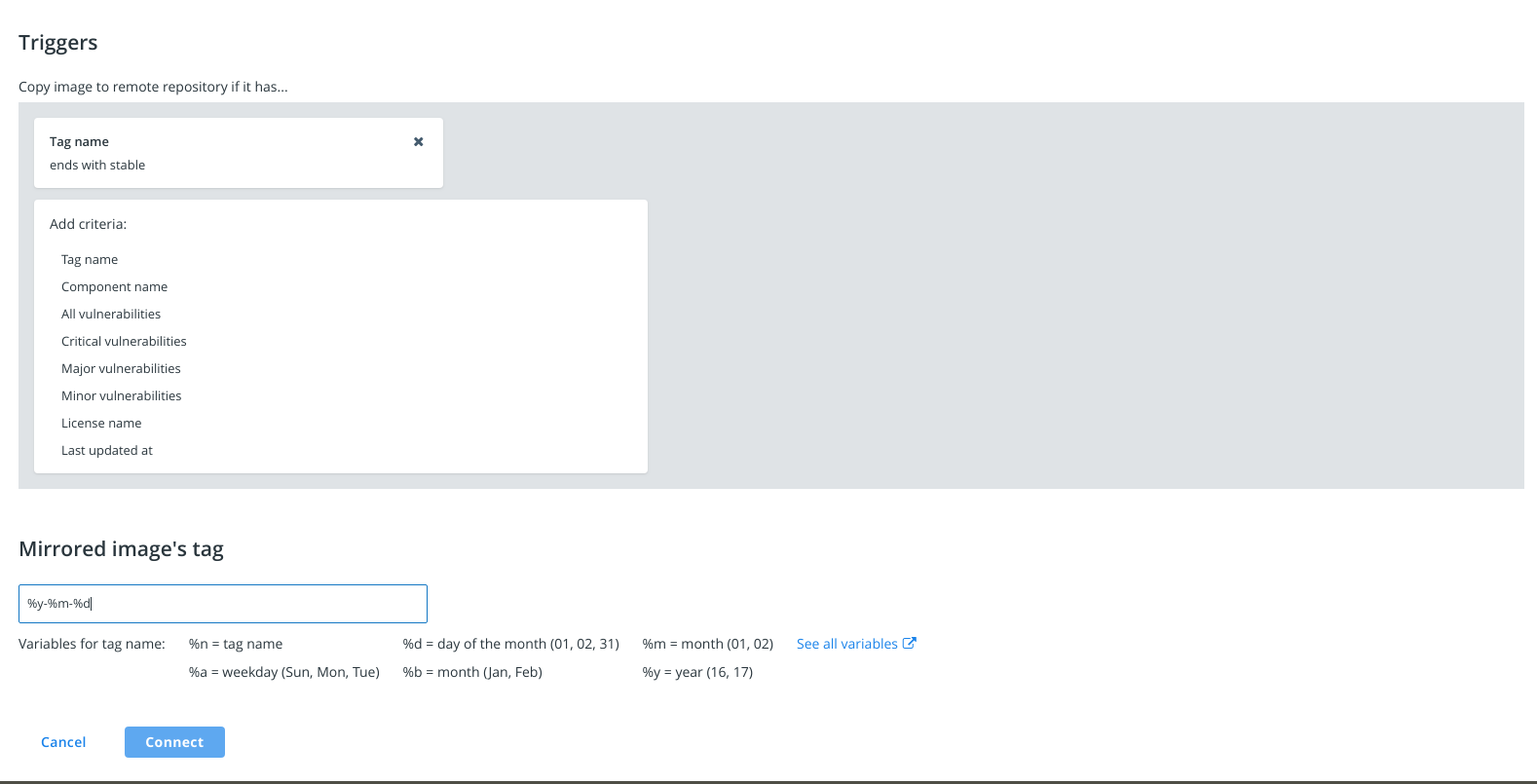

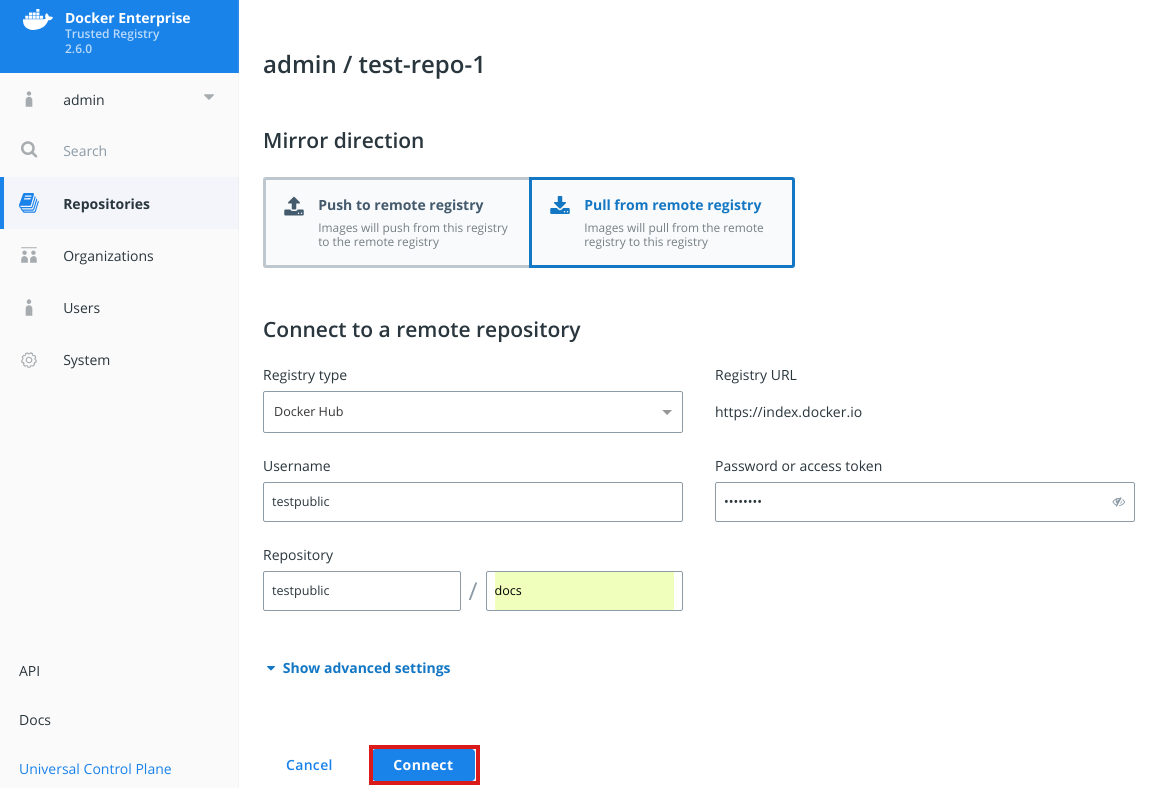

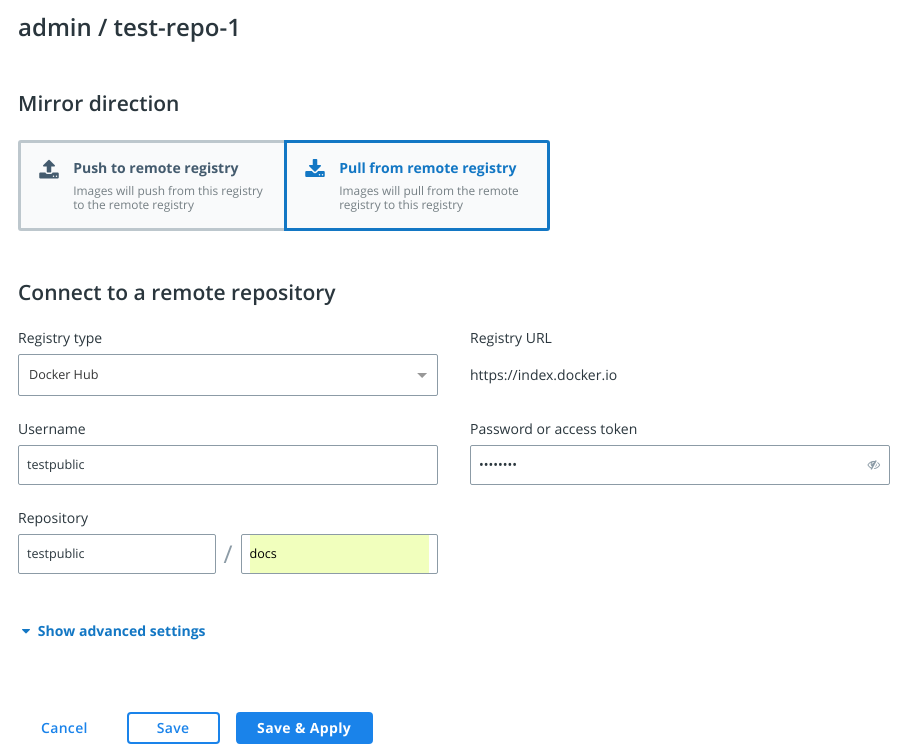

- Image promotion with mirroring between registries as well as Docker Hub

- Define policies for automating image promotions across the app development lifecycle of Kubernetes and Swarm apps

Docker Enterprise CLI¶

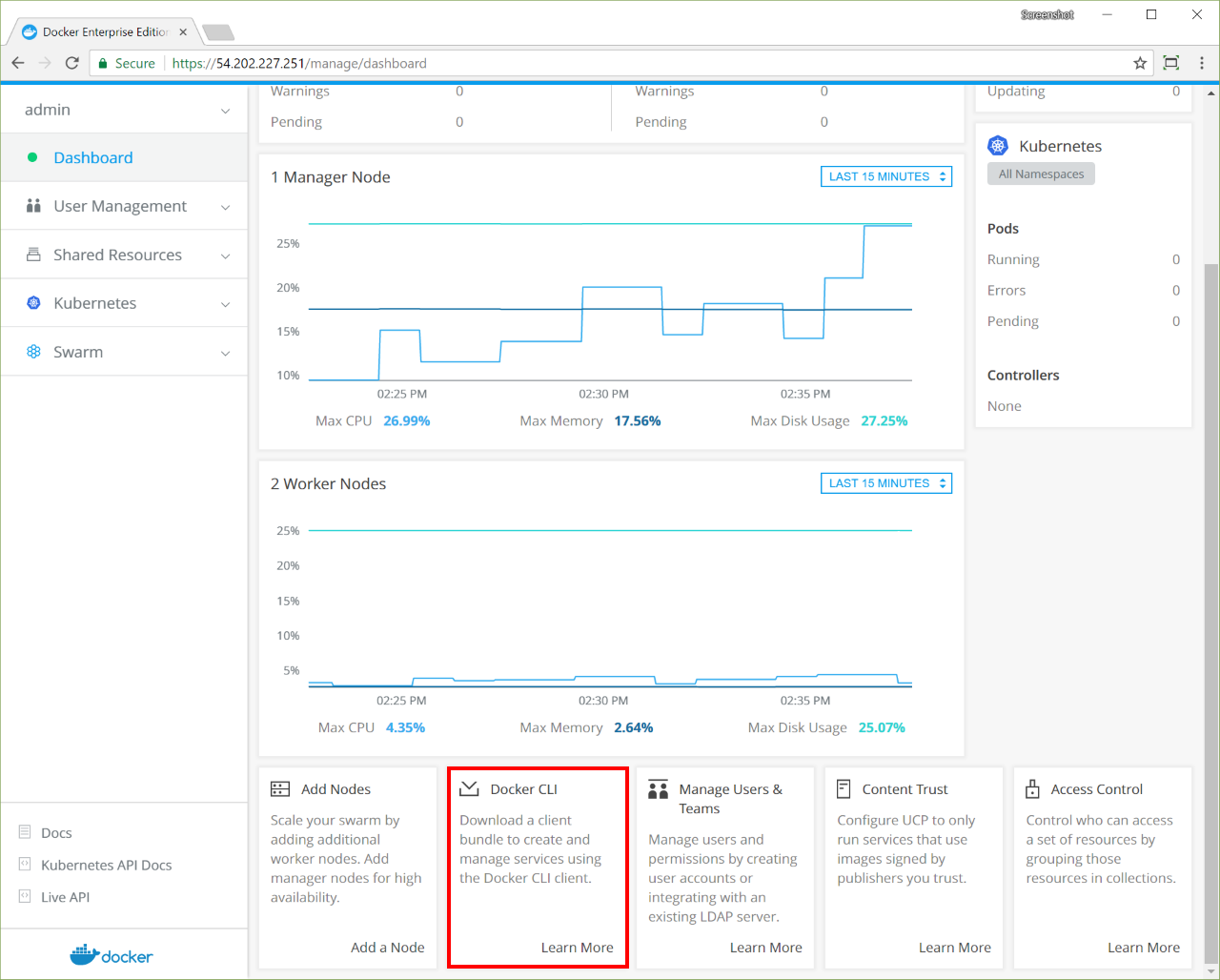

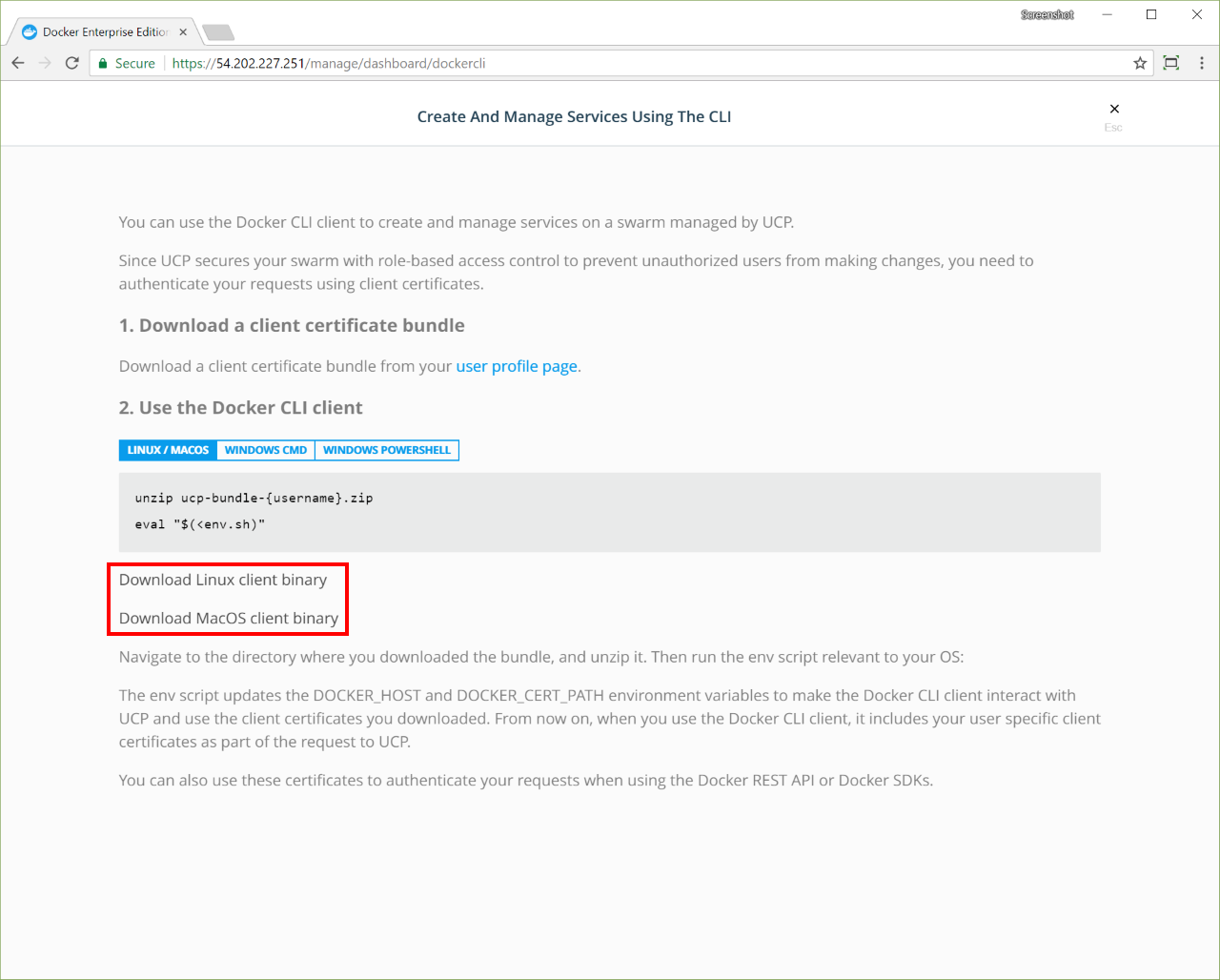

Docker Enterprise exposes the standard Docker API, so you can continue using the tools that you already know, including the Docker CLI client, to deploy and manage your applications.

For example, you can use the docker info command to check the status of a Swarm managed by Docker Enterprise:

docker info

Which produces output similar to the following:

Containers: 38

Running: 23

Paused: 0

Stopped: 15

Images: 17

Server Version: 17.06

...

Swarm: active

NodeID: ocpv7el0uz8g9q7dmw8ay4yps

Is Manager: true

ClusterID: tylpv1kxjtgoik2jnrg8pvkg6

Managers: 1

...

Manage Docker Enterprise¶

Backup Docker Enterprise¶

This document provides instructions and best practices for Docker Enterprise backup procedures for all components of the platform.

To back up Docker Enterprise, you must create individual backups for each of the following components:

If you do not create backups for all components, you cannot restore your deployment to its previous state.

Test each backup you create. One way to test your backups is to do a fresh installation on a separate infrastructure with the backup. Refer to Restore Docker Enterprise for additional information.

Note: Application data backup is not included in this information. Persistent storage data backup is the responsibility of the storage provider for the storage plugin or driver.

Restore Docker Enterprise¶

You should only restore Docker Enterprise Edition from a backup as a last resort. If you’re running Docker Enterprise in high-availability mode, you can remove unhealthy nodes from the swarm and join new ones to bring the swarm to an healthy state.

To restore Docker Enterprise, restore components individually and in the following order:

Enable authentication using TLS client certificates¶

In many organizations, authenticating to systems with a username and password combination is either restricted or outright prohibited. With Docker Enterprise 3.0, UCP’s CLI client certificate-based authentication has been extended to the web user interface (web UI). DTR has also been enhanced to work with UCP’s internally generated client bundles for client certificate-based authentication. If you have an external public key infrastructure (PKI) system, you can manage user authentication using a pool of X.509 client certificates in lieu of usernames and passwords.

Benefits¶

The following table outlines existing and added capabilities when using client certificates — both internal to UCP and issued by an external certificate authority (CA) — for authentication.

| O pera tion | Benefit |

|---|---|

| `UCP bro wser auth enti cati on < #ucp --dt r-br owse r-au then tica tion >`__ | Previously, UCP client bundles enabled communication between a local Docker client and UCP without the need of a username and password. Importing your client certificates into the browser extends this capability to the UCP web UI. |

| `DTR bro wser auth enti cati on < #ucp --dt r-br owse r-au then tica tion >`__ | You can bypass the login page for the DTR web UI when you use TLS client certificates as a DTR authentication method. |

`I mage p ulls and pu shes to DTR

mage -pul ls-a nd-p ushe s-to -dtr >`__ |

You can update Docker engine with a client certificate for

image pulls and pushes to DTR without the need for

docker login. |

`I mage sig ning

mage -sig ning >`__ |

You can use client certificates to sign images that you push to DTR. Depending on which you configure to talk to DTR, the certificate files need to be located in certain directories. Alternatively, you can enable system-wide trust of your custom root certificates. |

| `DTR API acc ess <#dt r-ap i-ac cess >`__ | You can use TLS client certificates in lieu of your user credentials to access the DTR API. |

| `No tary CLI op erat ions with DTR <#no tary -cli -ope rati ons- with -dtr >`__ | You can set your DTR as the remote trust server location and pass the certificate flags directly to the Notary CLI to access your DTR repositories. |

Limitations¶

- The security of client certificates issued by your organization’s PKI is outside of UCP’s control. UCP administrators are responsible for instructing their users on how to authenticate via client certificates.

- Username and password authentication cannot be disabled.

- If client certificates have been configured, they will be used for

all

docker pushanddocker pulloperations for all users of the same machine. - Docker Enterprise 3.0 does not check certificate revocation lists (CRLs) or Online Certificate Status Protocol (OCSP) for revoked certificates.

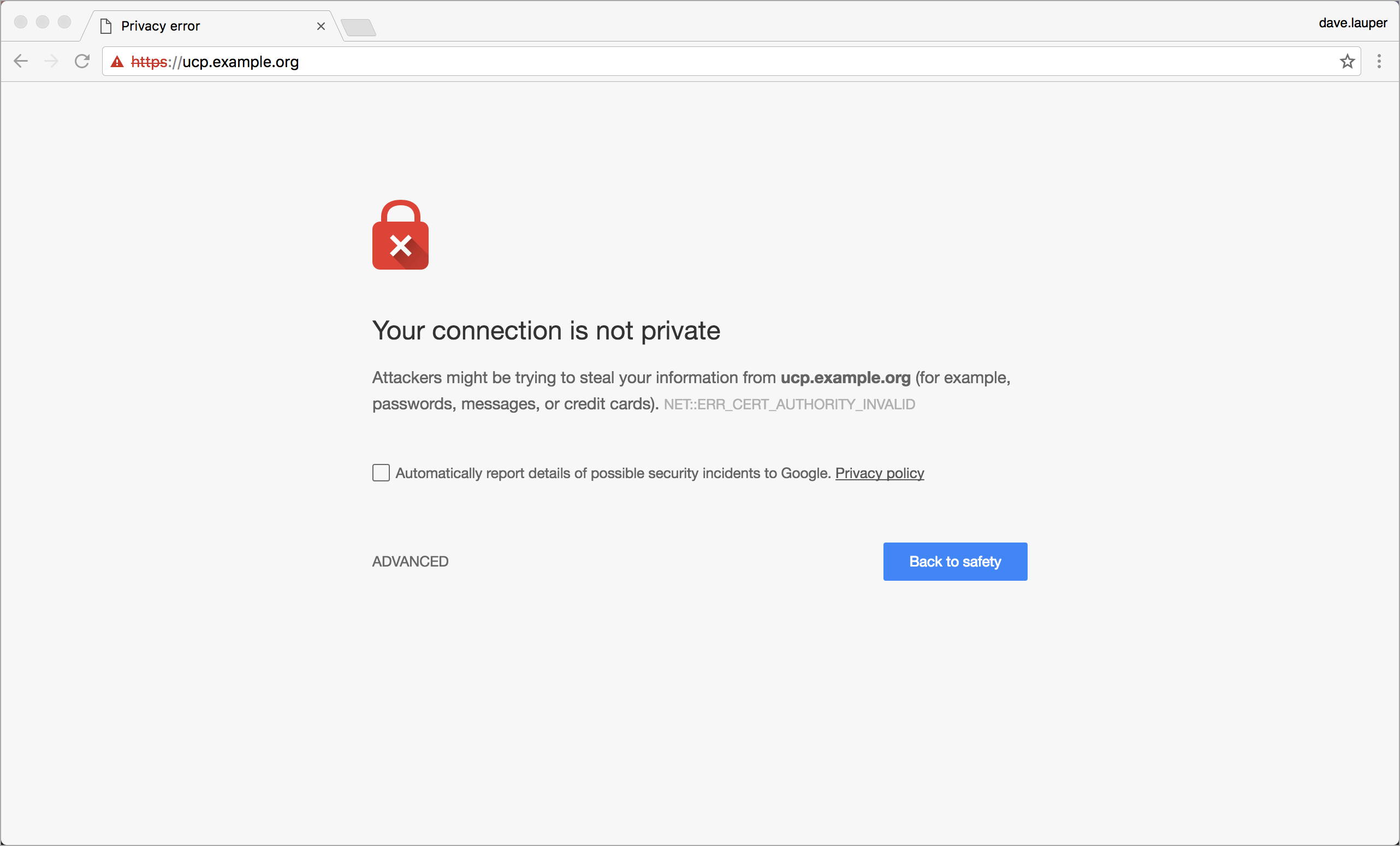

UCP / DTR browser authentication¶

The following instructions apply to UCP and DTR administrators. For non-admin users, contact your administrator for details on your PKI’s client certificate configuration.

To bypass the browser login pages and hide the logout buttons for both UCP and DTR, follow the steps below.

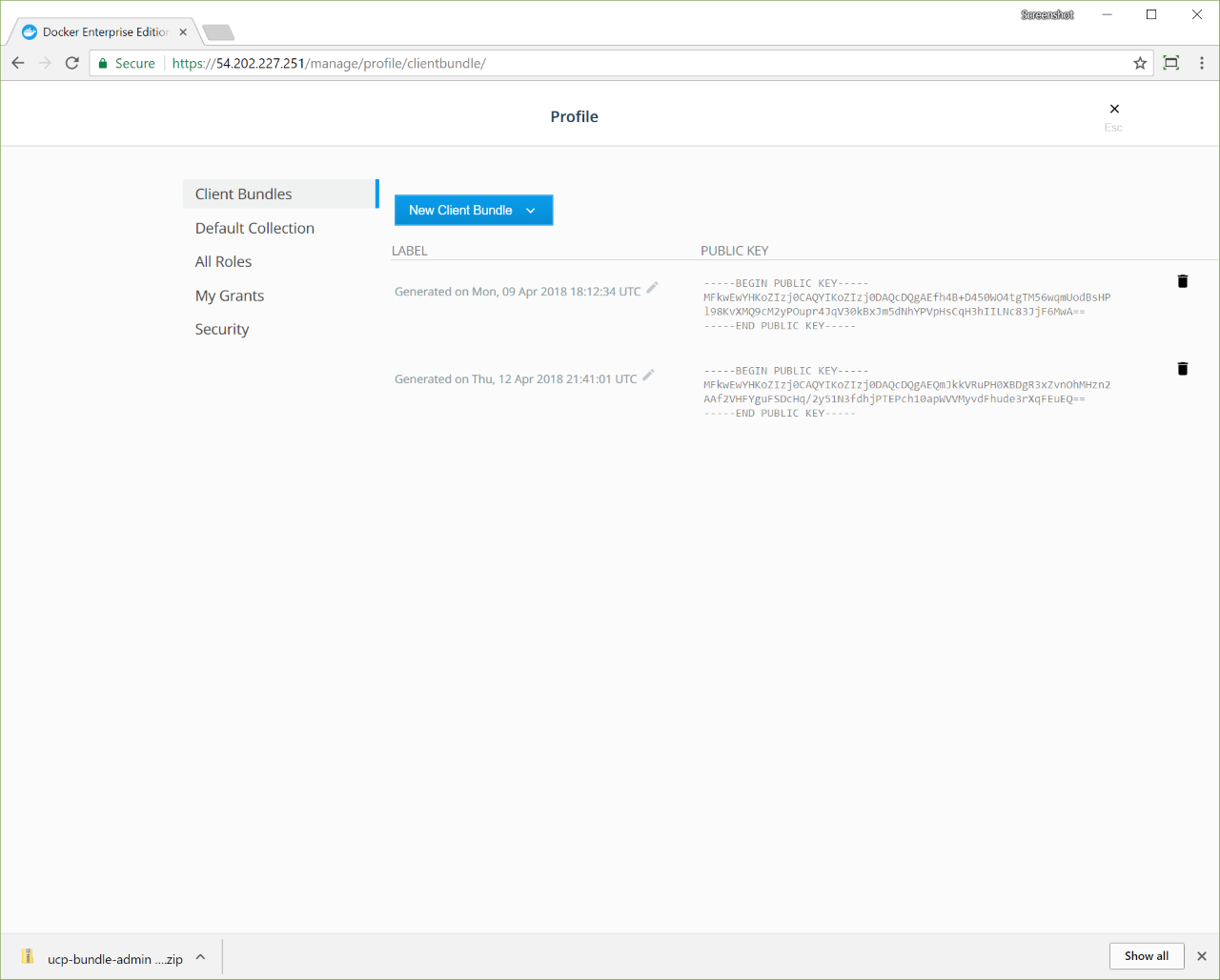

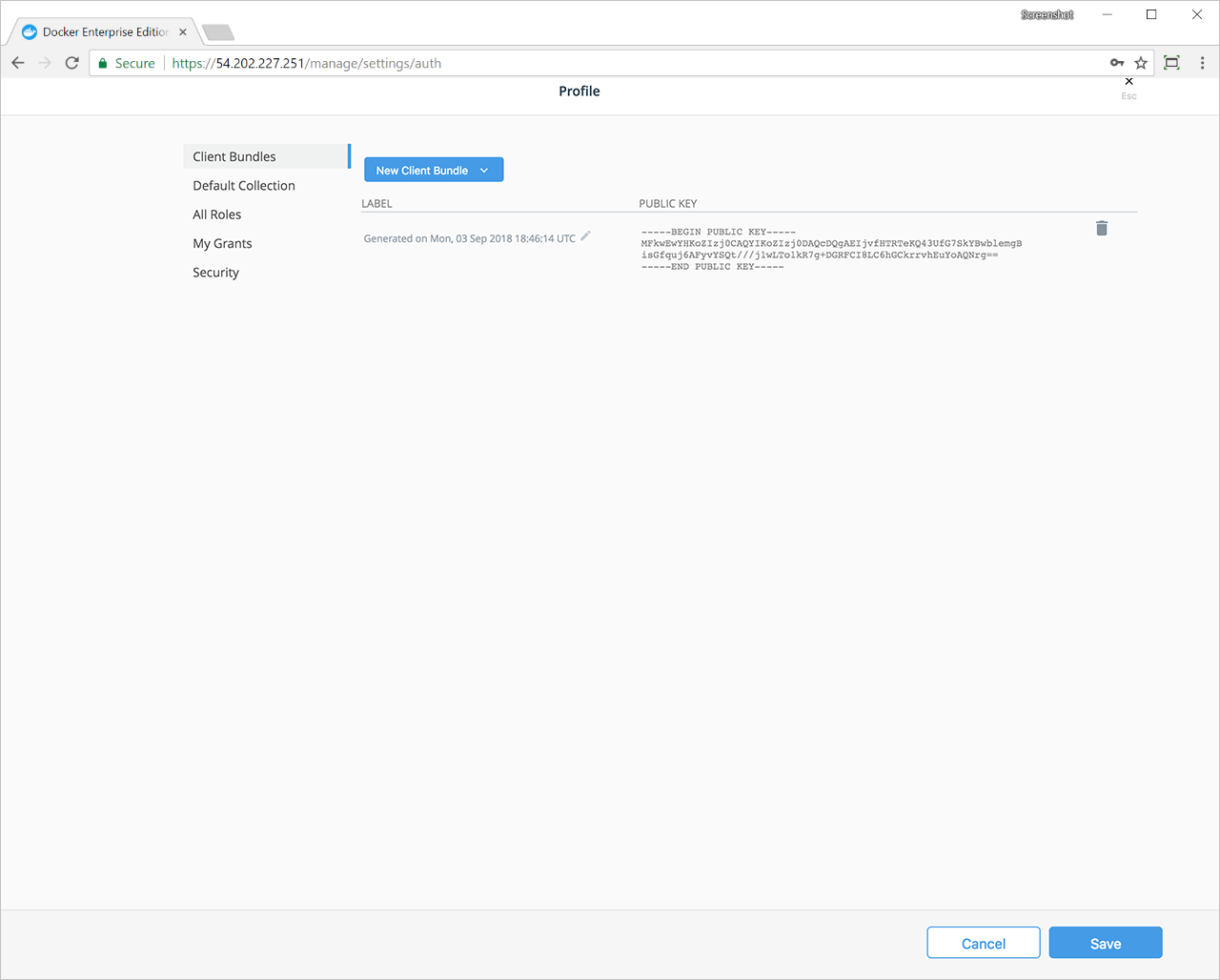

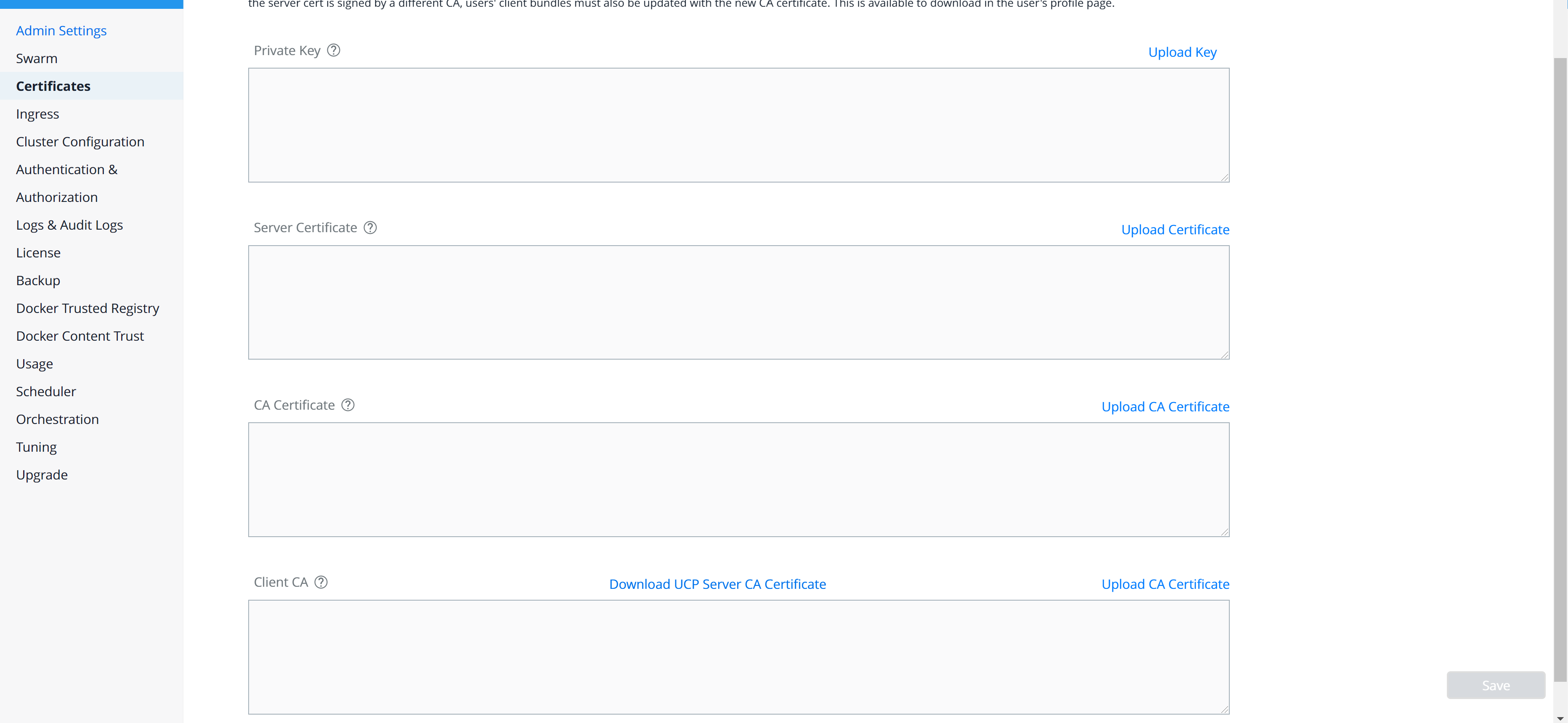

Add your organization’s root CA certificates via the UCP web UI or the CLI installation command. For testing purposes, you can download an admin client bundle from UCP and convert the client certificates to ``pkcs12` <#convert-your-client-certificates-to-a-PKCS12-file>`__

Download UCP’s

ca.pemfromhttps://<ucp-url>/caeither in the browser or viacurl. When usingcurl, redirect the response output to a file.curl -sk https://<ucp-url>/ca -o ca.pemEnable client certificate authentication for DTR. If previously installed, reconfigure DTR with your UCP hostname’s root CA certificate. This will be your organization’s root certificate(s) appended to UCP’s internal root CA certificates.

docker run --rm -it docker/dtr:2.7.0 reconfigure --debug --ucp-url \ <ucp-url> --ucp-username <ucp_admin_user> --ucp-password \ <ucp_admin_password> --enable-client-cert-auth --client-cert-auth-ca "$(cat ca.pem)"

See DTR installation and DTR reconfiguration CLI reference pages for an explanation of the different options.

Import the PKCS12 file into the browser or Keychain Access if you’re running macOS.

Client certificate to PKCS12 file conversion¶

From the command line, switch to the directory of your client bundle and

run the following command to convert the client bundle public and

private key pair to a .p12 file.

openssl pkcs12 -export -out cert.p12 -inkey key.pem -in cert.pem

Create with a simple password, you will be prompted for it when you import the certificate into the browser or Mac’s Keychain Access.

PKCS12 file browser import¶

Instructions on how to import a certificate into a web browser vary according to your platform, OS, preferred browser and browser version. As a general rule, refer to one of the following how-to articles: - Firefox: https://www.sslsupportdesk.com/how-to-import-a-certificate-into-firefox/ - Chrome: https://www.comodo.com/support/products/authentication_certs/setup/win_chrome.php - Internet Explorer: https://www.comodo.com/support/products/authentication_certs/setup/ie7.php

Image pulls and pushes to DTR¶

For pulling and pushing images to your DTR (with client certificate

authentication method enabled) without performing a docker login, do

the following:

Create a directory for your DTR public address or FQDN (Fully Qualified Domain Name) within your operating system’s TLS certificate directory.

As a superuser, copy the private key (

client.pem) and certificate (client.cert) to the machine you are using for pulling and pushing to DTR without doing adocker login. Note that the filenames must match.Obtain the CA certificate from your DTR server,

ca.crtfromhttps://<dtrurl>/ca, and copyca.crtto your operating system’s TLS certificate directory so that your machine’s Docker Engine will trust DTR. For Linux, this is/etc/docker/certs.d/<dtrurl>/. On Docker for Mac, this is/<home_directory>/certs.d/<dtr_fqdn>/.This is a convenient alternative to, for Ubuntu as an example, adding the DTR server certificate to

/etc/ca-certsand runningupdate-ca-certificates.curl curl -k https://<dtr>/ca -o ca.crtOn Ubuntu `

bash cp ca.crt /etc/ca-certsRestart the Docker daemon for the changes to take effect. See Configure your host for different ways to restart the Docker daemon.

Add your DTR server CA certificate to system level¶

You have the option to add your DTR server CA certificate to your

system’s trusted root certificate pool. This is MacOS Keychain or

/etc/ca-certificates/ on Ubuntu. Note that you will have to remove

the certificate if your DTR public address changes.

Image signing¶

DTR provides the Notary service for using Docker Content Trust (DCT) out of the box.

Implementation | Component Pairing | Settings |

|---|---|---|

Sign with docker trust sign |

| Copy ca.crt from https://<dtr-external-url>/ca to:

|

Enforce signature or hash verification on the Docker client |

| export DOCKER_CONTENT_TRUST=1 to enable content trust on the Docker client. Copy ca.crt from https://<dtr-external-url>/ca to /<home_directory>/.docker/tls/ on Linux and macOS. docker push will sign your images. |

Sign images that UCP can trust |

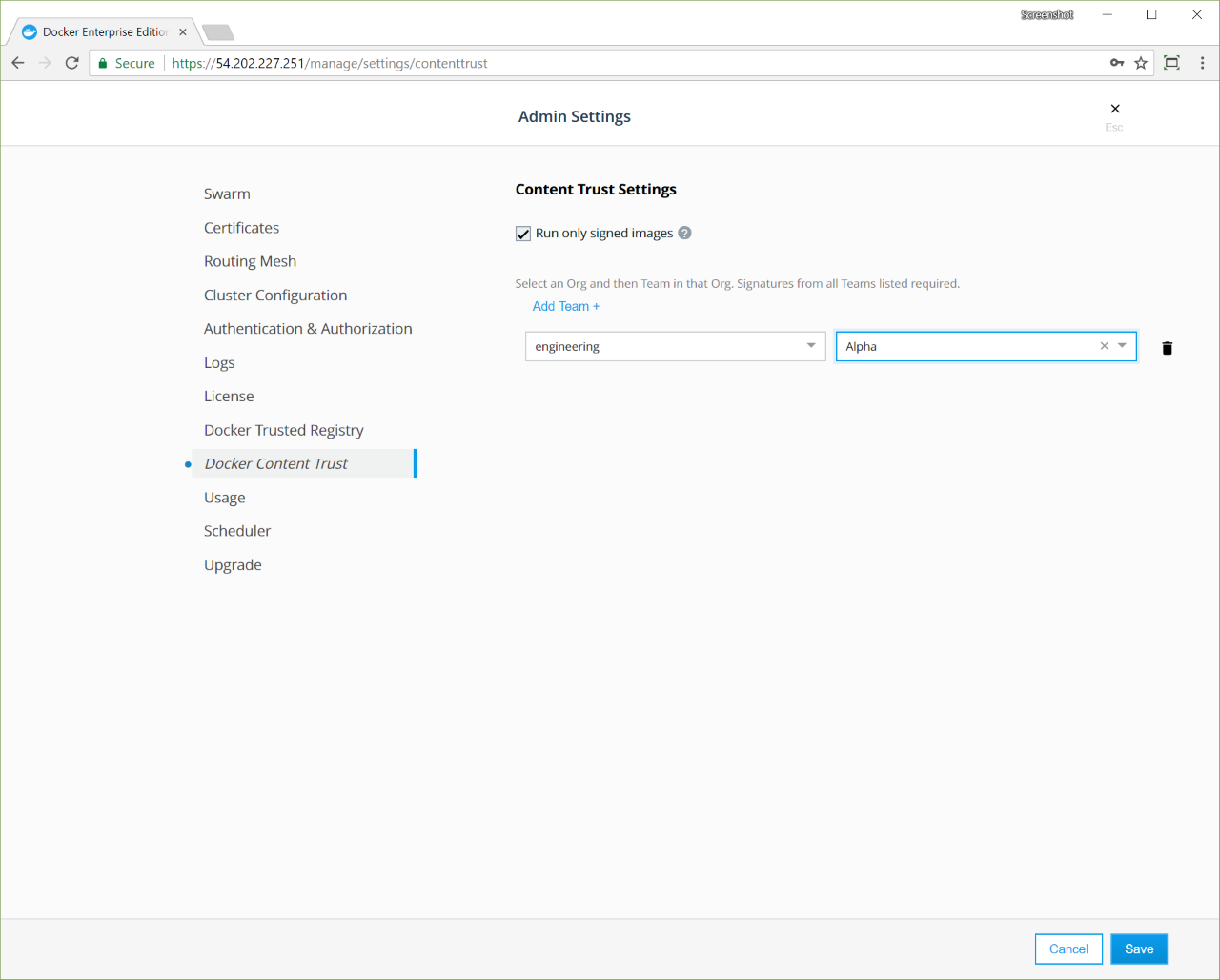

| Configure UCP to run only signed images. See Sign an image for detailed steps. |

DTR API access¶

With curl, you can interact with the DTR API by passing a public

certificate and private key pair instead of your DTR username and

password/authentication token.

curl --cert cert.pem --key key.pem -X GET \

"https://<dtr-external-url>/api/v0/repositories?pageSize=10&count=false" \

-H "accept:application/json"

In the above example, cert.pem contains the public certificate and

key.pem contains the private key. For non-admin users, you can

generate a client bundle from UCP or contact your administrator for your

public and private key pair.

For Mac-specific quirks, see curl on certain macOS versions.

Notary CLI operations with DTR¶

For establishing mutual trust between the Notary client and your trusted

registry (DTR) using the Notary CLI, place your TLS client certificates

in <home_directory>/.docker/tls/<dtr-external-url>/ as

client.cert and client.key. Note that the filenames must match.

Pass the FQDN or publicly accessible IP address of your registry along

with the TLS client certificate options to the Notary client. To get

started, see Use the Notary client for advanced

users.

Self-signed DTR server certificate

Also place

ca.crtin<home_directory>/.docker/tls/<dtr-external-url>/when you’re using a self-signed server certificate for DTR.

Troubleshooting tips¶

DTR authentication via client Certificates¶

Hit your DTR’s basic_info endpoint via curl:

curl --cert cert.pem --key key.pem -X GET "https://<dtr-external-url>/basic_info"

If successfully configured, you should see TLSClientCertificate

listed as the AuthnMethod in the JSON response.

{

"CurrentVersion": "2.7.0",

"User": {

"name": "admin",

"id": "30f53dd2-763b-430d-bafb-dfa361279b9c",

"fullName": "",

"isOrg": false,

"isAdmin": true,

"isActive": true,

"isImported": false

},

"IsAdmin": true,

"AuthnMethod": "TLSClientCertificate"

}

DTR as an insecure registry¶

Avoid adding DTR to Docker Engine’s list of insecure registries as a workaround. This has the side effect of disabling the use of TLS certificates.

DTR server certificate errors¶

Error response from daemon: Get https://35.165.223.150/v2/: x509: certificate is valid for 172.17.0.1, not 35.165.223.150

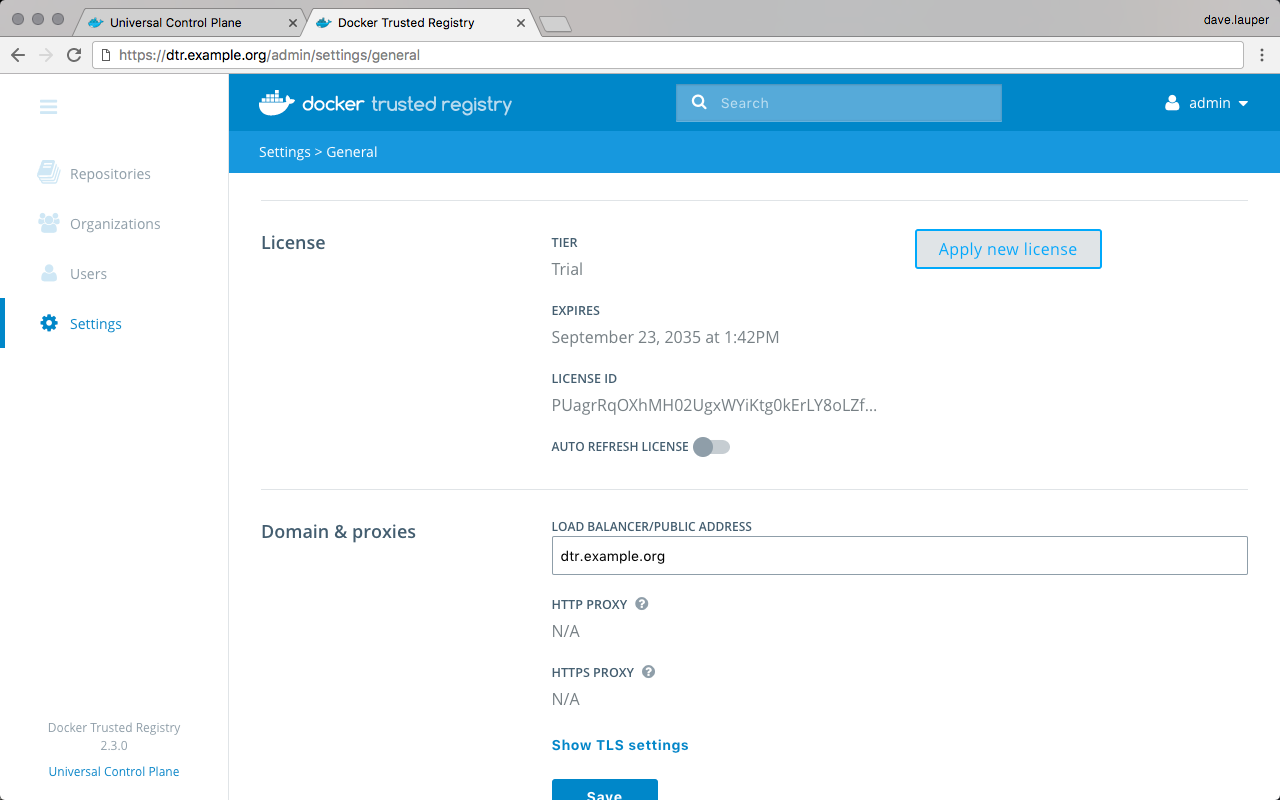

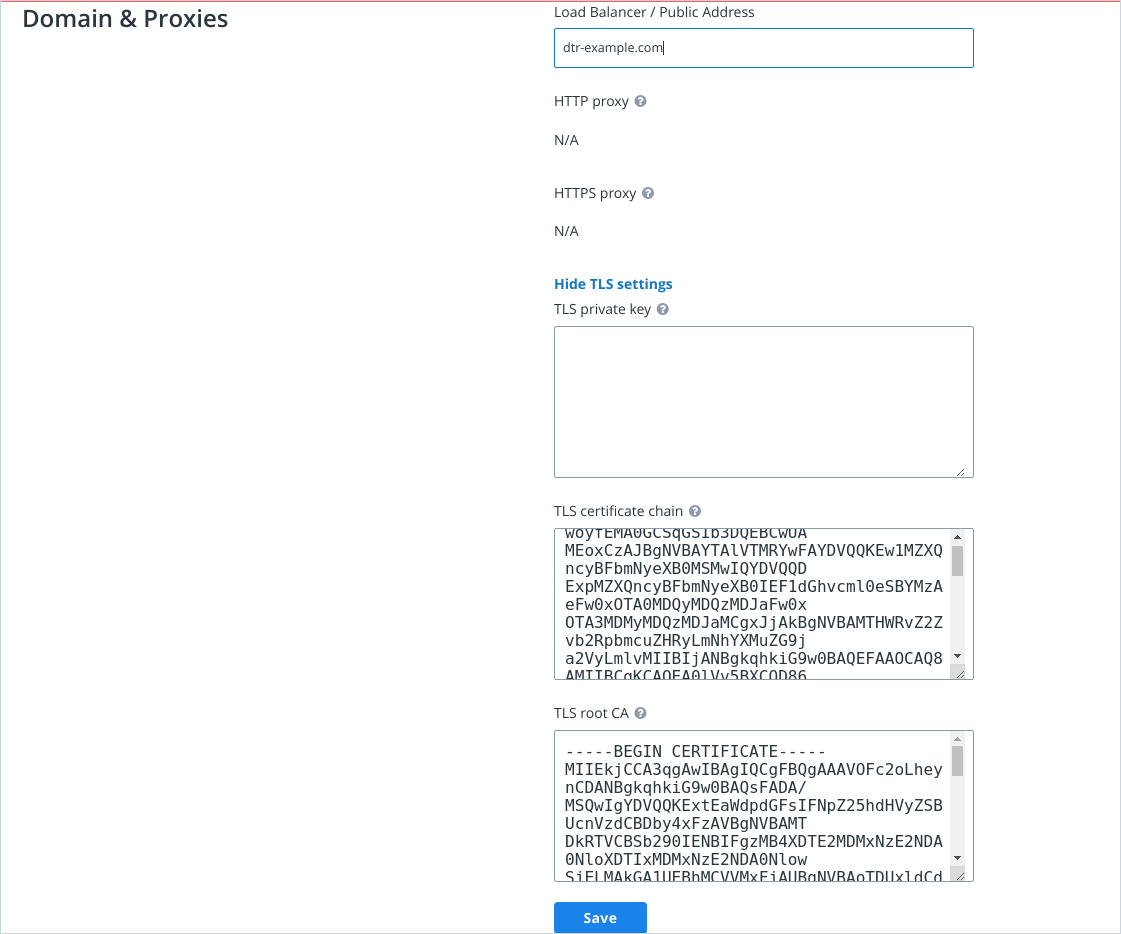

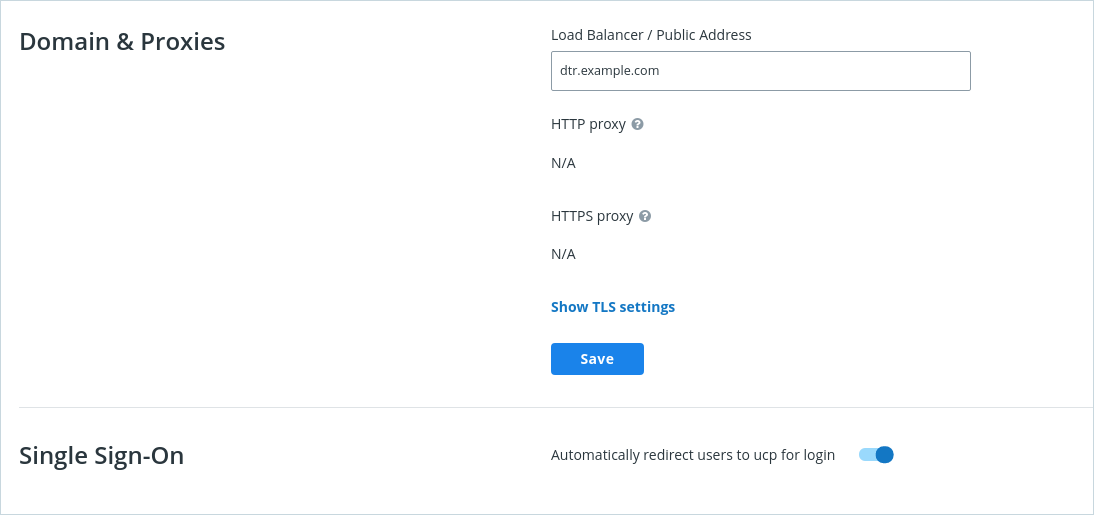

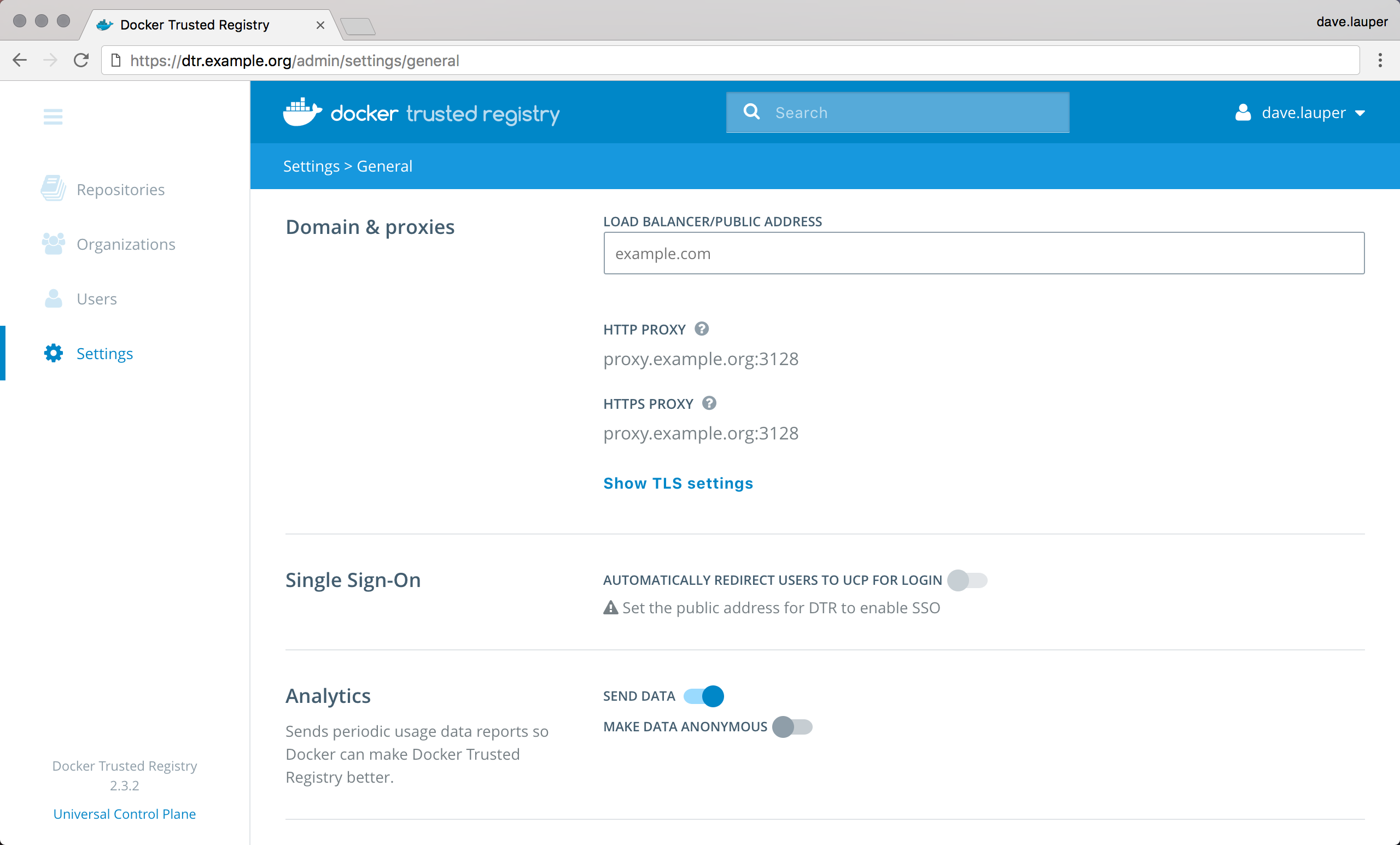

- On the web UI, make sure to add the IP address or the FQDN associated with your custom TLS certificate under System > General > Domains & Proxies.

- From the command line interface, reconfigure

DTR with the

--dtr-external-urloption and the associated PEM files for your certificate.

Intermediate certificates¶

For chain of trust which includes intermediate certificates, you may

optionally add those certificates when installing or reconfiguring DTR

with --enable-client-cert-auth and --client-cert-auth-ca. You

can do so by combining all of the certificates into a single PEM file.

curl on certain macOS versions¶

Some versions of macOS include curl which only accepts .p12

files and specifically requires a ./ prefix in front of the file

name if running curl from the same directory as the .p12 file:

curl --cert ./client.p12 -X GET \

"https://<dtr-external-url>/api/v0/repositories?pageSize=10&count=false" \

-H "accept:application/json"

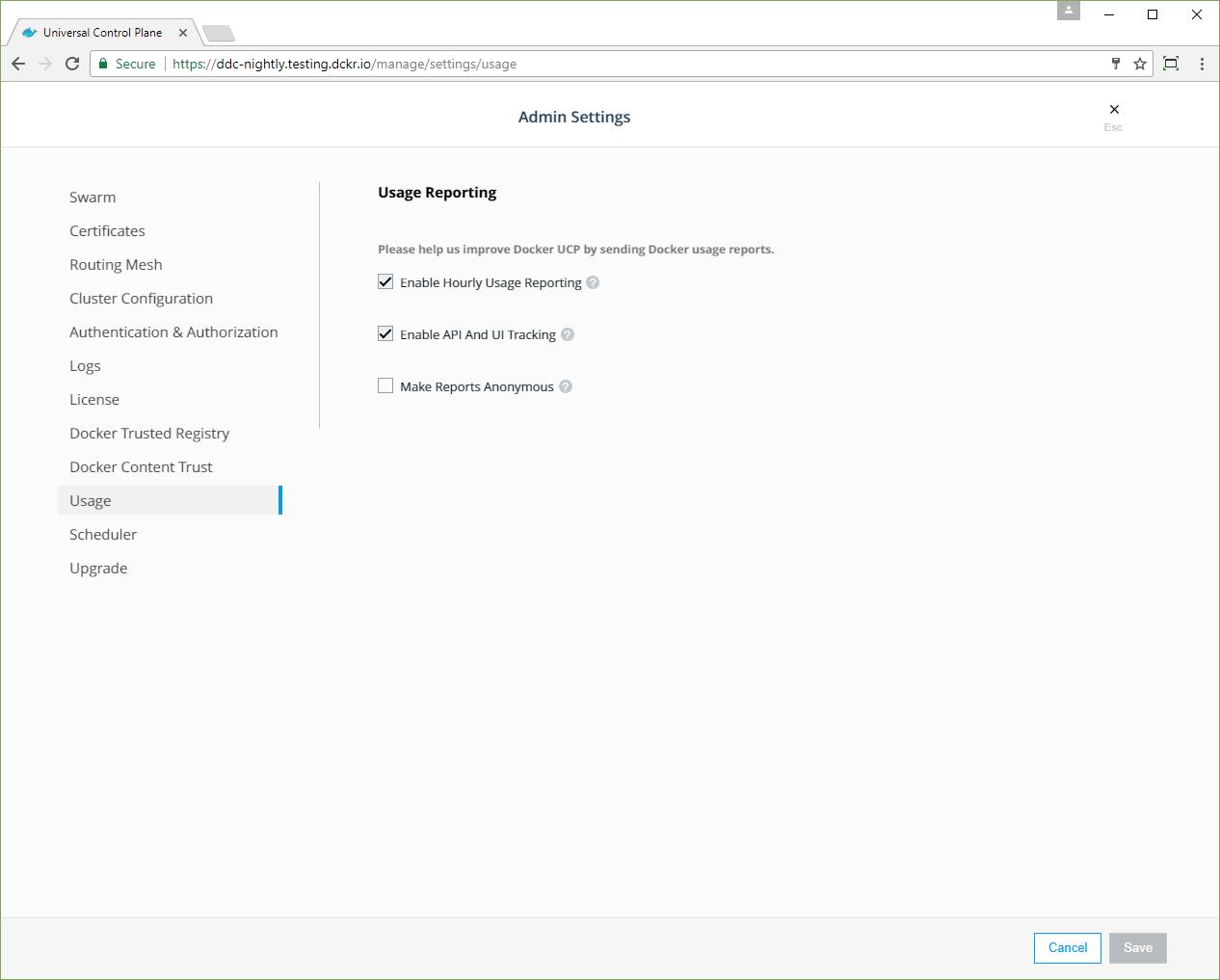

Manage usage data collection¶

Docker Engine - Enterprise version 17.06 and later includes a telemetry plugin. The plugin is enabled by default on Ubuntu starting with Docker Engine - Enterprise 17.06.0 and on the rest of the Docker Engine - Enterprise supported Linux distributions starting with version 17.06.2-ee-5. The telemetry plugin is not part of Docker Engine - Enterprise for Windows Server.

The telemetry plugin sends system information to Docker Inc. Docker uses this information to improve Docker Engine - Enterprise. For details about the telemetry plugin and the types of data it collects, see the telemetry plugin documentation.

If your Docker instance runs in an environment with no internet connectivity, the telemetry plugin does not collect or attempt to send any information to Docker Inc.

Manage data collection¶

If you don’t wish to send any usage data to Docker Inc., you can disable the plugin, either using the Docker CLI or using Universal Control Plane.

Warning

If you’re using Docker Engine - Enterprise with Universal Control Plane (UCP), use UCP to enable and disable metrics. Use the CLI only if you don’t have UCP. UCP re-enables the telemetry plugin for hosts where it was disabled with the CLI.

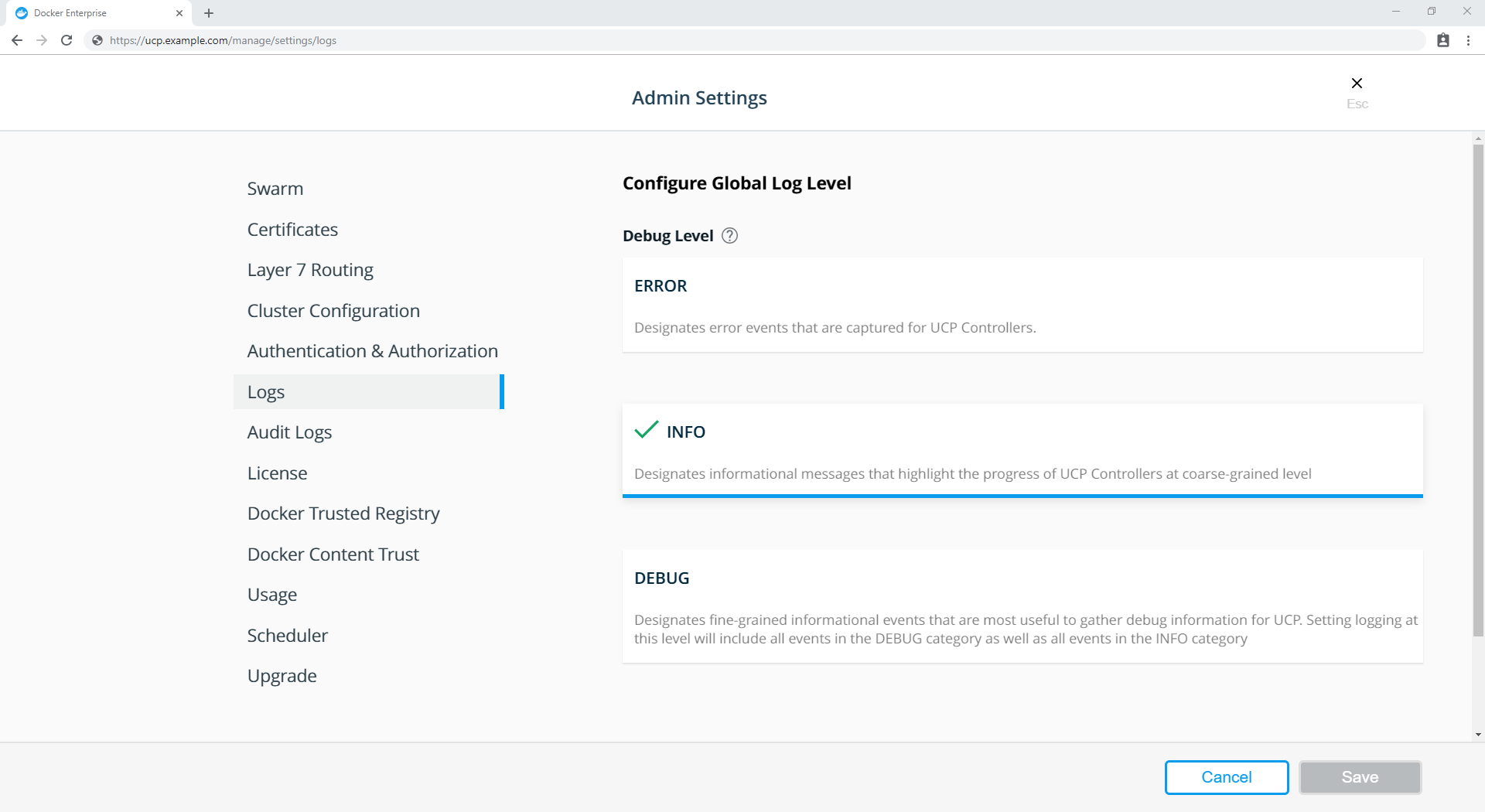

Use Universal Control Plane¶

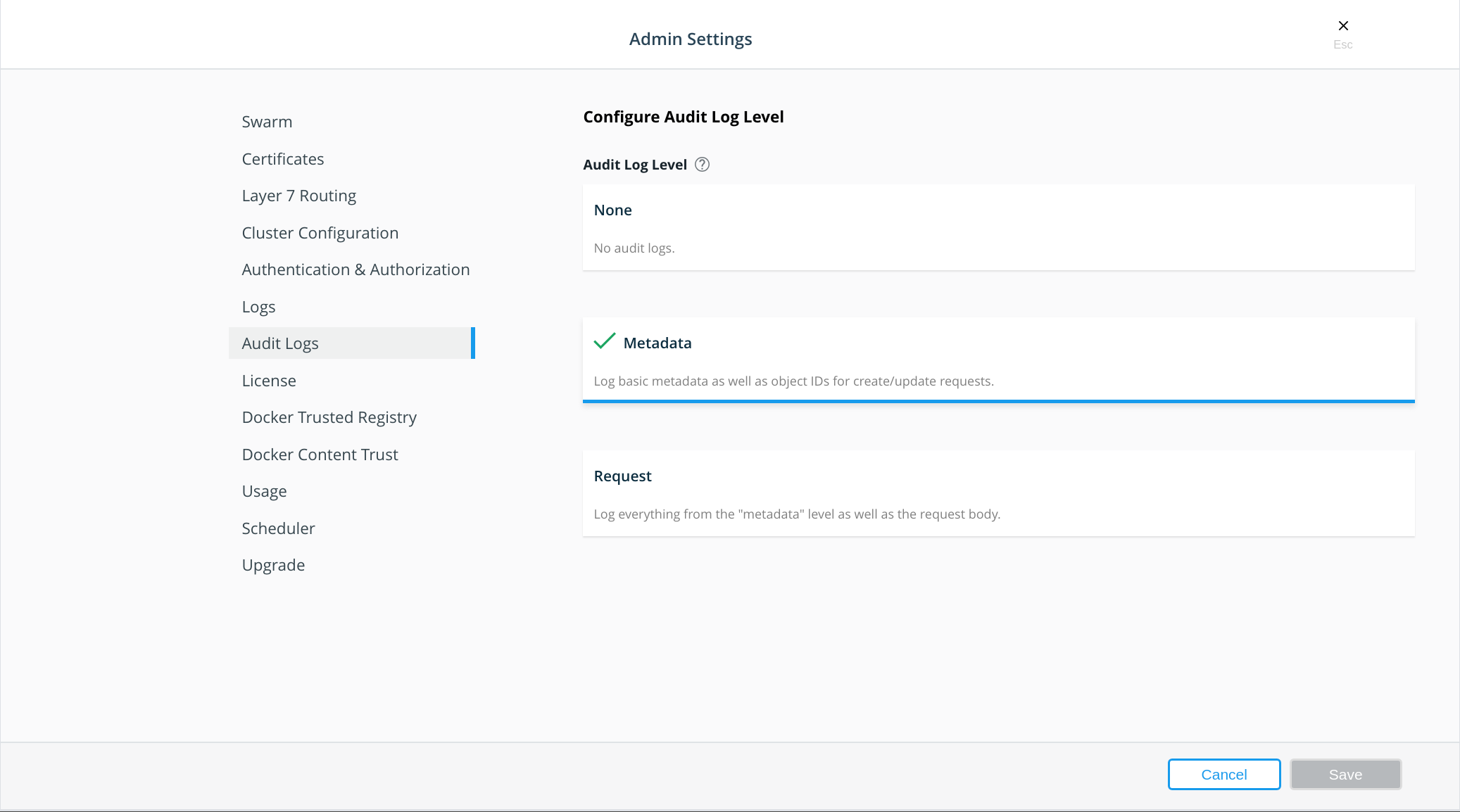

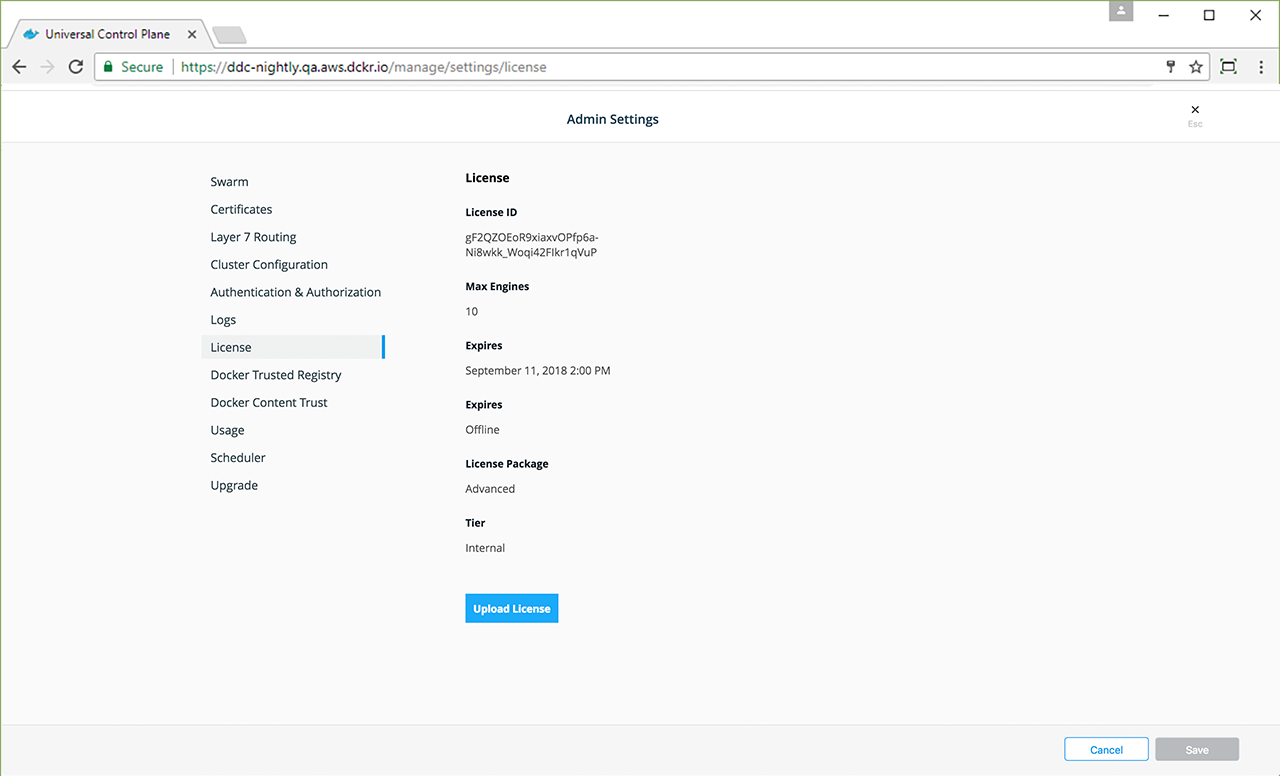

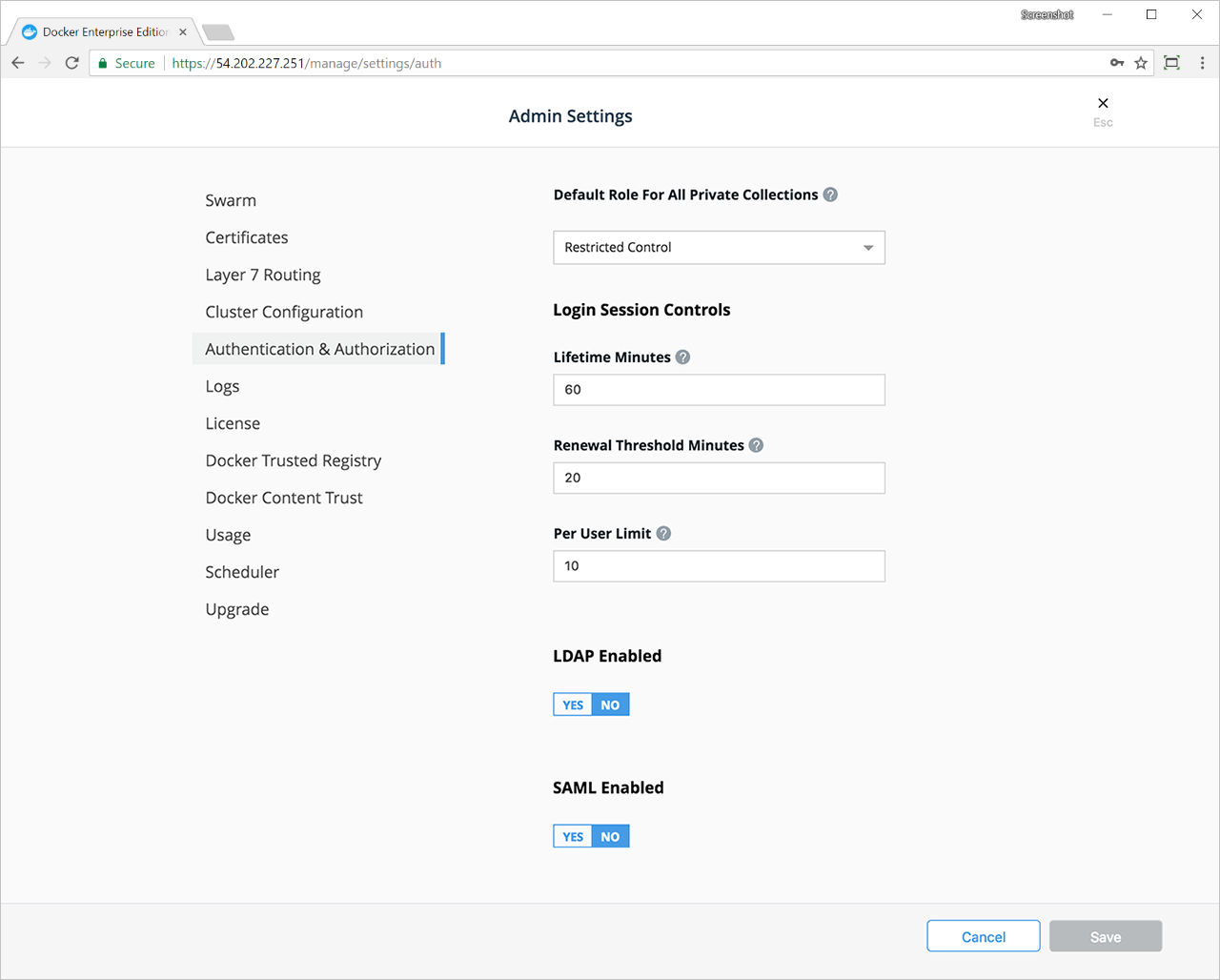

If you use Universal Control Plane with Docker Engine - Enterprise, do not use the Docker CLI to disable the telemetry plugin. Instead, you can manage the information sent to Docker by going to Admin Settings and choosing Usage.

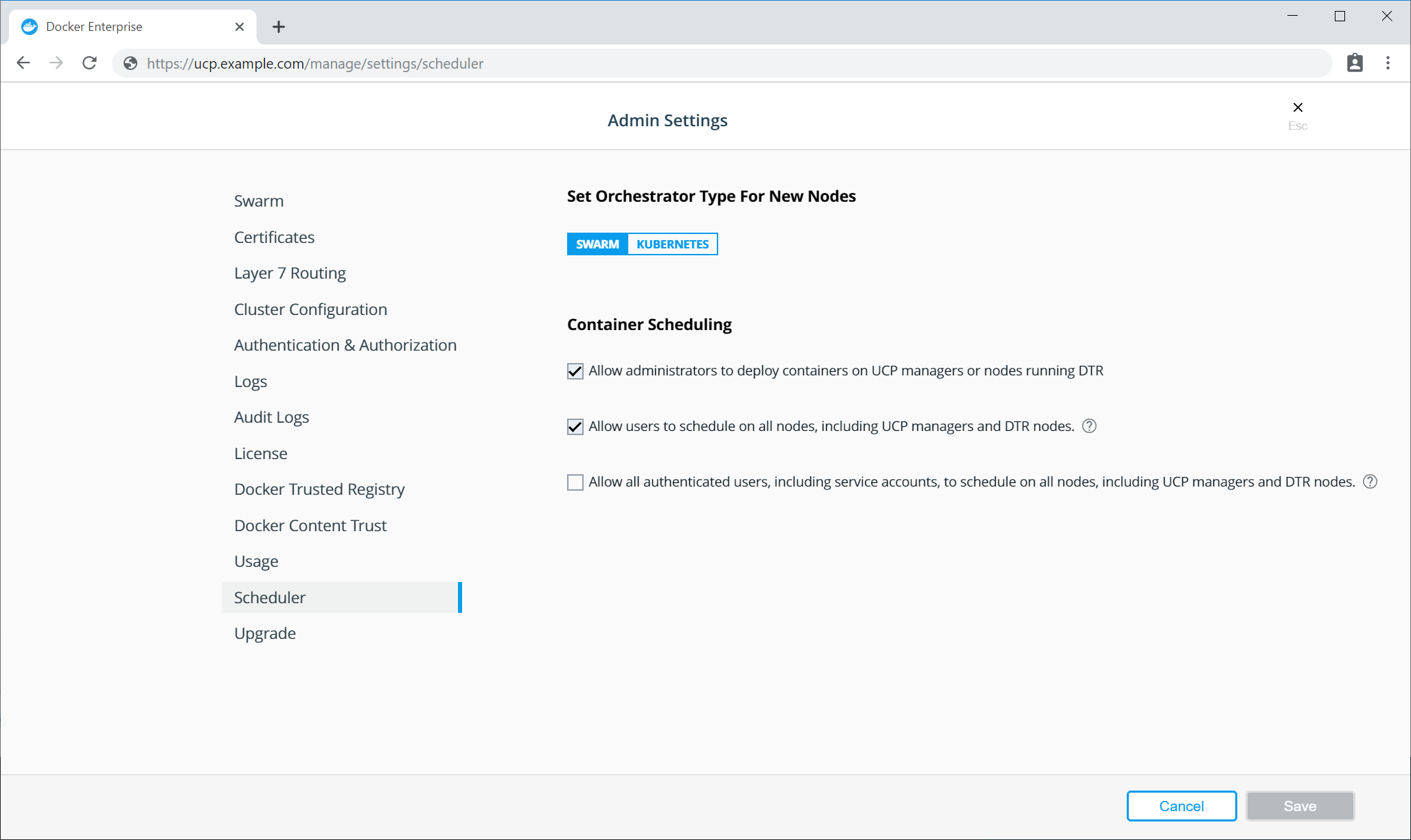

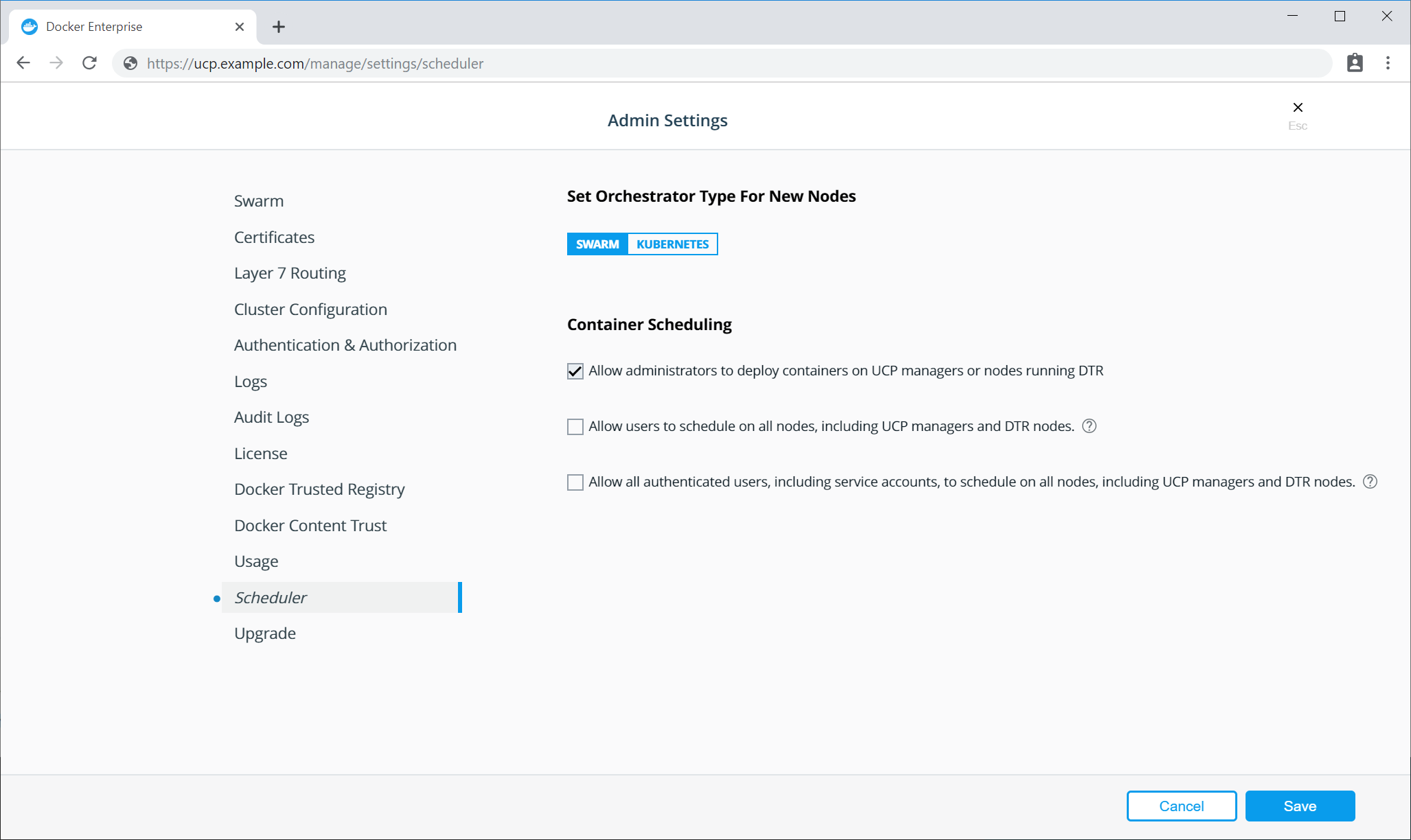

To disable the telemetry plugin, disable all three options and click Save. Enabling either or both of the top two options will enable the telemetry plugin. You can find out more about an individual option by clicking the ? icon.

Important

If API usage statistics are enabled, Docker gathers only aggregate stats about what API endpoints are used. API payload contents aren’t collected.

Use the CLI to control telemetry¶

At the engine level, there is a telemetry module built into the Docker

Enterprise Engine 18.09 or newer. It can be disabled by modifing the

daemon configuration file. By default this is stored

at /etc/docker/daemon.json.

{

"features": {

"telemetry": false

}

}

For the Docker daemon to pick up the changes in the configuration file, the Docker daemon will need to be restarted.

$ sudo systemctl reboot docker

To reenable the telemetry module, swap the value to

"telemetry": true or completely remove the "telemetry": false

line, as the default value is true.

Docker Enterprise Engine 18.03 or older¶

For Docker Enterprise Engine 18.03 or older, the telemetry module ran as

a Docker Plugin. To disable the telemetry plugin, use the

docker plugin disable with either the plugin NAME or ID:

$ docker plugin ls

ID NAME [..]

114dbeaa400c docker/telemetry:1.0.0.linux-x86_64-stable [..]

$ docker plugin disable docker/telemetry:1.0.0.linux-x86_64-stable

This command must be run on each Docker host.

To re-enable the telemetry plugin, you can use docker plugin enable

with either the plugin NAME or ID:

$ docker plugin ls

ID NAME [..]

114dbeaa400c docker/telemetry:1.0.0.linux-x86_64-stable [..]

$ docker plugin enable docker/telemetry:1.0.0.linux-x86_64-stable

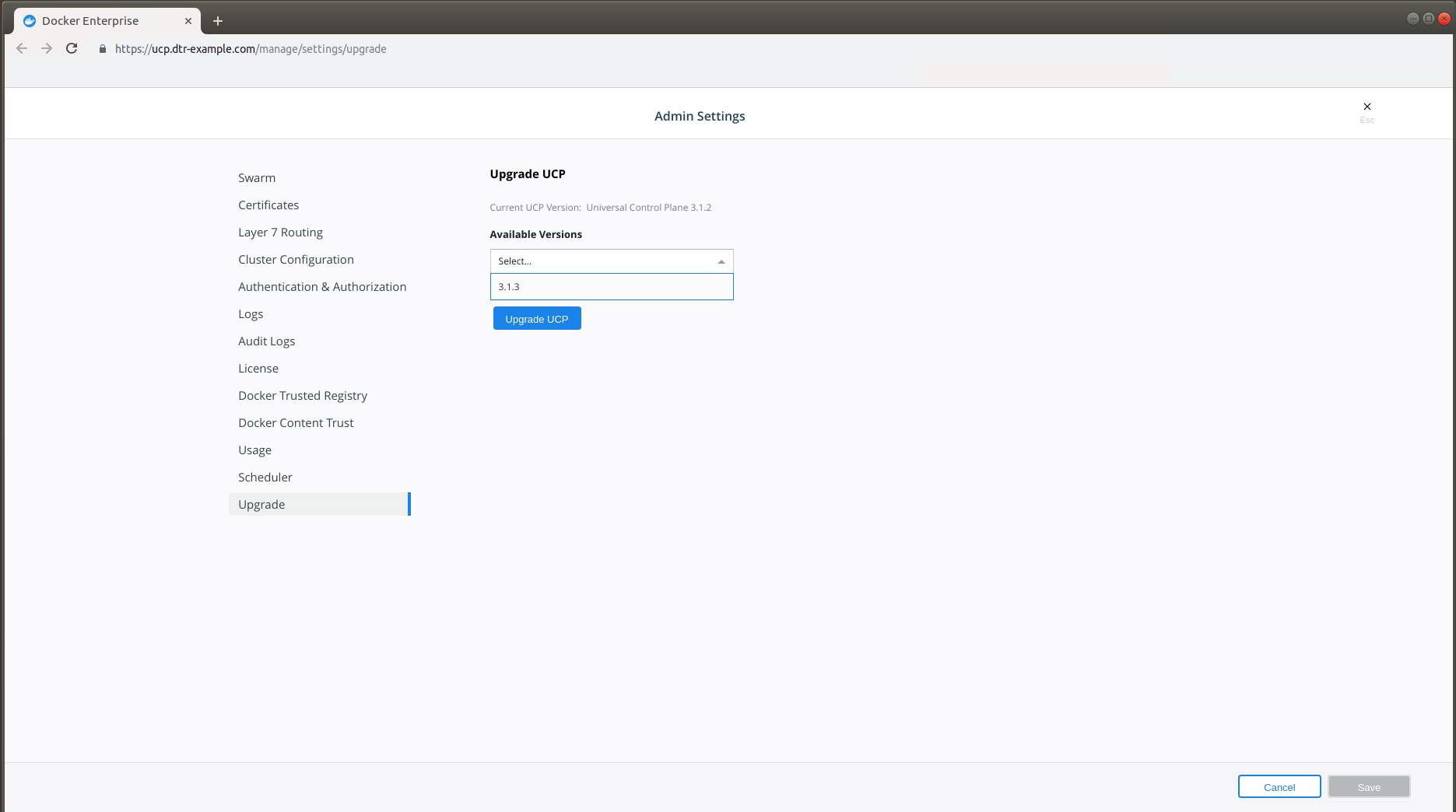

Upgrade Docker Enterprise¶

To upgrade Docker Enterprise, you must individually upgrade each of the following components:

Because some components become temporarily unavailable during an upgrade, schedule upgrades to occur outside of peak business hours to minimize impact to your business.

Cluster upgrade best practices¶

Docker Engine - Enterprise upgrades in Swarm clusters should follow these guidelines in order to avoid IP address space exhaustion and associated application downtime.

- New workloads should not be actively scheduled in the cluster during upgrades.

- Differences in the major (X.y.z.) or minor (x.Y.z) version numbers between the managers and workers can cause unintended consequences when new workloads are scheduled.

- Manager nodes should all be upgraded first before upgrading worker nodes. Upgrading manager nodes sequentially is recommended if live workloads are running in the cluster during the upgrade.

- Once manager nodes are upgraded worker nodes should be upgraded next and then the Swarm cluster upgrade is complete.

- If running UCP, the UCP upgrade should follow once all of the Swarm engines have been upgraded.

Create a backup¶

Before upgrading Docker Engine - Enterprise, you should make sure you create a backup. This makes it possible to recover if anything goes wrong during the upgrade.

Check the compatibility matrix¶

You should also check the compatibility matrix, to make sure all Docker Engine - Enterprise components are certified to work with one another. You may also want to check the Docker Engine - Enterprise maintenance lifecycle, to understand until when your version may be supported.

Apply firewall rules¶

Before you upgrade, make sure:

- Your firewall rules are configured to allow traffic in the ports UCP uses for communication. Learn about UCP port requirements.

- Make sure you don’t have containers or services that are listening on ports used by UCP.

- Configure your load balancer to forward TCP traffic to the Kubernetes API server port (6443/TCP by default) running on manager nodes.

Certificates

Externally signed certificates are used by the Kubernetes API server and the UCP controller. {: .important}

IP address consumption in 18.09+¶

In Swarm overlay networks, each task connected to a network consumes an

IP address on that network. Swarm networks have a finite amount of IPs

based on the --subnet configured when the network is created. If no

subnet is specified then Swarm defaults to a /24 network with 254

available IP addresses. When the IP space of a network is fully

consumed, Swarm tasks can no longer be scheduled on that network.

Starting with Docker Engine - Enterprise 18.09 and later, each Swarm node will consume an IP address from every Swarm network. This IP address is consumed by the Swarm internal load balancer on the network. Swarm networks running on Engine versions 18.09 or greater must be configured to account for this increase in IP usage. Networks at or near consumption prior to engine version 18.09 may have a risk of reaching full utilization that will prevent tasks from being scheduled on to the network.

Maximum IP consumption per network at any given moment follows the following formula:

Max IP Consumed per Network = Number of Tasks on a Swarm Network + 1 IP for each node where these tasks are scheduled

To prevent this from happening, overlay networks should have enough capacity prior to an upgrade to 18.09, such that the network will have enough capacity after the upgrade. The below instructions offer tooling and steps to ensure capacity is measured before performing an upgrade.

The above following only applies to containers running on Swarm overlay networks. This does not impact bridge, macvlan, host, or 3rd party docker networks.

Upgrade Docker Engine - Enterprise¶

To avoid application downtime, you should be running Docker Engine - Enterprise in Swarm mode and deploying your workloads as Docker services. That way you can drain the nodes of any workloads before starting the upgrade.

If you have workloads running as containers as opposed to swarm services, make sure they are configured with a restart policy. This ensures that your containers are started automatically after the upgrade.

To ensure that workloads running as Swarm services have no downtime, you need to:

- Determine if the network is in danger of exhaustion; and remediate to a new, larger network prior to upgrading.

- Drain the node you want to upgrade so that services get scheduled in another node.

- Upgrade the Docker Engine on that node.

- Make the node available again.

If you do this sequentially for every node, you can upgrade with no application downtime. When upgrading manager nodes, make sure the upgrade of a node finishes before you start upgrading the next node. Upgrading multiple manager nodes at the same time can lead to a loss of quorum, and possible data loss.

Determine if the network is in danger of exhaustion¶

Starting with a cluster with one or more services configured, determine whether some networks may require updating the IP address space in order to function correctly after an Docker Engine - Enterprise 18.09 upgrade.

- SSH into a manager node on a cluster where your applications are running.

- Run the following:

$ docker run -it --rm -v /var/run/docker.sock:/var/run/docker.sock docker/ip-util-check

If the network is in danger of exhaustion, the output will show similar warnings or errors:

Overlay IP Utilization Report

----

Network ex_net1/XXXXXXXXXXXX has an IP address capacity of 29 and uses 28 addresses

ERROR: network will be over capacity if upgrading Docker engine version 18.09

or later.

----

Network ex_net2/YYYYYYYYYYYY has an IP address capacity of 29 and uses 24 addresses

WARNING: network could exhaust IP addresses if the cluster scales to 5 or more nodes

----

Network ex_net3/ZZZZZZZZZZZZ has an IP address capacity of 61 and uses 52 addresses

WARNING: network could exhaust IP addresses if the cluster scales to 9 or more nodes

- Once you determine all networks are sized appropriately, start the upgrade on the Swarm managers.

With an exhausted network, you can triage it using the following steps.

- SSH into a manager node on a cluster where your applications are running.

- Check the

docker service lsoutput. It will display the service that is unable to completely fill all its replicas such as:

ID NAME MODE REPLICAS IMAGE PORTS

wn3x4lu9cnln ex_service replicated 19/24 nginx:latest

- Use

docker service ps ex_serviceto find a failed replica such as:

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

...

i64lee19ia6s \_ ex_service.11 nginx:latest tk1706-ubuntu-1 Shutdown Rejected 7 minutes ago "node is missing network attac…"

...

- Examine the error using

docker inspect. In this example, thedocker inspect i64lee19ia6soutput shows the error in theStatus.Errfield:

...

"Status": {

"Timestamp": "2018-08-24T21:03:37.885405884Z",

"State": "rejected",

"Message": "preparing",

**"Err": "node is missing network attachments, ip addresses may be exhausted",**

"ContainerStatus": {

"ContainerID": "",

"PID": 0,

"ExitCode": 0

},

"PortStatus": {}

},

...

- Adjust your network subnet in the deployment manifest, such that it has enough IPs required by the application.

- Redeploy the application.

- Confirm the adjusted service deployed successfully.

Manager upgrades when moving to Docker Engine - Enterprise 18.09 and later¶

The following is a constraint introduced by architectural changes to the Swarm overlay networking when upgrading to Docker Engine - Enterprise 18.09 or later. It only applies to this one-time upgrade and to workloads that are using the Swarm overlay driver. Once upgraded to Docker Engine - Enterprise 18.09, this constraint does not impact future upgrades.

When upgrading to Docker Engine - Enterprise 18.09, manager nodes cannot reschedule new workloads on the managers until all managers have been upgraded to the Docker Engine - Enterprise 18.09 (or higher) version. During the upgrade of the managers, there is a possibility that any new workloads that are scheduled on the managers will fail to schedule until all of the managers have been upgraded.

In order to avoid any impactful application downtime, it is advised to reschedule any critical workloads on to Swarm worker nodes during the upgrade of managers. Worker nodes and their network functionality will continue to operate independently during any upgrades or outages on the managers. Note that this restriction only applies to managers and not worker nodes.

Drain the node¶

If you are running live application on the cluster while upgrading, remove applications from nodes being upgrades as to not create unplanned outages.

Start by draining the node so that services get scheduled in another node and continue running without downtime.

For that, run this command on a manager node:

$ docker node update --availability drain <node>

Perform the upgrade¶

To upgrade a node individually by operating system, please follow the instructions listed below:

Post-Upgrade steps for Docker Engine - Enterprise¶

After all manager and worker nodes have been upgrades, the Swarm cluster

can be used again to schedule new workloads. If workloads were

previously scheduled off of the managers, they can be rescheduled again.

If any worker nodes were drained, they can be undrained again by setting

--availability active.

Docker Engine - Enterprise¶

Docker Engine - Enterprise is a client-server application with these major components:

- A server which is a type of long-running program called a daemon process (the dockerd command).

- A REST API which specifies interfaces that programs can use to talk to the daemon and instruct it what to do.

- A command line interface (CLI) client (the docker command).

Docker Engine - Enterprise can be installed on several linux distros as well as on Windows.

Docker Engine release notes¶

This document describes the latest changes, additions, known issues, and fixes for Docker Engine - Enterprise (Docker EE).

Docker EE is a superset of all the features in Docker CE. It incorporates defect fixes that you can use in environments where new features cannot be adopted as quickly for consistency and compatibility reasons.

Note

New in 18.09 is an aligned release model for Docker Engine - Enterprise. The new versioning scheme is YY.MM.x where x is an incrementing patch version. The enterprise engine is a superset of the community engine. They will ship concurrently with the same x patch version based on the same code base.

Note

The client and container runtime are now in separate packages from

the daemon in Docker Engine 18.09. Users should install and update

all three packages at the same time to get the latest patch releases.

For example, on Ubuntu:

sudo apt-get install docker-ee docker-ee-cli containerd.io. See

the install instructions for the corresponding linux distro for

details.

18.09.14¶

(2020-11-12)

Client¶

- Bumped to go1.13.15 to address CVE-2020-16845

Engine¶

- Bumped to go1.13.15 to address CVE-2020-16845

18.09.13¶

(2020-08-10)

Client¶

- Bumped to go1.13.13 to address CVE-2020-14039

Engine¶

- Bumped to go1.13.13 to address CVE-2020-14039

- Disable IPv6 router advertisements to address CVE-2020-13401

- Fixed license warning regression on Windows

- Bump vendor x/text to address CVE-2019-19794

- Bump vendor miekg/dns to address CVE-2018-17419

- Bump vendor x/crypto to address CVE-2020-7919

Version 18.09¶

18.09.12¶

(2020-06-24)

Networking¶

- Fix for ‘failed to get network during CreateEndpoint’

- Fix panic in the DNS resolver moby/moby#40715

- libnetwork: cleanup VFP during overlay network removal

Runtime¶

- Bump Golang to 1.13.10

- Cease listening on the same address multiple times

18.09.11¶

2019-11-14

Builder¶

- Fix builder-next: filter type in BuildKit GC config. docker/engine#409

Runtime¶

- Bump Golang to 1.12.12.

Swarm¶

- Fix update out of sequence and increase max recv gRPC message size for nodes and secrets. docker/swarmkit#2900

- Fix for specifying

--default-addr-poolfordocker swarm initnot picked up by ingress network. docker/swarmkit#2892

18.09.10¶

2019-10-08

Client¶

- Fix client version not being pinned when set. docker/engine#118

- Improve error message shown on Windows when daemon is not running or client does not have elevated permissions. docker/engine#343

- Mitigate against YAML files that have excessive aliasing. docker/cli#2119

Runtime¶

- Send exec exit event even if the exec fails to find the binary. docker/engine#357

- Devicemapper: use correct API to get the free loop device index. docker/engine#348

- Fix overlay2 busy error on mount using kernels >=5.2. docker/engine#333

- Sleep before attemping to restart event processing. docker/engine#362

- Seccomp: add sigprocmask (used by x86 glibc) to default profile. docker/engine#341

- Fix panic on 32-bit ARMv7 caused by misaligned struct member. docker/engine#364

- Fix

docker rmistuck in case of misconfigured system (such as dead NFS share). docker/engine#336 - Fix jsonfile logger: follow logs stuck when

max-sizeis set andmax-file=1. docker/engine#377

18.09.9¶

2019-09-03

Client¶

- Fix Windows absolute path detection on non-Windows. docker/cli#1990

- Fix Docker refusing to load key from delegation.key on Windows. docker/cli#1968

- Completion scripts updates for bash and zsh.

Logging¶

- Fix for reading journald logs. moby/moby#37819 moby/moby#38859

Networking¶

- Prevent panic on network attached to a container with disabled networking. moby/moby#39589

- Fix service port for an application becomes unavailable randomly. docker/libnetwork#2069

- Fix cleaning up

--config-onlynetworks--config-fromnetworkshave ungracefully exited. docker/libnetwork#2373

Runtime¶

- Update to Go 1.11.13.

- Fix a potential engine panic when using XFS disk quota for containers. moby/moby#39644

Swarm¶

- Fix

- grpc: received message larger than max

- errors.

- moby/moby#39306

- Fix an issue where nodes several tasks could not be removed. docker/swarmkit#2867

18.09.08¶

2019-07-17

Runtime¶

- Masked the secrets updated to the log files when running Docker

Engine in debug mode.

CVE-2019-13509:

If a Docker engine is running in debug mode, and

docker stack deployis used to redeploy a stack which includes non-external secrets, the logs will contain the secret.

Client¶

- Fixed rollback config type interpolation for

parallelismandmax_failure_ratiofields.

Known Issue¶

- There are important changes to the upgrade process that, if not correctly followed, can have an impact on the availability of applications running on the Swarm during upgrades. These constraints impact any upgrades coming from any version before 18.09 to version 18.09 or later.

18.09.7¶

2019-06-27

Builder¶

- Fixed a panic error when building dockerfiles that contain only comments. moby/moby#38487

- Added a workaround for GCR authentication issue. moby/moby#38246

- Builder-next: Fixed a bug in the GCR token cache implementation workaround. moby/moby#39183

Networking¶

- Fixed an error where

--network-rmwould fail to remove a network. moby/moby#39174

Runtime¶

- Added performance optimizations in aufs and layer store that helps in massively parallel container creation and removal. moby/moby#39107, moby/moby#39135

- Updated containerd to version 1.2.6. moby/moby#39016

- Fixed CVE-2018-15664 symlink-exchange attack with directory traversal. moby/moby#39357

- Windows: fixed support for

docker service create --limit-cpu. moby/moby#39190 - daemon: fixed a mirrors validation issue. moby/moby#38991

- Docker no longer supports sorting UID and GID ranges in ID maps. moby/moby#39288

Logging¶

- Added a fix that now allows large log lines for logger plugins. moby/moby#39038

Known Issue¶

- There are important changes to the upgrade process that, if not correctly followed, can have an impact on the availability of applications running on the Swarm during upgrades. These constraints impact any upgrades coming from any version before 18.09 to version 18.09 or later.

18.09.6¶

2019-05-06

Builder¶

- Fixed

COPYandADDwith multiple<src>to not invalidate cache ifDOCKER_BUILDKIT=1.moby/moby#38964

Networking¶

- Cleaned up the cluster provider when the agent is closed. docker/libnetwork#2354

- Windows: Now selects a random host port if the user does not specify a host port. docker/libnetwork#2369

Known Issues¶

- There are important changes to the upgrade process that, if not correctly followed, can have an impact on the availability of applications running on the Swarm during upgrades. These constraints impact any upgrades coming from any version before 18.09 to version 18.09 or later.

18.09.5¶

2019-04-11

Builder¶

- Fixed

DOCKER_BUILDKIT=1 docker build --squash ..docker/engine#176

Client¶

- Fixed tty initial size error. docker/cli#1775

- Fixed dial-stdio goroutine leakage. docker/cli#1795

- Fixed the stack informer’s selector used to track deployment. docker/cli#1794

Networking¶

- Fixed

network=hostusing wrongresolv.confwithsystemd-resolved. docker/engine#180 - Fixed Windows ARP entries getting corrupted randomly under load. docker/engine#192

Runtime¶

- Now showing stopped containers with restart policy as

Restarting. docker/engine#181 - Now using original process spec for execs. docker/engine#178

Swarm Mode¶

- Fixed leaking task resources when nodes are deleted. docker/engine#185

Known Issues¶

- There are important changes to the upgrade process that, if not correctly followed, can have an impact on the availability of applications running on the Swarm during upgrades. These constraints impact any upgrades coming from any version before 18.09 to version 18.09 or later.

18.09.4¶

2019-03-28

Builder¶

- Fixed

CVE-2019-13139

by adding validation for

git refto avoid misinterpretation as a flag. moby/moby#38944

Runtime¶

- Fixed

docker cperror for filenames greater than 100 characters. moby/moby#38634 - Fixed

layer/layer_storeto ensureNewInputTarStreamresources are released. moby/moby#38413 - Increased GRPC limit for

GetConfigs. moby/moby#38800 - Updated

containerd1.2.5. docker/engine#173

Swarm Mode¶

- Fixed nil pointer exception when joining node to swarm. moby/moby#38618

- Fixed issue for swarm nodes not being able to join as masters if http proxy is set. [moby/moby#36951]

Known Issues¶

- There are important changes to the upgrade process that, if not correctly followed, can have impact on the availability of applications running on the Swarm during upgrades. These constraints impact any upgrades coming from any version before 18.09 to version 18.09 or later.

18.09.3¶

2019-02-28

Networking fixes¶

- Windows: now avoids regeneration of network IDs to prevent broken references to networks. docker/engine#149

- Windows: Fixed an issue to address `- restart always` flag on standalone containers not working when specifying a network. (docker/escalation#1037)

- Fixed an issue to address the IPAM state from networkdb if the manager is not attached to the overlay network. (docker/escalation#1049)

Runtime fixes and updates¶

- Updated to Go version 1.10.8.

- Modified names in the container name generator. docker/engine#159

- When copying an existing folder, xattr set errors when the target filesystem doesn’t support xattr are now ignored. docker/engine#135

- Graphdriver: fixed “device” mode not being detected if “character-device” bit is set. docker/engine#160

- Fixed nil pointer derefence on failure to connect to containerd. docker/engine#162

- Deleted stale containerd object on start failure. docker/engine#154

Known Issues¶

- There are important changes to the upgrade process that, if not correctly followed, can have impact on the availability of applications running on the Swarm during upgrades. These constraints impact any upgrades coming from any version before 18.09 to version 18.09 or greater.

18.09.2¶

2019-02-11

Security fixes for Docker Engine - Enterprise¶

- Update

runcto address a critical vulnerability that allows specially-crafted containers to gain administrative privileges on the host. CVE-2019-5736 - Ubuntu 14.04 customers using a 3.13 kernel will need to upgrade to a supported Ubuntu 4.x kernel

For additional information, refer to the Docker blog post.

Known Issues¶

- There are important changes to the upgrade process that, if not correctly followed, can have impact on the availability of applications running on the Swarm during upgrades. These constraints impact any upgrades coming from any version before 18.09 to version 18.09 or greater.

18.09.1¶

2019-01-09

In Docker versions prior to 18.09, containerd was managed by the Docker

engine daemon. In Docker Engine 18.09, containerd is managed by systemd.

Since containerd is managed by systemd, any custom configuration to the

docker.service systemd configuration which changes mount settings

(for example, MountFlags=slave) breaks interactions between the

Docker Engine daemon and containerd, and you will not be able to start

containers.

Run the following command to get the current value of the MountFlags

property for the docker.service:

sudo systemctl show --property=MountFlags docker.service

MountFlags=

Update your configuration if this command prints a non-empty value for

MountFlags, and restart the docker service.

Security fixes¶

- Upgraded Go language to 1.10.6 to resolve CVE-2018-16873, CVE-2018-16874, and CVE-2018-16875.

- Fixed authz plugin for 0-length content and path validation.

- Added

/proc/asoundto masked paths docker/engine#126

Improvements¶

- Updated to BuildKit 0.3.3 docker/engine#122

- Updated to containerd 1.2.2 docker/engine#144

- Provided additional warnings for use of deprecated legacy overlay and devicemapper storage drivers docker/engine#85

- prune: perform image pruning before build cache pruning docker/cli#1532

- Added bash completion for experimental CLI commands (manifest) docker/cli#1542

- Windows: allow process isolation on Windows 10 docker/engine#81

Fixes¶

- Disable kmem accounting in runc on RHEL/CentOS (docker/escalation#614, docker/escalation#692) docker/engine#121

- Fixed inefficient networking configuration docker/engine#123

- Fixed docker system prune doesn’t accept until filter docker/engine#122

- Avoid unset credentials in

containerddocker/engine#122 - Fixed iptables compatibility on Debian docker/engine#107

- Fixed setting default schema to tcp for docker host docker/cli#1454

- Fixed bash completion for

service update --forcedocker/cli#1526 - Windows: DetachVhd attempt in cleanup docker/engine#113

- API: properly handle invalid JSON to return a 400 status docker/engine#110

- API: ignore default address-pools on API < 1.39 docker/engine#118

- API: add missing default address pool fields to swagger docker/engine#119

- awslogs: account for UTF-8 normalization in limits docker/engine#112

- Prohibit reading more than 1MB in HTTP error responses docker/engine#114

- apparmor: allow receiving of signals from

docker killdocker/engine#116 - overlay2: use index=off if possible (fix EBUSY on mount) docker/engine#84

Packaging¶

- Add docker.socket requirement for docker.service. docker/docker-ce-packaging#276

- Add socket activation for RHEL-based distributions. docker/docker-ce-packaging#274

- Add libseccomp requirement for RPM packages. docker/docker-ce-packaging#266

Known Issues¶

- When upgrading from 18.09.0 to 18.09.1,

containerdis not upgraded to the correct version on Ubuntu. Learn more. - There are important changes to the upgrade process that, if not correctly followed, can have impact on the availability of applications running on the Swarm during upgrades. These constraints impact any upgrades coming from any version before 18.09 to version 18.09 or greater.

18.09.0¶

2018-11-08

Important notes about this release¶

In Docker versions prior to 18.09, containerd was managed by the Docker

engine daemon. In Docker Engine 18.09, containerd is managed by systemd.

Since containerd is managed by systemd, any custom configuration to the

docker.service systemd configuration which changes mount settings

(for example, MountFlags=slave) breaks interactions between the

Docker Engine daemon and containerd, and you will not be able to start

containers.

Run the following command to get the current value of the MountFlags

property for the docker.service:

sudo systemctl show --property=MountFlags docker.service

MountFlags=

Update your configuration if this command prints a non-empty value for

MountFlags, and restart the docker service.

New features for Docker Engine EE¶

- FIPS Compliance added for Windows Server 2016 and later

- Docker Content Trust Enforcement for the Enterprise Engine. This allows the Docker Engine - Enterprise to run containers not signed by a specific organization.

New features¶

- Updated API version to 1.39 moby/moby#37640

- Added support for remote connections using SSH docker/cli#1014

- Builder: added prune options to the API moby/moby#37651

- Added “Warnings” to

/infoendpoint, and move detection to the daemon moby/moby#37502 - Allows BuildKit builds to run without experimental mode enabled. Buildkit can now be configured with an option in daemon.json moby/moby#37593 moby/moby#37686 moby/moby#37692 docker/cli#1303 docker/cli#1275

- Added support for build-time secrets using a

--secretflag when using BuildKit docker/cli#1288 - Added SSH agent socket forwarder

(

docker build --ssh $SSHMOUNTID=$SSH_AUTH_SOCK) when using BuildKit docker/cli#1438 / docker/cli#1419 - Added

--chownflag support forADDandCOPYcommands on Windows moby/moby#35521 - Added

builder prunesubcommand to prune BuildKit build cache docker/cli#1295 docker/cli#1334 - BuildKit: Adds configurable garbage collection policy for the BuildKit build cache docker/engine#59 / moby/moby#37846

- BuildKit: Adds support for

docker build --pull ...when using BuildKit moby/moby#37613 - BuildKit: Adds support or “registry-mirrors” and “insecure-registries” when using BuildKit docker/engine#59 / moby/moby#37852

- BuildKit: Enables net modes and bridge. moby/moby#37620

- Added

docker enginesubcommand to manage the lifecycle of a Docker Engine running as a privileged container on top of containerd, and to allow upgrades to Docker Engine Enterprise docker/cli#1260 - Exposed product license in

docker infooutput docker/cli#1313 - Showed warnings produced by daemon in

docker infooutput docker/cli#1225 - Added “local” log driver moby/moby#37092

- Amazon CloudWatch: adds

awslogs-endpointlogging option moby/moby#37374 - Added support for global default address pools moby/moby#37558 docker/cli#1233

- Configured containerd log-level to be the same as dockerd moby/moby#37419

- Added configuration option for cri-containerd moby/moby#37519

- Updates containerd client to v1.2.0-rc.1 moby/moby#37664, docker/engine#75 / moby/moby#37710

- Added support for global default address pools moby/moby#37558 docker/cli#1233

- Moved the

POST /sessionendpoint out of experimental. moby/moby#40028

Improvements¶

- Does not return “

<unknown>” in /info response moby/moby#37472 - BuildKit: Changes

--console=[auto,false,true]to--progress=[auto,plain,tty]docker/cli#1276 - BuildKit: Sets BuildKit’s ExportedProduct variable to show useful errors in the future. moby/moby#37439

- Hides

--data-path-addrflags when connected to a daemon that doesn’t support this option docker/docker/cli#1240 - Only shows buildkit-specific flags if BuildKit is enabled docker/cli#1438 / docker/cli#1427

- Improves version output alignment docker/cli#1204

- Sorts plugin names and networks in a natural order docker/cli#1166, docker/cli#1266

- Updates bash and zsh completion scripts

- Passes log-level to containerd. moby/moby#37419

- Uses direct server return (DSR) in east-west overlay load balancing docker/engine#93 / docker/libnetwork#2270

- Builder: temporarily disables bridge networking when using buildkit. moby/moby#37691

- Blocks task starting until node attachments are ready moby/moby#37604

- Propagates the provided external CA certificate to the external CA object in swarm. docker/cli#1178

- Removes Ubuntu 14.04 “Trusty Tahr” as a supported platform docker-ce-packaging#255 / docker-ce-packaging#254

- Removes Debian 8 “Jessie” as a supported platform docker-ce-packaging#255 / docker-ce-packaging#254

- Removes ‘docker-’ prefix for containerd and runc binaries docker/engine#61 / moby/moby#37907, docker-ce-packaging#241

- Splits “engine”, “cli”, and “containerd” to separate packages, and run containerd as a separate systemd service docker-ce-packaging#131, docker-ce-packaging#158

- Builds binaries with Go 1.10.4 docker-ce-packaging#181

- Removes

-ce/-eesuffix from version string docker-ce-packaging#206

Fixes¶

- BuildKit: Do not cancel buildkit status request. moby/moby#37597

- Fixes no error is shown if build args are missing during docker build moby/moby#37396

- Fixes error “unexpected EOF” when adding an 8GB file moby/moby#37771

- LCOW: Ensures platform is populated on

COPY/ADD. moby/moby#37563 - Fixes mapping a range of host ports to a single container port docker/cli#1102

- Fixes

trust inspecttypo: “AdminstrativeKeys” docker/cli#1300 - Fixes environment file parsing for imports of absent variables and those with no name. docker/cli#1019

- Fixes a potential “out of memory exception” when running

docker image prunewith a large list of dangling images docker/cli#1432 / docker/cli#1423 - Fixes pipe handling in ConEmu and ConsoleZ on Windows moby/moby#37600

- Fixes long startup on windows, with non-hns governed Hyper-V networks docker/engine#67 / moby/moby#37774

- Fixes daemon won’t start when “runtimes” option is defined both in config file and cli docker/engine#57 / moby/moby#37871

- Loosens permissions on

/etc/dockerdirectory to prevent “permission denied” errors when usingdocker manifest inspectdocker/engine#56 / moby/moby#37847 - Fixes denial of service with large numbers in

cpuset-cpusandcpuset-memsdocker/engine#70 / moby/moby#37967 - LCOW: Add

--platformtodocker importdocker/cli#1375 / docker/cli#1371 - LCOW: Add LinuxMetadata support by default on Windows moby/moby#37514

- LCOW: Mount to short container paths to avoid command-line length limit moby/moby#37659

- LCOW: Fix builder using wrong cache layer moby/moby#37356

- Fixes json-log file descriptors leaking when using

--followdocker/engine#48 moby/moby#37576 moby/moby#37734 - Fixes a possible deadlock on closing the watcher on kqueue moby/moby#37392

- Uses poller based watcher to work around the file caching issue in Windows moby/moby#37412

- Handles systemd-resolved case by providing appropriate resolv.conf to networking layer moby/moby#37485

- Removes support for TLS < 1.2 moby/moby#37660

- Seccomp: Whitelist syscalls linked to

CAP_SYS_NICEin default seccomp profile moby/moby#37242 - Seccomp: move the syslog syscall to be gated by

CAP_SYS_ADMINorCAP_SYSLOGdocker/engine#64 / moby/moby#37929 - SELinux: Fix relabeling of local volumes specified via Mounts API on selinux-enabled systems moby/moby#37739

- Adds warning if REST API is accessible through an insecure connection moby/moby#37684

- Masks proxy credentials from URL when displayed in system info docker/engine#72 / moby/moby#37934

- Fixes mount propagation for btrfs docker/engine#86 / moby/moby#38026

- Fixes nil pointer dereference in node allocation docker/engine#94 / docker/swarmkit#2764

Known Issues¶

There are important changes to the upgrade process that, if not correctly followed, can have impact on the availability of applications running on the Swarm during upgrades. These constraints impact any upgrades coming from any version before 18.09 to version 18.09 or greater.

With https://github.com/boot2docker/boot2docker/releases/download/v18.09.0/boot2docker.iso, connection is being refused from a node on the virtual machine. Any publishing of swarm ports in virtualbox-created docker-machine VM’s will not respond. This is occurring on macOS and Windows 10, using docker-machine version 0.15 and 0.16.

The following

docker runcommand works, allowing access from host browser:docker run -d -p 4000:80 nginxHowever, the following

docker servicecommand fails, resulting in curl/chrome unable to connect (connection refused):docker service create -p 5000:80 nginxThis issue is not apparent when provisioning 18.09.0 cloud VM’s using docker-machine.

Workarounds:

- Use cloud VM’s that don’t rely on boot2docker.

docker runis unaffected.- For Swarm, set VIRTUALBOX_BOOT2DOCKER_URL=https://github.com/boot2docker/boot2docker/releases/download/v18.06.1-ce/boot2docker.iso.

This issue is resolved in 18.09.1.

Deprecation Notices¶

As of EE 2.1, Docker has deprecated support for Device Mapper as a storage driver. It will continue to be supported at this time, but support will be removed in a future release. Docker will continue to support Device Mapper for existing EE 2.0 and 2.1 customers. Please contact Sales for more information.

Docker recommends that existing customers migrate to using Overlay2 for the storage driver. The Overlay2 storage driver is now the default for Docker engine implementations.

As of EE 2.1, Docker has deprecated support for IBM Z (s390x). Refer to the Docker Compatibility Matrix for detailed compatibility information.

For more information on the list of deprecated flags and APIs, have a look at the deprecation information where you can find the target removal dates.

End of Life Notification¶

In this release, Docker has also removed support for TLS < 1.2 moby/moby#37660, Ubuntu 14.04 “Trusty Tahr” docker-ce-packaging#255 / docker-ce-packaging#254, and Debian 8 “Jessie” docker-ce-packaging#255 / docker-ce-packaging#254.

Get Docker Engine - Enterprise on Linux distros¶

Get Docker EE for CentOS¶

There are two ways to install and upgrade Docker Enterprise Edition (Docker EE) on CentOS:

- YUM repository: Set up a Docker repository and install Docker EE from it. This is the recommended approach because installation and upgrades are managed with YUM and easier to do.

- RPM package: Download the RPM package, install it manually, and manage upgrades manually. This is useful when installing Docker EE on air-gapped systems with no access to the internet.

Prerequisites¶

This section lists what you need to consider before installing Docker EE. Items that require action are explained below.

- Use CentOS 64-bit 7.1 and higher on

x86_64. - Use storage driver

overlay2ordevicemapper(direct-lvmmode in production). - Find the URL for your Docker EE repo at Docker Hub.

- Uninstall old versions of Docker.

- Remove old Docker repos from

/etc/yum.repos.d/.

Architectures and storage drivers¶

Docker EE supports CentOS 64-bit, latest version, running on x86_64.

On CentOS, Docker EE supports storage drivers, overlay2 and

devicemapper. In Docker EE 17.06.2-ee-5 and higher, overlay2 is the

recommended storage driver. The following limitations apply:

- OverlayFS: If

selinuxis enabled, theoverlay2storage driver is supported on CentOS 7.4 or higher. Ifselinuxis disabled,overlay2is supported on CentOS 7.2 or higher with kernel version 3.10.0-693 and higher. - Device Mapper: On production systems using

devicemapper, you must usedirect-lvmmode, which requires one or more dedicated block devices. Fast storage such as solid-state media (SSD) is recommended.

Find your Docker EE repo URL¶

To install Docker EE, you will need the URL of the Docker EE repository associated with your trial or subscription:

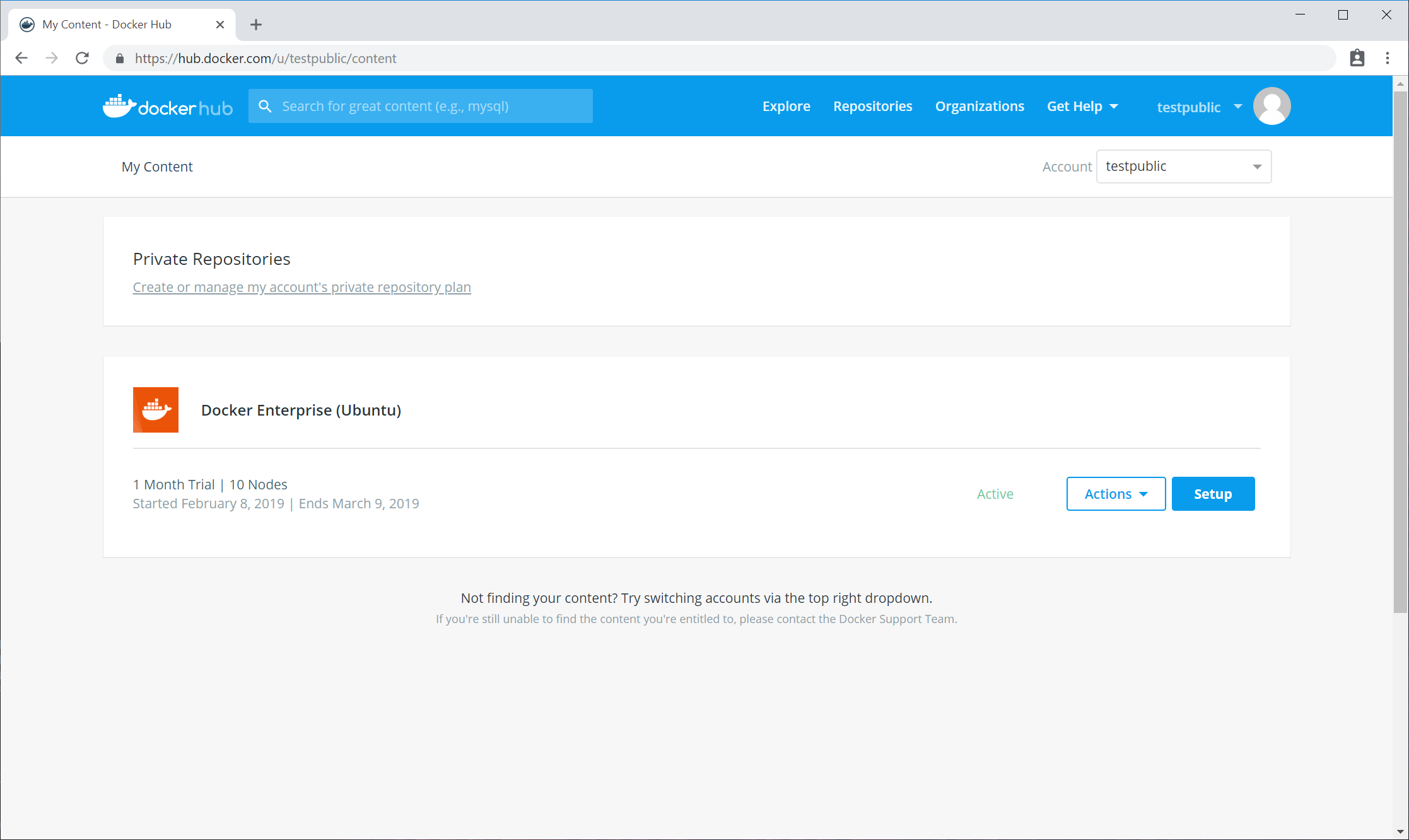

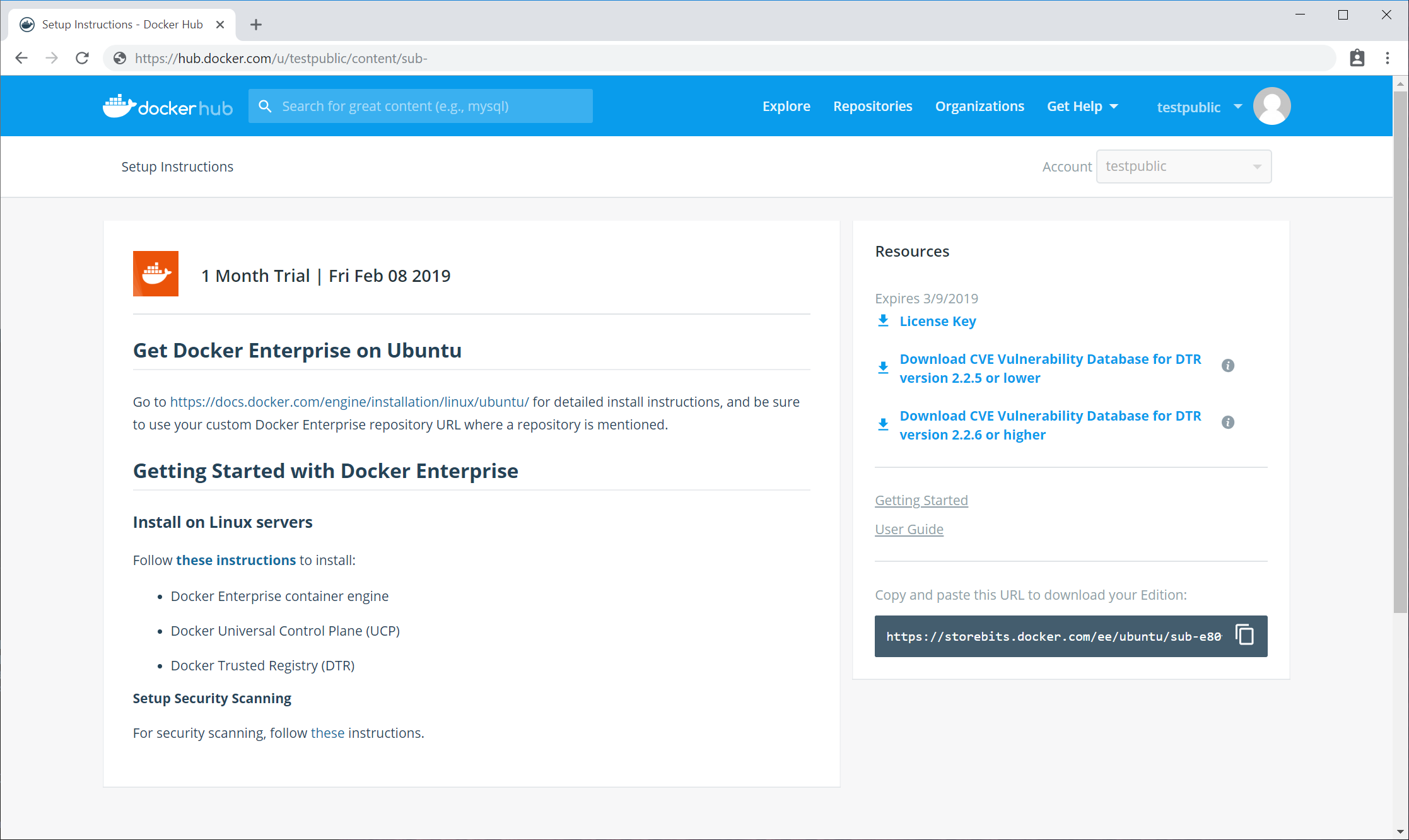

- Go to https://hub.docker.com/my-content. All of your subscriptions and trials are listed.

- Click the Setup button for Docker Enterprise Edition for Centos.

- Copy the URL from Copy and paste this URL to download your Edition and save it for later use.

You will use this URL in a later step to create a variable called,

DOCKERURL.

Uninstall old Docker versions¶

The Docker EE package is called docker-ee. Older

versions were called docker or docker-engine. Uninstall all

older versions and associated dependencies. The contents of

/var/lib/docker/ are preserved, including images, containers,

volumes, and networks. If you are upgrading from Docker Engine -

Community to Docker EE, remove the Docker Engine -

Community package as well.

$ sudo yum remove docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-selinux \

docker-engine-selinux \

docker-engine

Repo install and upgrade¶

The advantage of using a repository from which to install Docker EE (or any software) is that it provides a certain level of automation. RPM-based distributions such as CentOS, use a tool called YUM that work with your repositories to manage dependencies and provide automatic updates.

Set up the repository¶

You only need to set up the repository once, after which you can install Docker EE from the repo and repeatedly upgrade as necessary.

Remove existing Docker repositories from

/etc/yum.repos.d/:$ sudo rm /etc/yum.repos.d/docker*.repo

Temporarily store the URL (that you copied above) in an environment variable. Replace

<DOCKER-EE-URL>with your URL in the following command. This variable assignment does not persist when the session ends:$ export DOCKERURL="<DOCKER-EE-URL>"

Store the value of the variable,

DOCKERURL(from the previous step), in ayumvariable in/etc/yum/vars/:$ sudo -E sh -c 'echo "$DOCKERURL/centos" > /etc/yum/vars/dockerurl'

Install required packages:

yum-utilsprovides the yum-config-manager utility, anddevice-mapper-persistent-dataandlvm2are required by the devicemapper storage driver:$ sudo yum install -y yum-utils \ device-mapper-persistent-data \ lvm2

Add the Docker EE stable repository:

$ sudo -E yum-config-manager \ --add-repo \ "$DOCKERURL/centos/docker-ee.repo"

Install from the repository¶

Install the latest patch release, or go to the next step to install a specific version:

$ sudo yum -y install docker-ee docker-ee-cli containerd.io

If prompted to accept the GPG key, verify that the fingerprint matches

77FE DA13 1A83 1D29 A418 D3E8 99E5 FF2E 7668 2BC9, and if so, accept it.To install a specific version of Docker EE (recommended in production), list versions and install:

List and sort the versions available in your repo. This example sorts results by version number, highest to lowest, and is truncated:

$ sudo yum list docker-ee --showduplicates | sort -r docker-ee.x86_64 19.03.ee.2-1.el7.entos docker-ee-stable-18.09 The list returned depends on which repositories you enabled, and is specific to your version of CentOS (indicated by ``.el7`` in this example).

Install a specific version by its fully qualified package name, which is the package name (

docker-ee) plus the version string (2nd column) starting at the first colon (:), up to the first hyphen, separated by a hyphen (-). For example,docker-ee-18.09.1.$ sudo yum -y install docker-ee-<VERSION_STRING> docker-ee-cli-<VERSION_STRING> containerd.io

For example, if you want to install the 18.09 version run the following:

sudo yum-config-manager --enable docker-ee-stable-18.09

Docker is installed but not started. The

dockergroup is created, but no users are added to the group.

Start Docker:

Note

If using

devicemapper, ensure it is properly configured before starting Docker.$ sudo systemctl start docker

Verify that Docker EE is installed correctly by running the

hello-worldimage. This command downloads a test image, runs it in a container, prints an informational message, and exits:$ sudo docker run hello-world

Docker EE is installed and running. Use

sudoto run Docker commands.

Upgrade from the repository¶

- Add the new repository.

- Follow the installation instructions and install a new version.

Package install and upgrade¶

To manually install Docker Enterprise, download the .rpm file for your

release. You need to download a new file each time you want to upgrade Docker

Enterprise.

Install with a package¶

Go to the Docker EE repository URL associated with your trial or subscription in your browser. Go to

centos/7/x86_64/stable-<VERSION>/Packagesand download the.rpmfile for the Docker version you want to install.Install Docker Enterprise, changing the path below to the path where you downloaded the Docker package.

$ sudo yum install /path/to/package.rpm

Docker is installed but not started. The

dockergroup is created, but no users are added to the group.Start Docker:

Note

If using

devicemapper, ensure it is properly configured before starting Docker.$ sudo systemctl start docker

Verify that Docker EE is installed correctly by running the

hello-worldimage. This command downloads a test image, runs it in a container, prints an informational message, and exits:$ sudo docker run hello-world

Docker EE is installed and running. Use

sudoto run Docker commands.

Uninstall Docker EE¶

Uninstall the Docker EE package:

$ sudo yum -y remove docker-ee

Delete all images, containers, and volumes (because these are not automatically removed from your host):

$ sudo rm -rf /var/lib/docker

Delete other Docker related resources:

$ sudo rm -rf /run/docker $ sudo rm -rf /var/run/docker $ sudo rm -rf /etc/docker

If desired, remove the

devicemapperthin pool and reformat the block devices that were part of it.

You must delete any edited configuration files manually.

Next steps¶

- Continue with user guides on Universal Control Plane (UCP) and Docker Trusted Registry (DTR).

Get Docker EE for Oracle Linux¶

There are two ways to install and upgrade :ref:`Docker Enterprise<docker-engine-enterprise> on Oracle Linux:

- YUM repository: Set up a Docker repository and install Docker EE from it. This is the recommended approach because installation and upgrades are managed with YUM and easier to do.

- RPM package: Download the RPM package, install it manually, and manage upgrades manually. This is useful when installing Docker EE on air-gapped systems with no access to the internet.

Prerequisites¶

This section lists what you need to consider before installing Docker EE. Items that require action are explained below.

- Use OL 64-bit 7.3 or higher on RHCK 3.10.0-514 or higher.

- Use the

devicemapperstorage driver only (direct-lvmmode in production). - Find the URL for your Docker EE repo at Docker Hub.

- Uninstall old versions of Docker.

- Remove old Docker repos from

/etc/yum.repos.d/. - Disable SELinux if installing or upgrading Docker EE 17.06.1 or newer.

Architectures and storage drivers¶

Docker Engine - Enterprise supports Oracle Linux 64-bit, versions 7.3 and higher, running the Red Hat Compatible kernel (RHCK) 3.10.0-514 or higher. Older versions of Oracle Linux are not supported.

On Oracle Linux, Docker Engine - Enterprise only supports the

devicemapper storage driver. In production, you must use it in

direct-lvm mode, which requires one or more dedicated block devices.

Fast storage such as solid-state media (SSD) is recommended.

Find your Docker EE repo URL¶

To install Docker EE, you will need the URL of the Docker EE repository associated with your trial or subscription:

- Go to https://hub.docker.com/my-content. All of your subscriptions and trials are listed.

- Click the Setup button for Docker Enterprise Edition for Oracle Linux.

- Copy the URL from Copy and paste this URL to download your Edition and save it for later use.

You will use this URL in a later step to create a variable called,

DOCKERURL.

Uninstall old Docker versions¶

The Docker Engine - Enterprise package is called docker-ee. Older

versions were called docker or docker-engine. Uninstall all

older versions and associated dependencies. The contents of

/var/lib/docker/ are preserved, including images, containers,

volumes, and networks.

$ sudo yum remove docker \

docker-engine \

docker-engine-selinux

Repo install and upgrade¶

The advantage of using a repository from which to install Docker Engine - Enterprise (or any software) is that it provides a certain level of automation. RPM-based distributions such as Oracle Linux, use a tool called YUM that work with your repositories to manage dependencies and provide automatic updates.

Set up the repository¶

You only need to set up the repository once, after which you can install Docker Engine - Enterprise from the repo and repeatedly upgrade as necessary.

Remove existing Docker repositories from

/etc/yum.repos.d/:$ sudo rm /etc/yum.repos.d/docker*.repo

Temporarily store the URL (that you copied above) in an environment variable. Replace

<DOCKER-EE-URL>with your URL in the following command. This variable assignment does not persist when the session ends:$ export DOCKERURL="<DOCKER-EE-URL>"

Store the value of the variable,

DOCKERURL(from the previous step), in ayumvariable in/etc/yum/vars/:$ sudo -E sh -c 'echo "$DOCKERURL/oraclelinux" > /etc/yum/vars/dockerurl'

Install required packages:

yum-utilsprovides the yum-config-manager utility, anddevice-mapper-persistent-dataandlvm2are required by the devicemapper storage driver:$ sudo yum install -y yum-utils \ device-mapper-persistent-data \ lvm2

Enable the

ol7_addonsOracle repository. This ensures access to thecontainer-selinuxpackage required bydocker-ee.$ sudo yum-config-manager --enable ol7_addons

Add the Docker Engine - Enterprise stable repository:

$ sudo -E yum-config-manager \ --add-repo \ "$DOCKERURL/oraclelinux/docker-ee.repo"

Install from the repository¶

Install the latest patch release, or go to the next step to install a specific version:

$ sudo yum -y install docker-ee docker-ee-cli containerd.io

If prompted to accept the GPG key, verify that the fingerprint matches

77FE DA13 1A83 1D29 A418 D3E8 99E5 FF2E 7668 2BC9, and if so, accept it.To install a specific version of Docker Engine - Enterprise (recommended in production), list versions and install:

List and sort the versions available in your repo. This example sorts results by version number, highest to lowest, and is truncated:

$ sudo yum list docker-ee --showduplicates | sort -r docker-ee.x86_64 19.03.ee.2-1.el7.oraclelinuix docker-ee-stable-18.09

The list returned depends on which repositories you enabled, and is specific to your version of Oracle Linux (indicated by

.el7in this example).Install a specific version by its fully qualified package name, which is the package name (

docker-ee) plus the version string (2nd column) starting at the first colon (:), up to the first hyphen, separated by a hyphen (-). For example,docker-ee-18.09.1.$ sudo yum -y install docker-ee-<VERSION_STRING> docker-ee-cli-<VERSION_STRING> containerd.io

For example, if you want to install the 18.09 version run the following:

sudo yum-config-manager --enable docker-ee-stable-18.09

Docker is installed but not started. The

dockergroup is created, but no users are added to the group.

Start Docker:

Note

If using

devicemapper, ensure it is properly configured before starting Docker.$ sudo systemctl start docker

Verify that Docker Engine - Enterprise is installed correctly by running the

hello-worldimage. This command downloads a test image, runs it in a container, prints an informational message, and exits:$ sudo docker run hello-world

Docker Engine - Enterprise is installed and running. Use

sudoto run Docker commands.

Package install and upgrade¶

To manually install Docker Enterprise, download the

.rpm file for your release. You need to

download a new file each time you want to upgrade Docker Enterprise.

Install with a package¶

Go to the Docker Engine - Enterprise repository URL associated with your trial or subscription in your browser. Go to

oraclelinux/. Choose your Oracle Linux version, architecture, and Docker version. Download the.rpmfile from thePackagesdirectory.Install Docker Enterprise, changing the path below to the path where you downloaded the Docker package.

$ sudo yum install /path/to/package.rpm

Docker is installed but not started. The

dockergroup is created, but no users are added to the group.Start Docker:

Note

If using

devicemapper, ensure it is properly configured before starting Docker.$ sudo systemctl start docker

Verify that Docker Engine - Enterprise is installed correctly by running the

hello-worldimage. This command downloads a test image, runs it in a container, prints an informational message, and exits:$ sudo docker run hello-world

Docker Engine - Enterprise is installed and running. Use

sudoto run Docker commands.

Upgrade with a package¶

- Download the newer package file.

- Repeat the installation procedure,

using

yum -y upgradeinstead ofyum -y install, and point to the new file.

Uninstall Docker Engine - Enterprise¶

Uninstall the Docker Engine - Enterprise package:

$ sudo yum -y remove docker-ee

Delete all images, containers, and volumes (because these are not automatically removed from your host):

$ sudo rm -rf /var/lib/docker

Delete other Docker related resources:

$ sudo rm -rf /run/docker $ sudo rm -rf /var/run/docker $ sudo rm -rf /etc/docker``

If desired, remove the

devicemapperthin pool and reformat the block devices that were part of it.

You must delete any edited configuration files manually.

Next steps¶

- Continue with user guides on Universal Control Plane (UCP) and Docker Trusted Registry (DTR).

Get Docker EE for Red Hat Linux¶

There are two ways to install and upgrade :ref:`Docker Enterprise<docker-engine-enterprise> on Red Hat Enterprise Linux:

- YUM repository: Set up a Docker repository and install Docker EE from it. This is the recommended approach because installation and upgrades are managed with YUM and easier to do.

- RPM package: Download the RPM package, install it manually, and manage upgrades manually. This is useful when installing Docker EE on air-gapped systems with no access to the internet.

Prerequisites¶

This section lists what you need to consider before installing Docker EE. Items that require action are explained below.

- Use RHEL 64-bit 7.4 and higher on

x86_64. - Use storage driver

overlay2ordevicemapper(direct-lvmmode in production). - Find the URL for your Docker EE repo at Docker Hub.

- Uninstall old versions of Docker.

- Remove old Docker repos from

/etc/yum.repos.d/. - Disable SELinux on s390x (IBM Z) systems before install/upgrade.

Architectures and storage drivers¶

Docker EE supports Red Hat Enterprise Linux 64-bit,

versions 7.4 and higher running on x86_64.

On Red Hat Enterprise Linux, Docker EE supports storage drivers, overlay2

and devicemapper. In Docker EE 17.06.2-ee-5 and higher, overlay2 is the

recommended storage driver. The following limitations apply:

- OverlayFS: If

selinuxis enabled, theoverlay2storage driver is supported on RHEL 7.4 or higher. Ifselinuxis disabled,overlay2is supported on RHEL 7.2 or higher with kernel version 3.10.0-693 and higher. - Device Mapper: On production systems using

devicemapper, you must usedirect-lvmmode, which requires one or more dedicated block devices. Fast storage such as solid-state media (SSD) is recommended.

FIPS 140-2 cryptographic module support¶

Federal Information Processing Standards (FIPS) Publication 140-2 is a United States Federal security requirement for cryptographic modules.

With Docker EE Basic license for versions 18.03 and later, Docker provides FIPS 140-2 support in RHEL 7.3, 7.4 and 7.5. This includes a FIPS supported cryptographic module. If the RHEL implementation already has FIPS support enabled, FIPS is also automatically enabled in the Docker engine.

To verify the FIPS 140-2 module is enabled in the Linux kernel, confirm

the file /proc/sys/crypto/fips_enabled contains 1.

$ cat /proc/sys/crypto/fips_enabled

1

Note

FIPS is only supported in Docker Engine EE. UCP and DTR currently do not have support for FIPS 140-2.

To enable FIPS 140-2 compliance on a system that is not in FIPS 140-2 mode, do the following:

Create a file called

/etc/systemd/system/docker.service.d/fips-module.conf. Add the

following:

[Service]

Environment="DOCKER_FIPS=1"

Reload the Docker configuration to systemd.

$ sudo systemctl daemon-reload

Restart the Docker service as root.

$ sudo systemctl restart docker

To confirm Docker is running with FIPS 140-2 enabled, run the

docker info command.

docker info --format {{.SecurityOptions}}

[name=selinux name=fips]

Disabling FIPS 140-2¶

If the system has the FIPS 140-2 cryptographic module installed on the operating system, it is possible to disable FIPS 140-2 compliance.

To disable FIPS 140-2 in Docker but not the operating system, set the

value DOCKER_FIPS=0 in the

/etc/systemd/system/docker.service.d/fips-module.conf.

Reload the Docker configuration to systemd.

$ sudo systemctl daemon-reload

Restart the Docker service as root.

$ sudo systemctl restart docker

Find your Docker EE repo URL¶

To install Docker Enterprise, you will need the URL of the Docker Enterprise repository associated with your trial or subscription:

- Go to https://hub.docker.com/my-content. All of your subscriptions and trials are listed.

- Click the Setup button for Docker Enterprise Edition for Red Hat Enterprise Linux.

- Copy the URL from Copy and paste this URL to download your Edition and save it for later use.

You will use this URL in a later step to create a variable called,

DOCKERURL.

Uninstall old Docker versions¶

The Docker EE package is called docker-ee. Older

versions were called docker or docker-engine. Uninstall all

older versions and associated dependencies. The contents of

/var/lib/docker/ are preserved, including images, containers,

volumes, and networks.

$ sudo yum remove docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-selinux \

docker-engine-selinux \

docker-engine

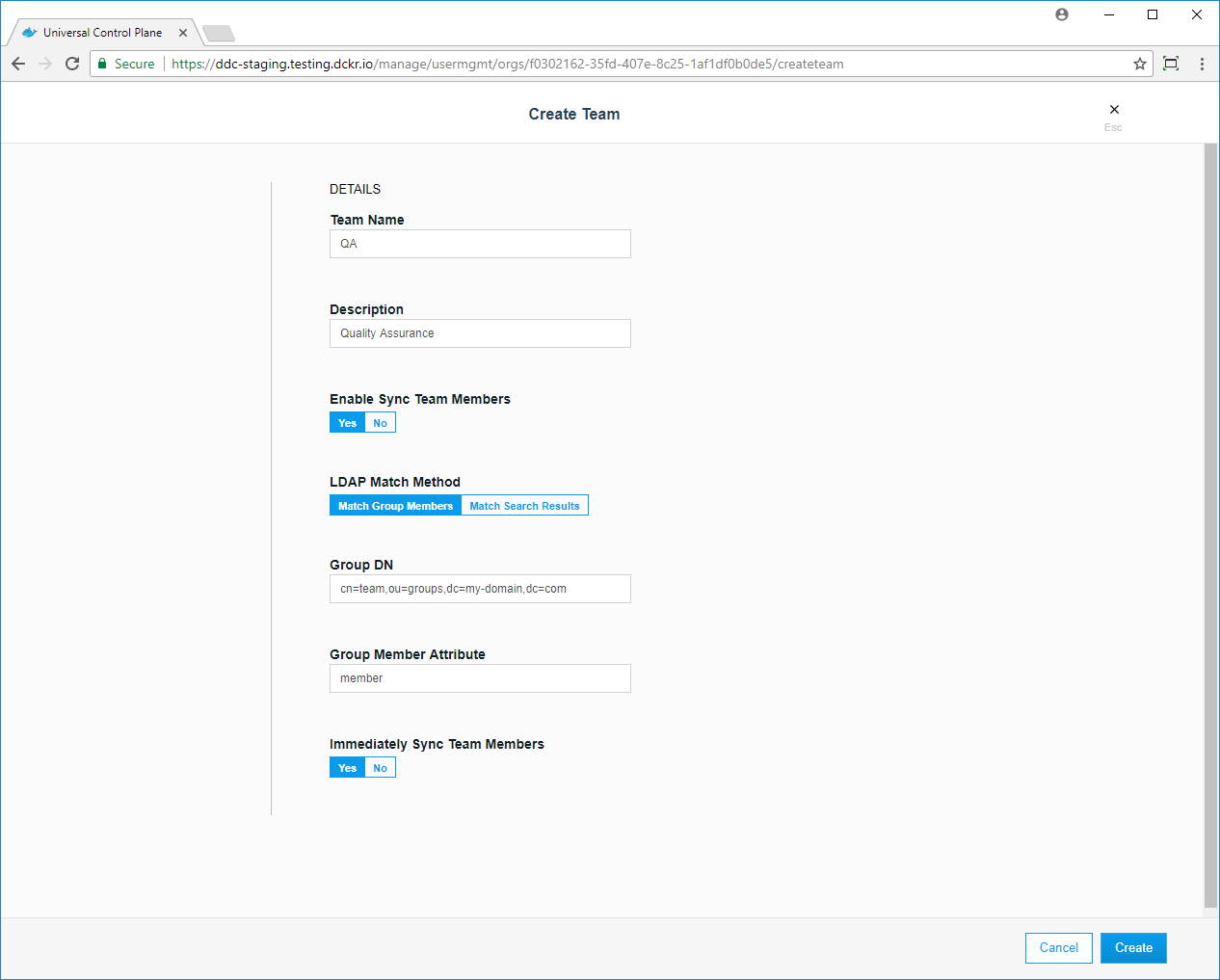

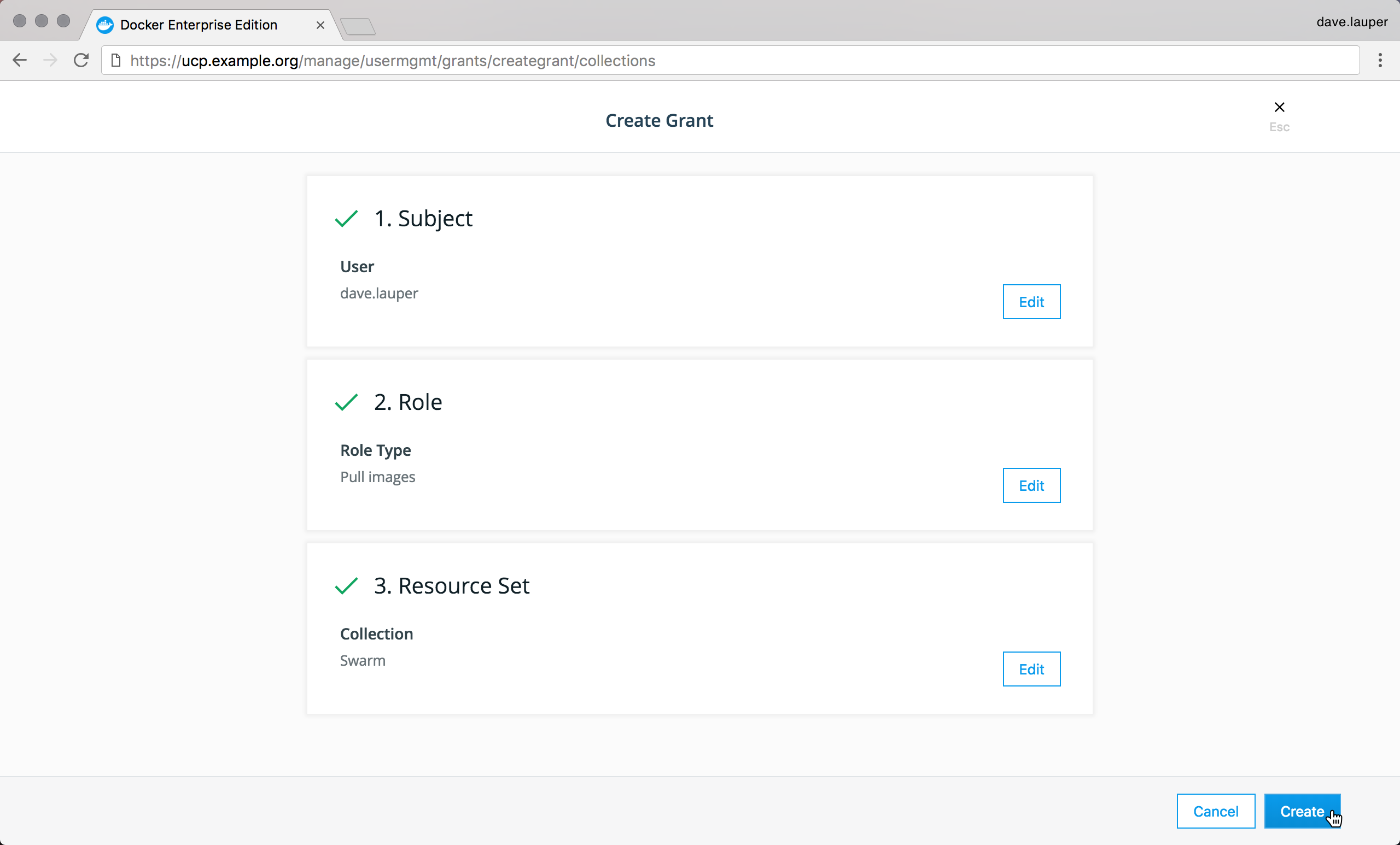

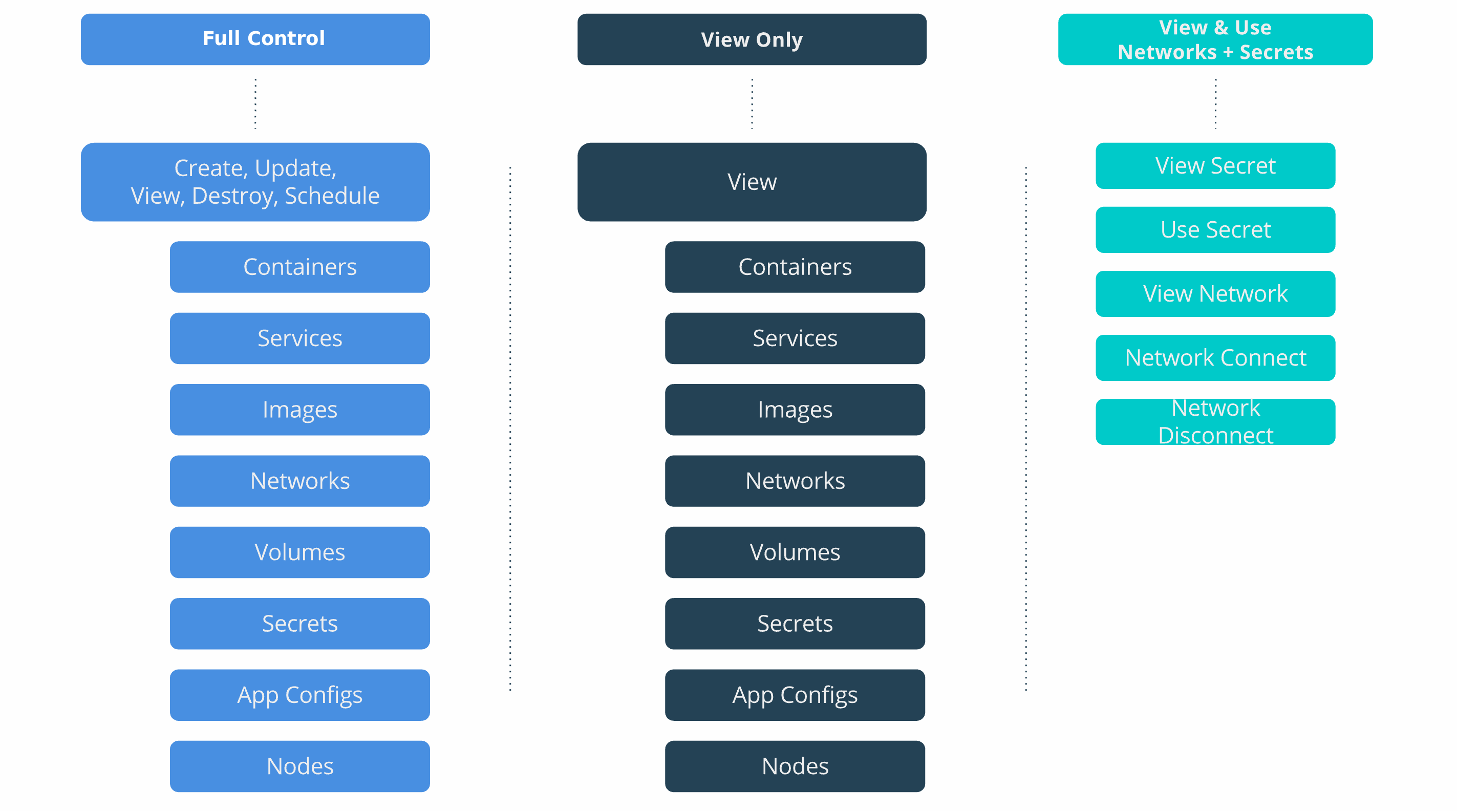

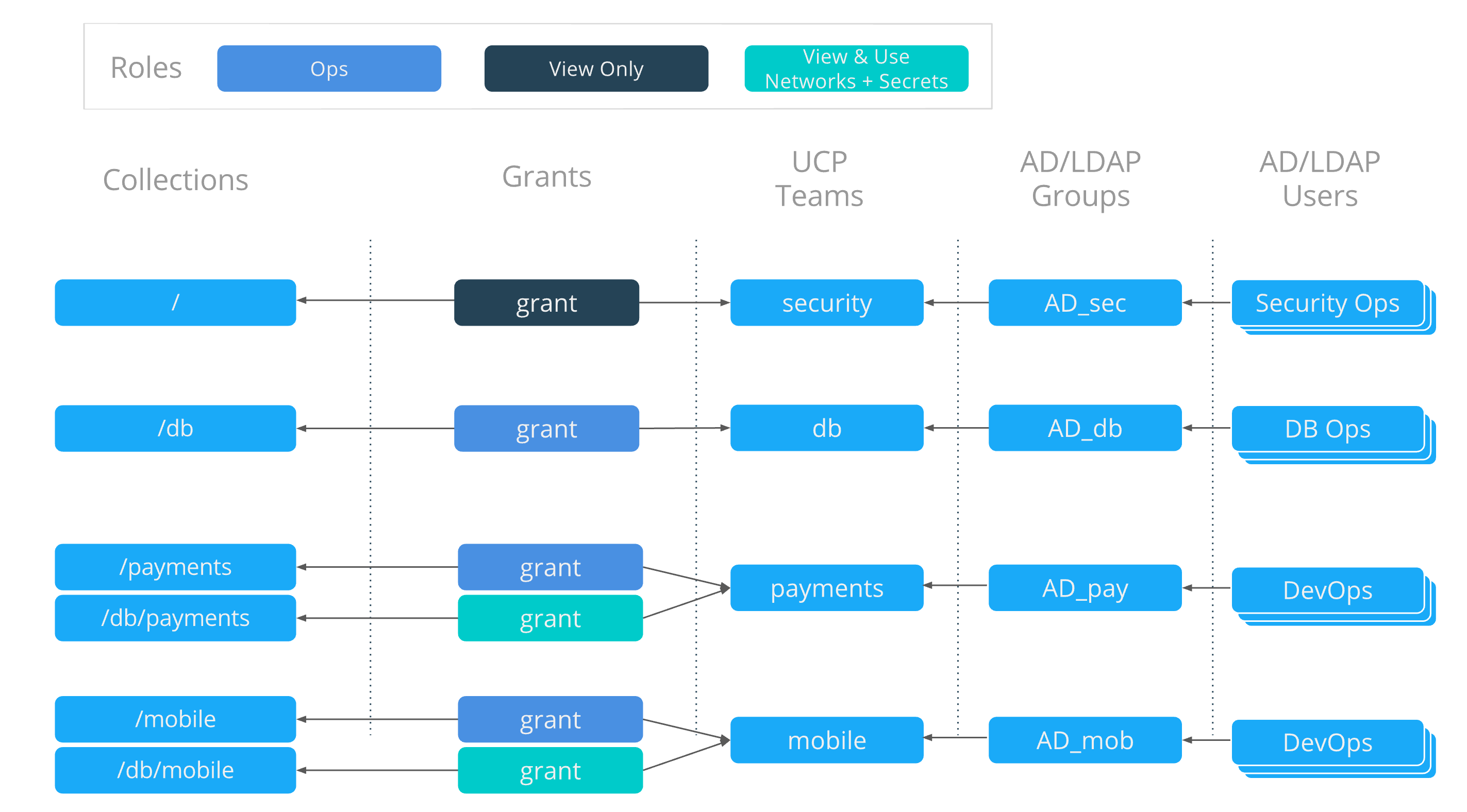

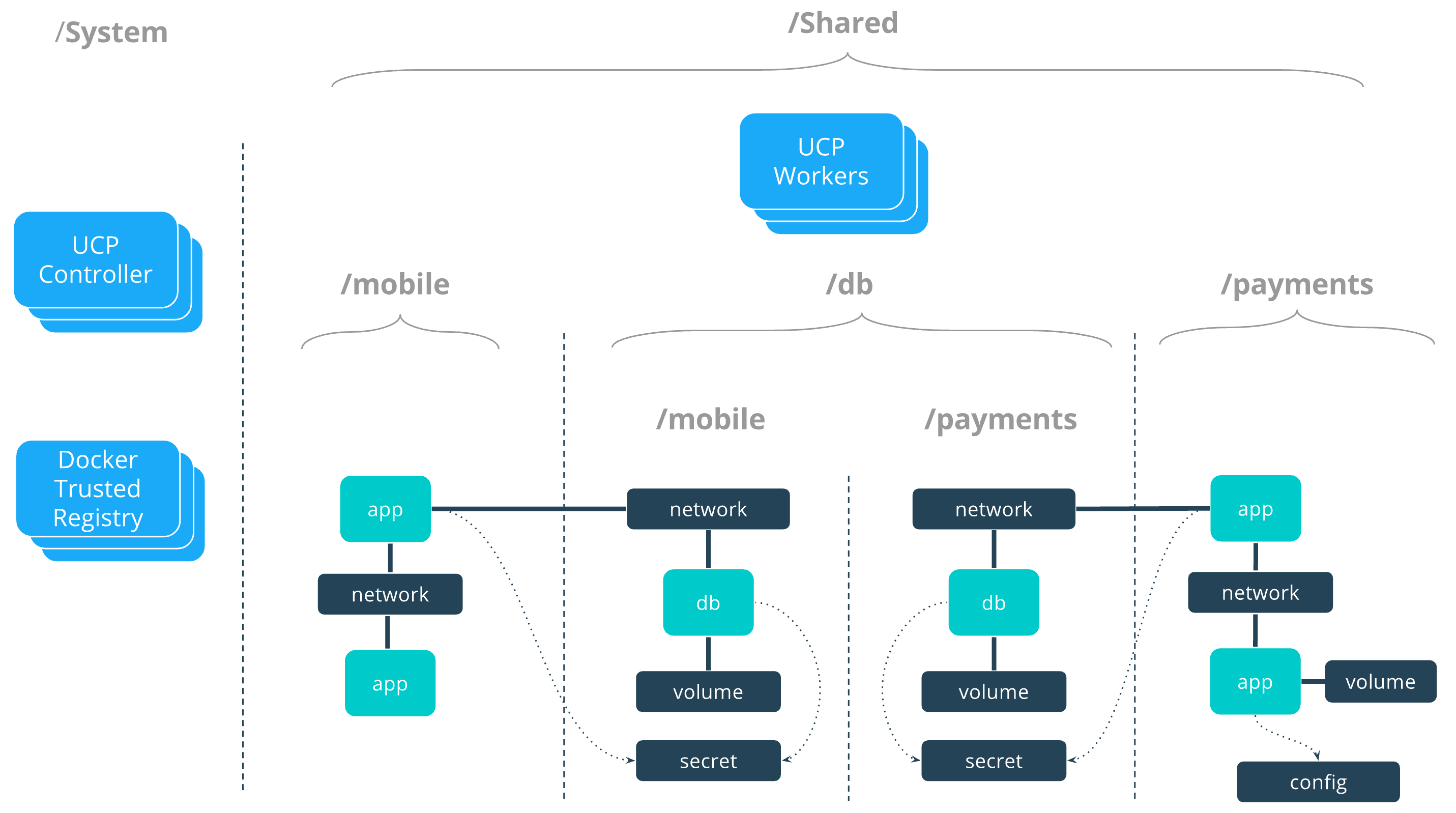

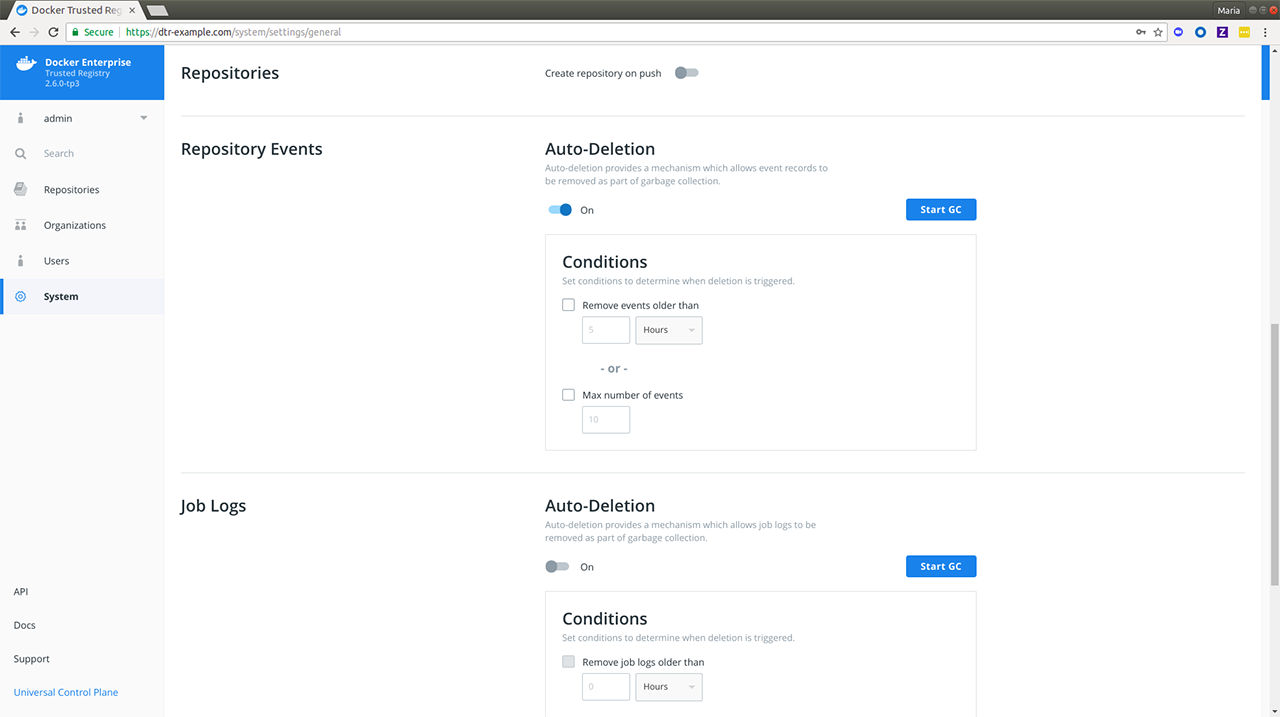

Repo install and upgrade¶