VMware vSphere 6.7 CSI Implementation Guide for Docker Enterprise


Overview

Docker Solution Briefs help you integrate Docker Enterprise with popular third-party ecosystem solutions for networking, load balancing, storage, logging and monitoring, access management, and more.

This document walks through installing the VMware vSphere CSI driver on Docker Enterprise 3.0 for use with Docker Kubernetes Service.

vSphere CSI Driver Overview

The vSphere CSI driver enables customers to address persistent storage requirements for Docker Enterprise (Kubernetes orchestration) in vSphere environments. Docker users can consume vSphere storage (vSAN, VMFS, NFS) as persistent storage for Docker Kubernetes Service.

The vSphere CSI driver is Docker Certified for use with Docker Enterprise and is available on Docker Hub.

Prerequisites

- Docker Enterprise 3.0 (tested with MCR 19.03, MKE 3.2.4, and MSR 2.7.2)

- VMware vSphere 6.7 Update 3

Installation and Configuration

On ESX

Install Docker Enterprise 3.0 (MKE and MSR) and configure worker node orchestration to Kubernetes.

High-level cluster diagram

Log in to the Docker Enterprise MKE cluster web UI with your Docker username and password.

Docker Enterprise MKE Dashboard

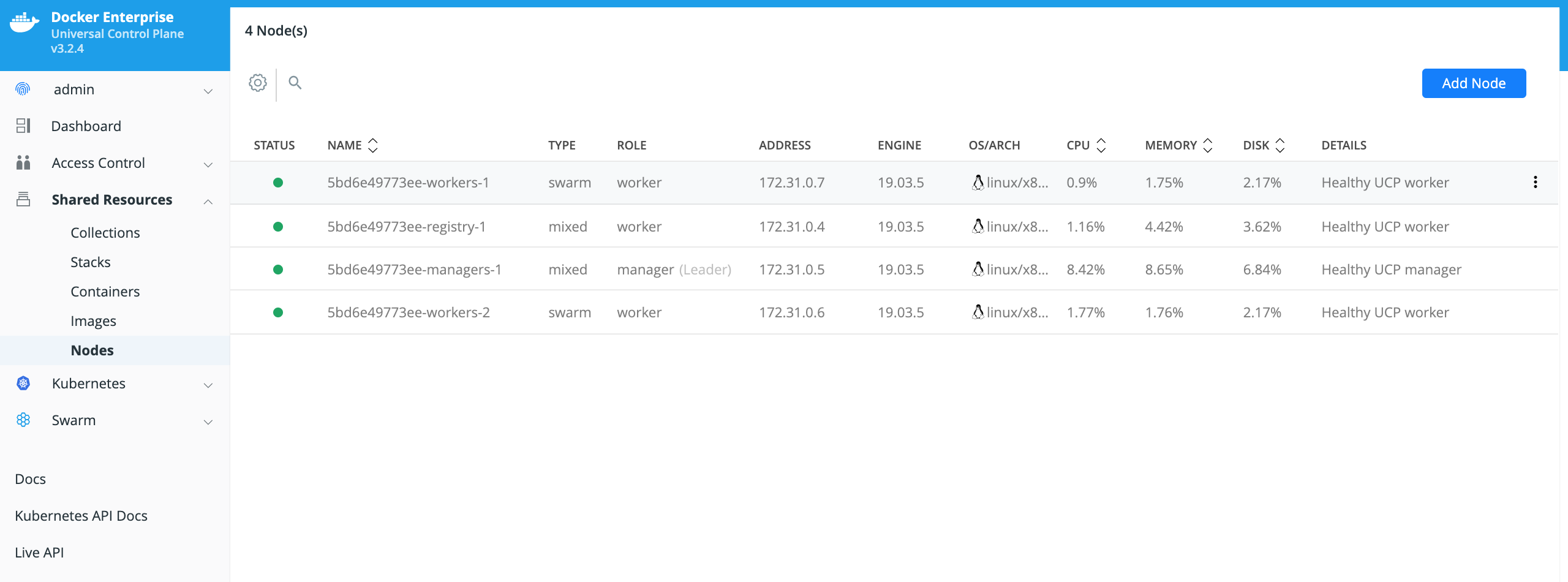

Change node orchestration from Swarm to Kubernetes. Double-click the highlighted node.

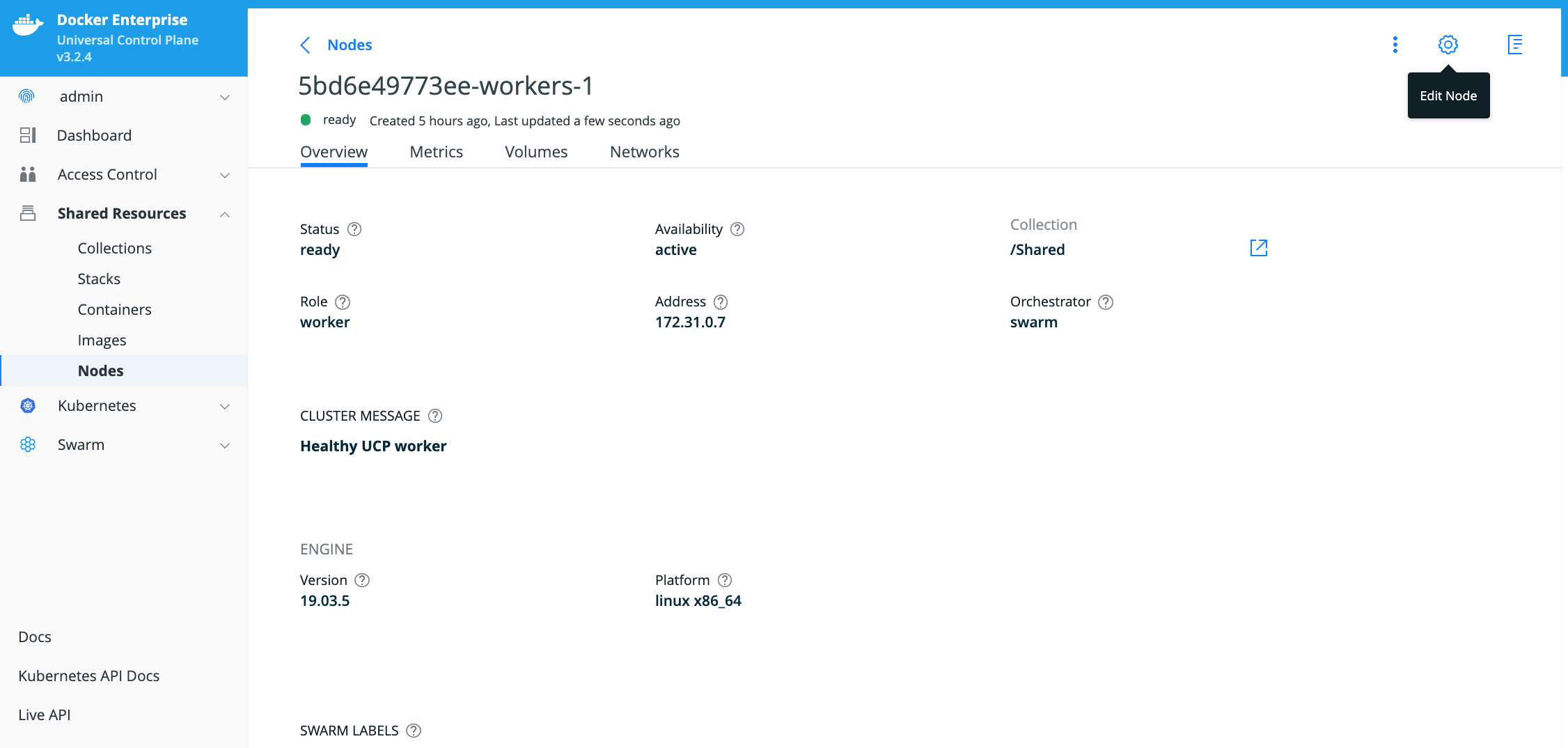

Click Edit Node in the upper right corner and change Orchestrator Type to kubernetes.

Note

Repeat similar steps for all worker nodes.

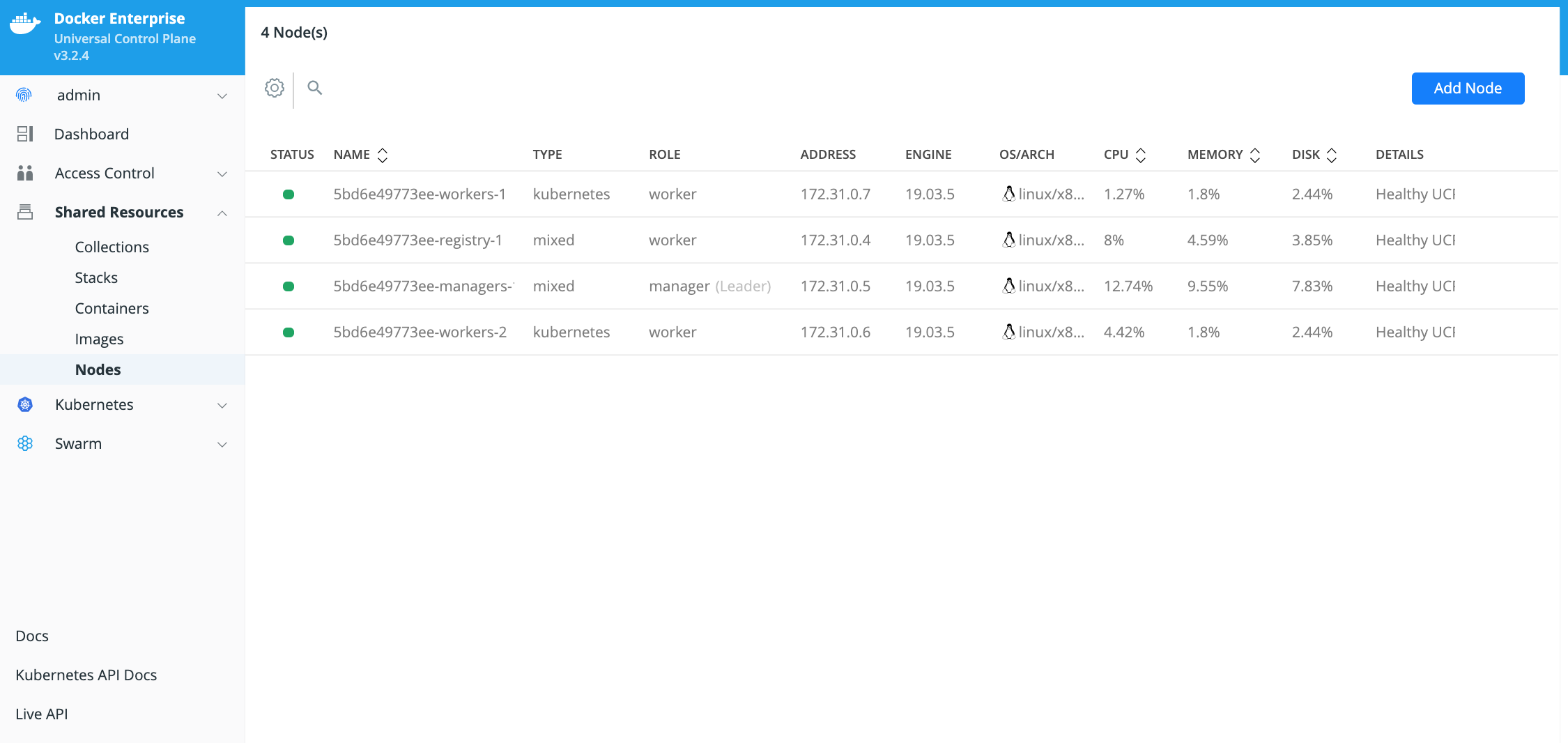

The updated node list shows Orchestrator Type as kubernetes for the worker nodes.

Generate and download a Docker Enterprise MKE client bundle from MKE Web UI

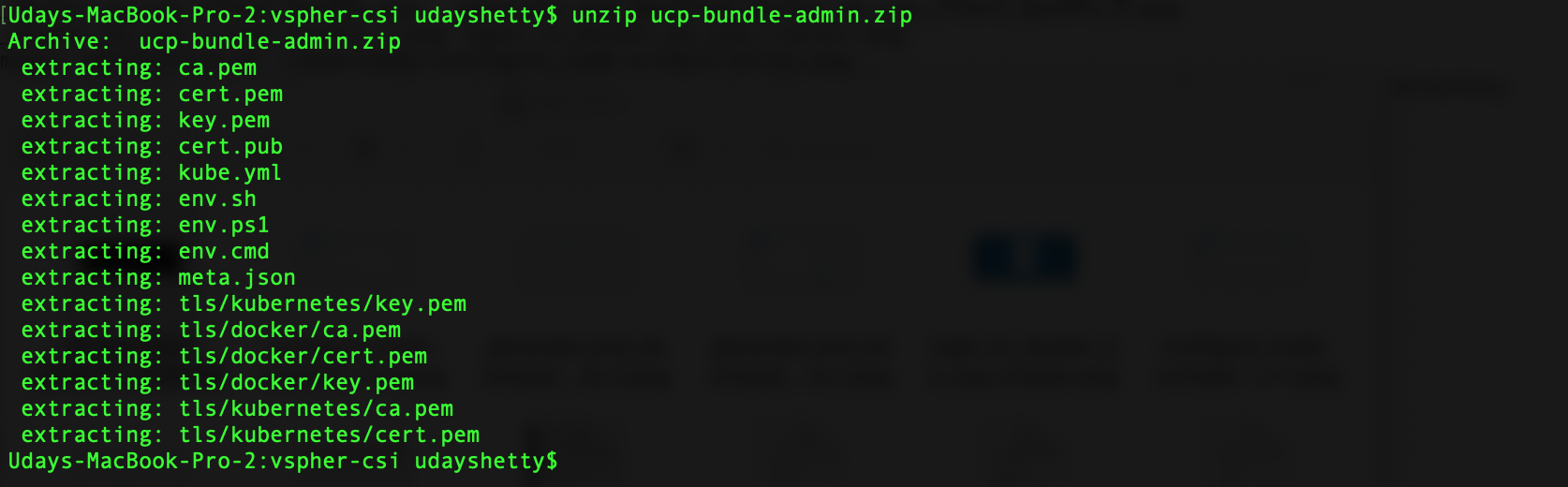

Locate the generated client bundle archive file and unzip it.

Note

Your browser saves the generated client bundle archive file to its configured download folder. If the Docker client runs on a different machine, move the archive file to that machine.

Run the following command from the Docker Enterprise client command shell to unzip the client bundle archive file.

$ unzip ucp-bundle-admin.zip

Example:

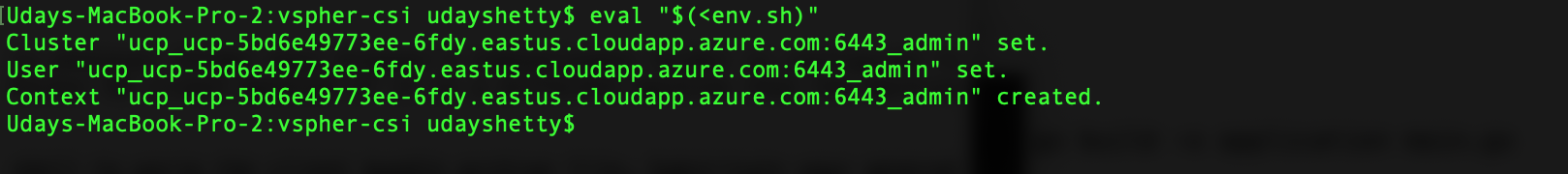

Configure Docker Enterprise client command shell.

Run the following command from the Docker Enterprise command shell.

$ eval "$(<env.sh)"
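A quick check of the shell environment can confirm that the client bundle was loaded. The sketch below assumes that env.sh exports DOCKER_HOST, DOCKER_CERT_PATH, and DOCKER_TLS_VERIFY; that variable list and the function name check_bundle_env are illustrative assumptions, so adjust them to whatever your bundle's env.sh actually sets.

```shell
# Hypothetical helper: report which client-bundle variables are missing.
# The variable names below are an assumption about what env.sh exports.
check_bundle_env() {
  missing=0
  for name in DOCKER_HOST DOCKER_CERT_PATH DOCKER_TLS_VERIFY; do
    # Look up the value of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}
```

After sourcing the bundle, running `check_bundle_env && echo "bundle environment looks configured"` prints the confirmation only when all three variables are set.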


Test the Docker Enterprise MKE client bundle and configuration.

Run the docker version command from the Docker client command shell. If the Docker client is not installed yet, download Docker for Mac or Docker for Windows on your laptop, or install Docker on your Linux client.

$ docker version --format '{{println .Server.Platform.Name}}Client: {{.Client.Version}}{{range .Server.Components}}{{println}}{{.Name}}: {{.Version}}{{end}}'


Kubernetes kubectl command

The Kubernetes kubectl command must be installed on the Docker client machine. Refer to Install and Set Up kubectl to download and install the version of the kubectl command that matches the version of Kubernetes included with the Docker Enterprise version you are running. You can run the docker version command to display the version of Kubernetes installed with Docker Enterprise.
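One way to check that a kubectl client matches the cluster is to compare the major.minor parts of the two version strings. The helper names major_minor and same_minor below are hypothetical, and the client version in the usage note is a made-up example; this is a minimal sketch, not part of the Docker tooling.

```shell
# Extract "major.minor" from a version string such as v1.14.7-docker-1.
major_minor() {
  echo "$1" | sed -E 's/^v?([0-9]+)\.([0-9]+).*/\1.\2/'
}

# Succeed when the two version strings share the same major.minor version.
same_minor() {
  [ "$(major_minor "$1")" = "$(major_minor "$2")" ]
}
```

For example, `same_minor v1.14.7-docker-1 v1.14.3 && echo "kubectl matches the cluster"` succeeds because both strings reduce to 1.14.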

Prerequisites for installing the vSphere CSI driver

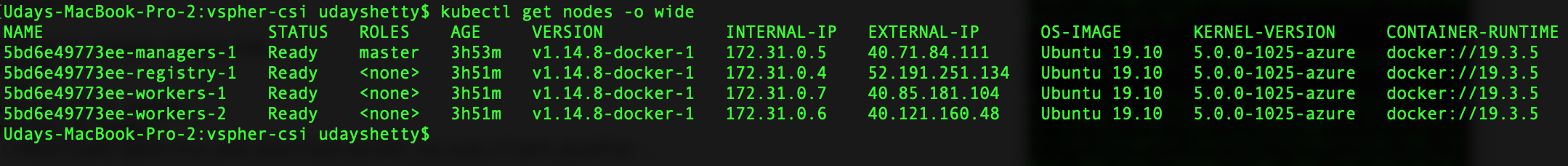

- Get cluster node information

$ kubectl get nodes -o wide


- Make sure the master node(s) carry two taints, as shown below: node-role.kubernetes.io/master=:NoSchedule and node.cloudprovider.kubernetes.io/uninitialized=true:NoSchedule

$ kubectl taint node 5bd6e49773ee-managers-1 node-role.kubernetes.io/master=:NoSchedule

$ kubectl taint node 5bd6e49773ee-managers-1 node.cloudprovider.kubernetes.io/uninitialized=true:NoSchedule

- Make sure all the worker nodes are tainted with node.cloudprovider.kubernetes.io/uninitialized=true:NoSchedule

$ kubectl taint node 5bd6e49773ee-workers-1 node.cloudprovider.kubernetes.io/uninitialized=true:NoSchedule

$ kubectl taint node 5bd6e49773ee-workers-2 node.cloudprovider.kubernetes.io/uninitialized=true:NoSchedule
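With more than a couple of workers, the per-node taint commands above can be generated in a loop. The sketch below only prints the commands so they can be reviewed first; the function name taint_workers is hypothetical, and the node names are the ones used in this guide.

```shell
# Taint that marks a node as not yet initialized by a cloud provider.
TAINT="node.cloudprovider.kubernetes.io/uninitialized=true:NoSchedule"

# Print (rather than run) the taint command for each node name given.
# Drop the leading "echo" to apply the taints for real.
taint_workers() {
  for node in "$@"; do
    echo kubectl taint node "$node" "$TAINT"
  done
}

taint_workers 5bd6e49773ee-workers-1 5bd6e49773ee-workers-2
```

Printing first is a deliberate choice: it keeps the sketch safe to run on any machine, including one without cluster access.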

Verify that the nodes are tainted:

$ kubectl describe nodes | egrep "Taints:|Name:"


Install vSphere CSI driver

Follow the instructions in the cloud-provider-vsphere tutorial: https://cloud-provider-vsphere.sigs.k8s.io/tutorials/kubernetes-on-vsphere-with-kubeadm.html#install-vsphere-container-storage-interface-driver

Note

Because MKE does not currently support the cloud-provider option, you have to rely on the vSphere Cloud Provider (VCP) instead of the Cloud Controller Manager (CCM) to discover the UUID of each VM.

After the CSI driver is installed, manually remove the uninitialized taint from all nodes.
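Removing a taint uses the same kubectl taint command with a trailing "-" after the effect. The sketch below reads node names from standard input and prints one removal command per node for review; the function name untaint_commands is hypothetical, and the sample node names are the workers used earlier in this guide.

```shell
KEY="node.cloudprovider.kubernetes.io/uninitialized"

# Read node names (one per line, with or without a "node/" prefix) from stdin
# and print the command that removes the taint; the trailing "-" means remove.
untaint_commands() {
  while IFS= read -r name; do
    [ -n "$name" ] || continue
    echo kubectl taint node "${name#node/}" "$KEY:NoSchedule-"
  done
}

printf '%s\n' node/5bd6e49773ee-workers-1 node/5bd6e49773ee-workers-2 | untaint_commands
```

Against a live cluster, the input could come from `kubectl get nodes -o name`, and the reviewed output could then be piped to `sh`.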

Certification Test

The fastest way to verify the installation is to run the cert tests at https://github.com/docker/vol-test on Linux hosts.

To install the vol-test tools:

Clone https://github.com/docker/vol-test/

$ git clone https://github.com/docker/vol-test.git

$ cd vol-test

$ cd kubernetes

Run the tests as described in the readme.

Refer to the readme for the voltest package to tailor the configuration variables for your environment. The output below is from one test run.

Note

Update storageClassName in testapp.yaml so that it names an existing storage class, which in turn references an existing storage policy in vSphere.

$ ./voltestkube -podurl http://10.156.129.220:33208

Pod voltest-0 is Running

http://10.156.129.220:33208/status

Reset test data for clean run

Shutting down container

Waiting for container restart - we wait up to 10 minutes

Should be pulling status from http://10.156.129.220:33208/status

.Get http://10.156.129.220:33208/status: dial tcp 10.156.129.220:33208: connect: connection refused

.Get http://10.156.129.220:33208/status: dial tcp 10.156.129.220:33208: connect: connection refused

Container restarted successfully, moving on

Pod node voltest-0 is docker-2

Pod was running on docker-2

Shutting down container for forced reschedule

http error okay here

Waiting for container rechedule - we wait up to 10 minutes

......Container rescheduled successfully, moving on

Pod is now running on docker-3

Going into cleanup...

Cleaning up taint on docker-2

Test results:

+-------------------------------------------------------+

Kubernetes Version: v1.14.7-docker-1 OK

Test Pod Existence: Found pod voltest-0 in namespace default OK

Confirm Running Pod: Pod running OK

Initial Textfile Content Confirmation: Textcheck passes as expected OK

Initial Binary Content Confirmation: Bincheck passes as expected OK

Post-restart Textfile Content Confirmation: Textcheck passes as expected OK

Post-restart Binaryfile Content Confirmation: Bincheck passes as expected OK

Rescheduled Textfile Content Confirmation: Textcheck passes as expected OK

Rescheduled Binaryfile Content Confirmation: Bincheck passes as expected OK

All tests passed.
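The storage class that testapp.yaml refers to through storageClassName has to exist before the test runs. The sketch below writes a minimal StorageClass manifest for the vSphere CSI driver. The class name vsphere-sc and the policy name example-policy are hypothetical placeholders, and the provisioner name and the storagepolicyname parameter should be verified against the driver documentation for your release.

```shell
# Write an example StorageClass manifest; the name and policy are placeholders.
cat > vsphere-sc.yaml <<'EOF'
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: vsphere-sc                      # hypothetical; use this value as storageClassName in testapp.yaml
provisioner: csi.vsphere.vmware.com
parameters:
  storagepolicyname: "example-policy"   # hypothetical; must match a storage policy defined in vCenter
EOF

echo "wrote vsphere-sc.yaml; apply it with: kubectl apply -f vsphere-sc.yaml"
```

Writing the manifest to a file rather than piping it straight into kubectl leaves room to review and edit the placeholders first.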

Monitoring and Troubleshooting

Monitor the VMware driver container on each node and make sure it is active and running.

$ kubectl -n kube-system get pod -l app=vsphere-csi-node

Example Output:

$ kubectl -n kube-system get pod -l app=vsphere-csi-node

NAME READY STATUS RESTARTS AGE

vsphere-csi-node-8wm48 3/3 Running 0 14d

vsphere-csi-node-tstqr 3/3 Running 0 14d

vsphere-csi-node-wnn7r 3/3 Running 0 14d
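For a scripted health check, the pod listing can be filtered for anything that is not in the Running state. The sketch below assumes the default column layout shown above (NAME, READY, STATUS, RESTARTS, AGE); the function name not_running is hypothetical.

```shell
# Read `kubectl get pod` output on stdin and print every pod whose STATUS
# column is not "Running". The exit status is non-zero if any such pod exists.
not_running() {
  awk 'NR > 1 && $3 != "Running" { print $1 ": " $3; bad = 1 } END { exit bad }'
}
```

Typical use would be `kubectl -n kube-system get pod -l app=vsphere-csi-node | not_running`. The filter looks only at the STATUS column, so a pod that is Running with fewer than all containers ready (for example 2/3) still passes.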

See also