Neutron VLAN tenant networks with network nodes (no DVR)

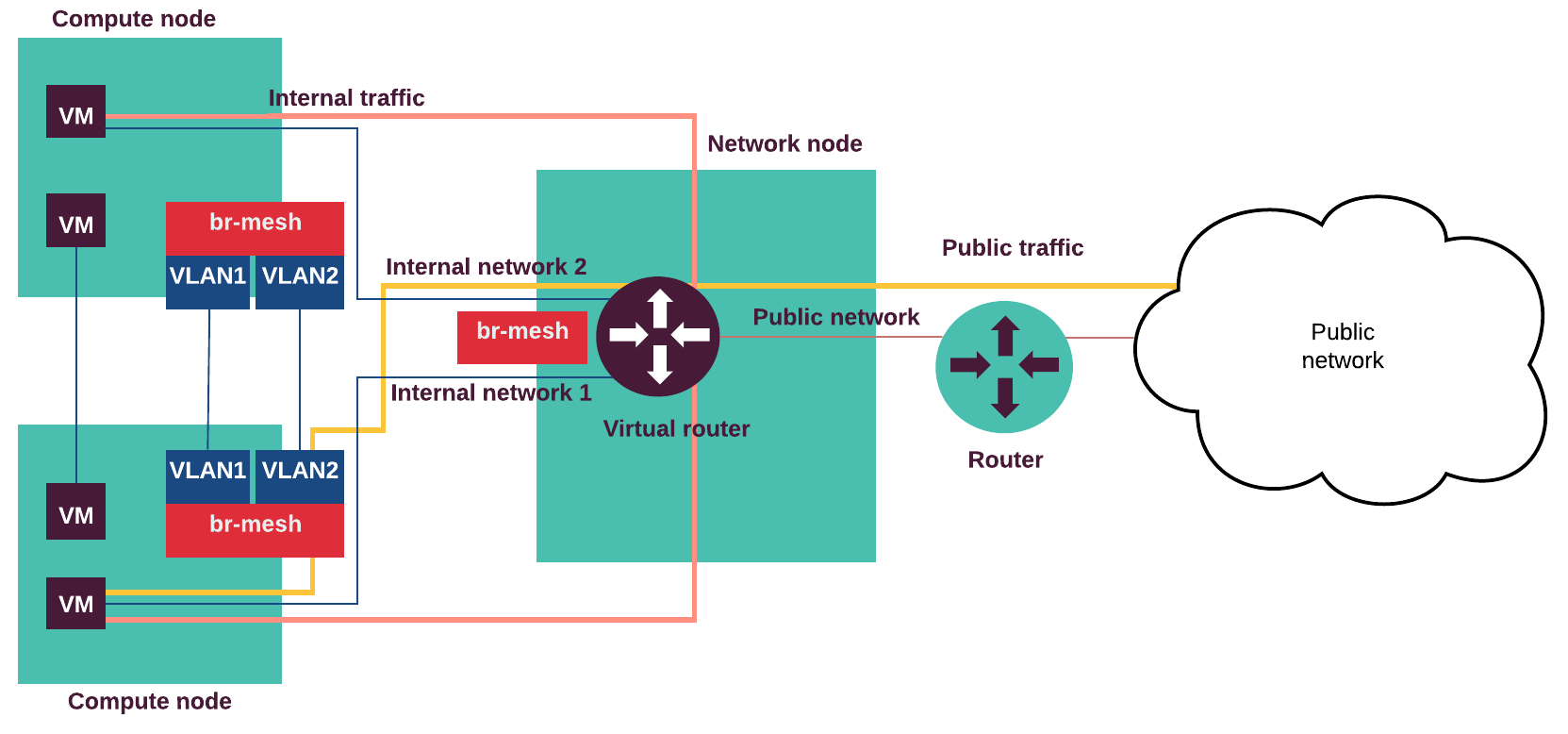

If you configure your network with Neutron OVS VLAN tenant networks with network nodes and without a Distributed Virtual Router (DVR) on the compute nodes, all routing happens on the network nodes.
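As a hedged illustration, the snippet below renders the upstream Neutron options that usually keep routing centralized on the network nodes: `router_distributed` in `neutron.conf`, `agent_mode = legacy` in `l3_agent.ini`, and `enable_distributed_routing` in the Open vSwitch agent configuration. The `render_ini` helper and the `NO_DVR_SETTINGS` dictionary are hypothetical, and the files a given deployment actually generates may differ.

```python
from __future__ import annotations

import configparser
import io


def render_ini(sections: dict[str, dict[str, str]]) -> str:
    """Render a {section: {option: value}} mapping as INI text."""
    parser = configparser.ConfigParser()
    parser.read_dict(sections)  # "DEFAULT" is routed to the defaults section
    buf = io.StringIO()
    parser.write(buf)
    return buf.getvalue()


# Hypothetical summary of the options that keep routing on the network nodes
# (legacy, centralized virtual routers instead of DVR).
NO_DVR_SETTINGS = {
    "neutron.conf": {"DEFAULT": {"router_distributed": "False"}},
    "l3_agent.ini": {"DEFAULT": {"agent_mode": "legacy"}},
    "openvswitch_agent.ini": {"agent": {"enable_distributed_routing": "False"}},
}


if __name__ == "__main__":
    for filename, sections in NO_DVR_SETTINGS.items():
        print(f"# {filename}")
        print(render_ini(sections))
```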

The following diagram displays internal and external traffic flow:

The internal traffic from a tenant virtual machine located in the virtual Internal network 1 goes to another virtual machine located in the Internal network 2 through the virtual routers on the network node.

The external traffic from a virtual machine goes through the Internal network 1 and the tenant VLAN (br-prv) to the virtual router on the network node, and then through the Public network to the outside network.
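The two flows above can be modelled as hop lists. This is a simplified sketch rather than Neutron code: the `Vm` class, the helper functions, and host names such as `cmp01` are hypothetical. It highlights one consequence of the design: without DVR, traffic between VMs on different tenant networks always passes through the network node, even when both VMs run on the same compute node.

```python
from __future__ import annotations

from dataclasses import dataclass

# Without DVR, every virtual router lives on the network node.
NETWORK_NODE = "network node (virtual router)"


@dataclass(frozen=True)
class Vm:
    name: str
    network: str       # tenant network the VM is attached to
    compute_node: str  # hypervisor that hosts the VM (hypothetical names)


def _dedupe(hops: list[str]) -> list[str]:
    """Drop consecutive duplicate hops (e.g. both VMs on one compute node)."""
    return [h for i, h in enumerate(hops) if i == 0 or h != hops[i - 1]]


def internal_path(src: Vm, dst: Vm) -> list[str]:
    """Hops for VM-to-VM traffic."""
    if src.network == dst.network:
        # Same tenant network: switched at L2 over the tenant VLAN, no routing.
        return _dedupe([src.compute_node, dst.compute_node])
    # Different tenant networks: routed by the virtual router on the network
    # node, even when both VMs share a compute node.
    return _dedupe([src.compute_node, NETWORK_NODE, dst.compute_node])


def external_path(src: Vm) -> list[str]:
    """Hops for traffic from a VM to the outside network."""
    return [src.compute_node, NETWORK_NODE, "Public network", "outside network"]


if __name__ == "__main__":
    vm1 = Vm("vm1", "Internal network 1", "cmp01")
    vm2 = Vm("vm2", "Internal network 2", "cmp01")
    print(internal_path(vm1, vm2))  # ['cmp01', 'network node (virtual router)', 'cmp01']
    print(external_path(vm1))
```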

The network node terminates the private VLANs and sends traffic to the external provider VLAN networks. Therefore, all tagged interfaces must be configured directly in Neutron OVS as internal ports, without Linux bridges.
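A minimal sketch of what a tagged internal port means in practice: the function below builds the `ovs-vsctl` command that adds a VLAN-tagged port of type `internal` to an OVS bridge, plus the `ip` commands that give it an address. The bridge (`br-floating`), VLAN ID (101), and address used in the example are assumptions, since the text does not state them.

```python
from __future__ import annotations

import shlex


def tagged_internal_port(bridge: str, port: str, vlan_id: int) -> list[str]:
    """ovs-vsctl command adding a VLAN-tagged internal port to an OVS bridge.

    An internal port appears in the host as a normal network device, so it can
    hold an IP address without a Linux bridge or VLAN sub-interface.
    """
    if not 1 <= vlan_id <= 4094:
        raise ValueError(f"VLAN ID out of range: {vlan_id}")
    return [
        "ovs-vsctl", "--may-exist", "add-port", bridge, port, f"tag={vlan_id}",
        "--", "set", "Interface", port, "type=internal",
    ]


def assign_address(port: str, cidr: str) -> list[list[str]]:
    """iproute2 commands that bring the internal port up with an address."""
    return [
        ["ip", "addr", "add", cidr, "dev", port],
        ["ip", "link", "set", port, "up"],
    ]


if __name__ == "__main__":
    # Hypothetical values: the bridge, VLAN ID and address of br-mgm are not given.
    cmds = [tagged_internal_port("br-floating", "br-mgm", 101)]
    cmds += assign_address("br-mgm", "10.0.1.10/24")
    for cmd in cmds:
        print(shlex.join(cmd))  # display only
```

Returning argument lists instead of shell strings means the commands can be passed straight to `subprocess.run` without `shell=True`; `shlex.join` is used only for display.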

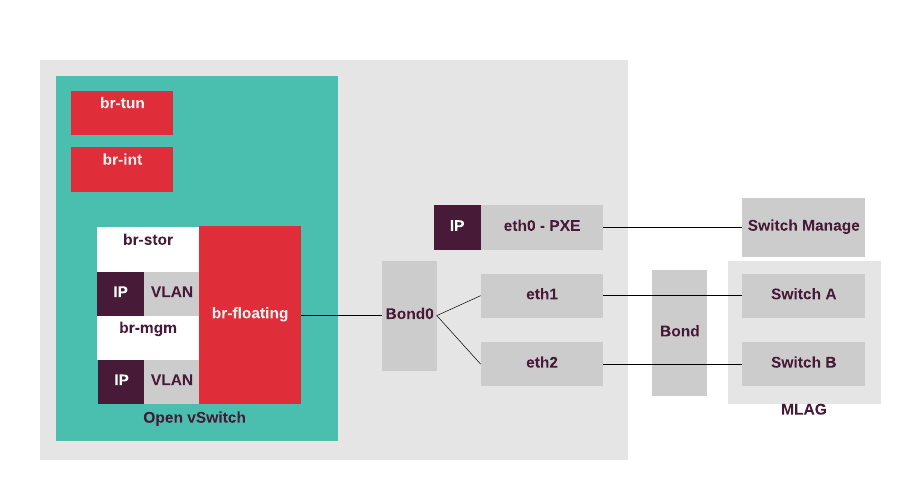

The bond0 interface is added to br-floating, which is mapped as physnet1 in the Neutron provider networks. br-floating is connected through a patch interface to br-prv, the Neutron OVS bridge that is mapped as physnet2 for VLAN tenant network traffic. br-mgm is an OVS internal port with a VLAN tag and an IP address. Because the network nodes do not need to handle storage traffic, all sub-interfaces can be created in Neutron OVS, which enables creation of VLAN provider networks through the Neutron API.
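The bridge layout above can be sketched as three pieces: the `bridge_mappings` value for the Neutron Open vSwitch agent, an `ovs-vsctl` patch-port pair between br-floating and br-prv, and a request body for the Neutron networks API (`POST /v2.0/networks`) that creates a VLAN provider network on physnet1. This is an illustration under assumptions: the patch port names, the network name, and the VLAN ID are hypothetical, and the actual deployment tooling may create the patch differently.

```python
from __future__ import annotations

import json

# Physical network names mapped to OVS bridges, as described in the text.
BRIDGE_MAPPINGS = {"physnet1": "br-floating", "physnet2": "br-prv"}


def bridge_mappings_value(mappings: dict[str, str]) -> str:
    """Value for the OVS agent `bridge_mappings` option ("physnet:bridge,...")."""
    return ",".join(f"{physnet}:{bridge}" for physnet, bridge in mappings.items())


def patch_pair(br_a: str, port_a: str, br_b: str, port_b: str) -> list[list[str]]:
    """ovs-vsctl commands that connect two bridges with a pair of patch ports."""
    return [
        ["ovs-vsctl", "--may-exist", "add-port", br_a, port_a,
         "--", "set", "Interface", port_a, "type=patch", f"options:peer={port_b}"],
        ["ovs-vsctl", "--may-exist", "add-port", br_b, port_b,
         "--", "set", "Interface", port_b, "type=patch", f"options:peer={port_a}"],
    ]


def vlan_provider_network(name: str, physnet: str, vlan_id: int,
                          external: bool = False) -> dict:
    """Request body for POST /v2.0/networks creating a VLAN provider network."""
    return {
        "network": {
            "name": name,
            "provider:network_type": "vlan",
            "provider:physical_network": physnet,
            "provider:segmentation_id": vlan_id,
            "router:external": external,
        }
    }


if __name__ == "__main__":
    print("bridge_mappings =", bridge_mappings_value(BRIDGE_MAPPINGS))
    # Hypothetical patch port names.
    for cmd in patch_pair("br-floating", "floating-prv", "br-prv", "prv-floating"):
        print(" ".join(cmd))
    # Hypothetical network name and VLAN ID on the physnet1 provider bridge.
    body = vlan_provider_network("provider-vlan-100", "physnet1", 100, external=True)
    print(json.dumps(body, indent=2))
```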

The following diagram displays the compute node configuration for the use case with Neutron VLAN tenant networks and external access configured on the network node only: