Docker Enterprise Reference Architectures master documentation

Warning

Mirantis stopped maintaining this documentation set as of 2021-07-21, in correlation with the End of Life date for MKE 3.2.x and MSR 2.7.x. The company continues to support MCR 19.03.x and its documentation.

For the latest MKE, MSR, and MCR product documentation, refer to:

Docker Enterprise Reference Architectures¶

Warning

Mirantis stopped maintaining this documentation set as of 2021-07-21, in correlation with the End of Life date for MKE 3.2.x and MSR 2.7.x. The company continues to support MCR 19.03.x and its documentation.

For the latest MKE, MSR, and MCR product documentation, refer to:

Introduction¶

This page provides expert advice and guidance from top Docker practitioners. Get design considerations, best practices, and decision support for architecting and building your environment.

Warning

Mirantis stopped maintaining this documentation set as of 2021-07-21, in correlation with the End of Life date for MKE 3.2.x and MSR 2.7.x. The company continues to support MCR 19.03.x and its documentation.

For the latest MKE, MSR, and MCR product documentation, refer to:

Deploy and Manage¶

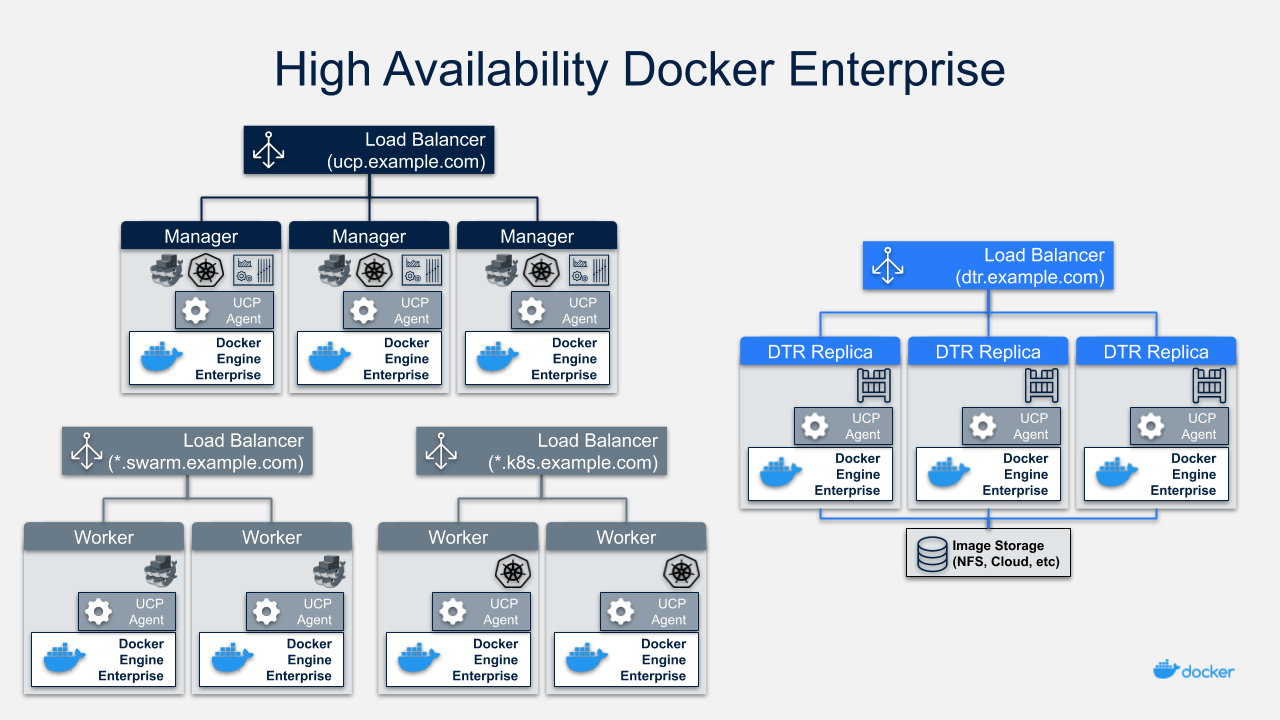

Using Docker Enterprise in high availability mode is recommended for minimal downtime. Learn best practices for deploying and managing Docker Enterprise in a standard, production level environment.

Running Docker Enterprise at Scale¶

Introduction¶

This reference architecture will help you plan large-scale Docker Enterprise deployments. It covers both the core Docker Enterprise platform, Mirantis Kubernetes Engine, and Mirantis Secure Registry. Use this guide to help size hardware and infrastructure for your Docker Enterprise deployments and to determine optimal configuration for your specific workloads.

What You Will Learn¶

For Docker Enterprise, Mirantis Kubernetes Engine, and Mirantis Secure Registry, the guide covers:

- What use case parameters are likely to drive scale requirements

- Known scale limits based on real-world tests

- Best practices to ensure good performance and future headroom for growth

Docker Enterprise and Mirantis Kubernetes Engine¶

This section covers configuration of the base Docker Enterprise platform and Mirantis Kubernetes Engine for optimal performance and growth potential.

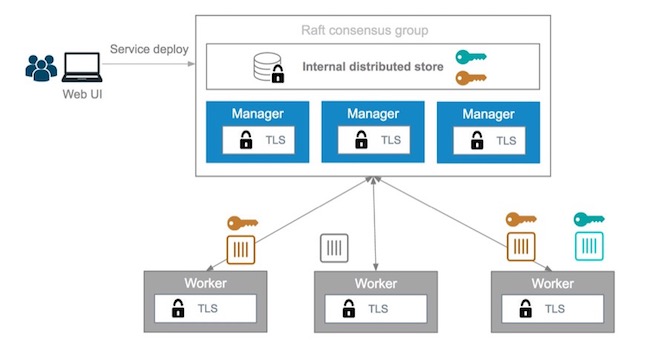

Number of Managers¶

The recommended number of managers for a production cluster is 3 or 5. A 3-manager cluster can tolerate the loss of one manager, and a 5-manager cluster can tolerate two instantaneous manager failures. Clusters with more managers can tolerate more manager failures, but adding more managers also increases the overhead of maintaining and committing cluster state in the Docker Swarm Raft quorum. In some circumstances, clusters with more managers (for example 5 or 7) may be slower (in terms of cluster-update latency and throughput) than a cluster with 3 managers and otherwise similar specs.

In general, increasing the manager count does not make cluster operations faster (it may make them slower in some circumstances), does not increase the max cluster update operation throughput, and does not increase the total number of worker nodes that the cluster can manage.

Even when managers are down and there’s no quorum, services and tasks on the cluster keep running and are steady-state stable (although updating cluster state is not possible without quorum). For that reason, Docker recommends investing in quickly recovering from individual manager failures (e.g. automation/scripts for quickly adding replacement managers) rather than planning clusters with a large number of managers.

1-manager clusters should only be used for testing and experimentation since loss of the manager will cause cluster loss.

See also

Check out the documentation on how manager and worker nodes work.

Manager Size and Type¶

Managers in a production cluster should ideally have at least 16GB of RAM and 4 vCPUs. Testing done by Docker has shown that managers with 16GB RAM are not memory constrained, even in clusters with 100s of workers and many services, networks, and other metadata.

Managers in production clusters should always use SSDs for the

/var/lib/docker/swarm mount point. Docker stores swarm cluster state

in this directory and will read and write many small updates as cluster

state is updated. SSDs ensure that updates can be committed with minimal

latency. SSDs are also recommended for clusters used for test and

experimentation to ensure good performance.

Increasing CPU speed and count and improving network latency between manager nodes will also improve cluster performance.

Worker Nodes Size and Count¶

For worker nodes, the overhead of Docker components and agents is not large — typically less than 1GB of memory. Deciding worker size and count can be done similar to how you currently size app or VM environments. For example, you can determine the app memory working set under load and factor in how many replicas you want for each app (for durability in case of task failure and/or for throughput). That will give you an idea of the total memory required across workers in the cluster.

Remember that Docker Swarm automatically reschedules tasks in case of worker node failure (or if you drain a node for upgrade or servicing), so don’t forget to leave headroom to handle tasks being rebalanced to other nodes.

Also remember that, unlike virtual machines, Docker containers add little or no memory or CPU overhead compared to running an app outside of a container. If you’re moving apps from individual VMs into containers, or if you’re consolidating many apps into a Docker Enterprise cluster, you should be able to do so with less resources than what’s currently used.

Segmenting Tasks and Limiting Resource Use¶

On production clusters, never run workloads on manager nodes. This is a configurable manager node setting in Mirantis Kubernetes Engine (MKE).

If the tasks and services deployed on your cluster have very different resource profiles and if you want to use different node types for different tasks (for example with different disk, memory, or CPU characteristics) you can use node labels and service constraints to control where Swarm schedules tasks for a particular service.

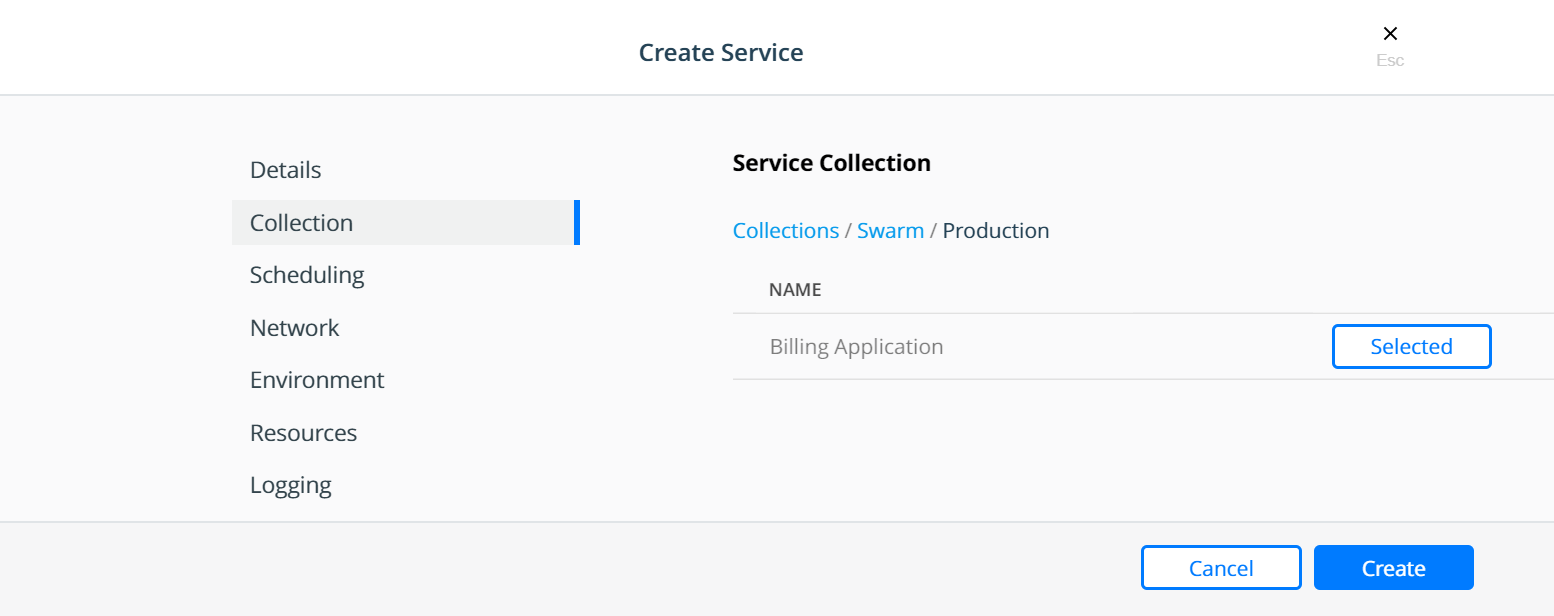

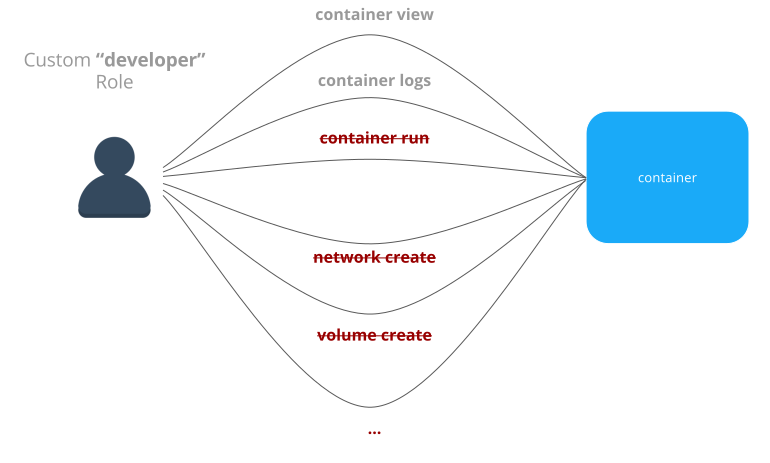

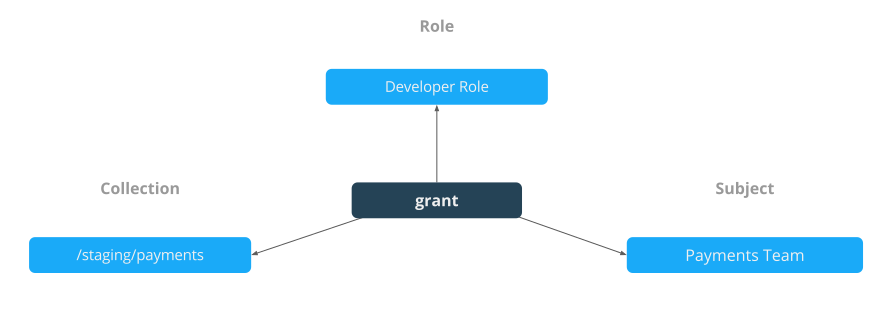

You can also put nodes into collections and control access based on user accounts and teams. This is useful for isolating tasks managed by teams or individuals that are prone to deploying apps that consume many resources or exhibit other noisy neighbor characteristics that negatively affect tasks run by other teams. See the RBAC Knowledge Base article for examples of how to structure teams and projects with Docker Enterprise Edition.

Resource Constraints¶

Docker Enterprise has support for applying resource limits to containers and

service tasks. Docker recommends using the --reserve-memory=<value>

and --limit-memory=<value> parameters when creating services. These

let Docker Enterprise better pack tasks on worker nodes based on expected

memory consumption.

Further, it might be a good idea to allocate a global (1 instance per node) “ghost” service that reserves a chunk (for example 2GB) of memory on each node that can be used by non-Docker system services. This is relevant because Docker Swarm does not currently account for worker node memory consumed by workloads not managed by Docker:

docker service create \

--name system-reservation \

--reserve-memory 2G \

--limit-memory 2G \

--reserve-cpu 1 \

--mode global \

nginx:latest

(nginx does not actually do any work in this service. Any small

image that does not consume a lot of memory or CPU can be used instead

of nginx).

See also

Check out the docs on container resource constraints and reserving memory or CPUs for a service.

Disk Space¶

For production clusters, there are few factors that drive worker disk space use that you should look out for:

- In-use Docker container images on worker nodes

- Local Docker volumes created for containers

- Container logs stored on worker nodes

- Mirantis Container Runtime logs stored on worker nodes

- Temporary scratch data written by containers

Container Images on Worker Nodes¶

To determine how much space to allocate for in-use images, try putting

some of your apps in containers and see how big the resulting images

are. Note that Docker images consist of layers, and if the same layer is

used by multiple containers (as is common of OS layers like ubuntu

or language framework layers like openjdk), only one copy of that

layer is stored and used on any given node or Mirantis Secure Registry.

Layer sharing also means that deploying a new version of your app

typically only consumes a relatively small amount of extra space on

nodes (since only the top layers that hold your app are changed).

Note that Docker Windows container images often end up being somewhat larger than Linux ones.

To keep in-use container image storage in check, try to ensure that app images derive from common base images. Also consider running regular scripts or cron-jobs to prune unused images, especially if nodes handle many image update (e.g. build servers or test systems that see more frequent deploys). See the docs on image-pruning for details.

Logs¶

For production clusters, Docker recommends aggregating container logs

using a logging driver or other third party service. Only the

json-file (and possibly journald) log drivers cause container

logs to accumulate on nodes, and in that case, care should be taken to

rotate or remove old container logs. See Docker Logging Design and Best Practices

for details.

Mirantis Container Runtime logs are stored on worker and manager nodes. The

amount of Mirantis Container Runtime logs generated varies with workload and

engine settings. For example, debug log level causes more logs to be

written. Mirantis Container Runtime logs should be managed (compacted and

eventually deleted) with a utility like logrotate.

Overlay Networking and Routing Mesh¶

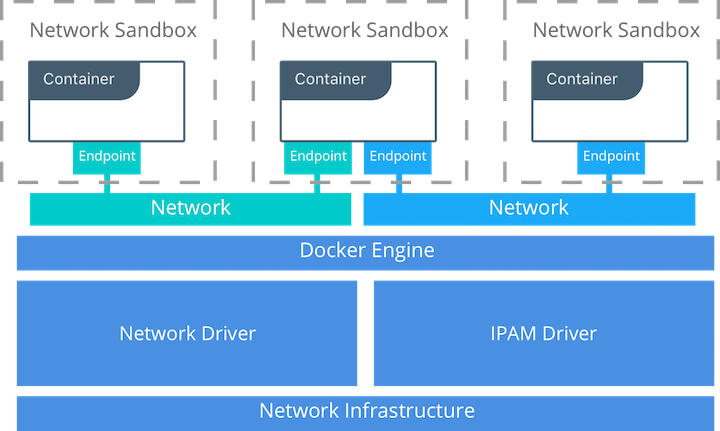

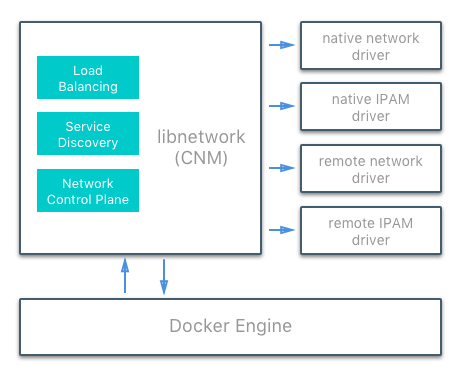

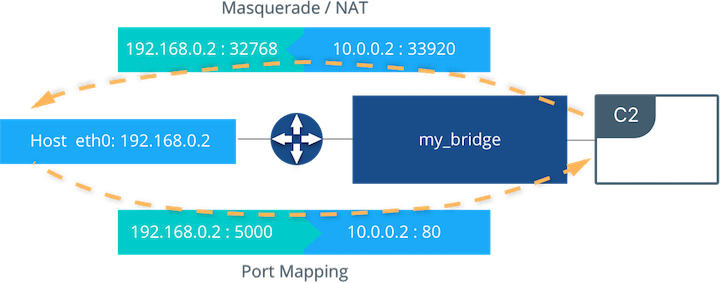

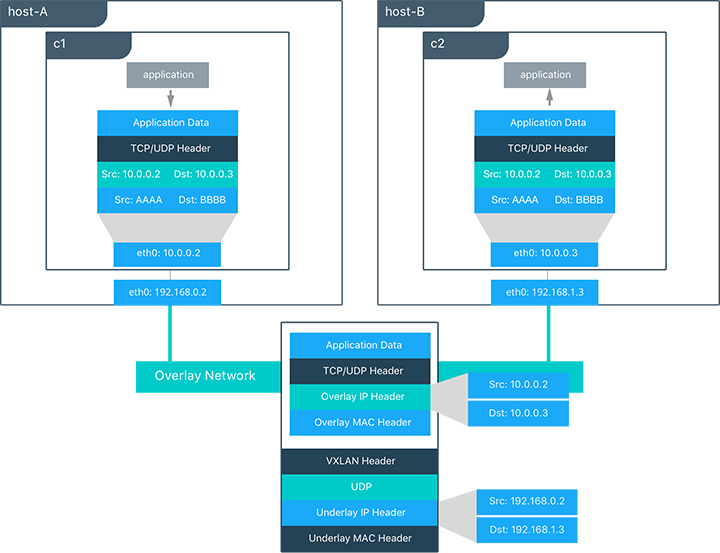

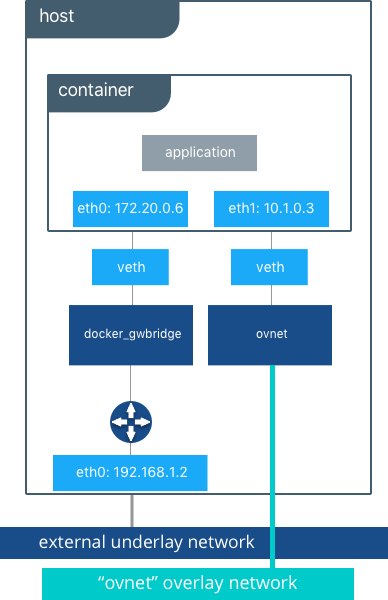

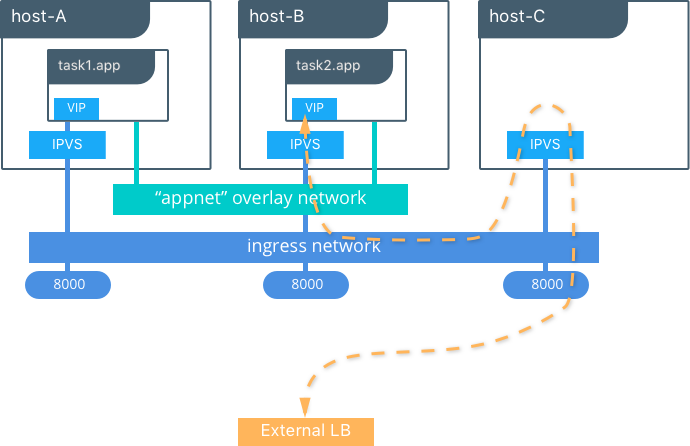

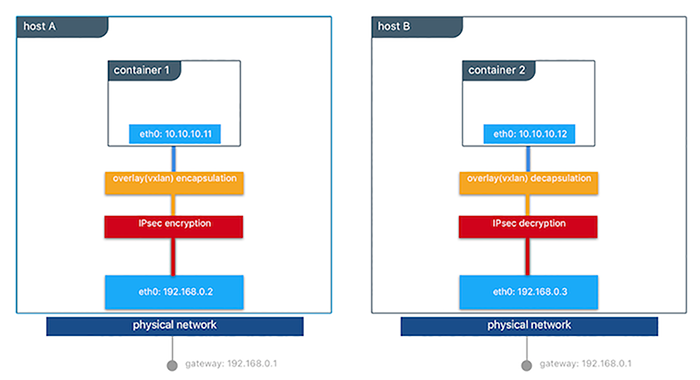

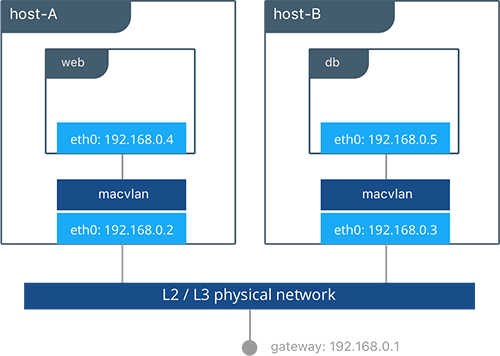

Docker Enterprise ships with a built-in, supported overlay networking driver for multi-host networking for use with Docker Swarm. Overlay networking incurs overhead associated with encapsulating network traffic and with managing IP addresses and other metadata that tracks networked tasks and services.

Docker Enterprise customers that have apps with very network high-throughput requirements or workloads that are extremely dynamic (high-frequency cluster or service updates) should consider minimizing reliance on the out-of-the-box Docker overlay networking and routing mesh. There are several ways to do that:

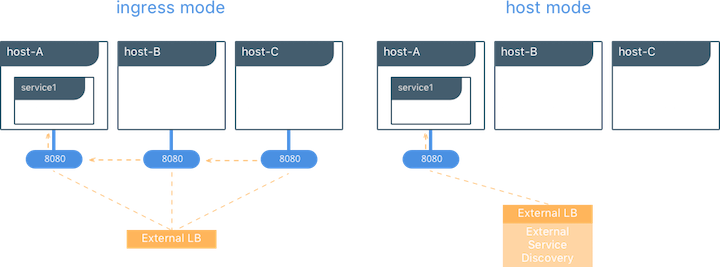

- Use host-mode publishing instead of routing mesh

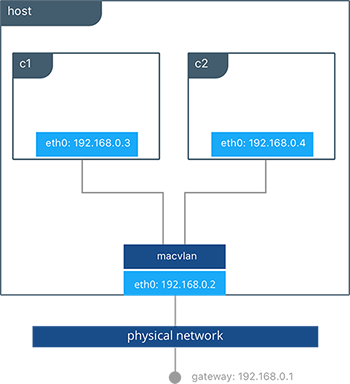

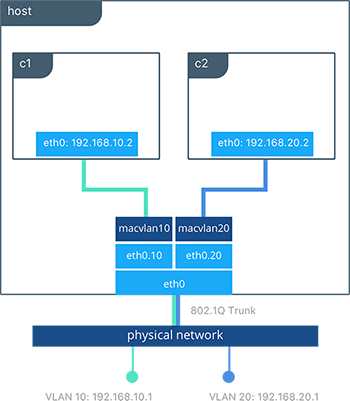

- Use the macvlan driver, which may have better performance than the default driver

- Use a non-Docker service discovery mechanism (like Consul)

- Consider using

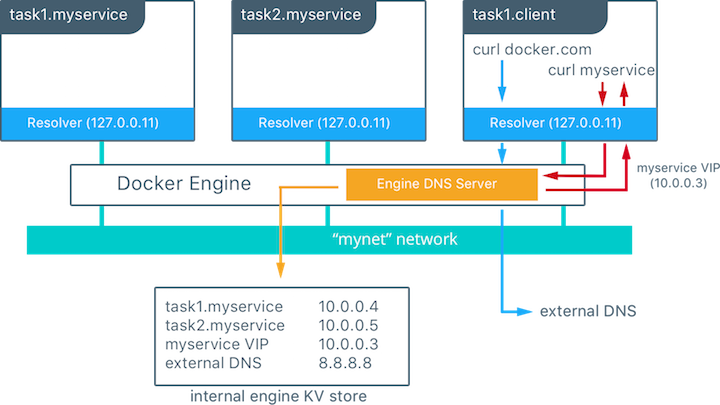

dnsrrinstead ofvipservice endpoints

Overlay network size should not exceed /24 blocks (the default) with

256 IP addresses when networks are used by services created using

VIP-based endpoint-mode (the default). Users should not work around this

by increasing the IP block size. Instead, either use dnsrr

endpoint-mode or use multiple smaller overlay networks.

Also be aware that Docker Enterprise may experience IP exhaustion if many tasks

are assigned to a single overlay network, for example if many services

are attached to that network or if services on the network are scaled to

many replicas. The problem may also manifest when tasks are rescheduled

because of node failures. In case of node failure, Docker currently

waits 24 hours to release overlay IP addresses. The problem can be

diagnosed by looking for failed to allocate network IP for task

messages in the Docker daemon logs.

HTTP Routing Mesh¶

Docker Enterprise Edition with Mirantis Kubernetes Engine come with a built-in HTTP Routing Mesh feature. HTTP Routing Mesh adds some overhead from extra network hops and routing control and should only be used for managing networking for externally exposed services. For networking and routing between services hosted on Docker, simply use the standard built-in Docker overlay networking for best performance.

Mirantis Secure Registry¶

This section covers configuration of Mirantis Secure Registry for scale and performance.

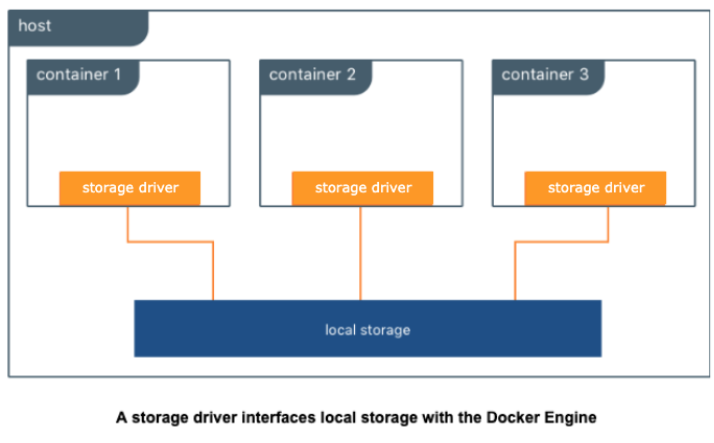

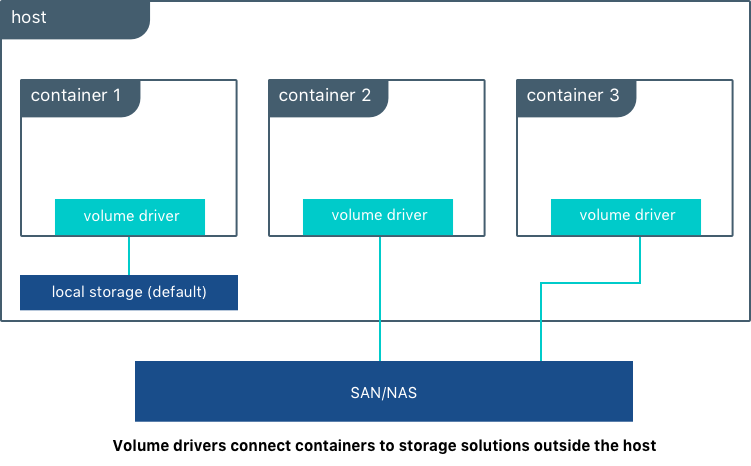

Storage Driver¶

Mirantis Secure Registry supports a wide range of storage backends. For scaling purposes, backend types can be classified either as filesystem-based (NFS, bind mount, volume) or cloud/blob-based (AWS S3, Swift, Azure Blob Storage, Google Cloud Storage).

For some uses, cloud/blob-based storage are more performant than

filesystem-based storage. This is because MSR can redirect layer GET

requests from clients directly to the backing store. By doing this the

actual image contents being pulled by Docker clients won’t have to

transit through MSR but can be fetched directly by Docker clients from

the backing store (once metadata has been fetched and credentials

checked by MSR).

When using filesystem-based storage (like NFS), ensure that MSR performance is not constrained by infrastructure. Common bottlenecks include host network interface cards, the load balancer deployed with MSR, throughput (IOPS) and latency of the backend storage system, and the CPU/memory of the MSR replica hosts.

Docker has tested MSR performance and determined that it can handle in excess of 1400 concurrent pulls of 1 GB container images using NFS-backed storage with 3 replicas.

Total Storage¶

The best way to understand future total image storage requirements is to gather and analyze the following data:

- Average image size

- Frequency of image updates/pushes

- Average size of image update size (considering that many images may share common base layers)

- Retention policies and requirements for storing old image artifacts

Use Mirantis Secure Registry Garbage Collection in combination with scripts or other automation that delete old images (using the MSR API) to keep storage use in check.

Storage Performance¶

The Mirantis Secure Registry write-load is likely to be high when many developers or build machines are pushing images to MSR at the same time.

Read-load is likely to be high when a new image version is pushed to MSR and is then deployed to a large Docker Enterprise cluster with many instances that are all pulling the updated image.

If the same MSR cluster instance is used for both developer/build-server artifact storage and for production image artifact storage for a large production Docker Enterprise MKE cluster, the MSR cluster instances will experience both high write and read load. For very large deployments consider using two (or more) MSR clusters - one focused on supporting developers and build-servers writing images and another one that can handle very high instantaneous read loads generated by production deployments.

When estimating MSR performance requirements, consider average image and image update sizes, how many developers and build machines will be pushing and pulling from your MSR setup, and how many production nodes will concurrently pull updated images during deploys. Ensure that you have enough MSR instances and that your backing storage has enough read and write throughput to handle peak load.

To increase image pull throughput, consider using MSR caches as an alternative to adding more replicas.

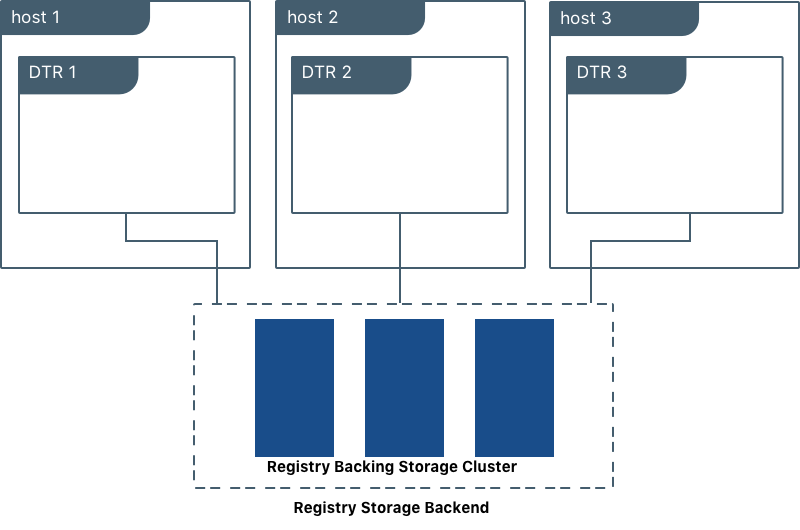

Replica Count¶

Mirantis Secure Registry maintains a quorum of replicas that store metadata about repos, images, tags, and other MSR objects. 3 replicas is the minimum number of replicas for a highly available deployment. 1-replica deployments should only be used for testing and experimentation.

When using multiple MSR replicas, configure a loadbalancer so that requests are distributed to all MSR replicas.

A MSR cluster with 5 or 7 replicas may take longer to commit metadata updates (such as image pushes or tag updates) than one with 3 replicas because it takes longer for updates to propagate with a larger quorum.

If using MSR Security Scanning, note that MSR will run at most one concurrent scan per MSR replica. Adding more MSR replicas (or changing to replicas with faster hardware) will increase MSR scanning throughput. Note that MSR does not currently re-scan stored images when the vulnerability database is updated. Backlogs of queued scans are most likely to result from lots of images being updated.

In summary, you may want to consider using more than 3 MSR replicas to achieve:

- Peak image push/pull throughput on NFS-based setups in excess of what 3 replicas can handle

- More than 3 concurrent image vulnerability scans

- Durability in case of more than 1 instantaneous MSR replica failure

Metadata Size and Cluster Operations¶

Mirantis Secure Registry stores metadata about repos, images, tags, and other

objects in a database (user data is maintained by Mirantis Kubernetes Engine).

You can determine the size of the MSR database by checking the size of the

/data directory in the dtr-rethink container.

The time required to complete MSR cluster operations such as replica-join, backup, and restore is determined by the amount of metadata held by MSR.

Cluster Size¶

If you’re planning a large Docker Enterprise deployment that’s going to be used by multiple groups or business units, you should consider whether to run a single cluster or multiple clusters (e.g. one for each business unit). Both are valid options, but you will typically get greater benefits from consolidation by using just one or a few clusters.

Docker Enterprise Edition has strong team-based multi-tenancy controls, including assigning collections of worker nodes to only run tasks and services created by specific teams. Using these features with a single - or a few - clusters, will let multiple business units or groups use Docker Enterprise Edition without the overhead of configuring and operating multiple clusters.

Even so, there might be good reasons to use multiple clusters:

- Stages: Even for smaller deployments it is a good idea to have separate non-production and production clusters. This allows critical changes and updates to be tested in isolation before deploying in production. More granular segmentation can be done if there are many stages (Test, QA, Staging, Prod, etc).

- Team or Application separation: Docker Enterprise team-based multi-tenancy controls allow multiple apps to be safely run in the same cluster, but more stringent security requirements may necessitate separate clusters.

- Region: Regional redundancy, compliance laws, or latency can all be reasons to segment workloads into multiple clusters.

The same concerns apply when planning how many MSR clusters to use. Note that Docker Enterprise with Mirantis Kubernetes Engine and MSR are currently limited to a 1:1 mapping between MKE and MSR cluster instances, although multiple MKE clusters can share a single MSR cluster with some feature limitations.

Summary¶

Planning your Docker Enterprise deployment with scaling in mind will help maintain optimal performance, adequate disk space, and more as workloads grow. It will also allow you to perform upgrades with little to no downtime.

See also

While using this guide to plan and architect large-scale Docker Enterprise Edition deployments, also consider the recommendations in Docker Enterprise Best Practices and Design Considerations.

Design Considerations and Best Practices to Modernize Traditional Apps (MTA)¶

Introduction¶

The Docker Containers as a Service (CaaS) platform delivers a secure, managed application environment for developers to build, ship, and run enterprise applications and custom business processes. Containerize legacy apps with Docker Enterprise Edition (EE) to reduce costs, enable portability across infrastructure, and increase security.

What You Will Learn¶

In an enterprise, there can be hundreds or even thousands of traditional or legacy applications developed by in-house and outsourced teams. Application technology stacks can vary from a simple Excel macro, to multi-tier J2EE, all the way to clusters of elastic microservices deployed on a hybrid cloud. Applications are also deployed to several heterogeneous environments (development, test, UAT, staging, production, etc.), each of which can have very different requirements. Packaging an application in a container with its configuration and dependencies guarantees that the application will always work as designed in any environment.

In this document you will learn best practices for modernizing traditional applications with Docker EE. It starts with high-level decisions such as what applications to Dockerize and methodology, then moves on to more detailed decisions such as what components to put in images, what configuration to put in containers, where to put different types of configuration, and finally how to store assets for building images and configuration in version control.

What Applications to Modernize?¶

Deciding which applications to containerize depends on the difficulty of the Dockerizing versus the potential gains in speed, portability, compute density, etc. The following sections describe, in order of increasing difficulty, different categories of components and approaches for containerizing them.

Stateless¶

In general, components which are stateless are the easiest to Dockerize because there is no need to take into account persistent data such as with databases or a shared filesystem. This is also a general best practice for microservices and allows them to scale easier as each new instance can receive requests without any synchronization of state.

Some examples of these are:

- Web servers with static resources — Apache, Nginx, IIS

- Application servers with stateless applications — Tomcat, nodeJS, JBoss, Symphony, .NET

- Microservices — Spring Boot, Play

- Tools — Maven, Gradle, scripts, tests

Stateful¶

Components which are stateful are not necessarily harder to Dockerize. However, because the state of the component must be stored or synchronized with other instances, there are operational considerations.

Some examples of these are:

Application servers with stateful applications — There is often a need to store user sessions in an application. Two approaches to handling this case are to use a load balancer with session affinity to ensure the user always goes to the same container instance or to use an external session persistence mechanism which all container instances share. There are also some components that provide native clustering such as portals or persistence layer caches. It is usually best to let the native software manage synchronization and states between instances. Having the instances on the same overlay network allows them to communicate with each other in a fast, secure way.

Databases — Databases usually need to persist data on a filesystem. The best practice is to only containerize the database engine while keeping its data on the container host itself. This can be done using a host volume, for example:

$ docker run -d \ -v /var/myapp/data:/var/lib/postgresql/data \ postgres

Applications with shared filesystems - Content Management Systems (CMS) use filesystems to store documents such as PDFs, pictures, Word files, etc. This can also be done using a host volume which is often mounted to a shared filesystem so several instances of the CMS can access the files simultaneously.

Complex Product Installation¶

Components that have a complex production installation are usually the hardest to Dockerize because they cannot be captured in a Dockerfile.

Some examples of these are:

- Non-scriptable installation — These can include GUI-only installation/configuration or products that require multi-factor authentication.

- Non-idempotent installation process — Some installation processes can be asynchronous where the installation script has terminated but then starts background processes. The completion of the entire installation process includes waiting for a batch process to run or a cluster to synchronize without returning a signal or clear log message.

- Installation with external dependencies — Some products require an external system to reply for downloading or activation. Sometimes for security reasons this can only be done on a specific network or for a specific amount of time making it difficult to script the installation process.

- Installation that requires fixed IP address — Some products require a fixed IP address for a callback at install time but can then be configured once installed to use a hostname or DNS name. Since container IP address are dynamically generated, the IP address could be difficult to determine at build time.

In this case instead of building an image from a Dockerfile the image should be build by first running a base container, installing the product, and then saving the changes out to an image. An example of this is:

$ docker commit -a "John Smith" -m "Installed CMS" mycontainer cms:2

Note

Tools or Test Container. When debugging services that have

dependencies on each other, it is often helpful to create a

container with tools to test connectivity or the health of a

component. Common cases are network tools like telnet, netcat,

curl, wget, SQL clients, or logging agents. This avoids adding

unnecessary debugging tools to the containers that run the production

loads. One popular image for this is the netshoot troubleshooting

container.

Methodology¶

Two different use cases for modernizing traditional applications are:

- End of Life — Containerizing an application without further development

- Continued Development — Containerizing an application that has ongoing development

Depending on the use case, the methodology for containerizing the application can change. The following sections discuss each of them.

End of Life¶

An application that is at its end of life has no further development or upgrades. There is no development team, and it is only maintained by operations. There is no requirement to deploy the application in multiple environments (development, test, uat, staging, production) because there are no new versions to test. To containerize this type of application, the best solution would be to copy the contents of the existing server into an image. The Docker community provides open source tools such as Image2Docker to do this, which will create a Dockerfile based upon analysis of existing Windows or Linux machines:

Once a Dockerfile is generated with these tools, it can then be further modified and operationalized depending on the complexity of application. An image can then be built from the Dockerfile and run by an operations team in Docker EE.

Continued Development¶

If the application will continue to be actively developed, then there are other considerations to take into account. When containerizing an application it might be tempting to refactor, re-architect, or upgrade it at the same time. We recommend starting with a “lift and shift” approach where the application is first containerized with the minimal amount of changes possible. The application can be regression tested before further modifications are made. Some rules of thumb are:

- Keep the existing application architecture

- Keep the same versions of OS, components, and application

- Keep the deployment simple (static and not elastic)

Once the application is containerized, it will then be much easier and faster to implement and track changes such as:

- Upgrade to a newer version of application server

- Refactor to microservices

- Dynamically scale or elastic deployment

In a “lift and shift” scenario the choice of base libraries or components such as an application server or language version as well as the underlying OS are already determined by the legacy application. The next step is determining the best way to integrate this “stack” into a Docker image. There are several approaches to this depending on the commonality of the components, the customization of components in the application, and adherence to any enterprise support policies. There are different ways to obtain a stack of components in an image:

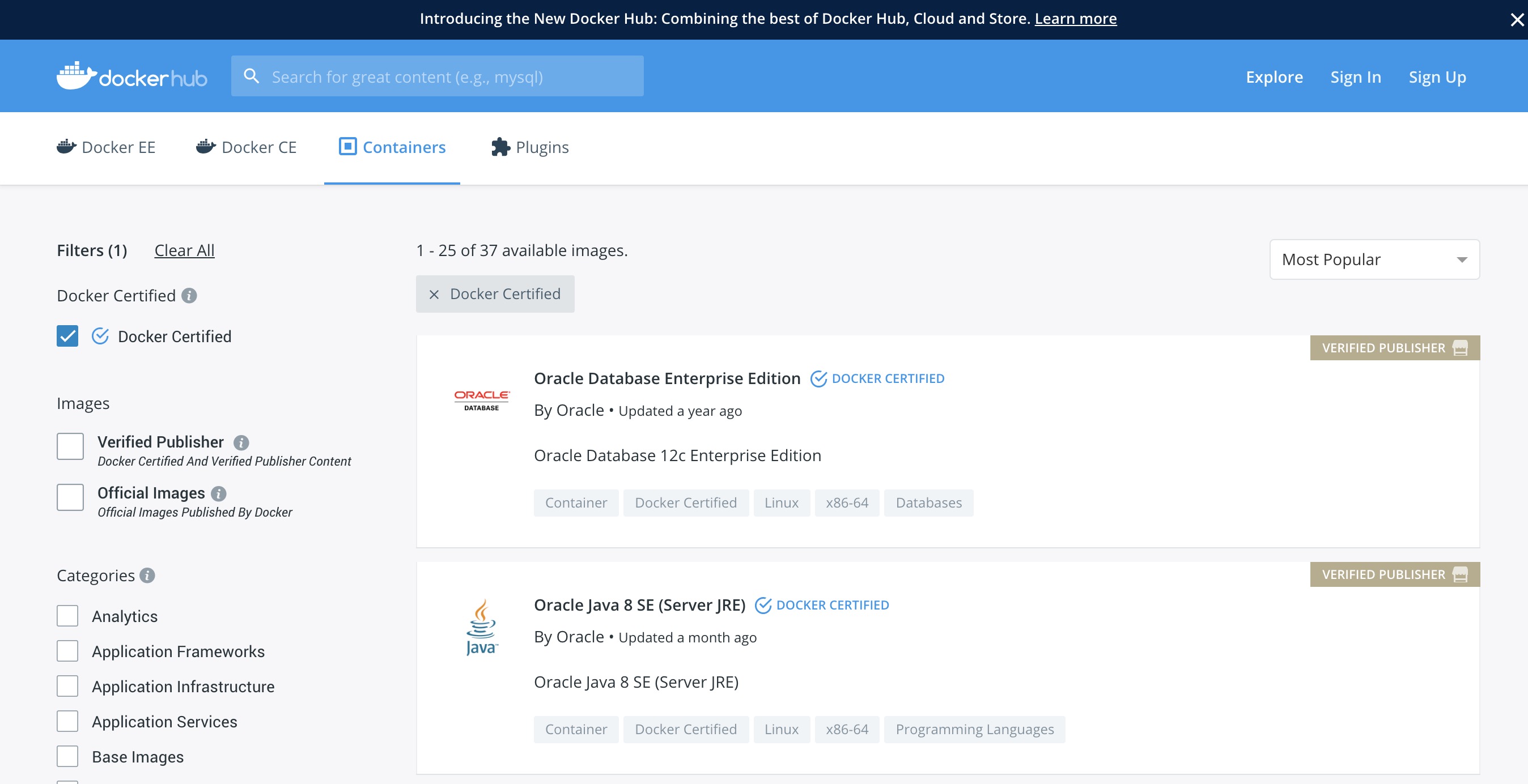

- Open source image — A community image from Docker Hub

- Docker Certified — A certified image from Docker Hub, built with best practices, tested and validated against the Docker EE platform and APIs, pass security requirements, and are collaboratively supported (eg, Splunk Enterprise)

- Verified Publisher — A verified image from Docker Hub, published and maintained by a commercial entity (eg, Sysdig Inspect)

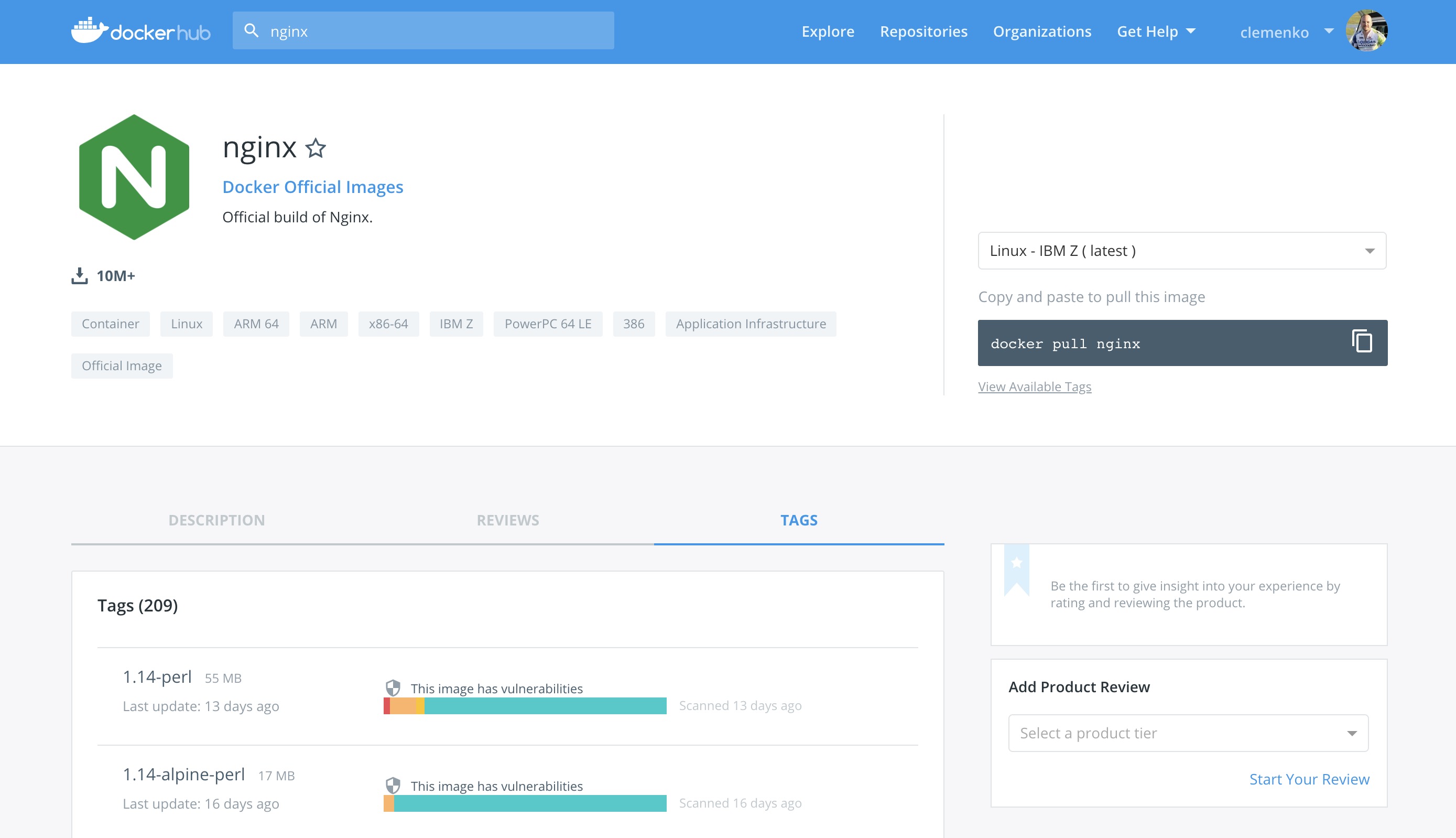

- Official image — Official Images are a curated set of Docker open source and “drop-in” solution repositories hosted on Docker Hub (eg, nginx, alpine, redis)

- Enterprise image — An internal image built, maintained, and distributed by an enterprise-wide devops team

- Custom image — A custom image built for the application by the development team

While the open source and certified images can be pulled and used “as is” the enterprise and custom images must be built from Dockerfiles. One way of creating an initial Dockerfile is to use the Image2Docker tools mentioned before. Another option is to copy the referenced Dockerfile of an image found in Docker Hub or Store.

The following table summarizes the advantages and disadvantages of each choice:

| Open-source | Certified | Enterprise | Custom | |

|---|---|---|---|---|

| Advantages |

|

|

|

|

| Disadvantages |

|

|

|

|

A common enterprise scenario is to use a combination of private and custom images. Typically, an enterprise will develop a hierarchy of base images depending on how diverse their technology stacks are. The next section describes this concept.

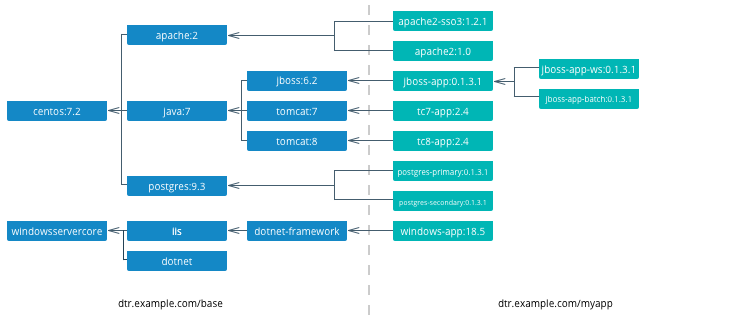

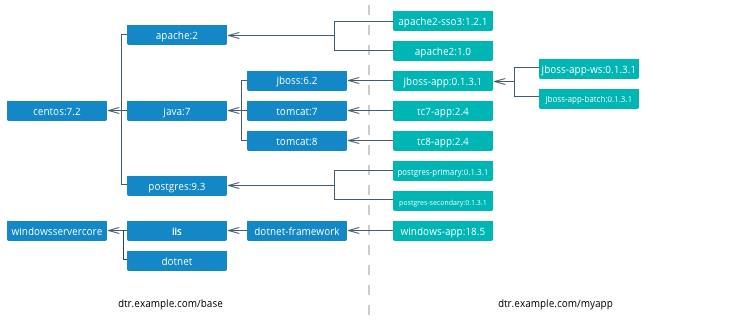

Image Hierarchy¶

Docker images natively provide inheritance. One of the benefits of deriving from base images is that any changes to a base or upstream image are inherited by the child image simply by rebuilding that image without any change to the child Dockerfile. By using inheritance, an enterprise can very simply enforce policies with no changes to the Dockerfiles for their many applications. Typically, an enterprise will develop a hierarchy of base images depending on how diverse their technology stacks are. The following is an example of an image hierarchy.

On the left are the enterprise-wide base images typically provided by the global operations team, and on the right are the application images. Even on the application side, depending on how large an application or program is, there can be a hierarchy as well.

Note

Create a project base image. In a project team with a complicated application stack there are often common libraries, tools, configurations, or credentials that are specific to the project but not useful to the entire enterprise. Put these items in a “project base image” from which all project images derive.

What to Include in an Image¶

Another question that arises when modernizing is what components of an application stack to put in an image. You can include an entire application stack such as the the official GitLab image, or you can do the opposite, which would be to break up an existing monolithic application into microservices, each residing in its own image.

Image Granularity¶

In general, it is best to have one component per image. For example, a reverse proxy, an application server, or a database engine would each have its own image. What about an example where several web applications (e.g. war) are deployed on the same application server? Should they be separated and each have its own image or should they be in the same image? The criteria for this decision are similar to non-containerized architectural decisions:

- Release Lifecycle — Are the application release schedules tightly coupled or are they independent?

- Runtime Lifecycle — If one application stops functioning should all application be stopped?

- Scalability — Do the applications need to be scaled separately or can they be scaled together?

- Security — Does one application need a higher level of security such as TLS?

- High Availability — Is one application mission critical needing redundancy and the others can tolerate a single point of failure and downtime?

Existing legacy applications will already have groupings of applications per application server or machine based upon operational experience and the above criteria. In a pure “lift and shift” scenario for example the entire application server can be put in one container.

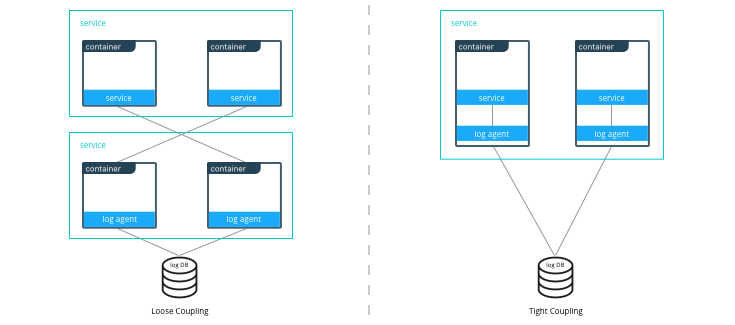

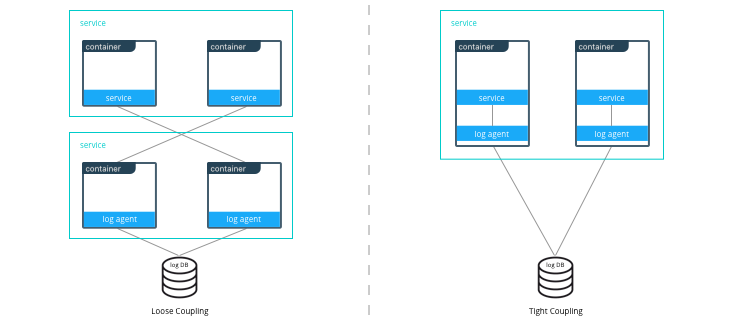

Similarly with microservices, the same criteria apply. For example, consider a microservice that depends on a logging agent to push logs to a centralized logging database. The following diagram shows two different strategies for a high availability deployment for the microservice.

If the microservice and logging agent are loosely coupled, they can be run in separate containers such as in the configuration on the left. However, if the service and the logging agent are tightly coupled and their release lifecycles are identical, then putting the two processes in the same container can simplify deployments and upgrades as illustrated in the configuration on the right. To manage multiple processes there are several lightweight init systems for containers such as tini, dumb-init, and runit.

Hardening Images¶

A question that arises frequently is which parts of the component should go into an image? The engine or server, the application itself, the configuration files? There are several main approaches:

- Create only a base image and inject the things that vary per release or environment

- Create an image per release and inject the things that vary per environment

- Create an image per release and environment

In some cases, a component does not have an application associated with it or its configuration does not vary per environment, so a base image is appropriate. An example of this might be a reverse proxy or a database. In other cases such as an application which requires an application server, using a base image would require mounting a volume for a certain version of an application.

The following table summarizes the advantages and disadvantages of each choice:

| Base Image | Release Image | Environment Image | |

|---|---|---|---|

| What’s inside the image | OS, middleware, dependencies | Base image, release artifacts, configuration generic to the environment | Release image, configuration specific to the environment |

| What’s outside the image | Release artifacts, configuration, secrets | Configuration specific to the environment, secrets | Secrets |

| Advantages | Most flexible at run time, simple, one image for all use cases | Some flexibility at run time while securing a specific version of an application | Most portable, traceable, and secure as all dependencies are in the image |

| Disadvantages | Less portable, traceable, and secure as dependencies are not included in the image | Less flexible, requires management of release images | Least flexible, requires management of many images |

| Examples | Tomcat

dtr.example.com/base/tomcat7:3 |

Tomcat + myapp-1.1.war

dtr.example.com/myap p/tomcat7:3 |

Tomcat + myapp-1.1.war + META-INF/context.xml

dtr.example.com/myapp/tomcat7:3-dev |

Usually a good choice is to use a release image. This gives the best combination of a sufficiently immutable image while maintaining the flexibility of deploying to different environments and topologies. How to configure the images per different environments is discussed in the next section.

Configuration Management¶

A single enterprise application will typically have four to twelve

environments to deploy on before going into production. Without Docker

installing, configuring, and managing these environments, a

configuration management system such as Puppet, Chef, Salt, Ansible,

etc. would be used. Docker natively provides mechanisms through

Dockerfiles and docker-compose files to manage the configuration of

these environments as code, and thus configuration management can be

handled through existing version control tools already used by

development teams.

Environment Topologies¶

The topologies of application environments can be different in order to optimize resources. In some environments it doesn’t make sense to deploy and scale all of the components in an application stack. For example, in functional testing only one instance of a web server is usually needed whereas in performance testing several instances are needed, and the configuration is tuned differently. Some common topologies are:

- Development — A single instance per component, debug mode

- Integration, Functional testing, UAT, Demonstration - A single instance per component, small dataset, and integration to test external services, debug mode

- Performance Testing — Multiple instances per component, large dataset, performance tuning

- Robustness Testing — Multiple instances per component, large dataset, integration to test external services, batch processing, and disaster recovery, debug mode

- Production and Staging — Multiple instances per component, production dataset, integration to production external services, batch processing, and disaster recovery, performance tuning

The configuration of components and how they are linked to each other is

specified in the docker-compose file. Depending on the environment

topology, a different docker-compose can be used. The

extends

feature can be used to create a hierarchy of configurations. For

example:

myapp/

common.yml <- common configurations

docker-compose-dev.yml <- dev specific configs extend common.yml

docker-compose-int.yml

docker-compose-prod.yml

Configuration Buckets¶

In a typical application stack there are tens or even hundreds of

properties to configure in a variety of places. When building images and

running containers or services there are many choices as to where and

when a property should be set depending on how that property is used. It

could be in a Dockerfile, docker-compose file, environment variable,

environment file, property file, entry point script, etc. This can

quickly become very confusing in a complicated image hierarchy

especially when trying to adopt DRY principles. The following table

shows some common groupings based on lifecycles to help determine where

to put configurations.

| When | What | Where | Examples |

|---|---|---|---|

| Yearly build time | Enterprise policies and tools | Enterprise base image Dockerfiles | FROM centos6.6RUN yum -y --noplugins install bzip2 tar sudo curl net-tools |

| Monthly build time | Application policies and tools | Application base image Dockerfiles | COPY files/dynatrace-agent-6.1.0.7880-unix.jar /opt/dynatrace/ |

| Monthly/weekly build time | Application release | Release image Dockerfiles | COPY files/MY_APP_1.3.1-M24_1.war /opt/jboss/standalone/deployments/ |

| Weekly/daily deploy time | Static environment configuration | Environment variables, docker-compose, .env | environment:-MOCK=true-GATEWAY_URL=https://example.com/ws |

| Deploy time | Dynamic environment configuration | Secrets, entrypoint.sh, vault, CLI, volumes | $ curl -H "X-Vault-Token: f3b09679-3001-009d-2b80-9c306ab81aa6" -X GET https://vlt.example.com:8200/v1/secret/db |

| Run time | Elastic environment configuration | Service discovery, profiling, debugging, volumes | $ consul-template -consul consul.example.com:6124 -template "/tmp/nginx.ctmpl:/var/nginx/nginx.conf:service nginx restart" |

The process of figuring out where to configure properties is very similar to code refactoring. For example, properties and their values that are identical in child images can be abstracted into a parent image.

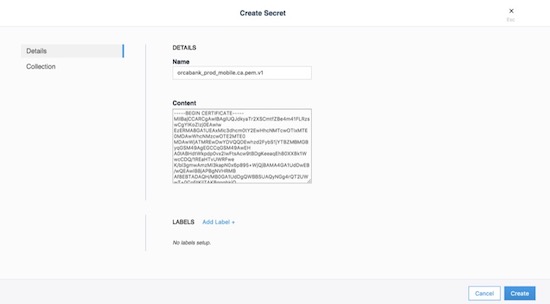

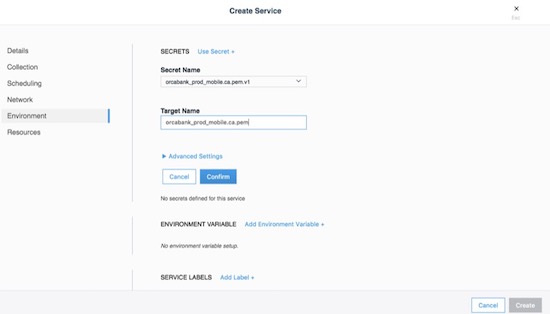

Secrets Management¶

Starting with Mirantis Container Runtime 17.03 (and Docker CS Engine 1.13), native secrets management is supported. Secrets can be created and managed using RBAC in Docker Enterprise. Although Docker EE can manage all secrets, there might already be an existing secrets management system, or there might be the requirement to have one central system to manage secrets in Docker and non-Docker environments. In these cases, a simple strategy to adopt for Docker environments is to create a master secret managed by Docker EE which can then be used in an entry point script to access the exiting secrets management system at startup time. The recovered secrets can then be used within the container.

Dockerfile Best Practices¶

As the enterprise IT landscape and the Docker platform evolve, best practices around the creation of Dockerfiles have emerged. Docker keeps a list of best practices on docs.docker.com.

Docker Files and Version Control¶

Docker truly allows the concept of “Infrastructure as Code” to be applied in practice. The files that Docker uses to build, ship, and run containers are text-based definition files and can be stored in version control. There are different text-based files related to Docker depending on what they are used for in the development pipeline.

- Files for creating images — Dockerfiles,

docker-compose.yml,entrypoint.sh, and configuration files - Files for deploying containers or services —

docker-compose.yml, configuration files, and run scripts

These files are used by different teams from development to operations in the development pipeline. Organizing them in version control is important to have an efficient development pipeline.

If you are using a “release image” strategy, it can be a good idea to separate the files for building images and those used for running them. The files for building images can usually be kept in the same version control repository as the source code of an application. This is because release images usually follow the same lifecycle as the source code.

For example:

myapp/

src/

test/

Dockerfile

docker-compose.yml <- build images only

conf/

app.properties

app.xml

entrypoint.sh

Note

A docker-compose file with only

build

configurations for different components in an application stack can

be a convenient way to build the whole application stack or

individual components in one file.

The files for running containers or services follow a different lifecycle, so they can be kept in a separate repository. In this example, all of the configurations for the different environments are kept in a single branch. This allows for very simple version control strategy, and configurations for all environments can be viewed in one place.

For example:

myapp/

common.yml

docker-compose-dev.yml

docker-compose-int.yml

docker-compose-prod.yml

conf/

dev.env

int.env

prod.env

However, this single branch strategy quickly becomes difficult to maintain when different environments need to deploy different versions of an application. A better strategy is to have each environment’s run configuration is in a separate branch. For example:

myapp/ <- int branch

docker-compose.yml

conf/

app.env

The advantages of this are multiple:

- Tags per release can be placed on a branch, allowing an environment to be easily rolled back to any prior tag.

- Listing the history of changes to the configuration of a single environment becomes trivial.

- When a new application release requires the same modification to all of the different environments and configuration files, it can be done using the merge function from the version control as opposed to copying and pasting the changes into each configuration file.

Repositories for Large Files¶

When building Docker images, inevitably there will be large binary files that need to be used. Docker build does not let you access files outside of the context path, and it is not a good idea to store these directly in a version control, especially a distributed one such as git, as the repositories will rapidly become too large and unwieldy.

There are several strategies for storing large files:

- Web Server — They can be stored on a shared filesystem, served by

a web server, and then accessed by exposing them with the

ADD <URL> <dest>command in the Dockerfile. This is the easiest method to setup, but there is no support for versions of files or RBAC on files. - Repository Manager — They can be stored as files in a repository manager such as Nexus or Artifactory, which provide support for versions and RBAC.

- Centralized Version Control — They can be stored as files in a centralized version control system such as SVN, which eliminates the problem of pulling all versions of large binary files.

- Git Large File Storage — They can be stored using Git LFS. This gives you all of the benefits of git, and the Docker build is under one context. However, there is a learning curve to using Git LFS.

Summary¶

This document discusses best practices for modernizing traditional applications to Docker. It starts with high-level decisions such as what applications to Dockerize and methodology, then moves on to more detailed decisions such as what components to put in images, what configuration to put in containers, where to put different types of configuration, and finally how to store assets for building images and configuration in version control. Follow these best practices to modernize your traditional applications.

Modernizing Traditional .NET Framework Applications¶

Introduction¶

Docker containers have long been used to enable the development of new applications leveraging modern application architectural patterns like microservices, but Docker containers are not just for new applications. Traditional or Brownfield applications can also be migrated to containers and Docker Enterprise Edition to take advantage of the benefits that Docker Enterprise provides.

What You Will Learn¶

This reference architecture provides guidance and examples for modernizing traditional .NET Framework applications to Docker Enterprise Edition. You will learn to identify the types of .NET Framework applications that are good candidates for containerization, the “lift-and-shift” approach to containerization with little to no code changes, how to get started, and guidance around various .NET Framework applications and Windows Server containers, including handling Windows Integrated Authentication, networking, logging, and monitoring.

This document focuses primarily on custom .NET Framework applications. It does not cover commercial off-the-shelf (COTS) .NET Framework applications such as SharePoint and Sitecore. Although it may be possible to run these COTS applications in Docker Enterprise, guidance on how to do so for these applications are beyond the scope of this reference architecture. Also, .NET Core is not covered. All references to .NET applications refer to .NET Framework applications and not .NET Core applications.

Refactoring to microservices architectures is also not covered in this document. At the end of the containerization process discussed in this reference architecture, your .NET Framework application will be ready should you decide to refactor parts of the application to microservices.

Note

Before continuing, please become familiar with the reference architecture Design Considerations and Best Practices to Modernize Traditional Apps

See the caveats section for additional important information to be aware of.

Caveats¶

Before you begin there are some things to be aware of that will impact your deployment of applications on Docker Enterprise.

Note

Windows Server 2019 is the recommended platform to run Windows containerized applications. Versions prior to Windows Server 2016 do not support running containers of any type. Windows 2016, while capable of supporting containers, is not Microsoft’s recommended container host platform.

Desktop based apps with graphical user interfaces (GUIs) cannot yet be containerized

Due to the unique nature of certain Windows features (e.g. networking, security, file system) there are several items of note regarding the deployment of a Docker service. Below is a list of these issues including the current “best practices” used to work around them.

Networking (see Example compose file for a service running on Windows nodes below)

For services that need to be reachable outside the swarm, Linux containers are able to use Docker swarm’s ingress routing mesh. However, Windows Server 2016 does not currently support the ingress routing mesh. Therefore Docker services scheduled for Windows Server 2016 nodes that need to be accessed outside of swarm need to be configured to bypass Docker’s routing mesh. This is done by publishing ports using

hostmode which publishes the service’s port directly on the node where it is running.Additionally, Docker’s DNS Round Robin is the only load balancing strategy supported by Windows Server 2016 today; therefore, for every Docker service scheduled to these nodes, the

--endpoint-modeparameter must also be specified with a value ofdnsrr.When running Docker for Windows there is an issue related to container IP addresses. The IP address shown when using the

docker inspectcommand for a container is incorrect. To browse a web site or api running in a container you must use thedocker execcommand and query the IP address from within the container (e.g.ipconfig). Also, port assignments are ignored by Docker for Windows when running Windows containers (e.g.docker run -p 8080:80). Run the example app to illustrate this issue.Docker Objects

Configs use the

SYSTEMandADMINISTRATORpermissions- When using a Docker Config object to replace the

web.configfile (ASP.Net apps), IIS will not be able to consume the file. IIS requires (by default)BUILTIN\IIS_IUSRScredentials applied to files it will read/write to. - Due to the fact that Docker Config objects are attached after the

container is created, assigning rights to the application folder

during a

docker buildwill not solve this problem. Files added by the Config will retain their original credentials (ADMINISTRATOR&SYSTEM).

- When using a Docker Config object to replace the

Secrets stored on node temporarily

- Microsoft Windows has no built-in driver for managing RAM disks,

so within running Windows containers, secrets are persisted in

clear text to the container’s root disk. However, the secrets are

explicitly removed when a container stops. In addition, Windows

does not support persisting a running container as an image using

docker commitor similar commands. - On Windows, we recommend enabling BitLocker on the volume containing the Docker root directory on the host machine to ensure that secrets for running containers are encrypted at rest.

- Secret files with custom targets are not directly bind-mounted

into Windows containers, since Windows does not support

non-directory file bind-mounts. Instead, secrets for a container

are all mounted in

C:\ProgramData\Docker\internal\secrets(an implementation detail which should not be relied upon by applications) within the container. Symbolic links are used to point from there to the desired target of the secret within the container. The default target isC:\ProgramData\Docker\secrets. - When creating a service which uses Windows containers, the options

to specify UID, GID, and mode are not supported for secrets.

Secrets are currently only accessible by administrators and users

with

systemaccess within the container.

- Microsoft Windows has no built-in driver for managing RAM disks,

so within running Windows containers, secrets are persisted in

clear text to the container’s root disk. However, the secrets are

explicitly removed when a container stops. In addition, Windows

does not support persisting a running container as an image using

AD authentication requires use of Integrated Windows Authentication

Windows node must be joined to the AD domain

Common base images for Windows applications

- Additional Windows Features may be required depending on app requirements

ASP.Net applications: microsoft/aspnet

WCF Services: microsoft/iis

Console Applications: microsoft/dotnet-framework

.Net build tools: microsoft/dotnet-framework

- Used for multi-stage builds (use the SDK variants)

ASP.Net Core applications: microsoft/aspnetcore

ASP.Net Core build tools: microsoft/aspnetcore-build

- Used for multi-stage builds

Windows base OS images: microsoft-windows-base-os-images

Example compose file for a service running on Windows nodes¶

version: '3.3'

services:

website:

image: mcr.microsoft.com/windows/servercore/iis:windowsservercore-ltsc2019 # serves a default site on port 80

ports:

- mode: host # host mode networking

deploy:

replicas: 1

placement:

constraints:

- engine.labels.os == windows # place service only on Windows nodes

labels:

com.docker.lb.hosts: app.example.org # Replace with a real URL

com.docker.lb.network: mystack_myoverlay # the network that the layer 7 mesh will hand-off to

com.docker.lb.port: 80 # the port the service expects traffic on

endpoint_mode: dnsrr # dns round robin load balancing

networks:

- myoverlay # custom overlay network the service will use

networks:

myoverlay: # the custom service definition

driver: overlay

Application Selection¶

Before diving in, it’s important to understand there are different types of .NET Framework applications. Although not intended to be exhaustive, this section describes the most common types of .NET Framework applications and considerations that need to be made for these applications before proceeding with containerization.

| Application Type | Considerations |

|---|---|

| ASP.NET Framework Applications |

|

| WCF Services |

|

| Windows Services |

|

| Desktop Applications |

|

| Console Applications |

|

| COTS Applications |

|

Application Dependencies¶

When initially getting started with the app containerization process, avoid applications that have many dependencies, components, and/or many tiers. Begin with a 2-3 tier application first until you are comfortable with the containerization process before moving to more complex applications.

Additionally, for applications that have component dependencies, ensure that the components can be installed without interaction (i.e., unattended installation or scripted). Components that require interaction during installation can’t be added to the Dockerfile.

Lastly, for applications that have dependencies to services or external systems (e.g. databases, file shares, web services, etc.) ensure that the addresses/endpoints for those services are stored in configuration files and are resolvable from the Docker Enterprise Windows Server hosts. Any hard-coded service references will need to be refactored prior to containerization.

Application Containerization¶

When containerizing an application it is important to determine what the desired outcome state is for the application. It is recommended that applications be divided into two categories.

- Applications that will be rearchitected to be microservices, horizontally scalable, geo-redundant, highly available, stateless, etc…

- Applications that will not be rearchitected but will take advantage of an improved development pipeline.

For the first scenario (rearchitected) the applications should be built as microservices and should deployed in a container native fashion.

For the second scenario a “lift and shift” approach should be applied to allow for the agility and portability of containers without significant rewriting of the application.

With a “lift and shift” approach, some rules of thumb are:

- Keep the .NET Framework version the same

- Keep the existing application architecture

- Keep the same versions of components and application dependencies

- Keep the deployment simple: static and not elastic

Once the application is successfully containerized it should then be easier and faster to change, for example:

- Upgrade to a newer version of application server

- Integrate into a simplified CI/CD pipeline

- Deploy the application against any Docker Enterprise environment regardless of location

- Reduction of technical debt

With a rearchitecting approach containers can provide the same benefits as for lift and shift with the addition of:

- Higher flexibility and agility of developing more targeted services

- Ease of unit testing

- Higher velocity of pipeline execution

- Increased frequency of deployments.

The following sections discuss the application containerization process.

Creating the Dockerfile¶

Note

Refer to `Best practices for writing Dockerfiles <https://docs.docker.com/develop/develop-images/dockerfile\_best-practices/>`_ for information on creating the Dockerfile.

The first step in a lift and shift approach is to create the Dockerfile, and the first step in creating the Dockerfile is choosing the right base Docker image to use. All containerized .NET Framework applications use an image that is based on Microsoft’s Windows Server Core base OS image.

Microsoft Base Images¶

Depending on the type of .NET Framework application, consider using the following as base images to start:

| Application Type | Image | Notes |

|---|---|---|

| ASP.NET Applications | microsoft/aspnet | IIS and ASP.NET Framework pre installed |

| WCF Services | microsoft/servercore-iis | Assumes the WCF service is hosted in IIS. If hosted in another application, another base image may be more appropriate. |

| Windows Services | microsoft/dotnet-framework | .NET Framework pre installed |

| Console Applications | microsoft/dotnet-framework | .NET Framework pre installed |

It’s important to enable windows features required by your application. This is done using Powershell commands in your Dockerfile To optimize your image, don’t include any unnecessary Windows features that aren’t being used by your application.

You can use the default settings,or use your own customized application pool for your web app. Note that if you use a domain account or service account for your application pool identity, you cannot just specify a domain account in your Dockerfile. You need to set the identity to one of the built-in types and then use a Group Managed Service Account (gMSA) via a Credential Spec when running the container. See the section Integrated Windows Authentication for more details.

Any settings that have been configured manually for the web application through IIS (e.g. authentication settings, etc.) must be added to your Dockerfile manually. Note that IIS management console should not be used to apply changes to running containers.

The following Dockerfile is an example of a final Dockerfile:

# escape=`

FROM mcr.microsoft.com/dotnet/framework/aspnet:3.5-windowsservercore-ltsc2019

SHELL ["powershell", "-Command", "$ErrorActionPreference = 'Stop'; $ProgressPreference = 'SilentlyContinue';"]

# used only for gMSA authentication. Remove if using integrated auth.

RUN Enable-WindowsOptionalFeature -Online -FeatureName IIS-WindowsAuthentication

# Create the App Pool - not needed if you’re using default App pool

RUN Import-Module WebAdministration; `

New-Item -Path IIS:\AppPools\MyAppPool; `

Set-ItemProperty -Path IIS:\AppPools\MyAppPool -Name managedRuntimeVersion -Value 'v4.0'; `

Set-ItemProperty -Path IIS:\AppPools\MyAppPool -Name processModel -value @{identitytype='ApplicationPoolIdentity'}

# Set up website: MyApp

RUN New-Item -Path 'C:\MyApp' -Type Directory -Force;

# Not needed if you use the default web site.

RUN New-Website -Name 'MyApp' -PhysicalPath 'C:\MyApp' -Port 80 -ApplicationPool 'MyAppPool' -Force;

# This disables Anonymous Authentication and enables Windows Authentication

RUN $siteName='MyApp'; `

Set-WebConfigurationProperty -filter /system.WebServer/security/authentication/AnonymousAuthentication -name enabled -value false -location $sitename; `

Set-WebConfigurationProperty -filter /system.WebServer/security/authentication/windowsAuthentication -name enabled -value true -location $sitename;

EXPOSE 80

COPY ["MyApp", "/MyApp"]

RUN $path='C:\MyApp'; `

$acl = Get-Acl $path; `

$newOwner = [System.Security.Principal.NTAccount]('BUILTIN\IIS_IUSRS'); `

$acl.SetOwner($newOwner); `

dir -r $path | Set-Acl -aclobject $acl

In the above Dockerfile, a new app pool was explicitly created and configuration was added to disable Anonymous Authentication and enable Windows Authentication. This image can now be built and pushed to Mirantis Secure Registry:

docker image build -t dtr.example.com/demos/myapp:1.0-10.0.14393.1715 .

docker image push dtr.example.com/demos/myapp:1.0-10.0.14393.1715

During the build and debugging process, for IIS-hosted applications such as the above, you may also want to build a second Dockerfile that enables remote IIS management:

# escape=`

FROM dtr.example.com/demos/myapp:1.0-10.0.14393.1715

SHELL ["powershell", "-Command", "$ErrorActionPreference = 'Stop'; $ProgressPreference = 'SilentlyContinue';"]

# Enable Remote IIS Management

RUN Install-WindowsFeature Web-Mgmt-Service; `

NET USER dockertester 'Docker1234' /ADD; `

NET LOCALGROUP 'Administrators' 'testing' /add; `

Configure-SMRemoting.exe -enable; `

sc.exe config WMSVC start=auto; `

Set-ItemProperty -Path HKLM:\SOFTWARE\Microsoft\WebManagement\Server -Name EnableRemoteManagement -Value 1

EXPOSE 80 5985

With the above Dockerfile, the container’s IIS is available at

<container-ip>:5985 and can be reviewed remotely on another machine

with IIS management console installed. The user is dockertester with

a password of Docker1234. Note that IIS management console should

not be used to apply changes to running containers. It should only be

used to troubleshoot and determine if the instructions in the Dockerfile

have been properly applied.

The above Dockerfile also represents a typical Dockerfile created for .NET Framework applications. The high level steps in such a Dockerfile are:

- Select a base image

- Install Windows features and other dependencies

- Install and configure your application

- Expose ports

One step that is often in a Dockerfile but not in the above example is the use of CMD or ENTRYPOINT.

The ASP.NET Framework base image used in the above example already contains an entrypoint that was sufficient for this application. You can choose to create your own entrypoint for your application so you can change or add additional functionality. One scenario to use an entrypoint for is when your application needs to wait for services that it requires. Typically, a Powershell script is created to handle the wait logic:

# PowerShell entrypoint.ps1

while ((Get-Service "MyWindowsService").Status -ne "Running") {

Start-Sleep -Seconds 10;

}

and the Dockerfile contains an ENTRYPOINT entry that points to that

Powershell file:

ENTRYPOINT ["powershell", ".\\entrypoint.ps1"]

Image Tags and Windows Versions¶

When using one of the previously mentioned Microsoft Base Images, it is important to use the right tag. With default settings, Microsoft only supports containers whose base image version exactly matches the host’s operating system version as described in Windows container requirements on docs.microsoft.com. Although a container may start or even appear to work even if its base version doesn’t match the host’s version, Microsoft cannot guarantee full functionality so it’s best to always match the versions.

To determine the Windows Server version of the Docker Windows Server host, use the following Powershell command:

Get-ItemProperty "HKLM:\SOFTWARE\Microsoft\Windows NT\CurrentVersion" | % {"{0}.{1}.{2}.{3}" -f $_.CurrentMajorVersionNumber,$_.CurrentMinorVersionNumber,$_.CurrentBuildNumber,$_.UBR}

The output will be something like 10.0.17763.678. When using one of

Microsoft’s base images, use an image tagged with the full version

number outputted by the above command. For example, a Dockerfile for an

ASP.NET 3.5 web application would start with the following:

# escape=`

FROM mcr.microsoft.com/dotnet/framework/aspnet:3.5-windowsservercore-ltsc2019

When tagging your own images, it’s a good practice with Windows Server containers to also indicate the full Windows Server version number.

Note

For containers started with Hyper-V isolation --isolation=hyperv, the

version match requirement is not necessary.

Integrated Windows Authentication¶

One of the unique aspects often found in Windows-based applications is the use of Integrated Windows Authentication (IWA). It is often used with Windows-based applications to validate a client’s identity, where the client’s identity/account is maintained in Active Directory. A client, in this case, may be an end user, a computer, an application, or a service.

A common pattern is to use Integrated Windows Authentication for applications hosted in IIS to authenticate the application’s end users. With this approach, the application authenticates with the credentials of the user currently logged in, eliminating the need for the application and the user to maintain another set of credentials for authentication purposes. Another common use of IWA is to use it for service-to-service authentication, such as the authentication that happens between an ASP.NET Framework application (more specifically, the application’s process identity) and a backend service like a SQL Server service.

Because containers cannot currently be joined to an Active Directory domain as required for Integrated Windows Authentication to work, some additional configuration is required for applications that require IWA as these applications are migrated to containers. The following sections provide the configuration steps needed to enable IWA.

Group Managed Service Accounts¶

A Group Managed Service Account (gMSA), introduced in Windows Server 2012, is similar to a Managed Service Account (MSA). Like a MSA, gMSAs are managed domain accounts that can be used by applications and services as a specific user principal used to connect to and access network resources. Unlike MSAs, which can only be used by a single instance of a service, a gMSA can be used by multiple instances of a service running across multiple computers, such as in a server farm or in load-balanced services. Similarly, containerized applications and services use the gMSA when access to domain resources (file shares, databases, directory services, etc.) from the container are needed.

Prior to creating a Group Managed Service Account for a containerized application or service, ensure that Windows Server worker nodes that are part of your Docker Swarm cluster are joined to your Active Directory domain. This is required to access and use the gMSA. Additionally, it is highly recommended to create an Active Directory group specifically for managing the Windows Server hosts in your Docker Swarm cluster.

To create an Active Directory group called Container Hosts, the

following Powershell command can be used:

New-ADGroup "Container Hosts" -Group Global

To add your Windows Server worker nodes to this group:

$group = Get-ADGroup "Container Hosts";

$host = Get-ADComputer "Windows Worker Node Name";

Add-ADGroupMember $group -Members $host;

For the Active Directory domain controller (DC) to begin managing the passwords for Group Managed Service Accounts, a root key for the Key Distribution Service (KDS) is first needed. This step is only required once for the domain.

The Powershell cmdlet Get-KDSRootKey can be used to check if a root

key already exists. If not, a new root key can be added with the

following:

Add-KDSRootKey -EffectiveImmediately

Note that although the -EffectiveImmediately parameter is used, the

key is not immediately replicated to all domain controllers. Additional

information on creating KDS root keys that are effective immediately for

test environments can be found at Create the Key Distribution Services KDS Root Key.

Once the KDS root key is created and the Windows Server worker nodes are

joined to the domain, a Group Managed Service Account can then be

created for use by the containerized application. The Powershell cmdlet

New-ADServiceAccount

is used to create a gMSA. At a minimum, to ensure that the gMSA will

work properly in a container, the -Name, -ServicePrincipalName,

and -PrincipalsAllowedToRetrieveManagedPasswords options should be

used:

New-ADServiceAccount -Name mySvcAcct -DNSHostName myapp.example.com `

-ServicePrincipalName HTTP/myapp.example.com `

-PrincipalsAllowedToRetrieveManagedPasswords 'Container Hosts'

Name- the account name that is given to the gMSA in Active Directory.DNSHostName- the DNS host name of the service.ServicePrincipalName- the unique identifier(s) for the service that will be using the gMSA account.PrincipalsAllowedToRetrieveManagedPasswords- the principals that are allowed to use the gMSA. In this example,Container Hostsis the name of the Active Directory group where all Windows Server worker nodes in the Swarm have been been added to.

Once the Group Managed Service Account has been created, you can test to see if the gMSA can be used on the Windows Server worker node by executing the following Powershell commands on that node:

Add-WindowsFeature RSAT-AD-Powershell;

Import-Module ActiveDirectory;

Install-ADServiceAccount mySvcAcct;

Test-ADServiceAccount mySvcAcct;

Credential Specs¶

Once a Group Managed Service Account is created, the next step is to create a credential spec. A credential spec is a file that resides on the Windows Server worker node and stores information about a gMSA. When a container is created, you can specify a credential spec for a container to use, which then uses the associated gMSA to access network resources.

To create a credential spec, open a Powershell session on one of the Windows Server worker nodes in the Swarm and execute the following commands:

Invoke-WebRequest https://raw.githubusercontent.com/Microsoft/Virtualization-Documentation/live/windows-server-container-tools/ServiceAccounts/CredentialSpec.psm1 -OutFile CredentialSpec.psm1

Import-Module .\CredentialSpec.psm1;

New-CredentialSpec -Name myapp -AccountName mySvcAcct;

The first two lines simply downloads and imports into the session a Powershell module from Microsoft’s virtualization team that contains Powershell functions for creating and managing credential specs.

The New-CredentialSpec function is used on the last line to create a

credential spec. The -Name parameter indicates the name for the

credential spec (and is used to name the credential spec JSON file), and

the -AccountName parameter indicates the name of the Group Managed

Service Account to use.

Credential specs are created and stored in the

C:\ProgramData\docker\CredentialSpecs\ directory by default. The

Get-CredentialSpec Powershell function can be used to list all

credential specs on the current system. For each credential spec file

you create, copy the file to the same directory on the other Windows

Server worker nodes that are part of the cluster.

The contents of a credential spec file should look similar to the following:

{

"CmsPlugins": [

"ActiveDirectory"

],

"DomainJoinConfig": {

"Sid": "S-1-5-21-2718210484-3565342085-4281728074",

"MachineAccountName": "mySvcAcct",

"Guid": "274490ad-0f72-4bdd-af6b-d8283ca3fa69",

"DnsTreeName": "example.com",

"DnsName": "example.com",

"NetBiosName": "DCKR"

},

"ActiveDirectoryConfig": {

"GroupManagedServiceAccounts": [

{

"Name": "mySvcAcct",

"Scope": "example.com"

},

{

"Name": "mySvcAcct",

"Scope": "DCKR"

}

]

}

}

Once the credential spec file is created, it can be used by a container

by specifying it as the value of the --security-opt parameter passed

to the docker run command:

docker run --security-opt "credentialspec=file://myapp.json" `

-d -p 80:80 --hostname myapp.example.com `

dtr.example.com/demos/myapp:1.0-10.0.14393.1715

Notice in the above example, the --hostname value specified matches

the Service Principal Name that was assigned when the Group Managed

Service Account was created. This is also required for Integrated

Windows Authentication to function properly.

When configuring for use in a Docker stack, the credential_spec and

hostname keys can be used in the Docker Compose YAML file as in the

following example:

version: "3.3"

services:

web:

image: dtr.example.com/demos/myapp:1.0-10.0.14393.1715

credential_spec:

file: myapp.json

hostname: myapp.example.com

Networking¶

Networking is another aspect to consider when containerizing your

Windows application’s services and components. For services that need to

be available outside the swarm, Linux containers are able to use Docker

swarm’s ingress routing

mesh. However,

Windows Server 2016 does not currently support the ingress routing mesh.

Therefore Docker services scheduled for Windows Server 2016 nodes that

need to be accessed outside of swarm need to be configured to bypass

Docker’s routing mesh. This is done by publishing ports using host

mode which publishes the service’s port directly on the node where it is

running.

Additionally, Docker’s DNS Round Robin is the only load balancing

strategy supported by Windows Server 2016 today; therefore, for every

Docker service scheduled to these nodes, the --endpoint-mode

parameter must also be specified with a value of dnsrr. For example:

docker service create `

--publish mode=host,target=80,port=80 `

--endpoint-mode dnsrr `

--constraint "node.os.platform == windows" `

dtr.example.com/demos/myapp:1.0-10.0.14393.1715

Because ingress routing mesh is not being used, an error could occur

should a client attempt to access the service on a node where the

service isn’t currently deployed. One approach to ensure the service is

accessible from multiple nodes is to deploy the service in global

mode which places a single instance of the service on each node:

docker service create `

--publish mode=host,target=80,port=80 `

--endpoint-mode dnsrr `

--mode global `

--constraint "node.os.platform == windows" `

dtr.example.com/demos/myapp:1.0-10.0.14393.1715

Creating a global service ensures that one and only one instance of that

service runs on each node. However, if replicated deployment mode is

what is desired, additional considerations and configurations need to be

made to properly handle load balancing and service discovery. With

host publishing mode, it is your responsibility to provide a list of

IP addresses and ports to your load balancer. Doing so typically

requires a custom registrator service on each Windows Server host that

uses Docker events to monitor containers starting and stopping.

Implementation of the custom registrator service is out of scope for

this article.

Note that Docker’s routing and service discovery for services on the

same overlay network works without additional configuration.

For more details about swarm networking in general, see the Exploring Scalable, Portable Docker Swarm Container Networks reference architectures.

HTTP Routing Mesh¶

Another option to consider for services available outside the swarm is

Mirantis Kubernetes Engine’s (MKE) HTTP Routing Mesh (HRM). HRM

works at the application layer (L7) and uses the Host HTTP request

header found in HTTP requests to route incoming requests to the

corresponding service. Docker services can participate in the HRM by

adding a com.docker.ucp.mesh.http label and attaching it to an HRM

network (ucp-hrm is a default network):

docker service create `

--name aspnet_app `

--port 80 `

--network ucp-hrm `

--label com.docker.ucp.mesh.http.demoappweb: "external_route=http://mydemoapp.example.com,internal_port=80" `

--placement "node.os.platform == windows" `

dtr.example.com/demos/myapp:1.0-10.0.14393.1715

In the above example, because of the value for the

com.docker.ucp.mesh.http.demoappweb label, inbound HTTP traffic

received with mydemoapp.example.com Host HTTP request header

will be routed to a container for this service on the container’s port

80. More details on how to use HTTP Routing Mesh can be found in the

ucp-ingress-swarm

Logging¶