Enable OpenContrail DPDK


OpenContrail 4.x uses DPDK libraries version 17.02.

Caution

Starting from OpenContrail 4.x, Mellanox NICs are not supported in DPDK-based OpenContrail deployments.

A workload running on a DPDK vRouter does not achieve a higher packet rate (pps) if the application is not DPDK-aware: its performance is the same as on a kernel vRouter.

To enable the OpenContrail DPDK pinning:

Verify that you have performed the following procedures:

Verify your NUMA nodes on the host operating system to identify the available vCPUs. For example:

lscpu | grep NUMA
NUMA node(s):          1
NUMA node0 CPU(s):     0-11
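On hosts with several NUMA nodes, lscpu prints one "NUMA nodeN CPU(s)" line per node. The following minimal sketch uses a hypothetical parse_numa helper (not part of any Mirantis or OpenContrail tooling) to turn that output into a per-node CPU map. It assumes the lscpu output format shown above. The number of nodes it finds is the number of values that compute_vrouter_socket_mem needs.

```python
import re

def parse_numa(lscpu_output: str) -> dict[int, list[int]]:
    """Map each NUMA node ID to its CPU IDs, parsed from `lscpu` output.

    Only the 'NUMA nodeN CPU(s):' lines are used; CPU lists such as
    '0-11' or '0-5,12-17' are expanded into individual CPU numbers.
    """
    nodes: dict[int, list[int]] = {}
    for line in lscpu_output.splitlines():
        m = re.match(r"\s*NUMA node(\d+) CPU\(s\):\s*(\S+)", line)
        if not m:
            continue  # skips 'NUMA node(s):' and unrelated lines
        cpus: list[int] = []
        for part in m.group(2).split(","):
            if "-" in part:
                first, last = part.split("-")
                cpus.extend(range(int(first), int(last) + 1))
            else:
                cpus.append(int(part))
        nodes[int(m.group(1))] = cpus
    return nodes

sample = """NUMA node(s):          1
NUMA node0 CPU(s):     0-11"""
numa = parse_numa(sample)
print(len(numa), numa[0])  # 1 [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]
```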

Include the following class in cluster.<name>.openstack.compute and configure the vhost0 interface:

classes:
...
- system.opencontrail.compute.dpdk
...
parameters:
  linux:
    network:
      interfaces:
        ...
        # other interface setup
        ...
        vhost0:
          enabled: true
          type: eth
          address: ${_param:single_address}
          netmask: 255.255.255.0
          name_servers:
            - 8.8.8.8
            - 1.1.1.1

Set the following parameters in cluster.<name>.openstack.init on all compute nodes:

compute_vrouter_taskset
  Hexadecimal mask of the CPUs used by the DPDK-vRouter processes.

compute_vrouter_socket_mem
  Amount of HugePages memory, in megabytes, that vRouter-DPDK takes from each NUMA node. Specify one value per NUMA node, separated by commas.

compute_vrouter_dpdk_pci
  PCI address of the DPDK NIC. If the DPDK interface is a bond, set it to 0000:00:00.0.
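This sketch shows how these values fit together. The helpers below use illustrative names and are not part of the Salt formula. They build the comma-separated compute_vrouter_socket_mem string from a per-node amount and the NUMA node count, and they choose compute_vrouter_dpdk_pci for either a single NIC or a bond.

```python
from typing import Optional

# Value that compute_vrouter_dpdk_pci takes when the DPDK interface is a bond.
BOND_PCI = "0000:00:00.0"

def socket_mem(mb_per_node: int, numa_nodes: int) -> str:
    """Return the same HugePages amount (MB) for every NUMA node, comma-separated."""
    if mb_per_node <= 0 or numa_nodes < 1:
        raise ValueError("need a positive amount and at least one NUMA node")
    return ",".join([str(mb_per_node)] * numa_nodes)

def dpdk_pci(nic_pci: Optional[str], bonded: bool) -> str:
    """Return the compute_vrouter_dpdk_pci value for a single NIC or a bond."""
    if bonded:
        return BOND_PCI
    if not nic_pci:
        raise ValueError("a single DPDK NIC needs its PCI address")
    return nic_pci

print(socket_mem(1024, 1))                     # 1024
print(socket_mem(1024, 2))                     # 1024,1024
print(dpdk_pci("0000:05:00.1", bonded=False))  # 0000:05:00.1
print(dpdk_pci(None, bonded=True))             # 0000:00:00.0
```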

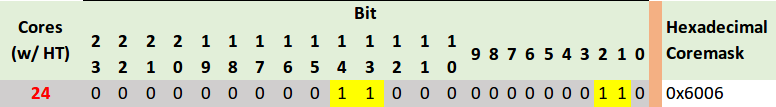

Calculate the hexadecimal mask. A DPDK-enabled vRouter needs several isolated host CPUs for DPDK processes such as statistics, queue management, memory management, and poll-mode drivers. To assign these CPUs, configure the hexadecimal mask of the CPUs to be used by vRouter-DPDK.

The calculation is straightforward: the set of CPUs corresponds to a bit sequence whose length equals the number of CPUs.

A 0 in the i-th position of this sequence means that CPU number i is not used, and a 1 means that it is used. The illustration shows a simple binary-to-hexadecimal translation for a 24-bit sequence, with vRouter bound to four cores: 14, 13, 2, and 1.
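The mask for the four cores in this example can be computed directly. Bit i has the weight 2^i, so cores 14, 13, 2, and 1 give 2^14 + 2^13 + 2^2 + 2^1 = 24582, which is 0x6006. Below is a minimal Python sketch of the same calculation. The cpu_mask helper is hypothetical, and the 24-bit width matches the example.

```python
def cpu_mask(cpus: list[int], width: int = 24) -> tuple[str, str]:
    """Return (binary, hex) masks with bit i set for every CPU i in `cpus`.

    The binary string is written most-significant bit first, so CPU 0
    is the rightmost digit.
    """
    mask = 0
    for cpu in cpus:
        if not 0 <= cpu < width:
            raise ValueError(f"CPU {cpu} does not fit in a {width}-bit mask")
        mask |= 1 << cpu  # a 1 in position i means CPU i is used
    return format(mask, f"0{width}b"), hex(mask)

binary, hexmask = cpu_mask([14, 13, 2, 1])
print(binary)   # 000000000110000000000110
print(hexmask)  # 0x6006
```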

Pass the mask to the vRouter-DPDK command line using the following parameters. For example:

compute_vrouter_taskset: "-c 1,2"  # or hexadecimal 0x6
compute_vrouter_socket_mem: '1024'  # or '1024,1024' for 2 NUMA nodes
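The two forms of compute_vrouter_taskset in this example select the same CPUs. The list 1,2, written in the CPU-list syntax of taskset -c, corresponds to bits 1 and 2, that is 0b110 = 0x6. The sketch below uses hypothetical helper names to convert between the two forms, so you can check a value before you put it into the model.

```python
def parse_cpu_list(spec: str) -> list[int]:
    """Expand a taskset-style CPU list such as '1,2' or '0-3,8' into CPU numbers."""
    cpus: list[int] = []
    for part in spec.split(","):
        if "-" in part:
            first, last = (int(x) for x in part.split("-"))
            cpus.extend(range(first, last + 1))
        else:
            cpus.append(int(part))
    return sorted(set(cpus))

def list_to_hex(spec: str) -> str:
    """Convert a CPU list to the equivalent hexadecimal affinity mask."""
    return hex(sum(1 << cpu for cpu in parse_cpu_list(spec)))

def hex_to_list(mask: str) -> list[int]:
    """Convert a hexadecimal affinity mask back to the CPU numbers it selects."""
    value = int(mask, 16)
    return [cpu for cpu in range(value.bit_length()) if (value >> cpu) & 1]

print(list_to_hex("1,2"))  # 0x6
print(hex_to_list("0x6"))  # [1, 2]
```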

Specify the MAC address and, in some cases, the PCI address for every node.
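It can help to check the format of the per-node values before you commit them. The following sketch uses a hypothetical check_node_params helper and assumes the parameter names used in this step's example. It checks only the textual format of the MAC address and, when present, of the PCI address. It does not check whether the NIC actually exists on the host.

```python
import re

MAC = re.compile(r"^([0-9a-fA-F]{2}:){5}[0-9a-fA-F]{2}$")
PCI = re.compile(r"^[0-9a-fA-F]{4}:[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7]$")

def check_node_params(params: dict) -> list[str]:
    """Return the problems found in a node's DPDK-related params (empty if none)."""
    problems = []
    mac = params.get("compute_vrouter_dpdk_mac_address")
    if mac is None or not MAC.match(mac):
        problems.append(f"bad compute_vrouter_dpdk_mac_address: {mac!r}")
    pci = params.get("compute_vrouter_dpdk_pci")  # optional for some nodes
    if pci is not None:
        # The YAML value "'0000:05:00.1'" is a string that itself contains
        # single quotes, so strip them before checking the address format.
        if not PCI.match(pci.strip("'")):
            problems.append(f"bad compute_vrouter_dpdk_pci: {pci!r}")
    return problems

node02 = {
    "compute_vrouter_dpdk_mac_address": "00:1b:21:87:21:99",
    "compute_vrouter_dpdk_pci": "'0000:05:00.1'",
    "primary_first_nic": "enp5s0f1",
}
print(check_node_params(node02))  # []
```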

Example

openstack_compute_node02:
  name: ${_param:openstack_compute_node02_hostname}
  domain: ${_param:cluster_domain}
  classes:
  - cluster.${_param:cluster_name}.openstack.compute
  params:
    salt_master_host: ${_param:reclass_config_master}
    linux_system_codename: trusty
    compute_vrouter_dpdk_mac_address: 00:1b:21:87:21:99
    compute_vrouter_dpdk_pci: "'0000:05:00.1'"
    primary_first_nic: enp5s0f1  # NIC for vRouter bind

Select from the following options:

If you are performing the initial deployment of your environment, proceed with further environment configuration.

If you are making changes to an existing environment, re-run the Salt configuration from the Salt Master node:

salt "cmp*" state.sls opencontrail

Note

For the changes to take effect, servers require a reboot.

If you need to set different values for each compute node, define them in cluster.<NAME>.infra.config.

Example

openstack_compute_node02:
  name: ${_param:openstack_compute_node02_hostname}
  domain: ${_param:cluster_domain}
  classes:
  - cluster.${_param:cluster_name}.openstack.compute
  params:
    salt_master_host: ${_param:reclass_config_master}
    linux_system_codename: trusty
    compute_vrouter_dpdk_mac_address: 00:1b:21:87:21:99
    compute_vrouter_dpdk_pci: "'0000:05:00.1'"
    compute_vrouter_taskset: "-c 1,2"
    compute_vrouter_socket_mem: "1024"
    primary_first_nic: enp5s0f1  # NIC for vRouter bind
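Per-node values defined this way are meant to take precedence over the cluster-wide defaults. The sketch below models that precedence as a recursive dictionary merge in which the node's values win. This is a simplified assumption about reclass behavior: real reclass also has rules for lists, references, and explicit overrides. It also assumes that the node's params end up under _param. The default values shown are hypothetical.

```python
from copy import deepcopy

def merge(base: dict, override: dict) -> dict:
    """Recursively merge `override` into a copy of `base`; `override` wins on conflicts."""
    result = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge(result[key], value)
        else:
            result[key] = deepcopy(value)
    return result

# Hypothetical cluster-wide defaults, e.g. from cluster.<name>.openstack.init
cluster_defaults = {"_param": {"compute_vrouter_taskset": "-c 1,2",
                               "compute_vrouter_socket_mem": "1024,1024"}}
# Hypothetical per-node override for a single-NUMA-node compute host
node02 = {"_param": {"compute_vrouter_socket_mem": "1024"}}

print(merge(cluster_defaults, node02)["_param"])
# {'compute_vrouter_taskset': '-c 1,2', 'compute_vrouter_socket_mem': '1024'}
```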