Enable OVS DPDK support


Before you proceed with the procedure, verify that you have performed the preparatory steps described in Prepare your environment for OVS DPDK.

While enabling DPDK for Neutron Open vSwitch, you can configure a number of environment-specific settings that help optimize network performance, such as manual pinning of port RX queues to CPU cores.

To enable OVS DPDK:

Check the NUMA nodes on the host operating system to see which CPUs are available. For example:

```
lscpu | grep NUMA
NUMA node(s):          1
NUMA node0 CPU(s):     0-11
```
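When you pick CPUs for the lcore and PMD masks, it helps to know which logical CPUs belong to which NUMA node. One way to get this is to group the output of `lscpu --parse=CPU,NODE`. The following is a minimal bash sketch. The `cpus_per_node` function name is hypothetical. The function reads the parsable format from standard input, so you can also feed it a saved copy:

```shell
# Group logical CPUs by NUMA node using the parsable lscpu format
# ("CPU,NODE" lines; comment lines start with "#").
# cpus_per_node is a hypothetical helper name; it reads from stdin.
cpus_per_node() {
  awk -F, '
    /^#/ { next }                       # skip lscpu comment lines
    {
      node = ($2 == "" ? "-" : $2)      # empty NODE column: no NUMA info
      if (node in list) list[node] = list[node] " " $1
      else list[node] = $1
    }
    END { for (n in list) print "node" n ": " list[n] }
  ' | sort
}

# Usage on a compute node:
#   lscpu --parse=CPU,NODE | cpus_per_node
```

On the single-node host shown above, this would print one `node0:` line listing CPUs 0 to 11.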

Include the `system.neutron.compute.nfv.dpdk` class in `cluster.<name>.openstack.compute` and configure the `dpdk0` interface. Select from the following options:

Single NIC interface dedicated to DPDK:

```yaml
...
- system.neutron.compute.nfv.dpdk
...
parameters:
  linux:
    network:
      interfaces:
        ...
        # other interface setup …
        dpdk0:
          name: ${_param:dpdk0_name}
          pci: ${_param:dpdk0_pci}
          driver: igb_uio
          enabled: true
          type: dpdk_ovs_port
          n_rxq: 2
        br-prv:
          enabled: true
          type: dpdk_ovs_bridge
```

OVS DPDK bond with two dedicated NICs:

```yaml
...
- system.neutron.compute.nfv.dpdk
...
parameters:
  linux:
    network:
      interfaces:
        ...
        # other interface setup …
        dpdk0:
          name: ${_param:dpdk0_name}
          pci: ${_param:dpdk0_pci}
          driver: igb_uio
          bond: dpdkbond1
          enabled: true
          type: dpdk_ovs_port
          n_rxq: 2
        dpdk1:
          name: ${_param:dpdk1_name}
          pci: ${_param:dpdk1_pci}
          driver: igb_uio
          bond: dpdkbond1
          enabled: true
          type: dpdk_ovs_port
          n_rxq: 2
        dpdkbond1:
          enabled: true
          bridge: br-prv
          type: dpdk_ovs_bond
          mode: active-backup
        br-prv:
          enabled: true
          type: dpdk_ovs_bridge
```

Calculate the hexadecimal coremask.

As well as for OpenContrail, OVS-DPDK needs logical cores parameter to be set. Open vSwitch requires two parameters:

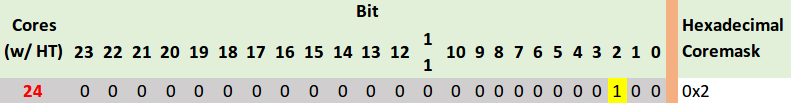

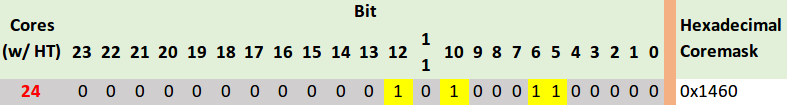

lcoremask to DPDK processes andPMD maskto spawn threads for poll-mode packet processing drivers. Both parameters must be calculated respectively to isolated CPUs and are representing hexadecimal numbers. For example, if we need to take single CPU number 2 for Open vSwitch and 4 CPUs with numbers 5, 6, 10 and 12 for forwarding PMD threads, we need to populate parameters below with the following numbers:The

lcoresmask example:

PMD CPU mask example:
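You can script the bit arithmetic above. The following is a minimal bash sketch that assumes CPU numbers below 63, so that the mask fits in bash's signed 64-bit arithmetic. `cpu_mask` is a hypothetical helper name, not part of OVS or DPDK:

```shell
# Build a hexadecimal CPU mask: CPU number n sets bit n of the mask.
# Assumes CPU numbers below 63 (bash arithmetic is signed 64-bit).
# cpu_mask is a hypothetical helper name, not part of OVS or DPDK.
cpu_mask() {
  local mask=0 cpu
  for cpu in "$@"; do
    mask=$(( mask | (1 << cpu) ))
  done
  printf '0x%x\n' "$mask"
}

cpu_mask 2            # lcore mask for CPU 2: prints 0x4
cpu_mask 5 6 10 12    # PMD mask for CPUs 5, 6, 10, 12: prints 0x1460
```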

Define the following parameters in `cluster.<name>.openstack.init` if they are the same for all compute nodes. Otherwise, specify them in `cluster.<name>.infra.config`:

| Parameter | Description |
| --- | --- |
| `dpdk0_name` | Name of the port being added to the OVS bridge |
| `dpdk0_pci` | PCI ID of the physical device being added as a DPDK physical interface |
| `compute_dpdk_driver` | Kernel module that provides userspace I/O support |
| `compute_ovs_pmd_cpu_mask` | Hexadecimal mask of the CPUs that run the DPDK poll-mode drivers |
| `compute_ovs_dpdk_socket_mem` | Amount of HugePages memory, in megabytes, used by the OVS-DPDK daemon on each NUMA node. Specify one value per NUMA node, separated by commas |
| `compute_ovs_dpdk_lcore_mask` | Hexadecimal mask of the DPDK lcore parameter used to run DPDK processes |
| `compute_ovs_memory_channels` | Number of memory channels to be used |
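The `compute_ovs_dpdk_socket_mem` value is a comma-separated list with one entry per NUMA node. The following is a minimal bash sketch of building it. As a simplification, it assumes that every NUMA node gets the same amount of HugePages memory. `socket_mem` is a hypothetical helper name:

```shell
# Build compute_ovs_dpdk_socket_mem: one HugePages amount (in MB) per NUMA
# node, separated by commas. Simplifying assumption: every node gets the
# same amount. socket_mem is a hypothetical helper name.
socket_mem() {
  local mb=$1 nodes=$2 out="" i
  for (( i = 0; i < nodes; i++ )); do
    out+="${out:+,}$mb"          # comma before every value except the first
  done
  printf '%s\n' "$out"
}

socket_mem 1024 1    # prints 1024
socket_mem 1024 2    # prints 1024,1024
```

On a compute node, you could take the NUMA node count from lscpu, for example: `socket_mem 1024 "$(lscpu --parse=NODE | grep -v '^#' | sort -u | wc -l)"`.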

Example

```yaml
compute_dpdk_driver: uio
compute_ovs_pmd_cpu_mask: "0x6"
compute_ovs_dpdk_socket_mem: "1024"
compute_ovs_dpdk_lcore_mask: "0x400"
compute_ovs_memory_channels: "2"
```
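To double-check which CPUs a mask selects, you can decode it back into CPU numbers. The following is a minimal bash sketch that assumes CPU numbers below 63; `mask_to_cpus` is a hypothetical helper name. For the example values, `0x6` selects CPUs 1 and 2 for PMD threads, and `0x400` selects CPU 10 for the lcore mask:

```shell
# Decode a hexadecimal CPU mask into the CPU numbers whose bits are set.
# Assumes the mask fits in bash's signed 64-bit arithmetic (CPUs below 63).
# mask_to_cpus is a hypothetical helper name.
mask_to_cpus() {
  local mask=$(( $1 )) cpu=0 out=""
  while (( mask > 0 )); do
    if (( mask & 1 )); then
      out+="${out:+ }$cpu"     # append with a space separator
    fi
    mask=$(( mask >> 1 ))
    cpu=$(( cpu + 1 ))
  done
  printf '%s\n' "$out"
}

mask_to_cpus 0x6      # compute_ovs_pmd_cpu_mask: prints 1 2
mask_to_cpus 0x400    # compute_ovs_dpdk_lcore_mask: prints 10
```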

Optionally, map the port RX queues to specific CPU cores.

Configuring port queue pinning manually may help you achieve maximum network performance by matching the ports that run specific workloads with specific CPU cores. Each port can process a certain number of receive and transmit (RX/TX) operations, so the network administrator must decide on the most efficient port mapping. Keeping a constant polling rate on performance-critical ports is essential to achieving the best possible performance.
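The cores named in a queue mapping are taken from `pmd_cpu_mask`, so it can help to check a `pmd_rxq_affinity` string against the mask before applying it. The following is a minimal bash sketch. `check_rxq_affinity` is a hypothetical helper that does not query Open vSwitch. It only checks that each `<queue>:<core>` pair names a core whose bit is set in the mask. With the values used in this procedure, `0:1,1:2` passes against `0x6`, while `0:3` would fail:

```shell
# Check that every core in a pmd_rxq_affinity string ("<queue>:<core>,...")
# has its bit set in the PMD CPU mask. Hypothetical helper; it does not
# talk to Open vSwitch, it only compares the two configuration values.
check_rxq_affinity() {
  local affinity=$1 mask=$(( $2 )) pair core
  local IFS=,                   # split the affinity string on commas
  for pair in $affinity; do
    core=${pair#*:}             # drop "<queue>:" to keep the core number
    if (( ! ( (mask >> core) & 1 ) )); then
      echo "core $core (from '$pair') is not in the PMD mask" >&2
      return 1
    fi
  done
  return 0
}

check_rxq_affinity "0:1,1:2" 0x6 && echo "affinity OK"
```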

Example

```yaml
dpdk0:
  ...
  pmd_rxq_affinity: "0:1,1:2"
```

The example above pins queue 0 to core 1 and queue 1 to core 2. The cores are taken from those enabled in `pmd_cpu_mask`.

Specify the MAC address and, in some cases, the PCI address for every node.
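One way to look up these values on a compute node is through sysfs. There, `/sys/class/net/<interface>/address` holds the MAC address and `/sys/class/net/<interface>/device` links to the PCI device. The following is a minimal bash sketch. The `iface_pci` and `iface_mac` function names and the `SYSFS_ROOT` override are hypothetical; the override exists only to point the functions at a test directory. Run the sketch while the interface is still bound to its kernel driver, because an interface bound to a DPDK userspace driver no longer appears under `/sys/class/net`:

```shell
# Look up the PCI address and MAC address of a kernel network interface
# through sysfs. SYSFS_ROOT is a hypothetical override (default /sys),
# used here only so the functions can be exercised against a test tree.
iface_pci() {
  local root=${SYSFS_ROOT:-/sys}
  # "device" is a symlink to the PCI device directory, e.g. .../0000:05:00.1
  basename "$(readlink -f "$root/class/net/$1/device")"
}

iface_mac() {
  local root=${SYSFS_ROOT:-/sys}
  cat "$root/class/net/$1/address"
}

# Usage on a compute node:
#   iface_pci enp5s0f1
#   iface_mac enp5s0f1
```

In the node definition, the PCI address is written with an extra layer of quotes, as in `'"0000:05:00.1"'`.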

Example

```yaml
openstack_compute_node02:
  name: ${_param:openstack_compute_node02_hostname}
  domain: ${_param:cluster_domain}
  classes:
  - cluster.${_param:cluster_name}.openstack.compute
  params:
    salt_master_host: ${_param:reclass_config_master}
    linux_system_codename: xenial
    dpdk0_name: enp5s0f1
    dpdk1_name: enp5s0f2
    dpdk0_pci: '"0000:05:00.1"'
    dpdk1_pci: '"0000:05:00.2"'
```

If VXLAN is selected as the Neutron tenant network type, set the local IP address on `br-prv` for VXLAN tunnel termination:

```yaml
...
- system.neutron.compute.nfv.dpdk
...
parameters:
  linux:
    network:
      interfaces:
        ...
        # other interface setup …
        br-prv:
          enabled: true
          type: dpdk_ovs_bridge
          address: ${_param:tenant_address}
          netmask: 255.255.255.0
```

Select from the following options:

If you are performing the initial deployment of your environment, proceed with further environment configurations.

If you are making changes to an existing environment, re-apply the Salt states from the Salt Master node:

```shell
salt "cmp*" state.sls linux.network,neutron
```

Note

For the changes to take effect, servers require a reboot.

If you need to set different values for each compute node, define them in `cluster.<NAME>.infra.config`.

Example

```yaml
openstack_compute_node02:
  name: ${_param:openstack_compute_node02_hostname}
  domain: ${_param:cluster_domain}
  classes:
  - cluster.${_param:cluster_name}.openstack.compute
  params:
    salt_master_host: ${_param:reclass_config_master}
    linux_system_codename: xenial
    dpdk0_name: enp5s0f1
    dpdk1_name: enp5s0f2
    dpdk0_pci: '"0000:05:00.1"'
    dpdk1_pci: '"0000:05:00.2"'
    compute_dpdk_driver: uio
    compute_ovs_pmd_cpu_mask: "0x6"
    compute_ovs_dpdk_socket_mem: "1024"
    compute_ovs_dpdk_lcore_mask: "0x400"
    compute_ovs_memory_channels: "2"
```