High availability in Kubernetes


Caution

Kubernetes support termination notice

Starting with the MCP 2019.2.5 update, the Kubernetes component is no longer supported as a part of the MCP product. This implies that Kubernetes is not tested and not shipped as an MCP component. Although the Kubernetes Salt formula is available in the community-driven SaltStack formulas ecosystem, Mirantis takes no responsibility for its maintenance.

Customers looking for a Kubernetes distribution and Kubernetes lifecycle management tools are encouraged to evaluate the Mirantis Kubernetes-as-a-Service (KaaS) and Docker Enterprise products.

In a Calico-based MCP Kubernetes cluster, the control plane services are highly available and work in active-standby mode. All Kubernetes control components run on every Kubernetes Master node of a cluster with one node at a time being selected as a master replica and others running in the standby mode.

Every Kubernetes Master node runs an instance of kube-scheduler and kube-controller-manager. Only one service of each kind is active at a time, while others remain in the warm standby mode.

The kube-controller-manager and kube-scheduler services elect their own leaders natively.

API servers work independently, while an external or internal Kubernetes load balancer dispatches requests among all of them.

Each of the three Kubernetes Master nodes runs its own instance of kube-apiserver. All services on the Kubernetes Master nodes work with the Kubernetes API locally, while the services that run on the Kubernetes Nodes access the Kubernetes API by directly connecting to an instance of kube-apiserver.
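The active-standby behavior of kube-scheduler and kube-controller-manager described above can be pictured as a lease that one replica holds and keeps renewing. The following Python sketch is a toy in-memory model of that idea, not Kubernetes code: the `Lease` class, the `try_acquire_or_renew` function, the node names, and the 15-second duration are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    """Toy stand-in for a shared lock record that every replica can read and write."""
    holder: Optional[str] = None
    renewed_at: float = 0.0
    duration: float = 15.0  # seconds a lease stays valid without renewal (assumed value)

    def expired(self, now: float) -> bool:
        return self.holder is None or now - self.renewed_at > self.duration


def try_acquire_or_renew(lease: Lease, candidate: str, now: float) -> bool:
    """Return True if `candidate` is the active replica after this attempt."""
    if lease.holder == candidate or lease.expired(now):
        lease.holder = candidate
        lease.renewed_at = now
        return True
    return False  # another replica holds a valid lease, so stay in warm standby


lease = Lease()
# One replica of the same service per Kubernetes Master node (hypothetical names).
replicas = ["master01", "master02", "master03"]

# t=0: every replica tries; the first one to write the lease becomes active.
print([try_acquire_or_renew(lease, r, now=0.0) for r in replicas])
# t=10: the active replica renews; the standbys are still rejected.
print([try_acquire_or_renew(lease, r, now=10.0) for r in replicas])
# t=40: master01 has stopped renewing, so the lease has expired and a standby takes over.
print([try_acquire_or_renew(lease, r, now=40.0) for r in replicas[1:]])
print(lease.holder)
```

In this model a standby replica does no work until the lease expires. That matches the warm standby description above: the standby processes are already running and only need to win the lease to become active.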

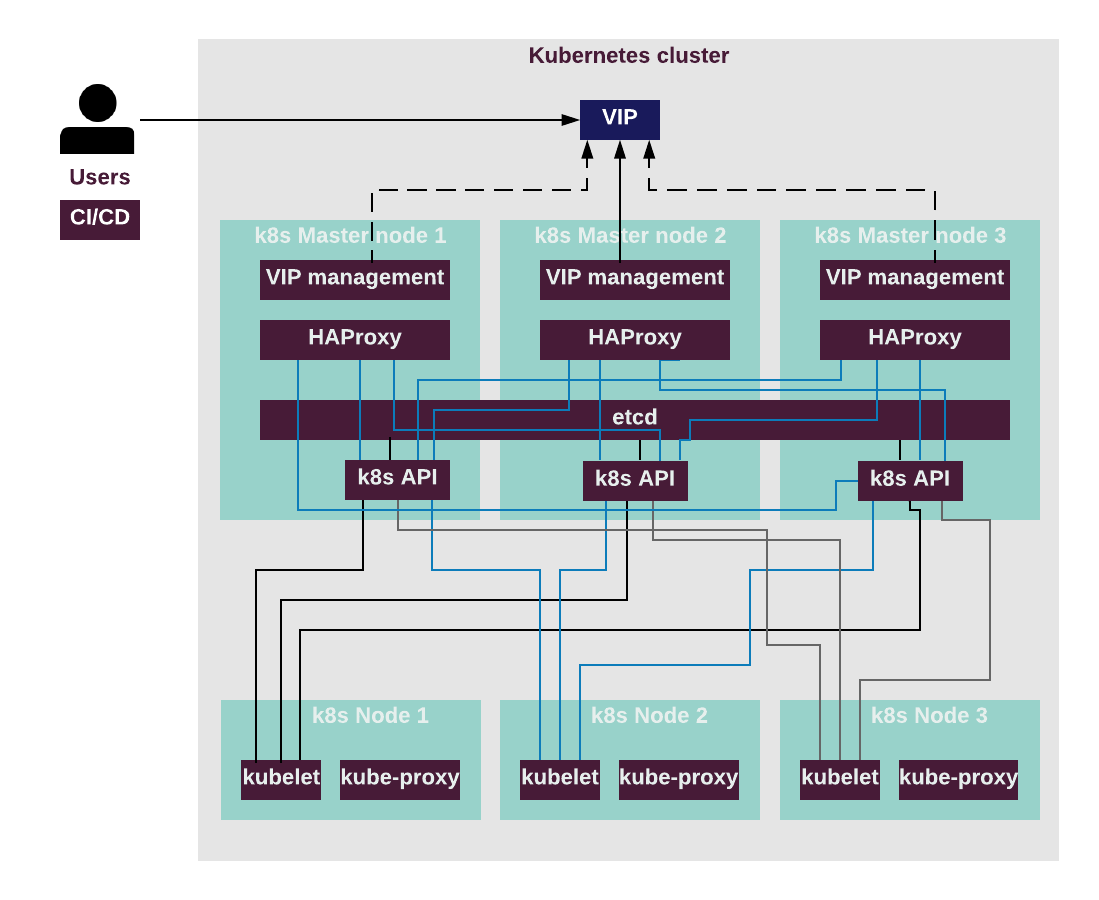

The following diagram describes the API flow in a highly available Kubernetes cluster where each API instance on every Kubernetes Master node interacts with each HAProxy instance, etcd cluster, and each kubelet instance on the Kubernetes Nodes.

High availability of the proxy server is ensured by HAProxy. HAProxy provides access to the Kubernetes API endpoint by redirecting requests to instances of kube-apiserver in a round-robin fashion. The proxy server sends API traffic only to available backends, which prevents the traffic from going to unavailable nodes. The Keepalived daemon provides VIP management for the proxy server. Optionally, SSL termination can be configured on HAProxy, so that the traffic to kube-apiserver instances goes over the internal Kubernetes network.
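The round-robin dispatch with exclusion of unavailable backends can be illustrated with a short Python sketch. It only models the selection logic and is not how HAProxy is implemented or configured. The `round_robin` function, the backend addresses, and the port are hypothetical, and the `healthy` dictionary stands in for the result of health checks.

```python
from itertools import cycle
from typing import Dict, Iterator, List


def round_robin(backends: List[str], healthy: Dict[str, bool]) -> Iterator[str]:
    """Yield backends in rotation, skipping any that are currently marked unhealthy."""
    for backend in cycle(backends):
        if not any(healthy[b] for b in backends):
            raise RuntimeError("no kube-apiserver backend is available")
        if healthy[backend]:
            yield backend


# Hypothetical kube-apiserver addresses, one per Kubernetes Master node.
backends = ["10.0.0.11:6443", "10.0.0.12:6443", "10.0.0.13:6443"]
healthy = {b: True for b in backends}  # stand-in for health check results
picker = round_robin(backends, healthy)

# With all backends available, requests rotate through every instance.
print([next(picker) for _ in range(4)])

# Once a backend is marked unavailable, it no longer receives traffic.
healthy["10.0.0.12:6443"] = False
print([next(picker) for _ in range(4)])
```

Because the health state is consulted on every pick, a backend that recovers rejoins the rotation as soon as it is marked healthy again.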