MCP Reference Architecture Q4`18 documentation

MCP Reference Architecture¶

Note

The MCP Reference Architecture guide now includes the updated content from the MCP Standard Configuration document that has been removed from the MCP documentation set.

Preface¶

This documentation provides information on how to use Mirantis products to deploy cloud environments. The information is for reference purposes and is subject to change.

Intended audience¶

This documentation is intended for deployment engineers, system administrators, and developers; it assumes that the reader is already familiar with network and cloud concepts.

Documentation history¶

The following table lists the released revisions of this documentation:

| Revision date | Description |

|---|---|

| February 8, 2019 | Q4`18 GA |

Introduction¶

Mirantis product is Mirantis Cloud Platform (MCP). This is a software product that is installed on bare metal servers in a datacenter and provides virtualization cloud capabilities to the end users of the platform. MCP also includes deployment and lifecycle management (LCM) tools that enable cloud operators to deploy and update the Mirantis Cloud Platform using automated integration and delivery pipeline.

MCP capabilities¶

Mirantis Cloud Platform (MCP) provides two broad categories of capabilities to two distinct groups of users:

- Cloud operators

Users from this category are engineers responsible for operations of the cloud platform. They are interested in stability of the platform, reliable life cycle operations, and timely update of the platform software.

- Tenant users

Users from this category run workloads on the cloud platform using interfaces provided by the platform. They need to understand what types of virtual resources are available on the cloud platform, how to utilize them, and what are the limitations of the platform interfaces.

Cloud operators and administrators can use MCP to manage the following elements of the cloud platform infrastructure:

- Physical infrastructure

Hardware servers, host operating system.

- Cloud platform software

Hypervisors, control plane services, identity information.

- Network configuration

Host networking, IP routing, filtering, and VPN.

Tenant users can use MCP to manage the following resources provided by the cloud platform:

- Virtual infrastructure

Virtual server instances and/or containers, virtual networks and resources, virtual storage, and tenants identity information.

- Applications running on the infrastructure

Any workloads that run in the virtual infrastructure using resources of physical infrastructure agnostically through virtualization mechanisms.

MCP design¶

Mirantis Cloud Platform provides capabilites described above as functions of its software components.

DriveTrain¶

DriveTrain is code name for the MCP LCM framework that includes Gerrit, Jenkins, MCP Registry, SaltStack, Reclass, and metadata model. The DriveTrain components perform the following functions:

- SaltStack

Flexible and scalable deployment and configuration management and orchestration engine that is used for automated lifecycle management of MCP clusters.

- Reclass

Reclass is an External Node Classifier (ECN) that, coupled with SaltStack, provides an inventory of nodes for easy configuration management.

- Reclass metadata model

The metadata model is a hierarchical file based store that allows to define all parameter values used by Salt to configure services of MCP. The model hierarchy is merged and exposed to Salt through the Reclass ENC.

- Gerrit

Git repository and code review management system in which all MCP codebase and the metadata model are stored and through which all changes to MCP clusters are delivered.

- Jenkins

Build automation tool that, coupled with Gerrit, enables continuous integration and continuous delivery of updates and upgrades to the MCP clusters.

- MCP Registry

A set of repositories with binary artifacts required for MCP cluster deployment and functioning. This is a local mirror of Registry published by Mirantis from its product delivery infrastructure.

- MAAS

Metal-as-a-Service (MAAS) is a provisioning software that allows you to manage physical machines.

- OpenLDAP

OpenLDAP server stores and provides identity information for other components of DriveTrain and, optionally, for MCP clusters.

MCP clusters¶

Using DriveTrain, you can deploy and manage multiple MCP clusters of different types. MCP clusters provide certain Operator and Tenant functions, as described below.

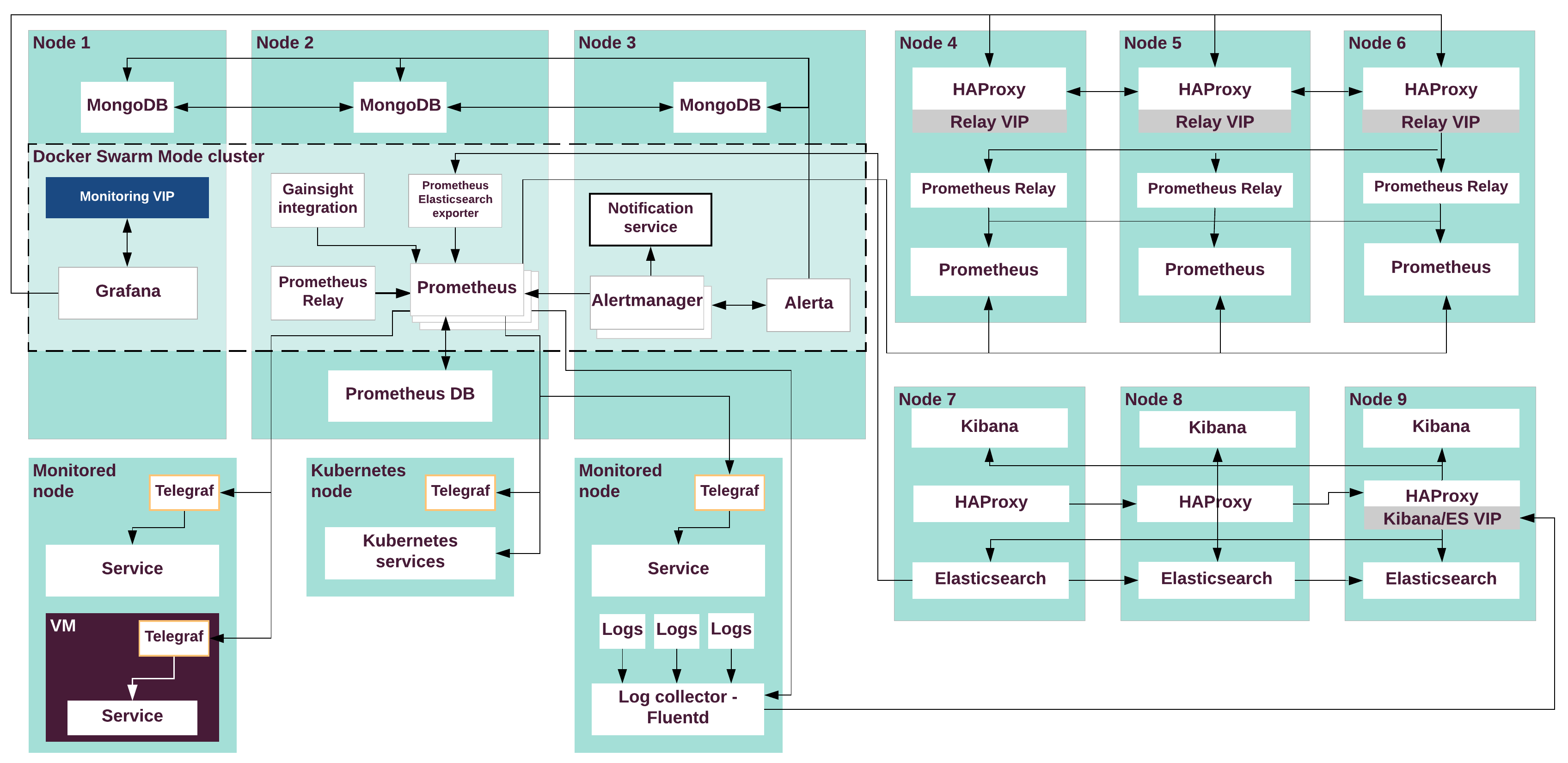

- StackLight Logging, Monitoring, and Alerting (LMA)

Responsible for collection, analysis, and visualization of critical monitoring data from physical and virtual infrastructure, as well as alerting and error notifications through a configured communication system, such as email.

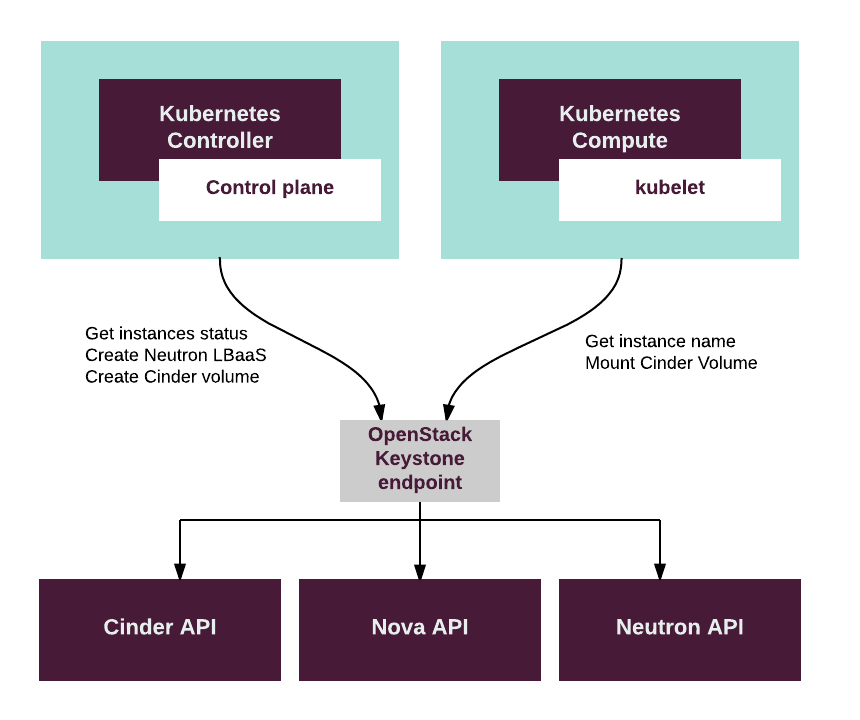

- OpenStack

Platform that manages virtual infrastructure resources, including virtual servers, storage devices, networks and networking services such as load balancers, and provides management functions to Tenant users.

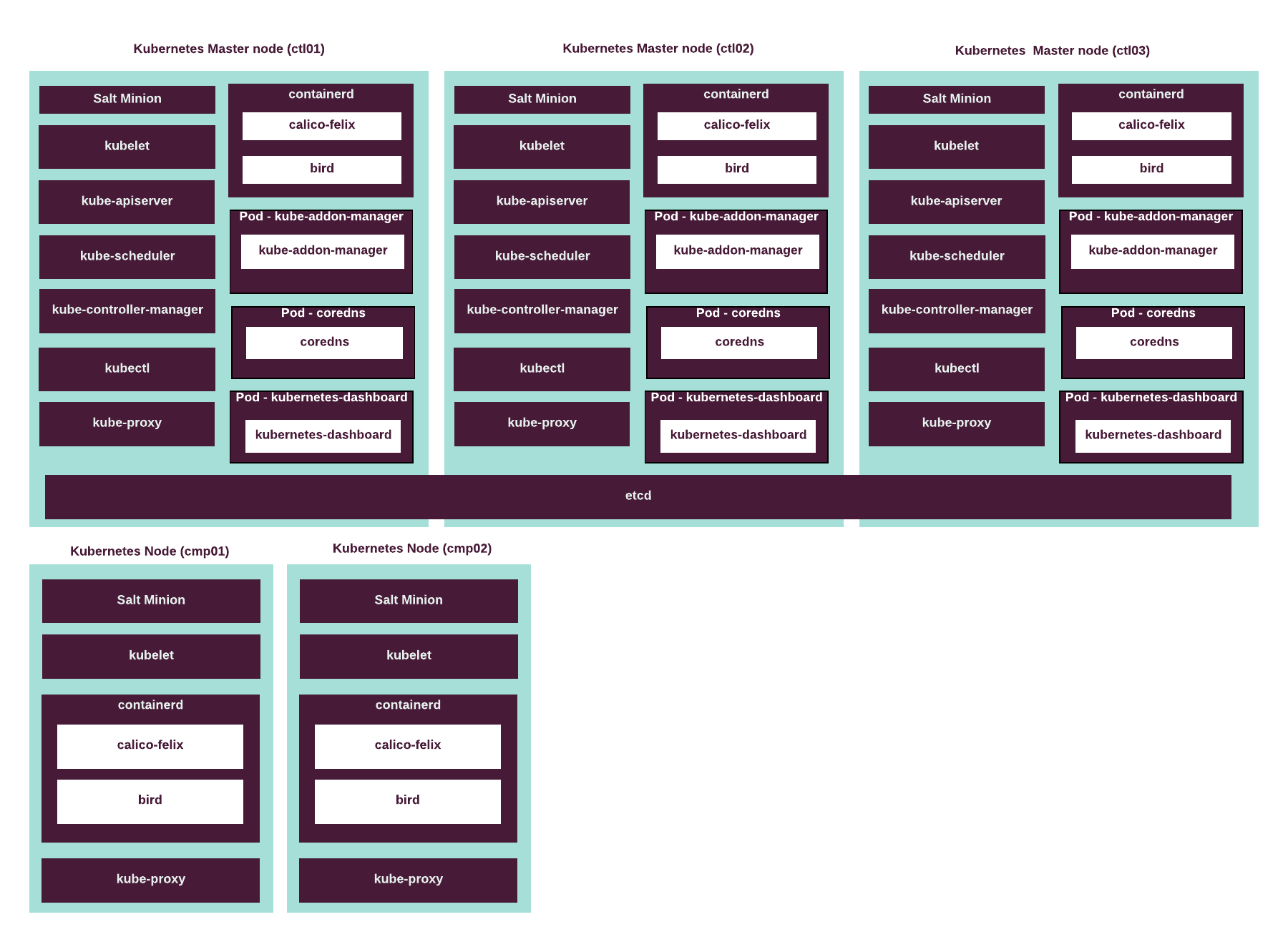

- Kubernetes support terminated since 2019.2.5

Platform that manages virtual infrastructure resources, including container images, pods, storage and networking resources for containerized applications.

- Ceph

Distributed storage platform that provides storage resources, such as objects and virtual block devices, to virtual and physical infrastructure.

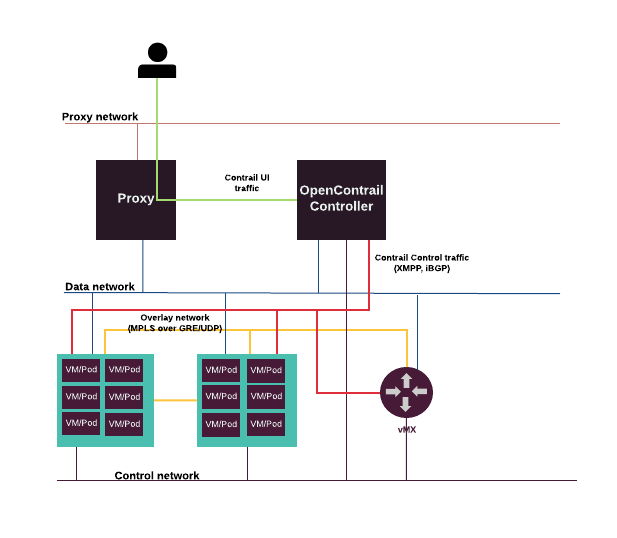

- OpenContrail (optional)

MCP enables you to deploy OpenContrail as a software-defined networking solution. MCP OpenContrail is based on official OpenContrail releases with additional customizations by Mirantis.

Note

If you run MCP OpenContrail SDN, you need to have Juniper MX or SRX hardware or virtual router to route traffic to and from OpenStack tenant VMs.

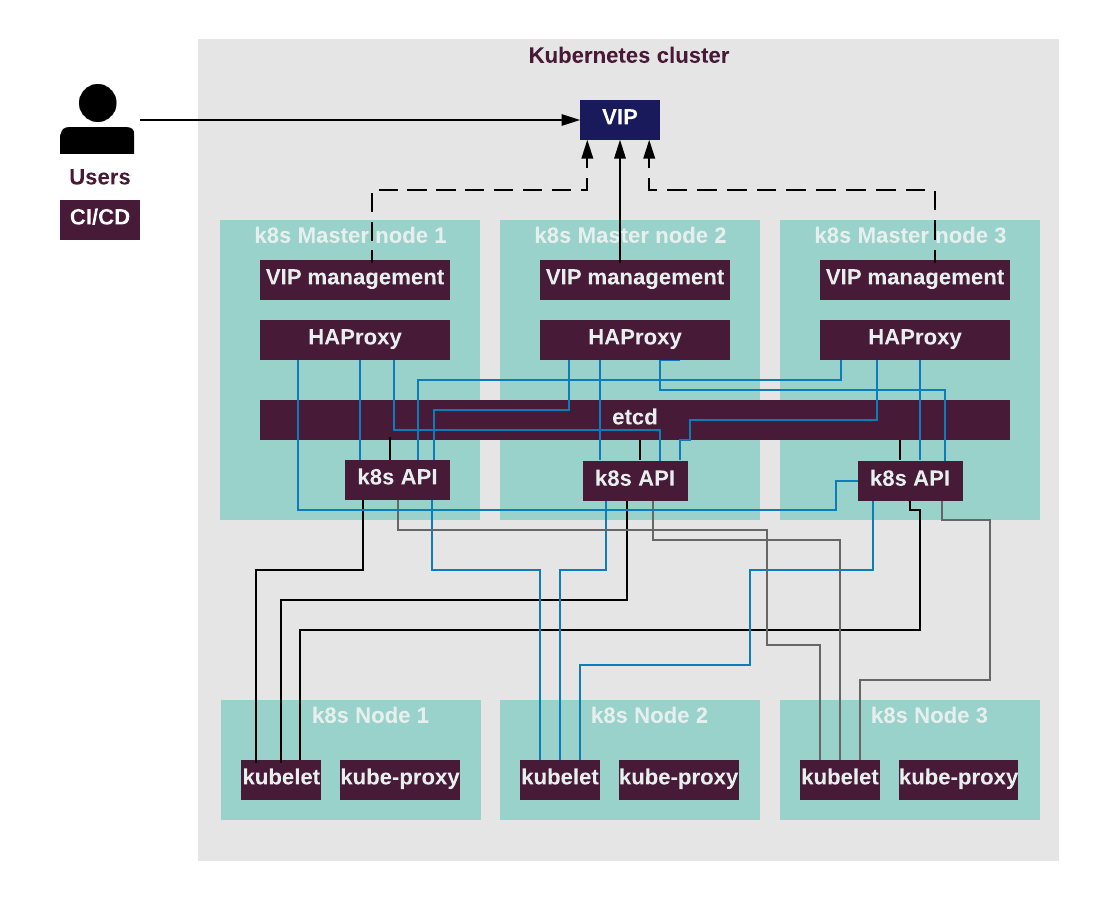

- High Availability

In MCP, the high availability of control plane services is ensured by Keepalived and HAProxy. Keepalived is a Linux daemon that provides redundancy for virtual IP addresses. HAProxy provides load balancing for network connections.

Cloud infrastructure¶

A cloud infrastructure consists of the physical infrastructure, network configuration, and cloud platform software.

In large data centers, the cloud platform software required for managing user workloads runs on separate servers from where the actual workloads run. The services that manage the workloads coupled with the hardware on which they run are typically called the control plane, while the servers that host user workloads are called the data plane.

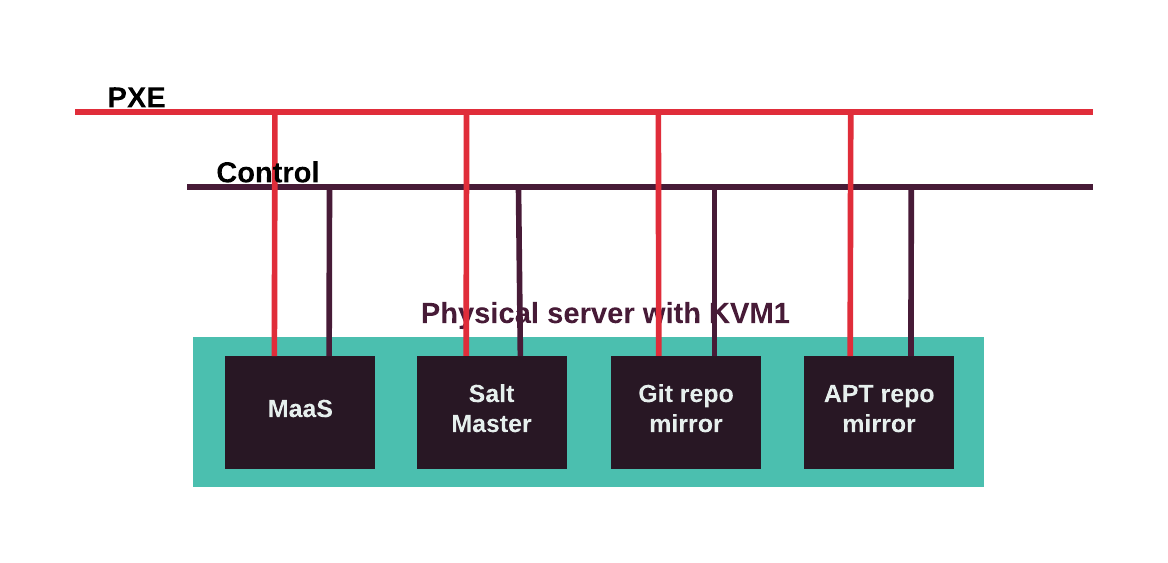

In MCP, the control plane is hosted on the infrastructure nodes. Infrastructure nodes run all the components required for deployment, lifecycle management, and monitoring of your MCP cluster. A special type of infrastructure node called the foundation node, in addition to other services, hosts a node that runs the bare-metal provisioning service called MAAS and the Salt Master service that provides infrastructure automation.

MCP employs modular architecture approach by using the Reclass model to describe configuration and distribution of services across the infrastructure nodes. This allows the product to arrange the same services into different configurations depending on the use case.

Infrastructure management capabilities¶

MCP provides the following infrastructure management capabilities to cloud operators and administrators:

- Install MCP and its components on bare metal infrastructure.

- Update components of MCP to improve existing capabilities and get security or other fixes.

- Upgrade cloud platform components and other components of MCP installation to gain new capabilities.

- Add, remove, and replace elements of the control plane and data plane physical and virtual infrastructure, including hypervisor servers and servers that host control plane services.

- Configure bare metal servers, including disk and network settings, operating system, and IP routing.

- Collect and expose metrics and logs from the infrastructure.

- Generate alerts and notifications about events in the infrastructure.

- Deploy distributed massively-scaled shared storage (Ceph) and attach it to a cloud in order to provide reliable storage to virtual machines.

Deployment and lifecycle management automation¶

MCP utilizes the Infrastructure-as-Code concept for deployment and lifecycle management of a cloud datacenter. In this concept, all infrastructure elements are described in definition files. Changes in the files are reflected in the configuration of datacenter hosts and cloud services.

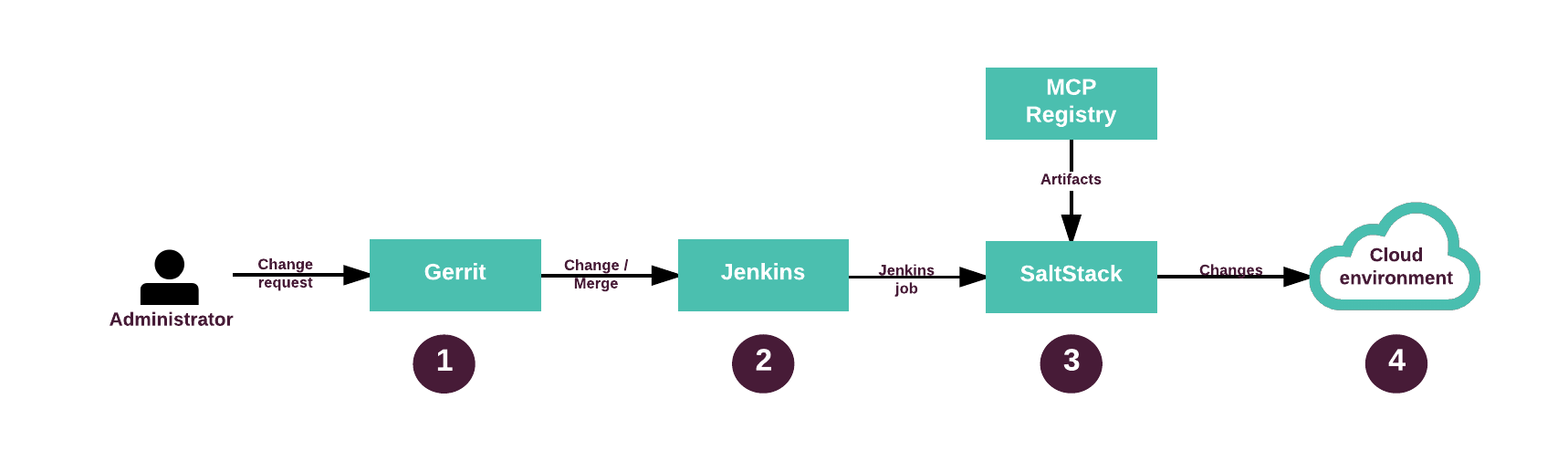

DriveTrain is the lifecycle management (LCM) engine of MCP. It allows cloud operators to deploy and manage MCP clusters.

DriveTrain implements an opinionated approach to Infrastructure-as-Code. Cloud operators can use DriveTrain to describe their infrastructures as declarative class-based metadata model. Changes in the model parameters are applied through DriveTrain LCM orchestration.

The LCM orchestration is handled by Groovy pipelines executed by the Jenkins server. The configuration management is provided by Salt formulas executed by the SaltStack agents (minions).

LCM pipeline overview¶

DriveTrain implements lifecycle management (LCM) operations as Jenkins pipelines. For the list of the components of DriveTrain, see MCP design.

The following diagram describes the workflow of the DriveTrain LCM pipeline:

| # | Description |

|---|---|

| 1 | An operator submits changes to the cluster metadata model in Gerrit for review and approval. |

| 2 | Depending on your configuration and whether you have a staging environment or deploy changes directly to a production MCP cluster, the workflow might slightly differ. Typically, with a staging MCP cluster, you trigger a deployment job in Jenkins before merging the change. This allows you to verify it before promoting to production. However, if you deploy an MCP cluster onto production, you might want to approve and merge the change first. |

| 3 | Jenkins job invokes the required SaltStack formulas and Reclass models from Gerrit and artifacts from the MCP Registry. |

| 4 | SaltStack applies changes to the cloud environment. |

See also

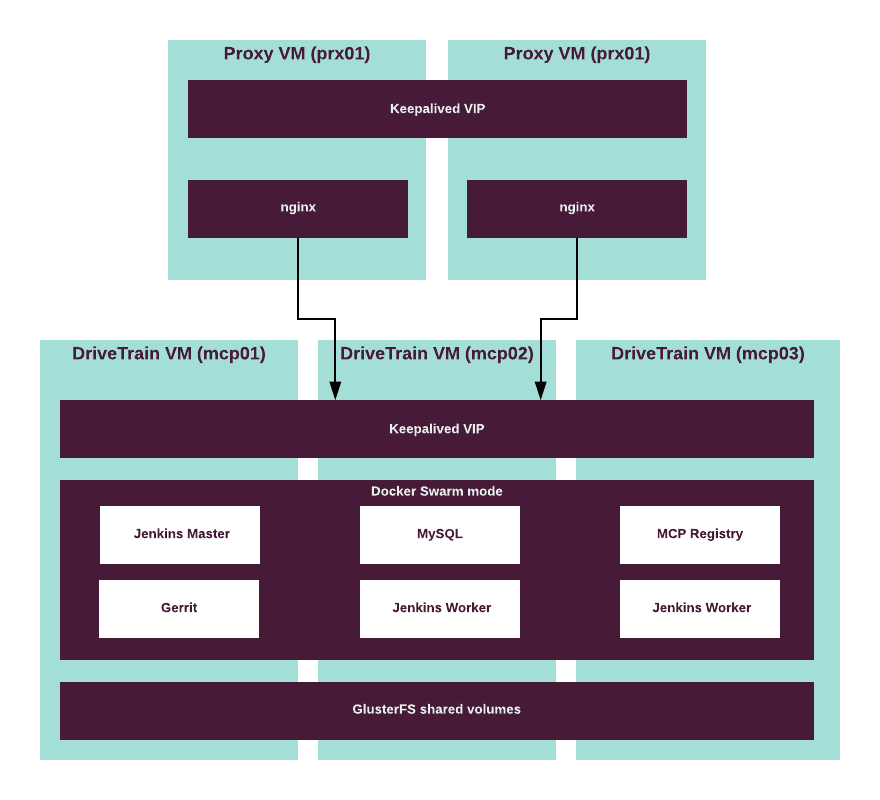

High availability in DriveTrain¶

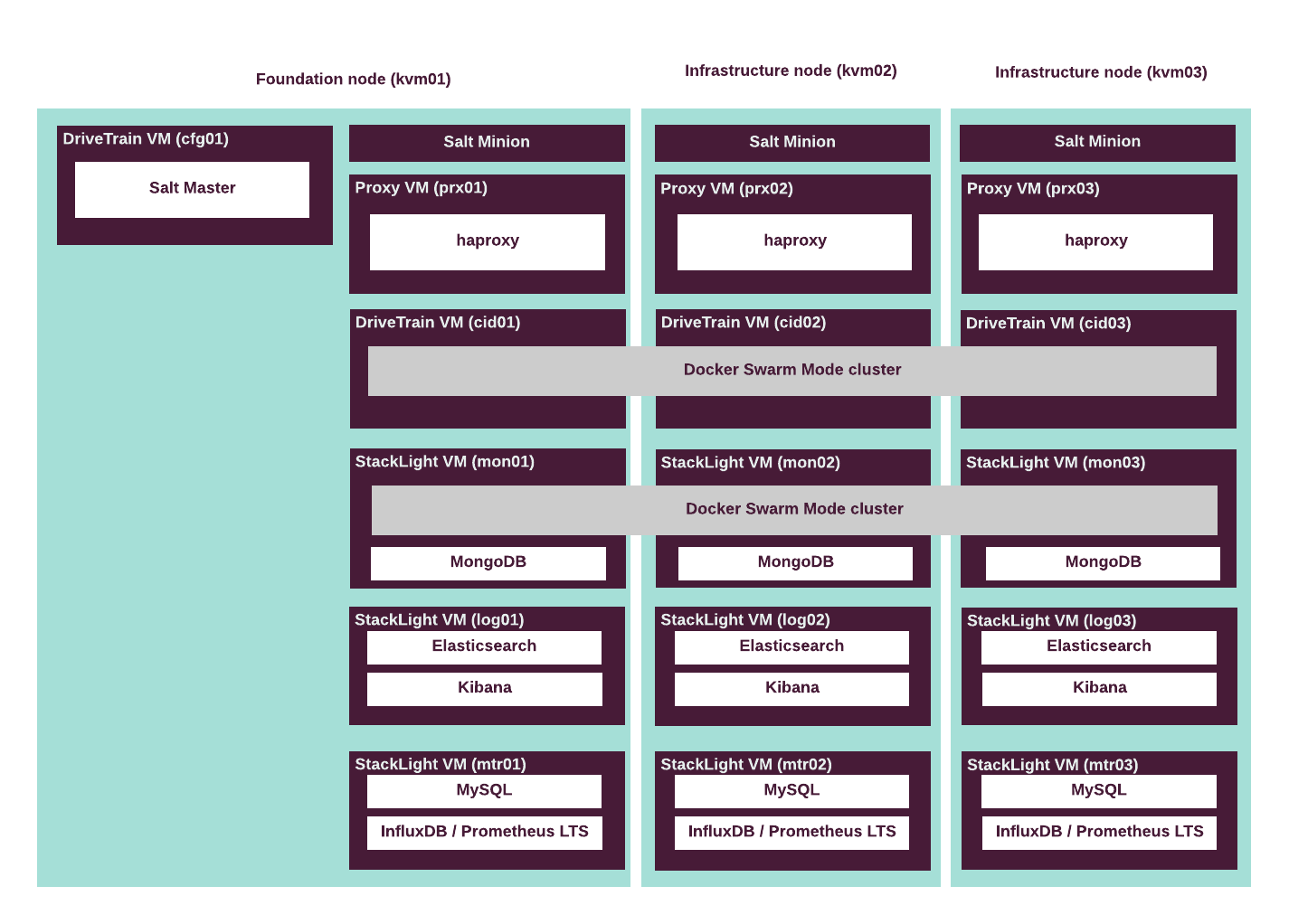

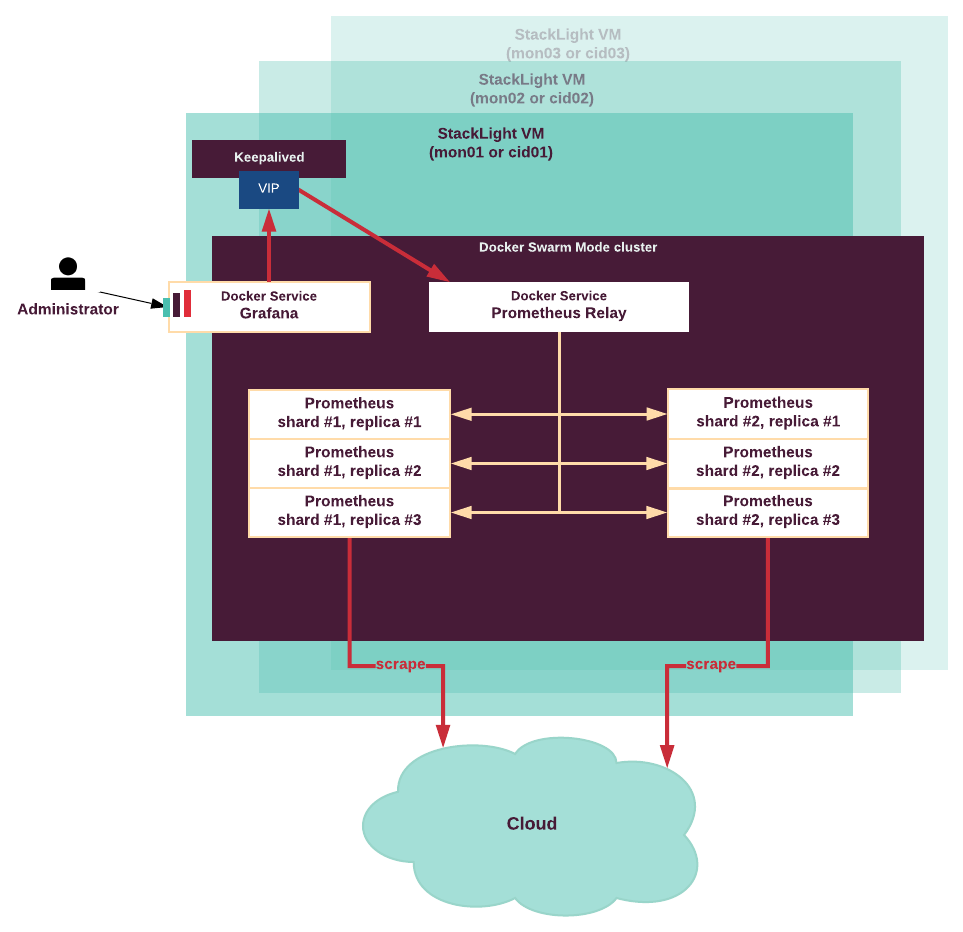

DriveTrain is the integration framework for the MCP product. Therefore, its continuous availability is essential for the MCP solution to function properly. Although you can deploy DriveTrain in the single node Docker Swarm mode for testing purposes, most production environments require a highly-available DriveTrain installation.

All DriveTrain components run as containers in Docker Swarm mode cluster which ensures services are provided continuously without interruptions and are susceptible to failures.

The following components ensure high availability of DriveTrain:

- Docker Swarm mode is a special Docker mode that provides

Docker cluster management. Docker Swarm cluster ensures:

- High availability of the DriveTrain services. In case of failure on any infrastructure node, Docker Swarm reschedules all services to other available nodes. GlusterFS ensures the integrity of persistent data.

- Internal network connectivity between the Docker Swarm services through the Docker native networking.

- Keepalived is a routing utility for Linux that provides a single point of entry for all DriveTrain services through a virtual IP address (VIP). If the node on which the VIP is active fails, Keepalived fails over the VIP to other available nodes.

- nginx is web-server software that exposes the DriveTrain service’s APIs that run in a private network to a public network space.

- GlusterFS is a distributed file system that ensures the integrity of the MCP Registry and Gerrit data by storing the data in a shared storage on separate volumes. This ensures that persistent data is preserved during the failover.

The following diagram describes high availability in DriveTrain:

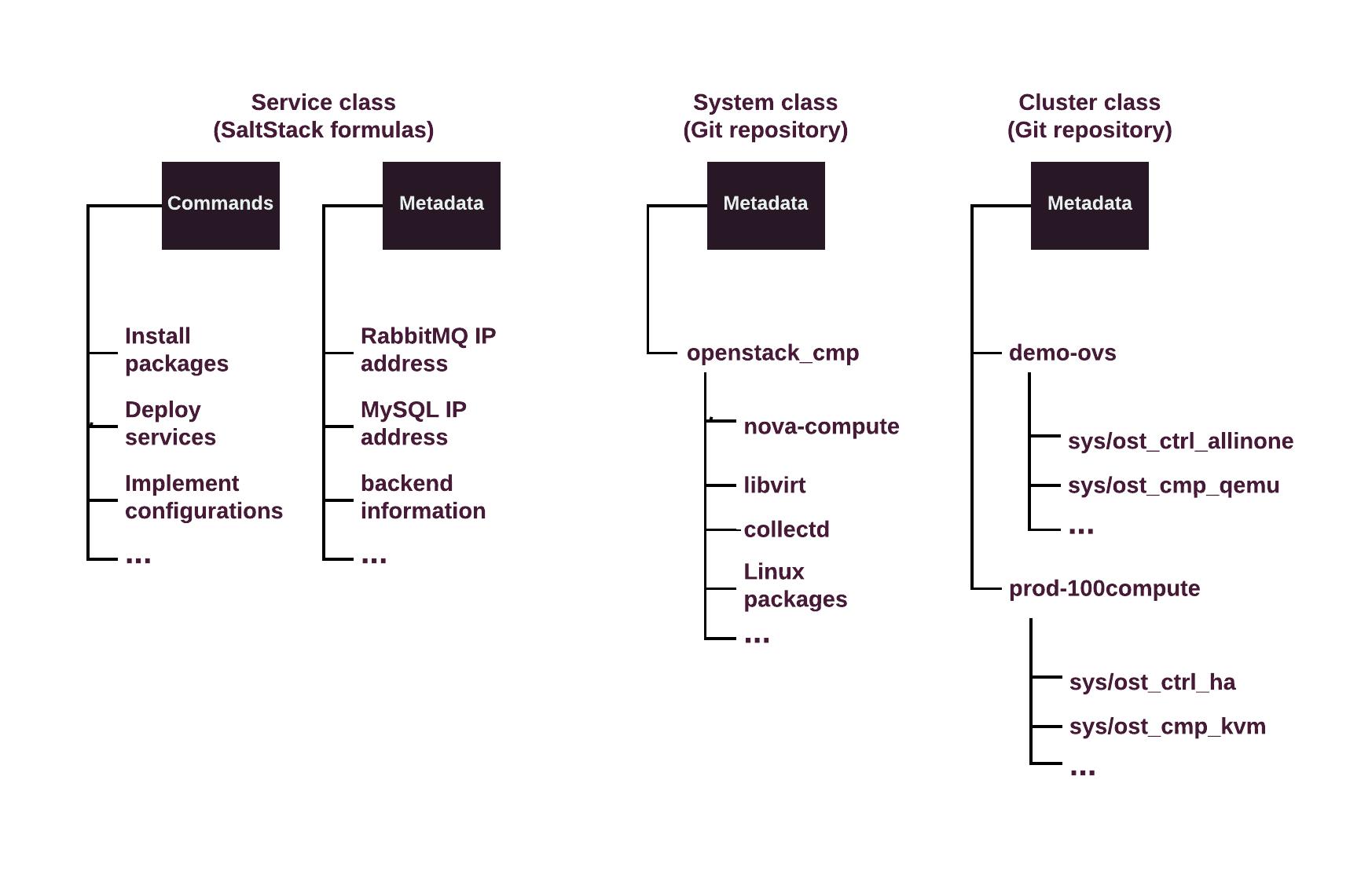

SaltStack and Reclass metadata model¶

SaltStack is an automation tool that executes formulas. Each SaltStack formula defines one component of the MCP cluster, such as MySQL, RabbitMQ, OpenStack services, and so on. This approach enables MCP product developers to combine the components as needed so that services do not interfere with each other and can be reused in multiple scenarios.

Reclass is an external node classifier (ENC) which enables cloud operators to manage an inventory of nodes by combining different classes into MCP cluster configurations. Reclass operates classes which you can view as tags or categories of metadata parameters.

The metadata model itself consists of hierarchically structured classes and corresponding parameters.

The following diagram displays the Mirantis Reclass metadata model’s hierarchy of classes:

| Service class | System class | Cluster class |

|---|---|---|

A service class defines one service, or a group of related services, and the most specific configuration parameters for them. The parameters in this layer of the metadata model are translated directly into values in the configuration files for the corresponding service, and so on. The service classes are provided by and match the Salt formulas installed onto the Salt Master node. A metadata parameter value defined in one of the service classes might be overridden by values from higher levels in the hierarchy, which include the system and cluster levels. |

A system class defines a role (with different granularity) that is applied to a node, logical or physical. System classes typically include and combine service classes and other system classes in a way to describe completely configured, integrated, and ready-to-use system. The system classes are distributed as a Git repository. The repository is copied to the Salt Master node during the bootstrap of DriveTrain. A metadata parameter value set in a system class could be overridden by the values from a higher level in the hierarchy, which is the cluster level. |

A cluster class defines configuration of a specific MCP cluster. This kind of classes can combine system classes according to the architecture of the cluster. A cluster metadata model is typically generated using the automation pipeline that is executed by DriveTrain Jenkins. This pipeline uses Cookiecutter as a templating tool to generate the cluster model. The cluster metadata model is distributed as a Git repository. |

Infrastructure nodes overview¶

Infrastructure nodes are the physical machines that run all required services for the MCP cluster deployment, lifecycle management, and monitoring, also known as control plane services.

The exact number of the infrastructure nodes in each MCP environment and distribution of the MCP components across the infrastructure nodes depend on the use case and are defined in the deployment model.

The MCP Cloud Provider Reference Configuration requires 9 infrastructure nodes to run control plane services. See OpenStack compact cloud for details.

Note

You can use either Neutron or OpenContrail SDN, but not both at the same time.

Infrastructure nodes disk layout¶

Infrastructure nodes are typically installed on hardware servers. These servers run all components of management and control plane for both MCP and the cloud itself. It is very important to configure hardware servers properly upfront because changing their configuration after initial deployment is costly.

For instructions on how to configure the disk layout for MAAS to provision the hardware machines, see MCP Deployment Guide: Add a custom disk layout per node in the MCP model.

Consider the following recommendations:

- Layout

- Mirantis recommends using the

LVMlayout for disks on infrastructure nodes. This option allows for more operational flexibility, such as resizing the Volume Groups and Logical Volumes for scale-out. - LVM Volume Groups

According to Hardware requirements for Cloud Provider Infrastructure, an infrastructure node typically has two or more SSD disks. These disks must be configured as LVM Physical Volumes and joined into a Volume Group.

The name of the Volume Group is the same across all infrastructure nodes to ensure consistency of LCM operations. Mirantis recommends following the

vg_<role>naming convention for the Volume Group. For example,vg_root.- LVM Logical Volumes

The following table summarizes the recommended Logical Volume schema for infrastructure nodes in the CPI reference architecture. The

/var/lib/libvirt/images/size may be adjusted to the size of all VMs hosted on the node depending on the VCP VMs size. The disk size for a large deployment may require more that 3 TB for StackLight LMA and OpenContrail.Follow the instructions in the MCP Deployment Guide to configure infrastructure nodes in your cluster model.

| Server role | Server names | Logical Volume path | Mount point | Size |

|---|---|---|---|---|

| All roles | kvm01 - kvm09 |

/dev/vg_root/lv_root |

'/' |

50 GB |

| VCP infrastructure | kvm01, kvm02, kvm03 |

/dev/vg_root/lv_gluster |

/srv/glusterfs |

200 GB |

| VCP infrastructure | kvm01, kvm02, kvm03 |

/dev/vg_root/lv_mcp_images |

/var/lib/libvirt/images |

1200 GB |

| StackLight LMA | kvm04, kvm05, kvm06 |

/dev/vg_root/lv_mcp_images |

/var/lib/libvirt/images |

5500 GB |

| Tenant gateway | kvm07, kvm08, kvm09 |

/dev/vg_root/lv_mcp_images |

/var/lib/libvirt/images |

700 GB |

Hardware requirements for Cloud Provider Infrastructure¶

The reference architecture for MCP Cloud Provider Infrastructure (CPI) use case requires 9 infrastructure nodes to run the control plane services.

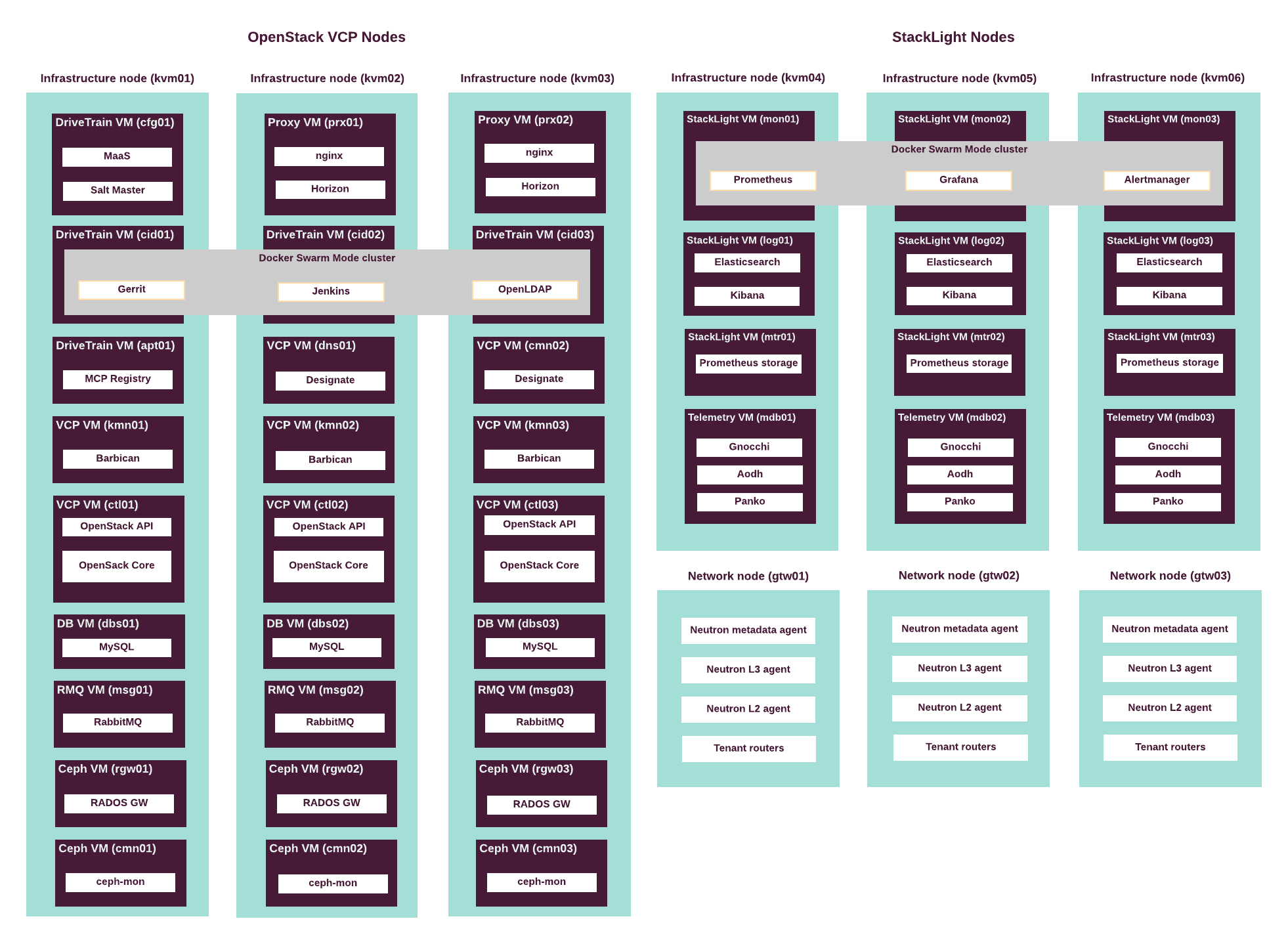

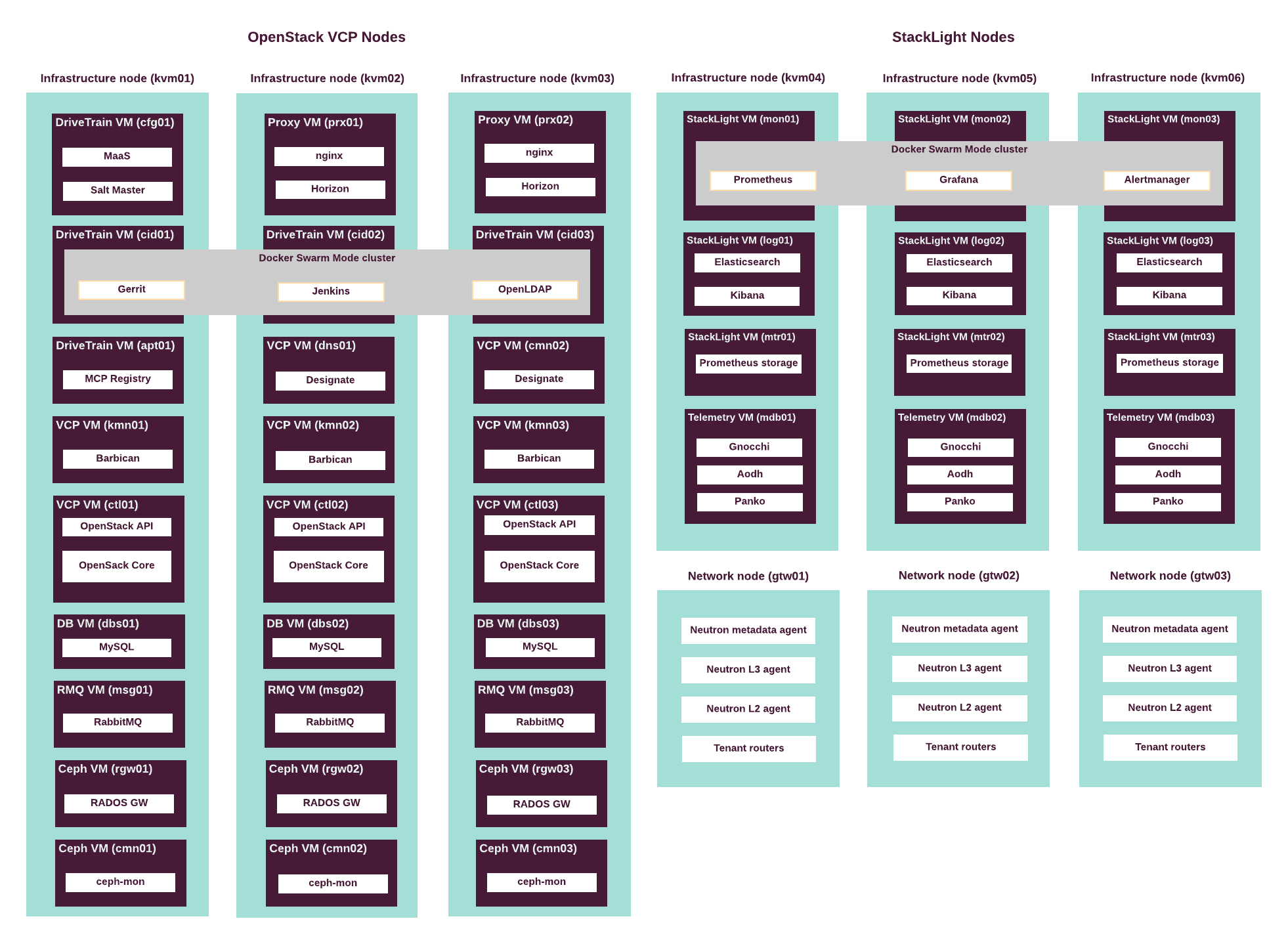

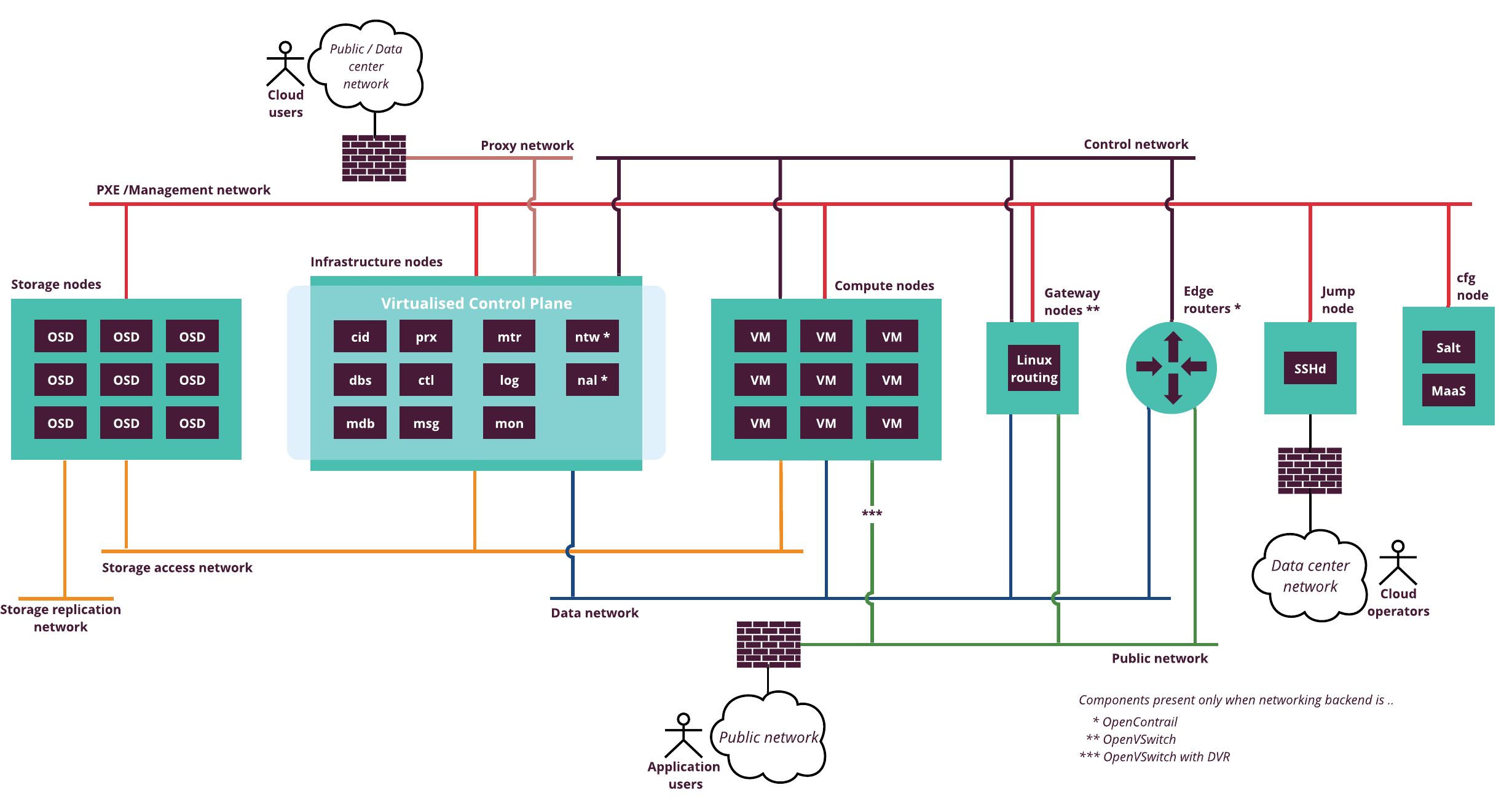

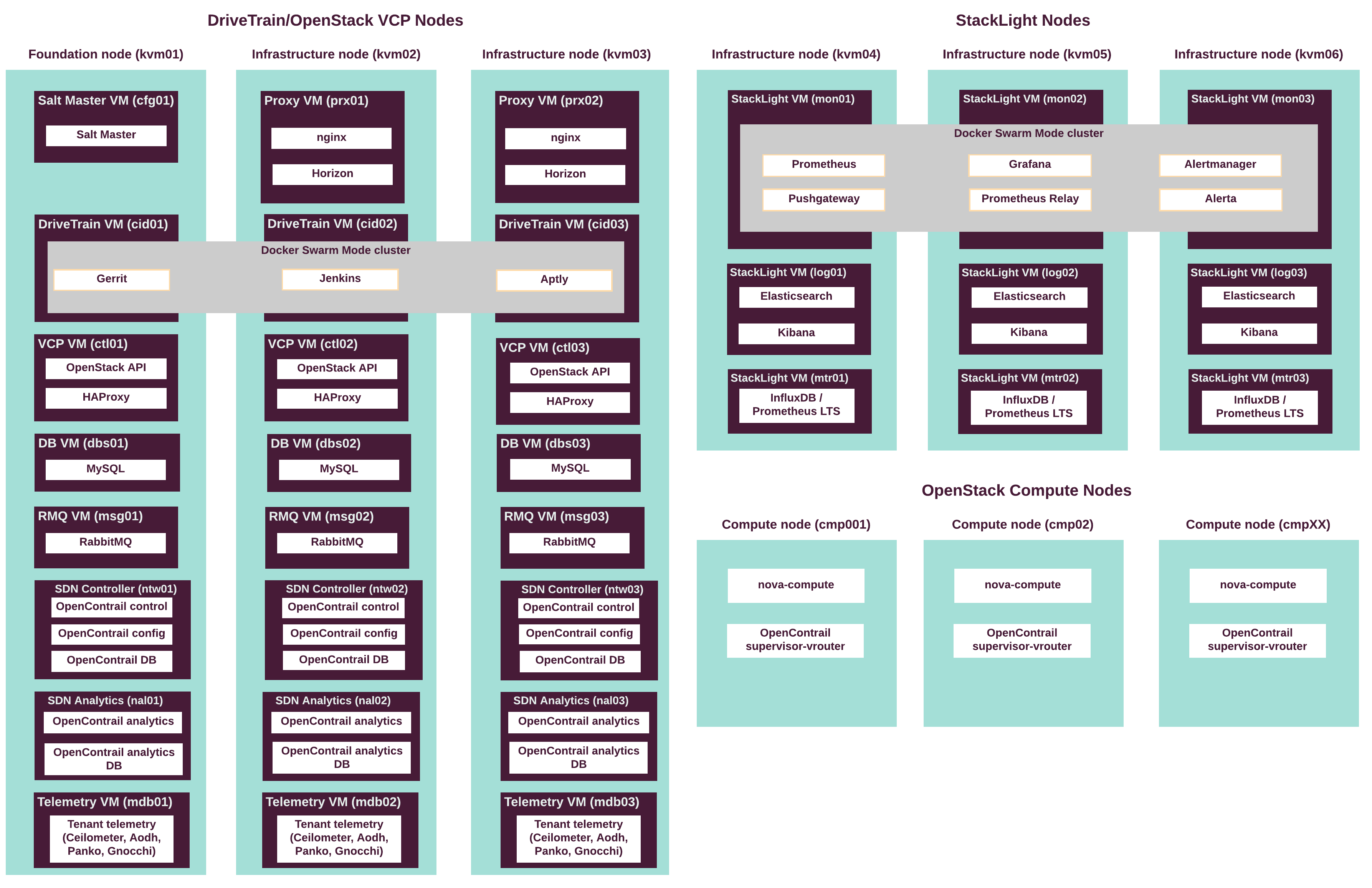

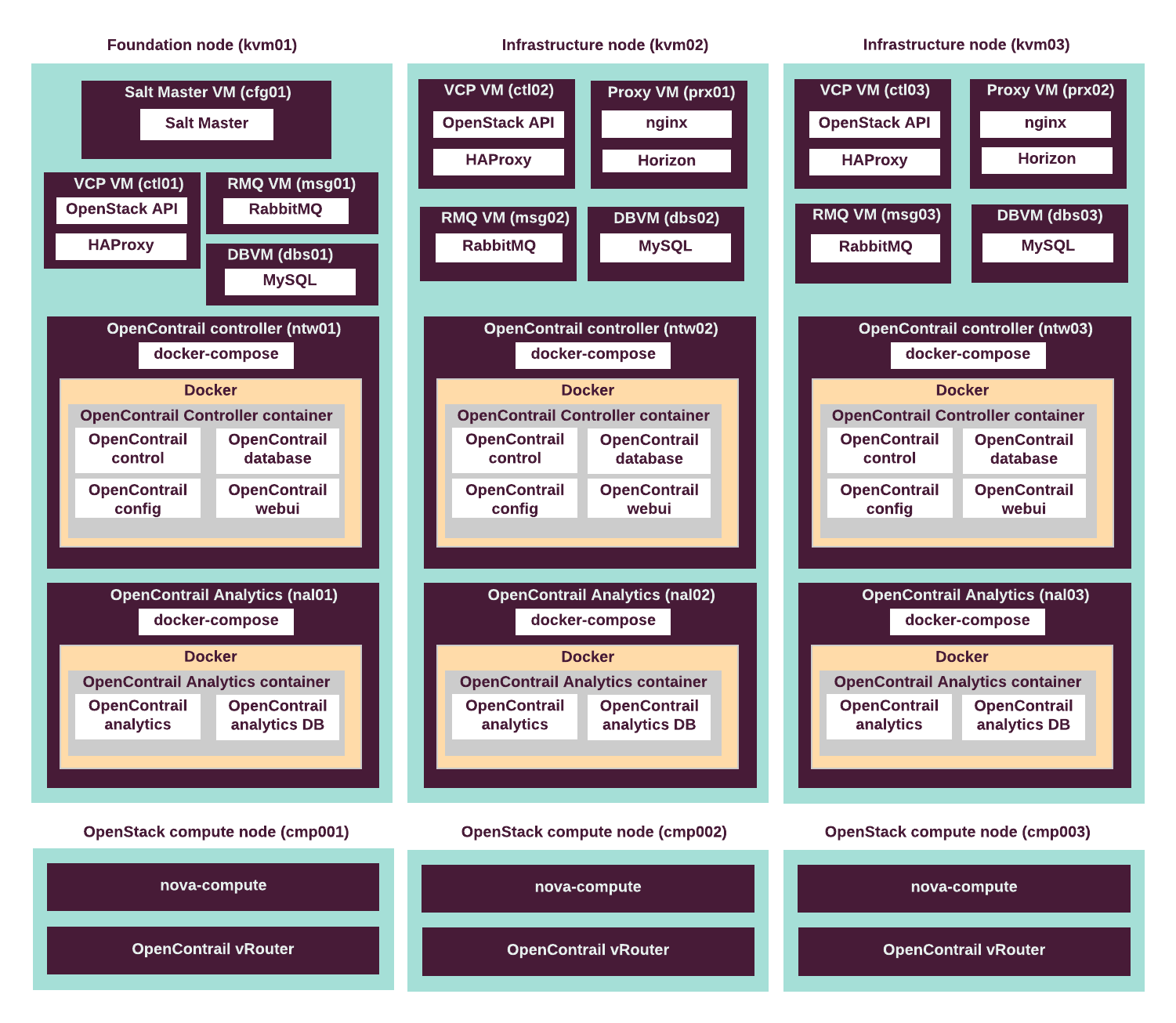

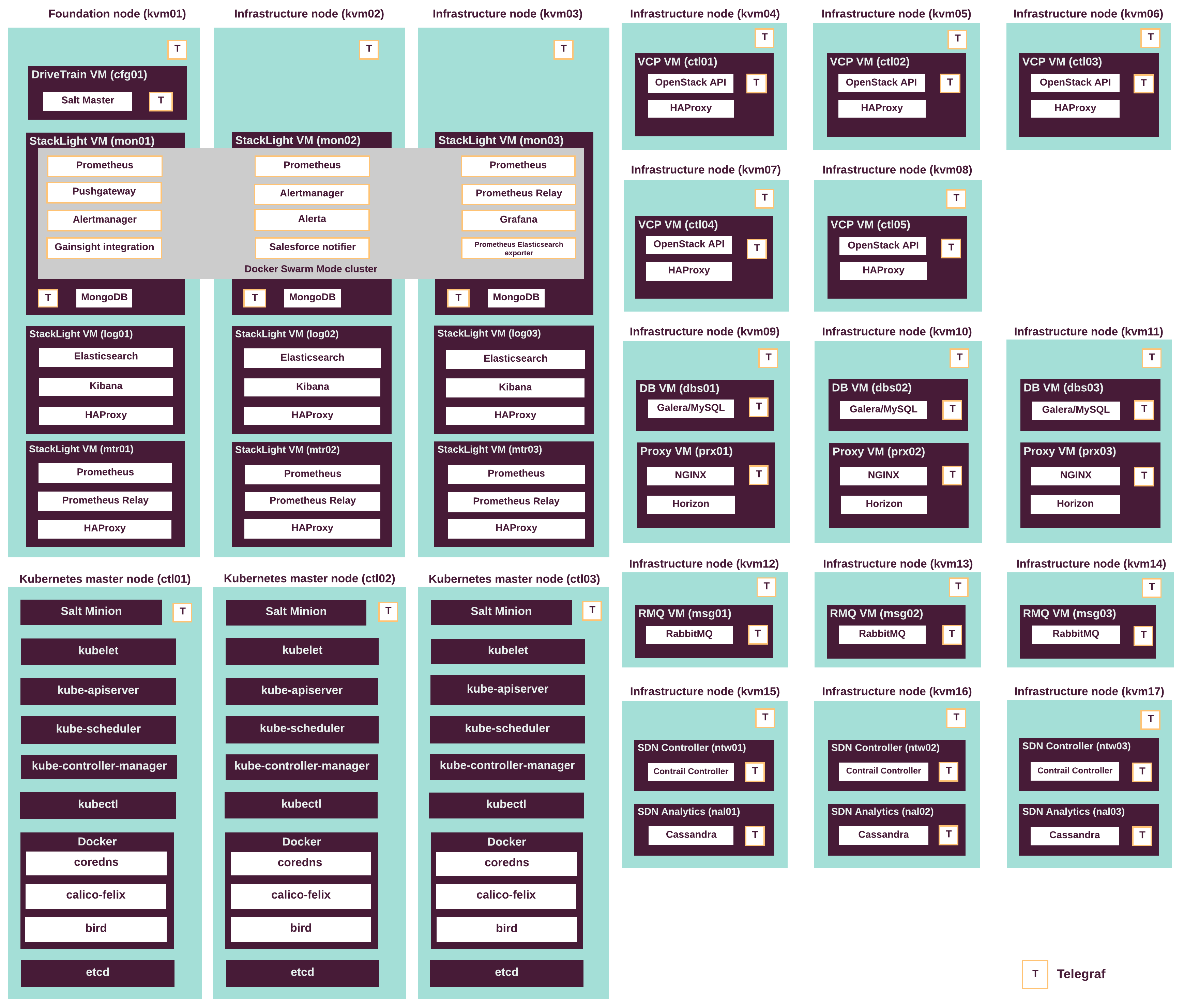

The following diagram displays the components mapping of the infrastructure nodes in the CPI reference architecture.

Hardware requirements for the CPI reference architecture are based on the capacity requirements of the control plane virtual machines and services. See details in Virtualized control plane layout.

The following table summarizes the actual configuration of the hardware infrastucture nodes used by Mirantis to validate and verify the CPI reference architecture. Use it as a reference to plan the hardware bill of materials for your installation of MCP.

| Server role | Servers number | Server model | CPU model | CPUs number | vCores number | RAM, GB | Storage, GB | NIC model | NICs number |

|---|---|---|---|---|---|---|---|---|---|

| Infrastructure node (VCP) | 3 | Supermicro SYS-6018R-TDW | Intel E5-2650v4 | 2 | 48 | 256 | 1900 [1] | Intel X520-DA2 | 2 |

| Infrastructure node (StackLight LMA) | 3 | Supermicro SYS-6018R-TDW | Intel E5-2650v4 | 2 | 48 | 256 | 5700 [2] | Intel X520-DA2 | 2 |

| Tenant gateway | 3 | Supermicro SYS-6018R-TDW | Intel E5-2620v4 | 1 | 16 | 96 | 960 [3] | Intel X520-DA2 | 2 |

| Compute node | 50 to 150 | Supermicro SYS-6018R-TDW | [4] | [4] | [4] | [4] | 960 [3] [5] | Intel X520-DA2 | 2 |

| Ceph OSD | 9+ [6] | Supermicro SYS-6018R-TDW | Intel E5-2620v4 | 1 | 16 | 96 | 960 [3] [7] | Intel X520-DA2 | 2 |

| [1] | One SSD, Micron 5200 MAX or similar. |

| [2] | Three SSDs, 1900 GB each, Micron 5200 MAX or similar. |

| [3] | (1, 2, 3) Two SSDs, 480 GB each, WD Blue 3D (WDS500G2B0A) or similar. |

| [4] | (1, 2, 3, 4) Depends on capacity requirements and compute planning. See details in Compute nodes planning. |

| [5] | Minimal system storage. Additional storage for virtual server instances might be required. |

| [6] | Minimal recommended number of Ceph OSD nodes for production deployment is 9. See details in Additional Ceph considerations. |

| [7] | Minimal system storage. Additional devices are required for Ceph storage, cache, and journals. For more details on Ceph storage configuration, see Ceph OSD hardware considerations. |

Note

RAM capacity of this hardware configuration includes overhead

for GlusterFS servers running on the infrastructure nodes

(kvm01, kvm02, and kvm03).

The rule of thumb for capacity planning of the infrastructure nodes is to have at least 10% more RAM than planned for all virtual machines on the host combined. This rule is also applied by StackLight LMA, and it will start sending alerts if less than 10% or 8 GB of RAM is free on an infrastructure node.

See also

Control plane virtual machines¶

MCP cluster infrastructure consists of a set of virtual machines that host the services required to manage workloads and respond to API calls.

MCP clusters includes a number of logical roles that define functions of its nodes. Each role can be assigned to a specific set of the control plane virtual machines. This allows to adjust the number of instances of a particular role independently of other roles, providing greater flexibility to the environment architecture.

To ensure high availability and fault tolerance, the control plane of an MCP cluster typically spreads across at least three physical nodes. However, depending on your hardware you may decide to break down the services on a larger number of nodes. The number of virtual instances that must run each service may vary as well.

The reference architecture for Cloud Provider Infrastructure use case uses 9 infrastructure nodes to host the MCP control plane services.

The following table lists the roles of infrastructure logical nodes and their standard code names used throughout the MCP metadata model:

| Server role | Server role codename in metadata model | Description |

|---|---|---|

| Infrastructure node | kvm |

Infrastructure KVM hosts that provide virtualization platform all VCP component |

| Network node | gtw |

Nodes that provide tenant network data plane services. |

| DriveTrain Salt Master node | cfg |

The Salt Master node that is responsible for sending commands to Salt Minion nodes. |

| DriveTrain LCM engine node | cid |

Nodes that run DriveTrain services in containers in Docker Swarm mode cluster. |

| RabbitMQ server node | msg |

Nodes that run the message queue server (RabbitMQ). |

| Database server node | dbs |

Nodes that run the clustered MySQL database (Galera). |

| OpenStack controller node | ctl |

Nodes that run the Virtualized Control Plane service, including the OpenStack API servers and scheduler components. |

| OpenStack compute node | cmp |

Nodes that run the hypervisor service and VM workloads. |

| OpenStack DNS node | dns |

Nodes that run OpenStack DNSaaS service (Designate). |

| OpenStack secrets storage nodes | kmn |

Nodes that run OpenStack Secrets service (Barbican). |

| OpenStack telemetry database nodes | mdb |

Nodes that run the Telemetry monitoring database services. |

| Proxy node | prx |

Nodes that run reverse proxy that exposes OpenStack API, dashboards, and other components externally. |

| Contrail controller nodes | ntw |

Nodes that run the OpenContrail controller services. |

| Contrail analytics nodes | nal |

Nodes that run the OpenContrail analytics services. |

| StackLight LMA log nodes | log |

Nodes that run the StackLight LMA logging and visualization services. |

| StackLight LMA database nodes | mtr |

Nodes that run the StackLight database services. |

| StackLight LMA nodes | mon |

Nodes that run the StackLight LMA monitoring services. |

| Ceph RADOS gateway nodes | rgw |

Nodes that run Ceph RADOS gateway daemon and expose Object Storage API. |

| Ceph Monitor nodes | cmn |

Nodes that run Ceph Monitor service. |

| Ceph OSD nodes | osd |

Nodes that provide storage devices for Ceph cluster. |

Note

In the Cloud Provider reference configuration, Ceph OSDs run on dedicated hardware servers. This reduces operations complexity, isolates the failure domain, and helps avoid resources contention.

See also

Networking¶

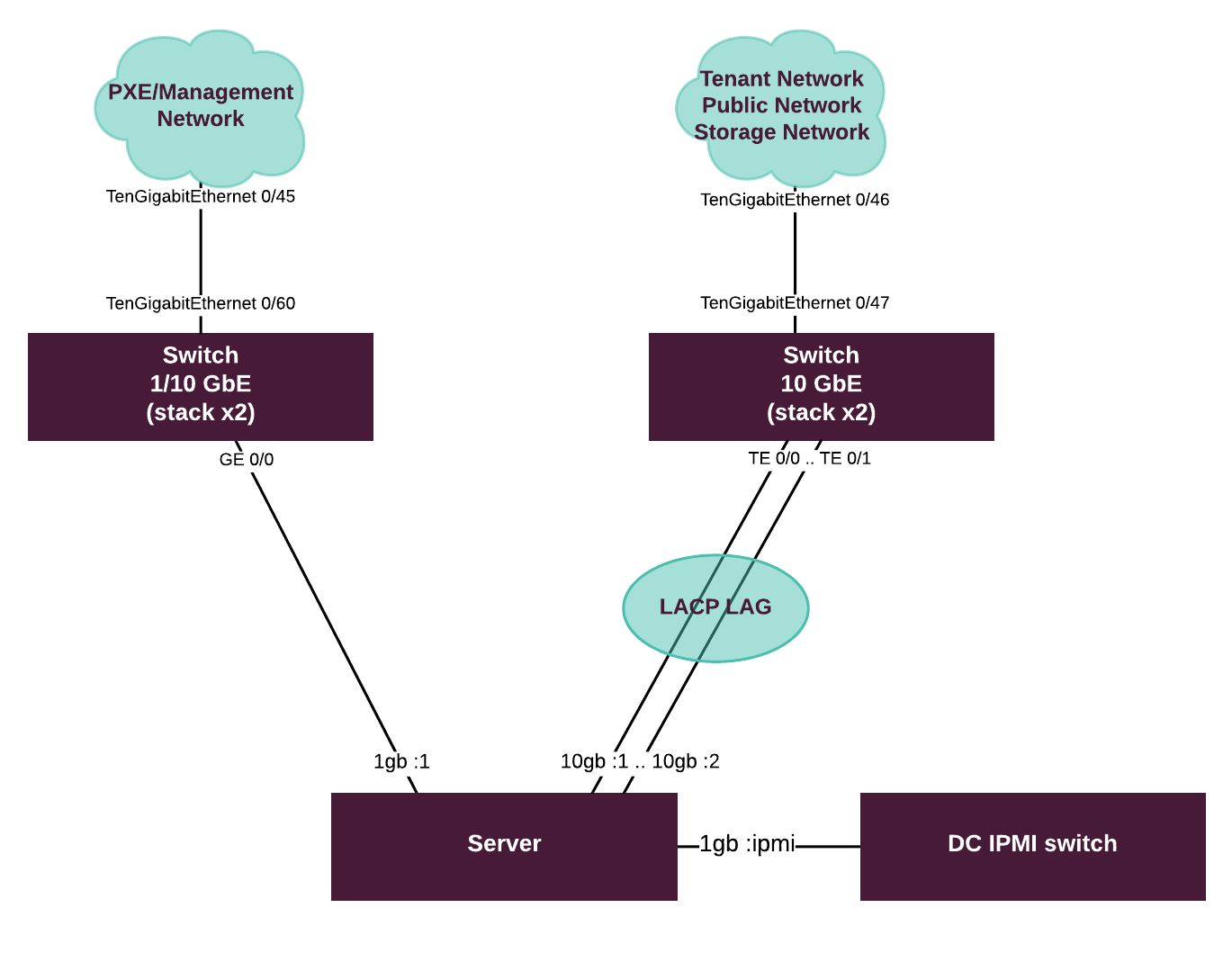

This section describes the key hardware recommendations on server and infrastructure networking, as well as switching fabric capabilities for CPI reference architecture.

Server networking¶

Server machines used in CPI reference architecture have 1 built-in dual port 1 GbE network interface card (NIC), and two additional 1/10 GbE NICs.

The built-in NIC is used for network boot of the servers. Only one interface is typically for PXE boot, the other one is kept unused for redundancy.

The first pair of 1/10 Gbit Ethernet interfaces is used for the management and control plane traffic. These interfaces should be connected to an access switch in 1 or 10 GbE mode.

In CPI referernce architecture, the interfaces of the first NIC are

joined in a bond logical interface in 802.3ad mode.

The second NIC with two interfaces is used for the data plane traffic and

storage traffic. On the operating system level, ports on this 1/10 GbE card

are joined into an LACP bond (Linux bond mode 802.3ad).

Recommended LACP load balancing method for both bond interfaces is

transmission hash policy based on TCP/UDP port numbers (xmit_hash_policy

layer3+4).

This NIC must be connected to an access switch in 10 GbE mode.

Note

The LACP configuration in 802.3ad mode on the server side must

be supported by the corresponding configuration of switching fabric.

See Switching fabric capabilities for details.

Access networking¶

The top of the rack (ToR) switches provide connectivity to servers on physical and data-link levels. They must provide support for LACP and other technologies used on the server side, for example, 802.1q VLAN segmentation. Access layer switches are used in stacked pairs.

In MCP CPI reference architecture validation lab, the following 10 GbE switches were used as the top of the rack (ToR) for PXE, Management Public, Storage, and Tenant networks in MCP:

- Dell Force10 S4810P 48x 10 GbE ports, 4x 40 GbE ports

Use this a reference when planning the hardware bill of materials for your installation of MCP.

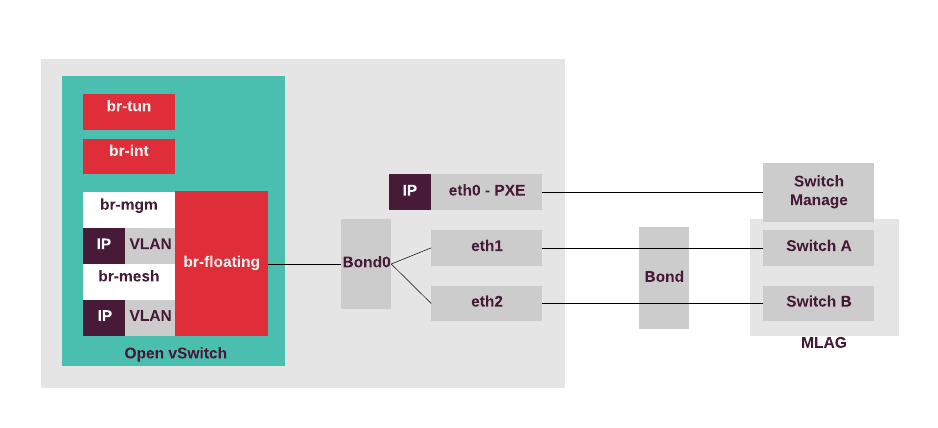

The following diagram illustrates how a server is connected to the switching fabric and how the fabric itself is configured.

Switching fabric capabilities¶

The following table summarizes requirements for the switching fabric capabilities:

| Name of requirement | Description |

|---|---|

| LACP TCP/UDP hash balance mode | Level 4 LACP hash balance mode is recommended to support services that employ TCP sessions. This helps to avoid fragmentation and asymmetry in traffic flows. |

| Multihome server connection support | There are two major options to support multihomed server connections:

|

| LAG/port-channel links | The number of supported LAGs/port-channel links per switch must be twice the number of ports. Take this parameter into account so that you can create the required number of LAGs to accommodate all servers connected to the switch. |

Note

LACP configurations on access and server levels must be compatible with each other. In general, it might require additional design and testing effort in every particular case, depending on the models of switching hardware and the requirements to networking performance.

Multi-cluster architecture¶

Note

The major limitation of the DriveTrain multi-cluster architecture as of MCP Build ID 2019.2.0 is that all clusters managed by a single instance of DriveTrain must have the same version and must be updated simultaneously to the new release of MCP. Some LCM operations on the clusters of earlier versions might not be possible. The only operation supported in this case is update/upgrade operation.

Mirantis Cloud Platform (MCP) can manage multiple disparate clusters using the same DriveTrain and infrastructure node installation. The following clusters are supported:

- OpenStack environments

- Kubernetes clusters support terminated since 2019.2.5

MCP provides the means to manage these sets of clusters using one DriveTrain installation over the L3 network. The cloud operator can execute such operations as applying the global configuration changes to a set of clusters or to an individual cluster, update cluster components, such as OpenStack services, and so on.

Starting with MCP release 2019.2.0, the updated recommendation is to avoid using single model structure to describe multiple clusters. Your cluster is more efficient and scalable if you describe every cluster in a separate model structure, stored in separate Git repository. This way, every cluster has dedicated Salt Master that uses a metadata model specific to that particular cluster. It also makes it easier to manage models using a multi-cluster orchestrator external to Salt Master.

A Jenkins deployment pipeline enables you to specify the URL and credentials of the Salt Master API endpoint that will be called upon the execution of the pipeline. Use the following pipeline parameters to designate the Salt Master service:

SALT_MASTER_URLSALT_MASTER_CREDENTIALS

The targeted Salt Master node then distributes appropriate changes to targeted nodes.

Note

MAAS and StackLight LMA do not support multi-cluster environments. These components are installed per cluster and used only for that cluster.

One of the most common use cases of a multi-cluster architecture is the installation of a staging cluster next to a production one. The staging environment is managed by the same instance of DriveTrain as the production cluster.

The instance of DriveTrain that manages multiple clusters should be installed separately from managed clusters and have its own cluster model, not be included in staging or production environment models.

The following diagram describes a high-level multi-cluster architecture:

Staging environment¶

Mirantis recommends creating a staging environment for any production purposes. Thus, a typical MCP installation should consist of at least two clusters of the same kind (OpenStack or Kubernetes): for staging and production.

Mirantis recommends you install the staging environment first and reuse as much as possible of the Reclass cluster model of the staging environment to deploy production environment(s). Having a staging environment with a control plane topology that differs from the production environment is considered impractical.

Consider installing a staging environment for your production environment if:

- You will run mission-critical workloads in your production environment.

- You plan to install more than one production environment in the same or similar topology.

- You plan to develop custom components or integrate an external service with MCP.

In any case, the staging environment provides you with a testing sandbox to test any changes in configuration or versions of artifacts before you apply it to your production environment.

Note

DriveTrain pipeline jobs allow you to select what Salt Master node should be called during the particular build/run of the pipeline job. This allows you to target staging and production environments separately. See more details in the Multi-cluster architecture section.

OpenStack cluster¶

MCP enables you to deploy one or multiple OpenStack environments to address the needs of your data center.

Coupled together with the deployment automation, native logging, monitoring, and alerting component, as well as with support for OpenContrail and Open vSwitch networking, an MCP OpenStack environment represents a reliable, scalable, and flexible cloud solution that supports numerous types of workloads and applications.

OpenStack cloud capabilities¶

The following capabilities are available to users of the MCP-based OpenStack clouds:

- Upload and manage virtual machine disk images to object storage using the OpenStack Image (Glance) API.

- Assign storage, network, and other infrastructural resources to the virtual machines upfront and at the runtime using the OpenStack Networking (Neutron) API.

- Boot and run virtual machines on a KVM hypervisor under the OpenStack management from the uploaded images, or from block storage through the OpenStack Compute (Nova) API.

- Migrate virtual machines between hypervisors and their groups, with or without interruption of the workload running on the virtual machine, depending on availability of a shared storage for disk images. This ability is provided by the Compute API.

- Connect remote or local block storage resources to the virtual machines using the OpenStack Block Storage (Cinder) API.

- Configure virtual load balancers through the OpenStack Load Balancing-as-a-Service (Octavia) API.

- Configure DNS names for their virtual server instances through the DNS-as-a-Service (Designate) API.

- Access all API endpoints of the OpenStack cloud over connections secured by SSL/TLS protocols.

OpenStack compact cloud¶

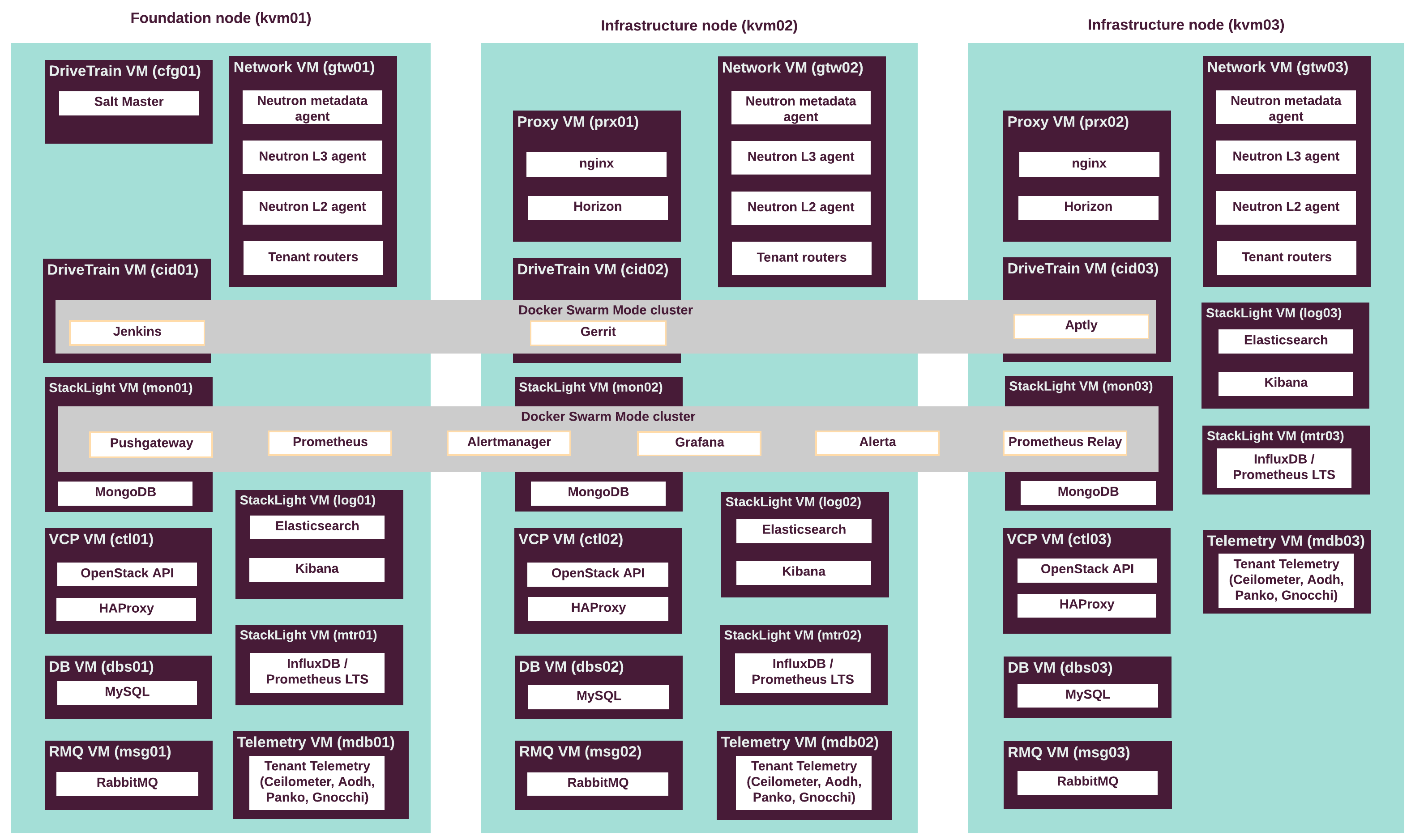

The Compact Cloud is an OpenStack-based reference architecture for MCP. It is designed to provide a generic public cloud user experience to the cloud tenants in terms of available virtual infrastructure capabilities and expectations. It features reduced control plane footprint, at the cost of reduced maximum capacity.

The compact reference architecture is designed to support up to 500 virtual servers or 50 hypervisor hosts. In addition to the desirable number of hypervisors, 3 infrastructure physical servers are required for the control plane. These 3 servers host the OpenStack virtualized control plane (VCP), StackLight services, and virtual Neutron gateway nodes.

Note

Out of the box, the compact reference architecture supports only Neutron OVS networking for OpenStack. DVR is enabled by default.

OpenContrail is not supported out of the box in the compact reference architecture.

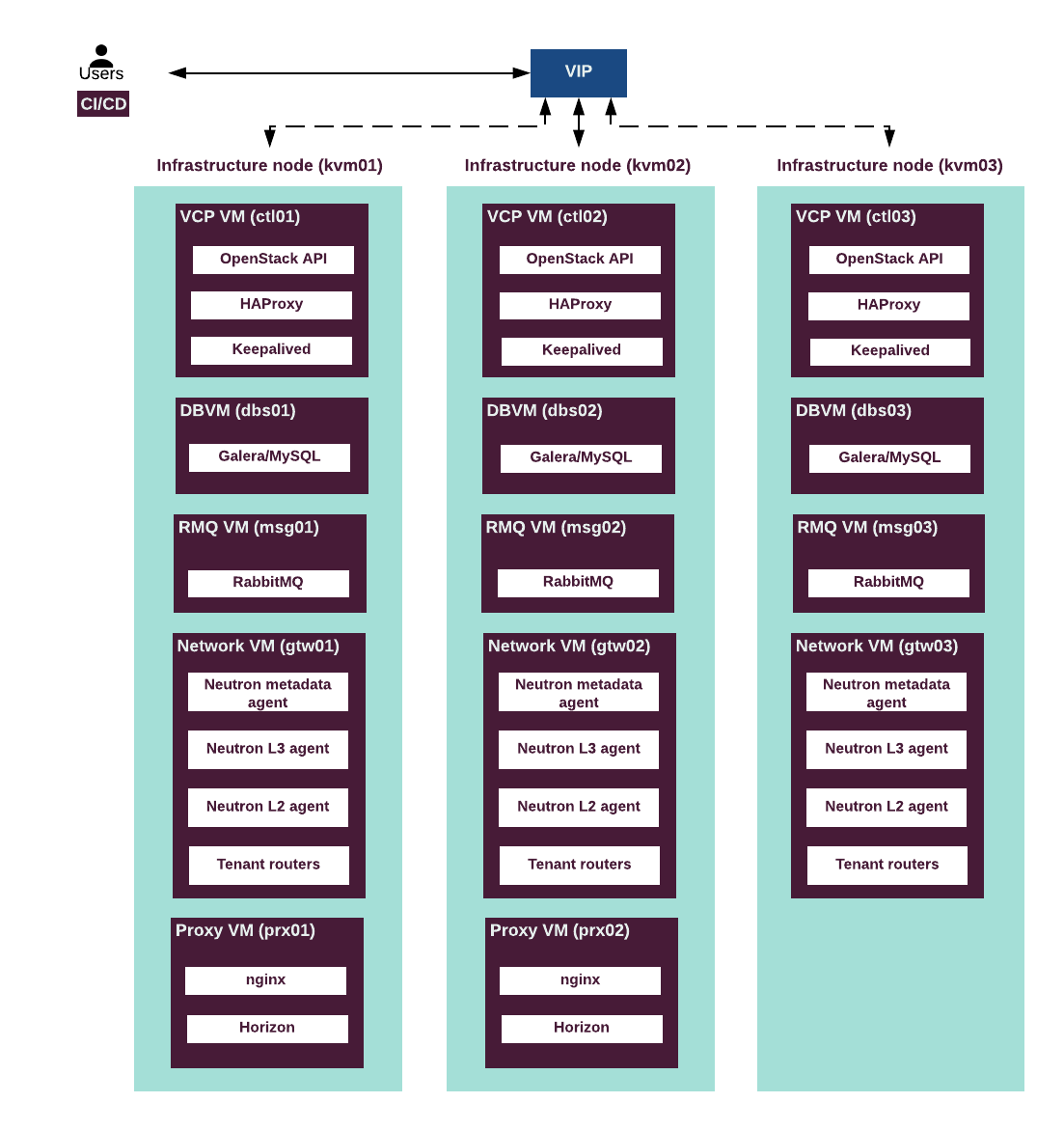

The following diagram describes the distribution of VCP and other services throughout the infrastructure nodes.

The following table describes the hardware nodes in the CPI reference architecture, roles assigned to them, and the number of nodes of each type.

| Node type | Role name | Number of servers |

|---|---|---|

| Infrastructure nodes (VCP) | kvm |

3 |

| OpenStack compute nodes | cmp |

up to 50 |

| Staging infrastructure nodes | kvm |

3 |

| Staging compute nodes | cmp |

2 - 5 |

The following table summarizes the VCP virtual machines mapped to physical servers.

| Virtual server roles | Physical servers | # of instances | CPU vCores per instance | Memory (GB) per instance | Disk space (GB) per instance |

|---|---|---|---|---|---|

ctl |

kvm01

kvm02

kvm03 |

3 | 8 | 32 | 100 |

msg |

kvm01

kvm02

kvm03 |

3 | 8 | 32 | 100 |

dbs |

kvm01

kvm02

kvm03 |

3 | 8 | 16 | 100 |

prx |

kvm02

kvm03 |

2 | 4 | 8 | 50 |

cfg |

kvm01 |

1 | 2 | 8 | 50 |

mon |

kvm01

kvm02

kvm03 |

3 | 4 | 16 | 500 |

mtr |

kvm01

kvm02

kvm03 |

3 | 4 | 32 | 1000 |

log |

kvm01

kvm02

kvm03 |

3 | 4 | 32 | 2000 |

cid |

kvm01

kvm02

kvm03 |

3 | 8 | 32 | 100 |

gtw |

kvm01

kvm02

kvm03 |

3 | 4 | 16 | 50 |

mdb |

kvm01

kvm02

kvm03 |

3 | 4 | 8 | 150 |

| TOTAL | 30 | 166 | 672 | 12450 |

Note

- The

gtwVM should have four separate NICs for the following interfaces:dhcp,primary,tenant, andexternal. It simplifies the host networking as you do not need to pass VLANs to VMs. - The

prxVM should have an additional NIC for the proxy network. - All other nodes should have two NICs for DHCP and primary networks.

See also

- Control plane virtual machines for the details on the functions of nodes of each type.

- Hardware requirements for Cloud Provider Infrastructure for the reference hardware configuration for each type of node.

OpenStack Cloud Provider infrastructure¶

The Cloud Provider infrastructure (CPI) is an OpenStack-based reference architecture for MCP. It is designed to provide a generic public cloud user experience to the cloud tenants in terms of available virtual infrastructure capabilities and expectations.

The reference customer persona for the MCP CPI reference architecture is a Cloud Services Provider, provider of a generic cloud service (public or private).

The CPI reference architecture is designed to support up to 2000 virtual servers or 150 hypervisor hosts. In addition to the desirable number of hypervisors, 9 infrastructure physical servers are required for the control plane. These include 3 servers dedicated to StackLight LMA services and 3 servers for the Neutron gateway nodes. The gateway nodes host virtual routers that provide network connectivity to virtual servers in the cloud.

Note

Out of the box, the CPI reference architecture supports only Neutron OVS networking for OpenStack. DVR is enabled by default.

OpenContrail is not supported out of the box in the CPI reference architecture.

The following table describes the hardware nodes in the CPI reference architecture, roles assigned to them and the number of nodes of each type.

| Node type | Role name | Number of servers |

|---|---|---|

| Infrastructure nodes (VCP) | kvm |

3 |

| Infrastructure nodes (StackLight LMA) | kvm |

3 |

| Tenant network gateway nodes | gtw |

3 |

| OpenStack compute nodes | cmp |

50 - 150 |

| Staging infrastructure nodes | kvm |

3 |

| Staging infrastructure nodes (StackLight LMA) | kvm |

3 |

| Staging tenant network gateway nodes | gtw |

3 |

| Staging compute nodes | cmp |

2 - 5 |

See also

- Control plane virtual machines for the details on the functions of nodes of each type.

- Hardware requirements for Cloud Provider Infrastructure for the reference hardware configuration for each type of node.

- Virtualized control plane layout for the details on layout of control plane services across physical infrastructure servers.

OpenStack large cloud¶

The Large Cloud is an OpenStack-based reference architecture for MCP. It is designed to provide a generic public cloud user experience to the cloud tenants in terms of available virtual infrastructure capabilities and expectations.

The large reference architecture is designed to support up to 5000 virtual servers or 500 hypervisor hosts. In addition to the desirable number of hypervisors, 18 infrastructure physical servers are required for the control plane. This number includes 9 servers that host OpenStack virtualized control plane (VCP), 6 servers dedicated to the StackLight services, and 3 servers for the OpenContrail control plane.

The following diagram describes the distribution of VCP and other services throughout the infrastructure nodes.

The following table describes the hardware nodes in the CPI reference architecture, roles assigned to them, and the number of nodes of each type.

| Node type | Role name | Number of servers |

|---|---|---|

| Infrastructure nodes (VCP) | kvm |

9 |

| Infrastructure nodes (OpenContrail) | kvm |

3 |

| Monitoring nodes (StackLight LMA) | mon |

3 |

| Infrastructure nodes (StackLight LMA) | kvm |

3 |

| OpenStack compute nodes | cmp |

200 - 500 |

| Staging infrastructure nodes | kvm |

18 |

| Staging OpenStack compute nodes | cmp |

2 - 5 |

The following table summarizes the VCP virtual machines mapped to physical servers.

| Virtual server roles | Physical servers | # of instances | CPU vCores per instance | Memory (GB) per instance | Disk space (GB) per instance |

|---|---|---|---|---|---|

ctl |

kvm02 kvm03 kvm04 kvm05 kvm06 |

5 | 24 | 128 | 100 |

dbs |

kvm04 kvm05 kvm06 |

3 | 24 | 64 | 1000 |

msg |

kvm07 kvm08 kvm09 |

3 | 32 | 196 | 100 |

prx |

kvm07 kvm08 |

2 | 8 | 32 | 100 |

mdb |

kvm07 kvm08 kvm09 |

3 | 8 | 32 | 150 |

| TOTAL | 16 | 328 | 1580 | 4450 |

| Virtual server roles | Physical servers | # of instances | CPU vCores per instance | Memory (GB) per instance | Disk space (GB) per instance |

|---|---|---|---|---|---|

cfg |

kvm01 |

1 | 8 | 32 | 50 |

cid |

kvm01 kvm02 kvm03 |

3 | 4 | 32 | 500 |

| TOTAL | 4 | 20 | 128 | 1550 |

| Virtual server roles | Physical servers | # of instances | CPU vCores per instance | Memory (GB) per instance | Disk space (GB) per instance |

|---|---|---|---|---|---|

ntw |

kvm10 kvm11 kvm12 |

3 | 16 | 64 | 100 |

nal |

kvm10 kvm11 kvm12 |

3 | 24 | 128 | 2000 |

| TOTAL | 6 | 120 | 576 | 6300 |

| Virtual server roles | Physical servers | # of instances | CPU vCores per instance | Memory (GB) per instance | Disk space (GB) per instance |

|---|---|---|---|---|---|

cmn |

kvm01 kvm02 kvm03 |

3 | 16 | 32 | 100 |

rgw |

kvm01 kvm02 kvm03 |

3 | 16 | 32 | 50 |

| TOTAL | 6 | 96 | 192 | 450 |

| Virtual server roles | Physical servers | # of instances | CPU vCores per instance | Memory (GB) per instance | Disk space (GB) per instance |

|---|---|---|---|---|---|

mon |

kvm16 kvm17 kvm18 |

3 | 24 | 256 | 1000 [0] |

mtr |

kvm13 kvm14 kvm15 |

3 | 16 | 196 | 3000 [0] |

log |

kvm13 kvm14 kvm15 |

3 | 16 | 64 [1] | 5000 [2] |

| TOTAL | 9 | 192 | 1548 | 27000 |

| [0] | (1, 2) The required disk space per instance depends on the Prometheus retention

policy, which by default is 5 days for mon nodes and 180 days for

mtr nodes. |

| [1] | The Elasticsearch heap size must not exceed 32 GB. For details, see Limiting memory usage. To limit the heap size, see MCP Operations Guide: Configure Elasticsearch. |

| [2] | The required disk space per instance depends on the Elasticsearch retention policy, which is 31 days by default. |

Note

- The

prxVM should have an additional NIC for the Proxy network. - All other nodes should have two NICs for DHCP and Primary networks.

See also

- Control plane virtual machines for the details on the functions of nodes of each type

- Hardware requirements for Cloud Provider Infrastructure for the reference hardware configuration for each type of node.

Virtualized control plane¶

The MCP virtualized control plane (VCP) provides all services and components required to manage the virual infrastructure of the cloud platform.

Virtualized control plane overview¶

Virtualized control plane (VCP) consists of the services required to manage workloads and respond to API calls. VCP is the heart and brain of your OpenStack deployment that controls all logic responsible for managing OpenStack-based virtual infrastructure and provide the OpenStack cloud capabilities.

For the sake of clarity, we split the OpenStack VCP services into the core and extension services.

The OpenStack VCP core services are dealing with the virtual infrastructure

resources, sush as virtual machines, images, networks, and

so on. From the layout standpoint, one instance of each

core service runs in the combined control plane virtual node called

ctl in terms of the metadata model.

The OpenStack VCP extension services enable management of the resources consumed by the workloads indrectly, such as DNS names, virtual load balancers, and so on. Unlike the core services, the extensions typically run on the dedicated virtual nodes. See the Control plane virtual machines for details.

Note

Core and extension services are considered mandatory in the Mirantis OpenStack reference architecture.

OpenStack VCP Core services¶

The following table summarizes the core capabilities provided by MCP Cloud Provider Infrastructure as APIs, services that provide specific parts of that API, the default and optional backends used by these services, where applicable.

| API type | Capabilities provided | Service | Default back-end | Optional back-ends |

|---|---|---|---|---|

| Compute | Boot and run virtual machines | Nova | Linux KVM | N/A |

| Identity | Control ownership, access and quota for the virtual resources | Keystone | N/A | N/A |

| Image | Create and manage VM disk images and snapshots | Glance | Ceph | GlusterFS |

| Networking | Create, manage and connect virtual overlay networks for the VMs | Neutron | OpenVSwitch | OpenContrail |

| Orchestration | Create and manage templates and instances of virtual infrastructures | Heat | N/A | N/A |

| Dashboard | Access dashboard UI for managing the virtual resources | Horizon | N/A | N/A |

| Block storage | Create, manage and connect block storage resources to the VMs | Cinder | Ceph | LVM |

| Object storage | Create, download and manage storage objects | Ceph RADOS Gateway | Ceph | N/A |

OpenStack VCP extension services¶

The following table summarizes the extension capabilities provided by MCP Cloud Provider Infrastructure as APIs, services that provide these APIs, the default and optional backends used by these services, where applicable.

| API Type | Capabilities provided | Service | Default back-end | Optional back-ends |

|---|---|---|---|---|

| Load Balancer | Create, configure and manage virtual load balancers for tenant workloads | Octavia | HAProxy | N/A |

| Domain Name Service | Create and manage DNS names for tenant workloads | Designate | PowerDNS | N/A |

| Secrets management | Store and manage certificates and other types of secrets for tenant workloads | Barbican | DogTag | N/A |

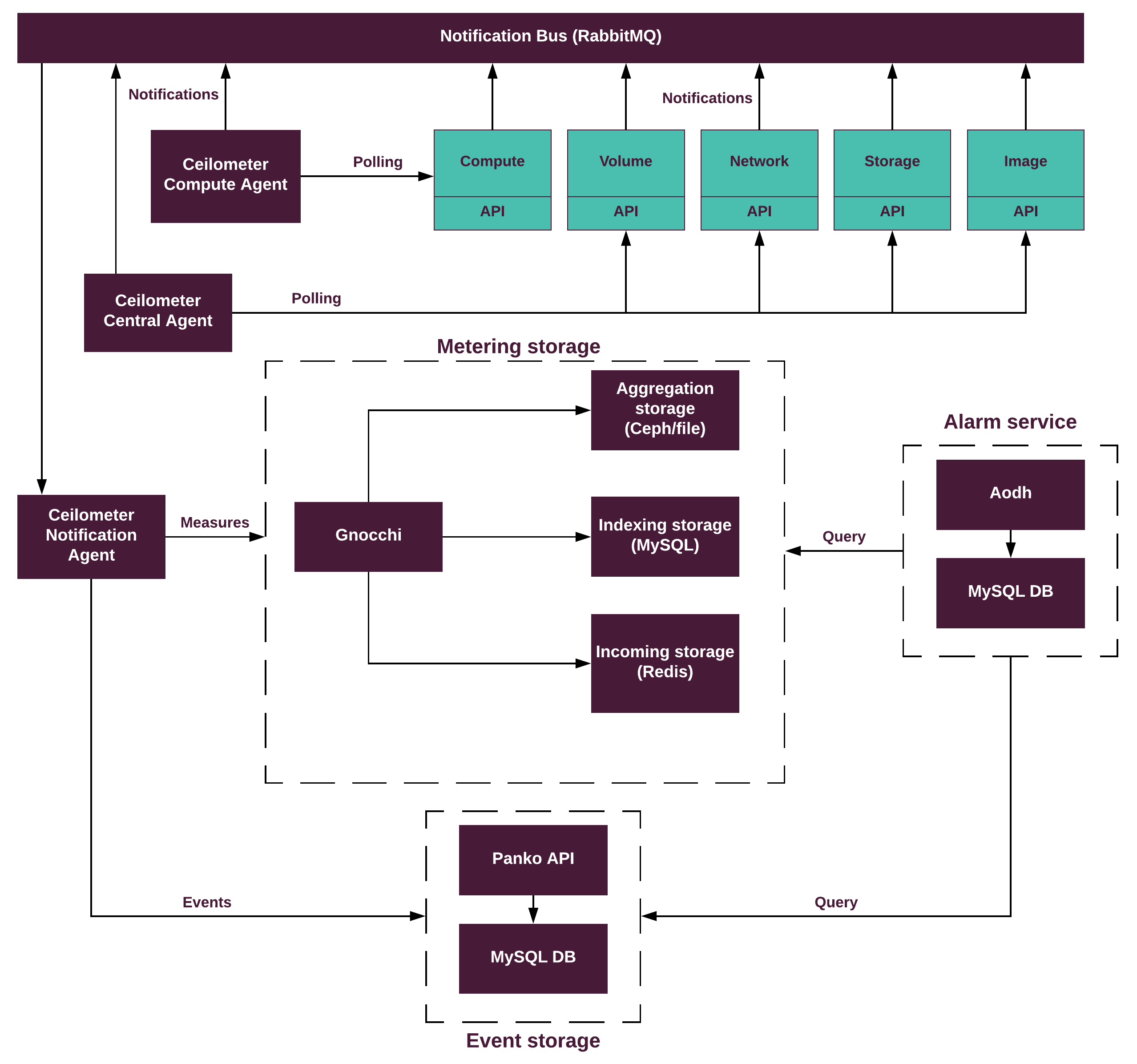

| Telemetry | Collect, store, and expose usage and utilization data for the virtual resources |

|

N/A | N/A |

OpenStack VCP extra services¶

Caution

Manila deprecation notice

In the MCP 2019.2.7 update, the OpenStack Manila component is being considered for deprecation. The corresponding capabilities are still available, although not further enhanced.

Starting with the 2019.2.11 maintenance update, the OpenStack Manila component will no longer be supported by Mirantis. For those existing customers who have the Manila functionality explicitly included in the scope of their contracts, Mirantis will continue to fulfill the corresponding support obligations.

The extra services provide additional capabilities supported by MCP, but not included in the reference architecture. See the supported versions of these components in Release Notes: Components Versions.

The following table summarizes the extra capabilities provided by MCP OpenStack platform as APIs, services that provide these APIs, the default and optional backends used by these services, where applicable.

| API type | Capabilities provided | Service | Default backend | Optional backends |

|---|---|---|---|---|

| Shared Storage | Create, configure, and manage shared storage folders for tenant workloads | Manila | NFS | N/A |

| Bare metal | Provision bare-metal servers as virtual instances through OpenStack API | Ironic [0] | N/A | N/A |

| [0] | Starting from the 2019.2.6 maintenance update, Ironic is officially supported and integrated into MCP. Before the 2019.2.6 maintenance update, Ironic is available as technical preview and can be used for testing and evaluation purposes only. |

Manila storage networking planning¶

Caution

Manila deprecation notice

In the MCP 2019.2.7 update, the OpenStack Manila component is being considered for deprecation. The corresponding capabilities are still available, although not further enhanced.

Starting with the 2019.2.11 maintenance update, the OpenStack Manila component will no longer be supported by Mirantis. For those existing customers who have the Manila functionality explicitly included in the scope of their contracts, Mirantis will continue to fulfill the corresponding support obligations.

Since OpenStack Manila is a share-as-a-service component, it requires network to be configured properly to reach shares from inside virtual machines. The networking approach heavily varies depending on an environment architecture and Manila backend. Some scenarios use the L2 connectivity with network interfaces for share servers created on a tenant-owned subnet. Therefore, virtual machines have direct connectivity to the storage. Such configuration requires a driver that can create share servers for each tenant.

Note

For the list of share drivers and their capabilities supported in OpenStack, see Manila share features support mapping in the official OpenStack documentation.

In some cases, the Manila share server runs on a standalone node that requires a separate network. Such approach requires the L3 connectivity through a router between the floating network and the Manila share server as illustrated on the diagram.

Note

The diagram depicts a common share server configuration.

Ironic planning¶

MCP enables you to provision the OpenStack environment workloads (instances) to bare metal servers using Ironic. Ironic provisions workloads to bare metal servers through the Compute service (Nova) in almost the same way the virtual machines are provisioned.

Note

Starting from the 2019.2.6 maintenance update, Ironic is officially supported and integrated into MCP. Before the 2019.2.6 maintenance update, Ironic is available as technical preview and can be used for testing and evaluation purposes only.

Ironic applies to a number of use cases that include:

- An OpenStack environment contains the workloads that can not be run on virtual machines due to, for example, legacy software installed.

- An OpenStack environment contains the workloads that require high performance as well as no virtualization overhead.

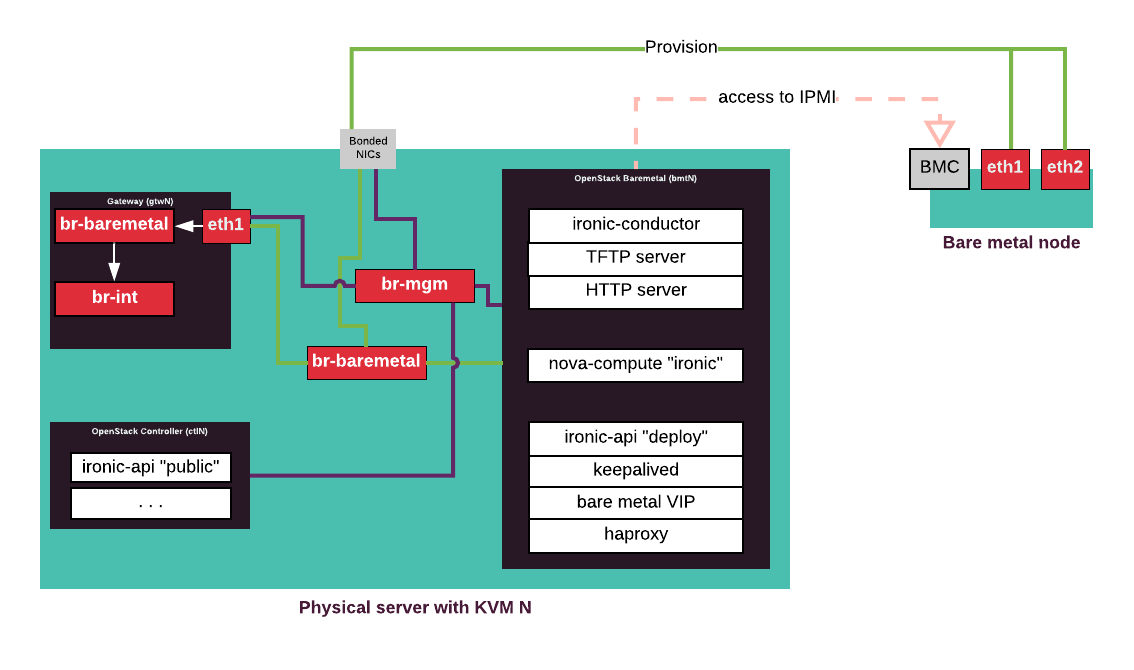

Ironic components¶

Ironic consists of two main components: ironic-api and

ironic-conductor. Additionally, it requires several auxiliary services for

ironic-conductor including TFTP and HTTP servers. To enable the Compute

service users to provision their workloads on bare metal servers, the

nova-compute service is configured to use the ironic virt-driver.

| Component | Description |

|---|---|

ironic-conductor |

Performs actual node provisioning. Due to security and performance considerations, it is deployed

on separate

bmt* VMs on MCP KVM nodes along with its auxiliary

services. |

ironic-api |

Due to security considerations, two pools of

|

nova-compute |

Separate pool of nova-compute services with ironic virt-driver

deploys on bmt* nodes. |

MCP Ironic supported features and known limitations¶

This section lists the Ironic drivers and features with known limitations that MCP DriveTrain supports. The driver or feature support in this section stands for the ability of MCP DriveTrain to deploy and configure the features by means of the Ironic Salt formula through the cluster model.

Supported Ironic drivers in MCP include:

- The

ipmihardware type with theiscsideploy interface (thepxe_ipmitoolclassic driver) - The

ilohardware type with theiscsideploy interface (thepxe_iloclassic driver)

Note

Ironic provides an ability to configure both classic drivers and new drivers. The latter drivers are also known as hardware types.

MCP DriveTrain does not support any other than listed above classic drivers and hardware types with other interfaces.

The following table includes the Ironic features and specifies the support status for these features in MCP. The status of any of the items included in this table may change in the future MCP release versions.

| Ironic feature | Support status for MCP Ironic |

|---|---|

| The integration of Cinder that includes the functionality to boot from an iSCSI back-end volume. | Supported |

| The PXE and iPXE boot | Supported, iPXE by default |

| The Ironic integration with the Fluentd logging | Supported since Queens |

The configuration of CORS for ironic-api |

Supported since Queens |

| Multitenancy support | Supported |

| The automated cleanup enablement and setting priorities for the default cleaning steps | Supported |

| The ability to fine-tune the Ironic conductor performance | Not supported |

| The configuration of the console support for the bare metal nodes | Supported since the MCP 2019.2.4 update, shellinabox by default |

The ironic-inspector and Ironic integration with

ironic-inspector |

Not supported |

| The configuration of a custom path to the iPXE boot script | Not supported |

| Compatibility with OpenContrail | Not supported |

MCP does not provide pre-built images for RAMDisk deployment to be used by Ironic during the node provisioning, as well as end-user images to be deployed on bare metal nodes due to high dependency from the hardware specifics.

Virtualized control plane layout¶

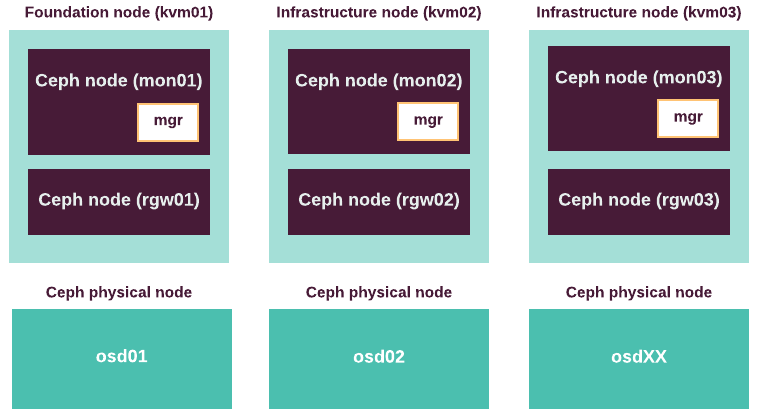

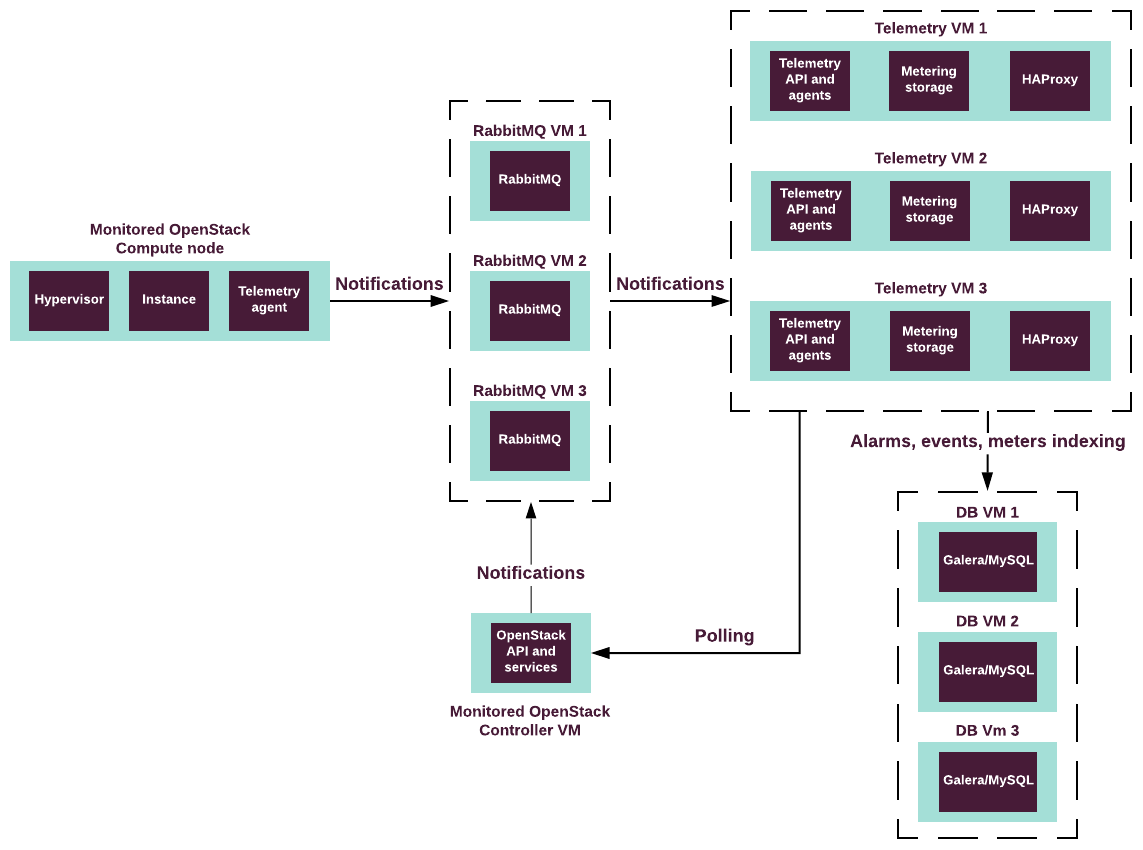

The following diagram describes the distribution of OpenStack and other services throughout the infrastructure nodes. For detailed description of the services, see Virtualized control plane.

The following table summarizes the VCP virtual machines mapped to physical servers. See Control plane virtual machines for details.

| Virtual server roles | Physical servers | # of instances | CPU vCores per instance | Memory (GB) per instance | Disk space (GB) per instance |

|---|---|---|---|---|---|

ctl |

kvm01 kvm02 kvm03 |

3 | 16 | 64 | 100 |

kmn |

kvm01 kvm02 kvm03 |

3 | 4 | 8 | 50 |

dns |

kvm02 kvm03 |

2 | 2 | 4 | 50 |

dbs |

kvm01 kvm02 kvm03 |

3 | 8 | 32 | 100 |

msg |

kvm01 kvm02 kvm03 |

3 | 16 | 64 | 100 |

prx |

kvm02 kvm03 |

2 | 4 | 16 | 300 |

cfg |

kvm01 |

1 | 8 | 16 | 50 |

cid |

kvm01 kvm02 kvm03 |

3 | 8 | 32 | 200 |

cmn |

kvm01 kvm02 kvm03 |

3 | 4 | 8 | 50 |

rgw |

kvm01 kvm02 kvm03 |

3 | 4 | 16 | 50 |

apt |

kvm01 |

1 | 4 | 16 | 500 |

mtr |

kvm04 kvm05 kvm06 |

3 | 12 | 96 | 1500 |

log |

kvm04 kvm05 kvm06 |

3 | 16 | 48 | 3000 |

mon |

kvm04 kvm05 kvm06 |

3 | 12 | 64 | 1000 |

mdb |

kvm04 kvm05 kvm06 |

3 | 8 | 32 | 500 |

High availability in OpenStack¶

The Virtual Control Plane (VCP) services in the MCP OpenStack are highly available and work in active/active or active/standby modes to enable service continuity after a single node failure. An OpenStack environment contains both stateless and stateful services. Therefore, the MCP OpenStack handles them in a different way to achieve high availability (HA).

- OpenStack microservices

To make the OpenStack stateless services, such as

nova-api,nova-conductor,glance-api,keystone-api,neutron-api, andnova-schedulersustainable against a single node failure, HAProxy load balancer (two or more instances) is used for failover. MCP runs multiple instances of each service distributed between physical machines to separate the failure domains.

- API availability

MCP OpenStack ensures HA for the stateless API microservices in an active/active configuration using the HAProxy with Keepalived. HAProxy provides access to the OpenStack API endpoint by redirecting the requests to active instances of an OpenStack service in a round-robin fashion. It sends API traffic to the available backends and prevents the traffic from going to the unavailable nodes. Keepalived daemon provides VIP failover for the HAProxy server.

Note

Optionally, you can manually configure SSL termination on the HAProxy, so that the traffic to OpenStack services is mirrored to go for inspection in a security domain.

- Database availability

In MCP OpenStack, MySQL database server runs in cluster with synchronous data replication between the instances of MySQL server. The cluster is managed by Galera. Galera creates and runs MySQL cluster of three instances of the database server. All servers in the cluster are active. HAProxy redirects all writing requests to just one server at any time and handles failovers.

- Message bus availability

RabbitMQ server provides messaging bus for OpenStack services. In MCP reference configuration, RabbitMQ is configured with

ha-modepolicy to run a cluster in active/active mode. Notification queues are mirrored across the cluster. Messaging queues are not mirrored by default, but are accessible from any node in the cluster.

- OpenStack dashboard

Two instances of proxy node are deployed. They run OpenStack dashboard (Horizon), and Nginx based reverse proxy that exposes OpenStack API endpoints.

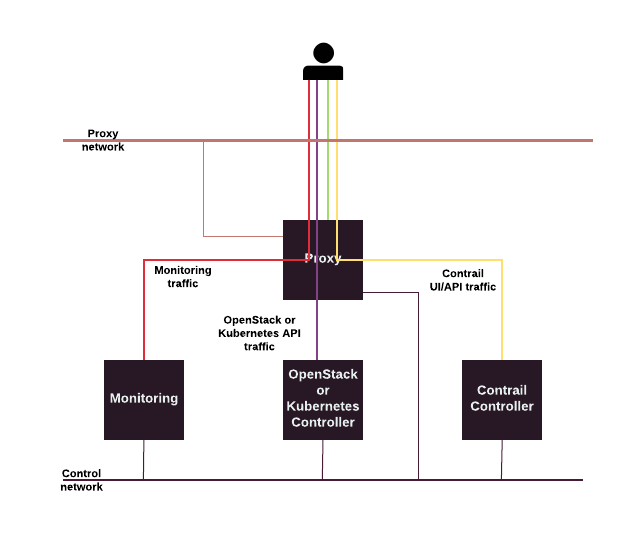

The following diagram describes the control flow in an HA OpenStack environment:

Secure OpenStack API¶

The Transport Layer Security (TLS) cryptographic protocol enables you to provide a secured encrypted communication for the client-server OpenStack applications as well as for the RabbitMQ and MySQL backends of an MCP OpenStack environment. TLS protects the communications in your MCP cluster from trespassing and eavesdropping.

Note

In the Mirantis Reference Architecture, all traffic between OpenStack API endpoints and clients is encrypted by default, including both external and internal endpoints.

See also

- MCP Deployment Guide: Enable TLS support

- Introduction to TLS and SSL in the OpenStack Security Guide

Compute nodes planning¶

Determining the appropriate hardware for the compute nodes greatly depends on the workloads, number of virtual machines, and types of applications that you plan to run on the nodes. Typically, the engagement of a Mirantis cloud architect is required to properly plan the capacity for your cloud.

That said, it is essential to understand your cloud capacity utilization tendencies and patterns to plan for expansion accordingly. On one hand, planning expansion too aggressively may result in underutilization. Underestimating expansion trends, on the other hand, threatens oversubscription and eventual performance degradation.

Mirantis provides a spreadsheet with the compute node calculation. You need to fill the following parameters in the spreadsheet:

| Parameter | Description |

|---|---|

| Overhead components | Describe components that put additional overhead on system resources, such as DVR/vRouter and Hypervisor. The parameters specified in the spreadsheet represent standard workloads. The DVR / vRouter parameters represent a Compute node with 2 x 10 Gbps NICs. If you use a larger capacity network interfaces, such as 40 Gbps, this number may increase. For most deployments, the hypervisor overhead parameters equal represented numbers. |

| HW Components | Compute profile represents the hardware specification that you require for the specified number of virtual machines and the selected flavor. The adjusted version of the compute profile represents the hardware specification after correction to overhead components. |

| Oversubscription ratio | Defines the amount of virtual resources to allocate for a single physical resource entity. Oversubscription ratio highly depends on the workloads that you plan to run in your cloud. For example, Mirantis recommends to allocate 8 vCPU per 1 hyper-thread CPU, as well as 1:1 ratio for both memory and disk for standard workloads, such as web application development environments. If you plan to run higher CPU utilization workloads, you may need to decrease CPU ratio down to 1:1. |

| Flavor definitions | Defines a virtual machine flavor that you plan to use in your deployment. The flavor depends on the workloads that you plan to run. In the spreadsheet, the OpenStack medium virtual machine is provided as an example. |

| Flavor totals | Defines the final hardware requirements based on specified parameters. Depending on the number and the virtual machine flavor, you get the number of compute nodes (numHosts) with the hardware characteristics. |

| Resource utilization per compute node | The resource utilization parameter defines the percentage of memory, processing, and storage resource utilization on each compute node. Mirantis recommends that vCPU, vMEM, and vDISK are utilized at least at 50 %, so that your compute nodes are properly balanced. If your calculation results in less than 50 % utilization, adjust the numbers to use the resources more efficiently. |

See also

Download Compute nodes calculation

OpenStack network architecture¶

OpenStack Networking manages virtual networks that connect virtual server instances to each other and to external network. Also, OpenStack networking handles network configuration of instances, virtual firewalls, and floating IP addresses.

Tenant virtual networks are overlay networks on top of physical and logical infrastructure of a data center network. Both the overlay network creation and host network configuration depend on the backend that you use.

MCP supports the following network technologies as back ends for OpenStack Networking:

- Neutron Open vSwitch (OVS)

- OpenContrail

Selecting a network technology¶

Neutron Open vSwitch networking is the default for OpenStack networking in the MCP CPI reference architecture. The second supported option is OpenContrail SDN networking.

The following table compares the two technologies and defines use cases for both.

| Neutron OVS | OpenContrail |

|---|---|

Neutron with Open vSwitch is the default networking for OpenStack. It is supported by diverse community of the OpenStack developers. Neutron provides basic networking including the IP addresses, IP routing, security groups, and floating IP addresses management. It also provides virtual load balancer capabilities through LBaaS API (Octavia) to the cloud users. Neutron uses VXLAN encapsulation for tenant overlay networks. Neutron supports distributed virtual router (DVR) for east-west and network node for north-bound traffic originating from tenant virtual servers. North-bound traffic reaches its destinations using address translation (SNAT) on the network (gateway) nodes. |

OpenContrail is an open source SDN product backed by Juniper and supported by community of developers. OpenContrail provides both basic networking, such as IP addresses management, security groups, floating IP addresses, and advanced networking functions, including DPDK network virtualization and SR-IOV. OpenContrail replaces standard Linux networking stack with its

own The main use case for OpenContrail is advanced overlay network configurations that heavily rely on Service Function Chaining and SR-IOV. It is generally recommended to cloud operators that run Telco workloads in their clouds. |

See also

Types of networks¶

The following diagram provides an overview of the underlay networks in an OpenStack environment:

An OpenStack environment typically uses the following types of networks:

Underlay networks for OpenStack that are required to build network infrastructure and related components. See details on the physical network infrastructure for CPI reference architecture in Networking.

PXE / Management

This network is used by SaltStack and MAAS to serve deployment and provisioning traffic, as well as to communicate with nodes after deployment. After deploying an OpenStack environment, this network runs low traffic. Therefore, a dedicated 1 Gbit network interface is sufficient. The size of the network also depends on the number of hosts managed by MAAS and SaltStack.

Public

Virtual machines access the Internet through Public network. Public network provides connectivity to the globally routed address space for VMs. In addition, Public network provides a neighboring address range for floating IPs that are assigned to individual VM instances by the project administrator.

Proxy

This network is used for network traffic created by Horizon and for OpenStack API access. The proxy network requires routing. Typically, two proxy nodes with Keepalived VIPs are present in this network, therefore, the /29 network is sufficient. In some use cases, you can use Proxy network as Public network.

Control

This network is used for internal communication between the components of the OpenStack environment. All nodes are connected to this network including the VCP virtual machines and KVM nodes. OpenStack control services communicate through the control network. This network requires routing.

Data

This network is used to build a network overlay. All tenant networks, including floating IP, fixed with RT, and private networks, are carried over this underlay network. VxLAN encapsulation is used by default in CPI reference architecture.

Data network does not require external routing by default. Routing for tenant networks is handled by Neutron gateway nodes. Routing for Data underlay network may be required if you want to access your workloads from corporate network directly (not via Floating IP addresses). In this case, external routers are required that are not managed by MCP.

Storage access (optional)

This network is used to access Ceph storage servers (OSD nodes). The network does not need to be accessible from outside the cloud environment. However, Mirantis recommends that you reserve a dedicated and redundant 10 Gbit network connection to ensure low latency and fast access to the block storage volumes. You can configure this network with routing for L3 connectivity or without routing. If you set this network without routing, you must ensure additional L2 connectivity to nodes that use Ceph.

Storage replication (optional)

This network is used for copying data between OSD nodes in a Ceph cluster. Does not require access from outside the OpenStack environment. However, Mirantis recommends reserving a dedicated and redundant 10 Gbit network connection to accommodation high replication traffic. Use routing only if rack-level L2 boundary is required or if you want to configure smaller broadcast domains (subnets).

Virtual networks inside OpenStack

Virtual networks inside OpenStack include virtual public and internal networks. Virtual public network connects to the underlay public network. Virtual internal networks exist within the underlay data network. Typically, you need multiple virtual networks of both types to address the requirements of your workloads.

In the reference architecture for Cloud Provider Infrastructure, isolated virtual/physical networks must be configured for PXE and Proxy traffic. For PXE network, 1 GbE interface is required. For Proxy, Data and Control network, CPI uses 10 GbE interfaces bonded together and split into VLANs, one VLAN per network. For Storage networks CPI requires a dedicated pair of 10 GbE interfaces bonded together for increased performance and resiliency. Storage access and replication networks may be divided into separate VLANs on top of the dedicated bond interface.

See also

MCP external endpoints¶

MCP exposes a number of services through endpoints terminated on a reverse

proxy server. MCP uses nginx as a reverse proxy server. The server

listens on a virtual IP address from Public network (see

types-networks).

The following table summarizes the endpoints and port numbers used by services in MCP CPI referenece architecture.

| Component | Service | Endpoint | Port |

|---|---|---|---|

| OpenStack | Dashboard | https | 443 |

| Keystone | https | 5000 | |

| Neutron | https | 9696 | |

| Nova | https | 8774 | |

| Nova Placement | https | 8778 | |

| Ceilometer | https | 8777 | |

| Cinder | https | 8776 | |

| Glance | https | 9292 | |

| Heat CFN | https | 8000 | |

| Heat CloudWatch | https | 8003 | |

| Heat | https | 8004 | |

| NoVNC | https | 6080 | |

| Octavia | https | 9876 | |

| Barbican | https | 9311 | |

| Designate | https | 9001 | |

| Aodh | https | 8042 | |

| Gnocci | https | 8041 | |

| Panko | https | 8977 | |

| Dashboard [1] | http | 80 | |

| Ceph | RadosGW | https | 8080 |

| StackLight | Alerta | https | 15017 |

| Grafana | https | 8084 | |

| Kibana | https | 5601 | |

| Alertmanager | https | 15011 | |

| Prometheus | https | 15010 | |

| DriveTrain | Gerrit | https | 8070 |

| jenkins | https | 8081 |

| [1] | Redirected to HTTPS, port 443. |

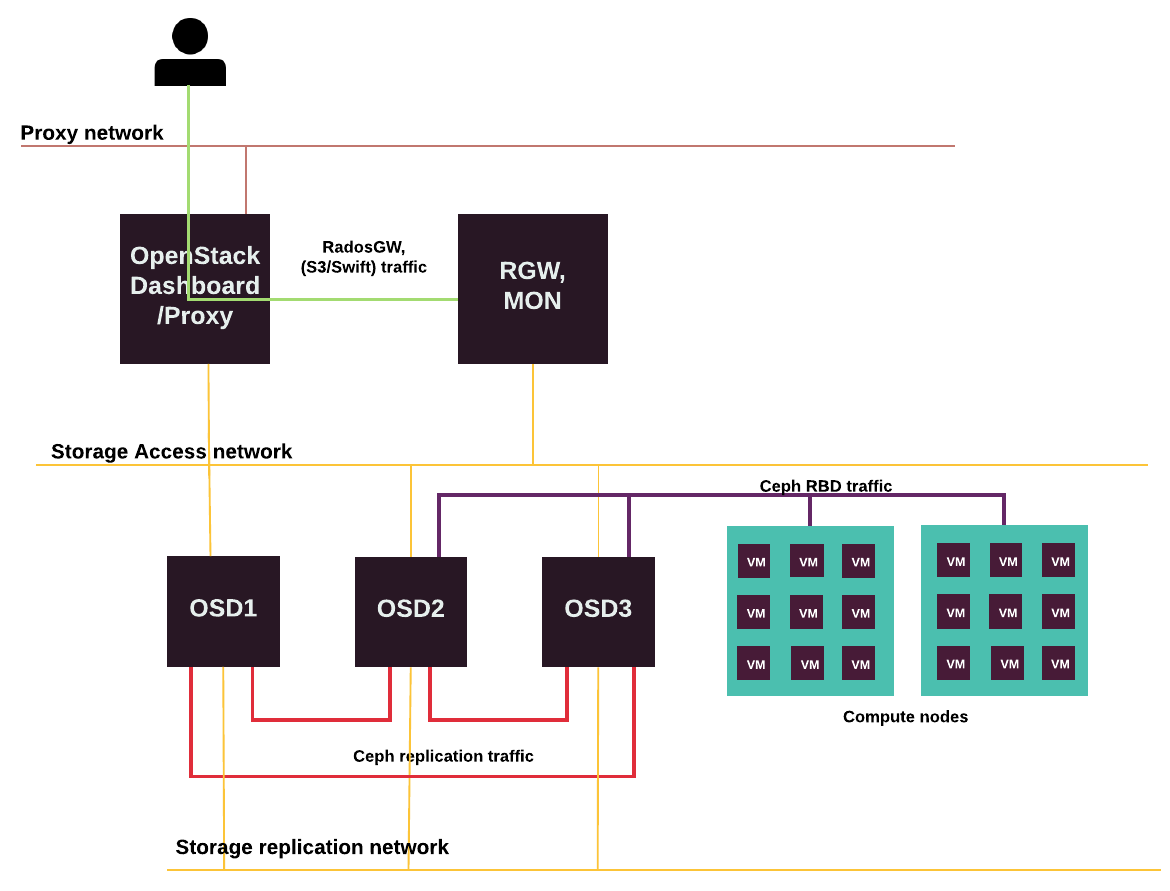

Storage traffic¶

Storage traffic flows through dedicated storage networks that Mirantis recommends to configure if you use Ceph.

The following diagram displays the storage traffic flow for Ceph RBD, replication, and RadosGW.

Neutron OVS networking¶

OpenStack Networking with OVS is used by default and is the only supported networking mode in the reference architecture for the CPI use case.

Neutron configuration for the CPI use case enables distributed virtual router (DVR) by default. Overlay networks are created using the VxLAN encapsulation. Access to the external networks is provided by source network address translation (SNAT) working on dedicated network nodes or gateway nodes.

Neutron supports external access to the virtual server instances in the cloud through the floating IP assigned to the virtual servers through the networking API.

Neutron OVS requires setting up of a specific network node, which is sometimes called gateway, that handles the routing across the internal networks, as well as the outbound routing.

Limitations¶

Due to a limitation in the OpenStack Networking service, the Distributed Virtual Router (DVR) in combination with L3 HA is not stable enough and affects the SNAT traffic. Therefore, Mirantis does not recommend such a configuration for production deployments.

Instead of L3 HA, the allow_automatic_l3agent_failover option is enabled

by default in neutron.conf for the Neutron OVS with DVR deployments in MCP.

This option enables automatic rescheduling of a failed L3 agent routers

to an online L3 agent on a different network node.

Node configuration¶

For all Neutron OVS use cases, configure four VLANs and four IP addresses in separate networks on all compute and network nodes. You will also need two VLAN ranges for tenant traffic and external VLAN traffic.

The following table describes a node network configuration for a Neutron OVS-based MCP OpenStack cluster:

| Interface/Bridge | Description | Interfaces | IP |

|---|---|---|---|

eth0 |

PXE Boot traffic | - |

Dynamic |

br-mgm |

OpenStack and other management traffic | bond0.<management_VLAN> |

Static |

br-prv |

Tenant VLAN traffic | bond0 |

Static |

br-mesh |

Tenant overlay traffic (VXLAN) | bond0.<tenant_VLAN> |

Static |

br-floating |

External VLAN traffic | bond0.<external_VLAN> (or VLAN range) |

No IP |

br-stor |

Storage traffic | bond0.<storage_VLAN> |

No IP |

bond0 |

Main bond | eth1, eth2 | No IP |

Depending on hardware capabilities, you can separate bonds for the control plane and data plane.

Depending on your use case, configure your network nodes to use the VLAN or VXLAN-based tenant network.

Network node configuration for VXLAN tenant networks¶

In the VXLAN-based tenant networks, the network node terminates VXLAN mesh

tunnels and sends traffic to external provider VLAN networks.

Therefore, all tagged interfaces must be configured directly

in Neutron OVS as internal ports without Linux bridges.

Bond0 is added into br-floating, which is mapped as physnet1

into the Neutron provider networks. br-mgm and br-mesh

are Neutron OVS internal ports with tags and IP addresses.

As there is no need to handle storage traffic on the network nodes,

all the sub-interfaces can be created in Neutron OVS. This also

allows for the creation of VLAN providers through the Neutron API.

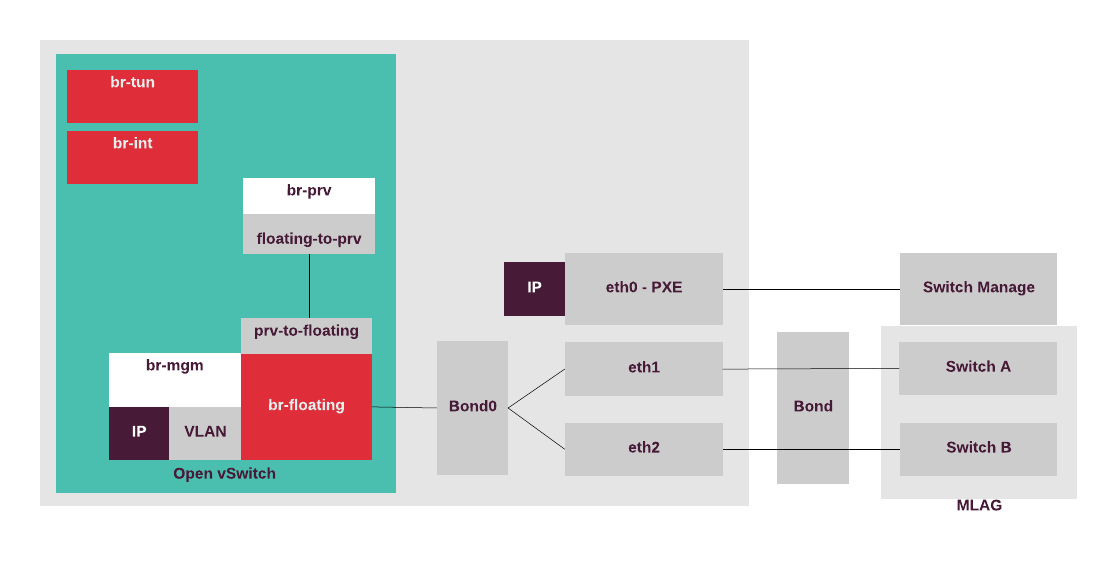

The following diagram displays the network node configuration for the use case with Neutron VXLAN tenant networks and external access configured on the network node only.

Network node configuration for VLAN tenant networks¶

In the VLAN-based tenant networks, the network node terminates private VLANs

and sends traffic to the external provider of VLAN networks.

Therefore, all tagged interfaces must be configured directly

in Neutron OVS as internal ports without Linux bridges.

Bond0 is added into br-floating, which is mapped as physnet1

into the Neutron provider networks. br-floating is patched with

br-prv which is mapped as physnet2 for VLAN tenant

network traffic. br-mgm is an OVS internal port with a tag and

an IP address. br-prv is the Neutron OVS bridge which is

connected to br-floating through the patch interface. As storage

traffic handling on the network nodes is not required, all the

sub-interfaces can be created in Neutron OVS which enables creation

of VLAN providers through the Neutron API.

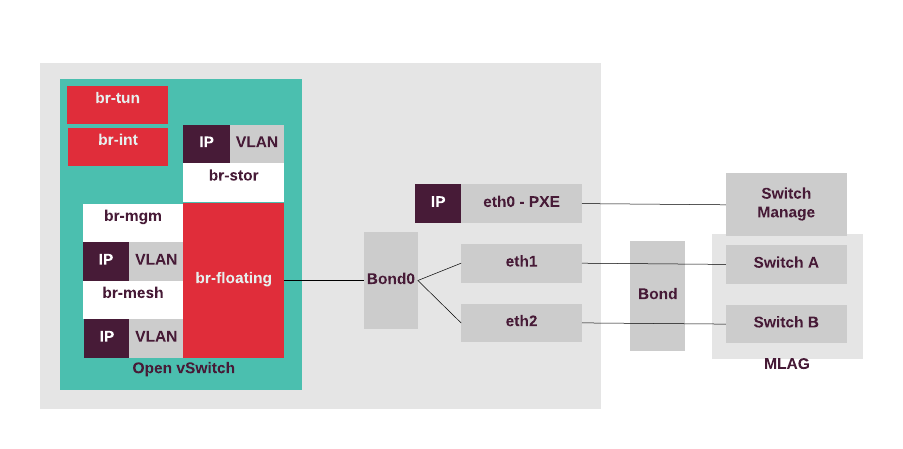

The following diagram displays the network node configuration for the use case with Neutron VLAN tenant networks and external access configured on the network node only.

VCP hosts networking¶

Each physical server that hosts a KVM on which the Virtualized Control Plane (VCP) services run must have the following network configuration:

| Bridge | Description | Interfaces | IP |

|---|---|---|---|

br-pxe |

PXE Boot traffic | eth0 | Dynamic |

br-bond0 |

Tenant internal and external traffic | bond0 (eth1/eth2) |

No IP |

br-mgm |

OpenStack and other management traffic | bond0.<management_VLAN> |

Static |

br-prx |

Proxy traffic | bond0.<proxy_VLAN> |

No IP |

Neutron VXLAN tenant networks with network nodes for SNAT (DVR for all)¶

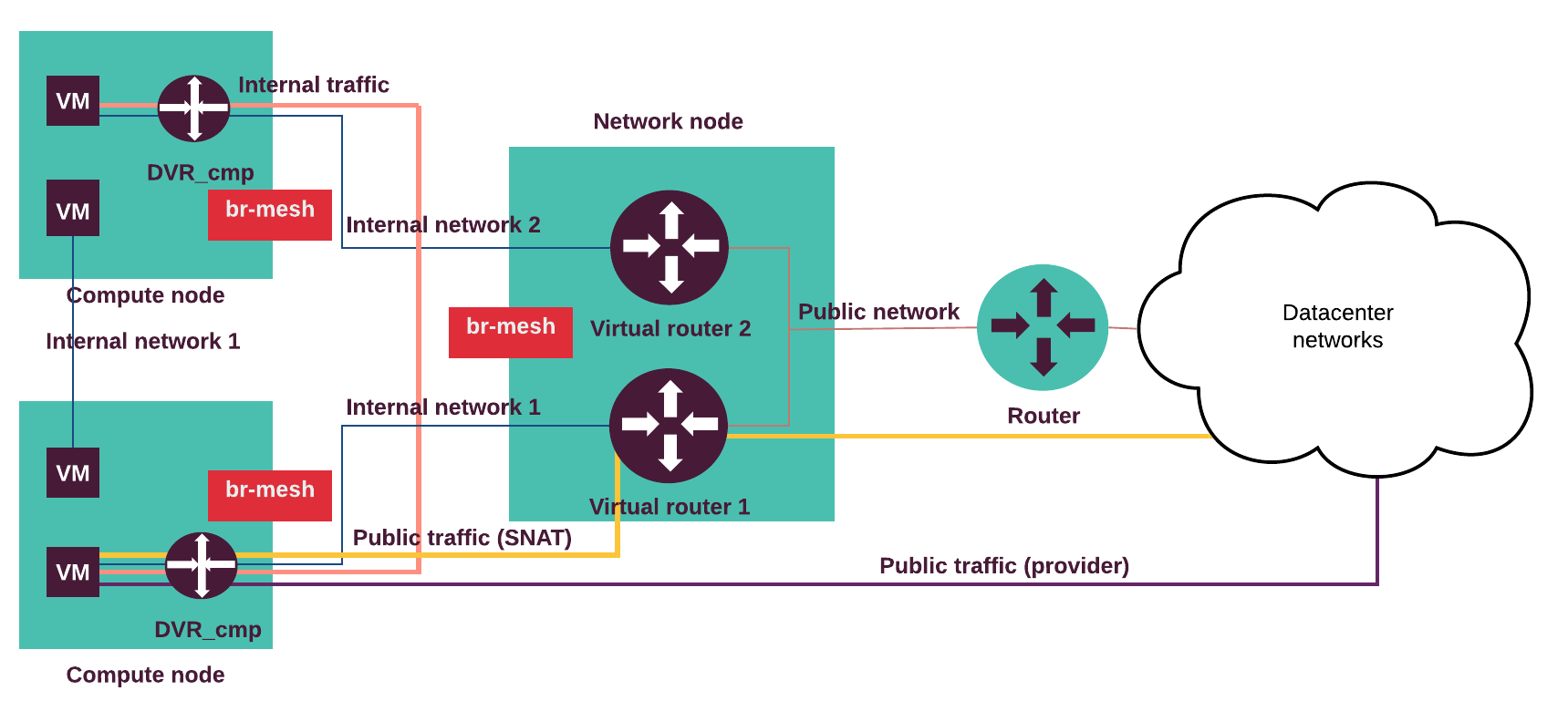

If you configure your network with Neutron OVS VXLAN tenant networks with network nodes for SNAT and Distributed Virtual Routers (DVR) on the compute nodes, network nodes perform SNAT and routing between tenant and public networks. The compute nodes running DVRs perform routing between tenant networks, as well as routing to public networks in cases when public networks (provider, externally routed) are exposed or Floating IP addresses are used.

The following diagram displays internal and external traffic flow.

The internal traffic from one tenant virtual machine located on the virtual Internal network 1 goes to another virtual machine located in the Internal network 2 through the DVRs on the compute nodes. The external traffic (SNAT) from a virtual machine goes through the Internal network 1 and the DVR on the compute node to the virtual router on the network node and through the Public network to the outside network. The external routable traffic from a virtual machine on the compute nodes goes through the Internal network 1 and the DVR on the compute node through the Control or Public network to the outside network.

Traffic flow examples:

- A virtual machine without a floating IP address sends traffic to a

destination outside the Public network (N-S). The Internal network 1

is connected to a public network through the Neutron router.

The virtual machine (VM) is connected to the Internal network 1.

- The VM sends traffic through the virtual router to the network node.

- The network node performs SNAT, de-encapsulates and forwards traffic to the public network’s external gateway router.

- Return path same.

- A virtual machine with a floating IP address sends traffic to a destination

outside the Public network (N-S). The compute node with a DVR hosting the

VM is connected to a public network. An Internal network 1 is connected to

the external network through the Neutron router. The VM is connected to the

Internal network 1.

- The VM sends traffic through the compute node DVR to a public network (egress).

- The compute node DVR performs SNAT, de-encapsulates and forwards traffic to the public network’s external gateway router.

- Return path (ingress) same (DNAT).

- A virtual machine on an internal (private, tenant) network sends traffic to

a destination IP address on a public (provider, externally routed) network

(E-W). The compute node with DVR hosting the VM is connected to the provider

network. The Internal network 1 is connected to the provider network

through the Neutron router. The VM is connected to the Internal network 1.

- The VM sends traffic through the compute node DVR to a destination IP on a public network.

- The compute node DVR de-encapsulates and forwards traffic to a public network (no NAT).

- Return path same.

- A virtual machine (VM1) sends traffic to another VM (VM2) located on

separate host(E-W).

The Internal network 1 is connected to the Internal network 2

through the Neutron router. The (VM1) is connected to the Internal network 1

and the VM2 is connected to the Internal network 2.

- The VM1 sends traffic to the VM2 through the compute node DVR.

- The DVR on the compute node hosting VM1 forwards encapsulated traffic to the DVR on the compute node hosting VM2.

- Return path same.

The compute nodes can access the external network,

therefore, there is the OVS bridge called br-floating.

All Open vSwitch bridges are automatically created by the Neutron

OVS agent. For a highly-available production environment, network

interface bonding is required.

The separation of the traffic types is done by the bonded tagged

sub-interfaces,

such as bond.x for the virtualized control plane traffic (management IP),

bond.y for the data plane bridge (br-mesh) that provides VTEP for

OVS, bond.z for storage, and others. The IP address of br-mesh is used