Install DTR


DTR system requirements

Docker Trusted Registry can be installed on-premises or in the cloud. Before installing, make sure your infrastructure meets these requirements.

Hardware and software requirements

You can install DTR on-premises or on a cloud provider. To install DTR, all nodes must:

- Be a worker node managed by UCP (Universal Control Plane). See the compatibility matrix for version compatibility.

- Have a fixed hostname.

Minimum requirements

- 16GB of RAM for nodes running DTR

- 2 vCPUs for nodes running DTR

- 10GB of free disk space

Recommended production requirements

- 16GB of RAM for nodes running DTR

- 4 vCPUs for nodes running DTR

- 25-100GB of free disk space

Windows container images are typically larger than Linux images, so consider provisioning more local storage for Windows nodes and for DTR deployments that will store Windows container images.
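The sizing figures above can be expressed as a small check. The sketch below is a hypothetical shell function, `dtr_sizing_tier`, that takes RAM in GB, the vCPU count, and free disk space in GB, and reports whether a node is below the minimum, at the minimum, or at the recommended production level. It reads the 25-100GB recommendation as "at least 25 GB" and does not account for the extra storage that Windows images need.

```shell
# Hypothetical helper (not part of DTR): classify a node against the sizing
# figures listed above. Arguments are whole numbers.
# Usage: dtr_sizing_tier RAM_GB VCPUS FREE_DISK_GB
dtr_sizing_tier() (
  ram_gb=$1 vcpus=$2 disk_gb=$3
  # Minimum: 16 GB RAM, 2 vCPUs, 10 GB free disk.
  if [ "$ram_gb" -lt 16 ] || [ "$vcpus" -lt 2 ] || [ "$disk_gb" -lt 10 ]; then
    echo "below-minimum"
    exit 1
  fi
  # Recommended for production: 16 GB RAM, 4 vCPUs, 25-100 GB free disk
  # (treated here as "at least 25 GB").
  if [ "$vcpus" -ge 4 ] && [ "$disk_gb" -ge 25 ]; then
    echo "recommended"
  else
    echo "minimum"
  fi
)
```

For example, `dtr_sizing_tier 16 4 50` prints `recommended`. On Linux, `nproc` gives the vCPU count and `df -h` the free disk space; note that `/proc/meminfo` reports slightly less than the installed RAM.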

Ports used

When installing DTR on a node, make sure the following ports are open on that node:

| Direction | Port | Purpose |
|---|---|---|
| in | 80/tcp | Web app and API client access to DTR. |
| in | 443/tcp | Web app and API client access to DTR. |

These ports are configurable when installing DTR.
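Before installing, you can check that nothing else on the node already listens on these ports. The hypothetical `dtr_port_conflicts` function below reads the output of `ss -ltnH` (listening TCP sockets, numeric, no header) and prints any port that would conflict. It defaults to 80 and 443; if you choose other ports at install time (the DTR install reference lists `--replica-http-port` and `--replica-https-port` for this), pass those ports as arguments instead.

```shell
# Hypothetical pre-flight check (not part of DTR): list which of the ports
# DTR will use are already taken on this node. Reads `ss -ltnH` output on
# stdin; column 4 of that output is the local "address:port".
# Usage: ss -ltnH | dtr_port_conflicts [HTTP_PORT] [HTTPS_PORT]
dtr_port_conflicts() (
  http_port=${1:-80} https_port=${2:-443}
  awk -v a="$http_port" -v b="$https_port" '
    {
      # The port is whatever follows the last colon, which also handles
      # IPv6 forms such as [::]:443.
      n = split($4, parts, ":")
      if (parts[n] == a || parts[n] == b) print parts[n]
    }
  ' | sort -un
)
```

Run it as `ss -ltnH | dtr_port_conflicts`; empty output means both ports are free.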

Compatibility and maintenance lifecycle

Docker Enterprise Edition is a software subscription that includes three products:

- Docker Enterprise Engine

- Docker Trusted Registry

- Docker Universal Control Plane

Learn more about the maintenance lifecycle for these products.

Install DTR online

Docker Trusted Registry (DTR) is a containerized application that runs on a swarm managed by the Universal Control Plane (UCP). It can be installed on-premises or on cloud infrastructure.

Step 1. Validate the system requirements

Before installing DTR, make sure your infrastructure meets the DTR system requirements.

Step 2. Install UCP

Since DTR requires Docker Universal Control Plane (UCP) to run, you need to install UCP for production on all the nodes where you plan to install DTR.

DTR needs to be installed on a worker node that is being managed by UCP. You cannot install DTR on a standalone Docker Engine.

Step 3. Install DTR

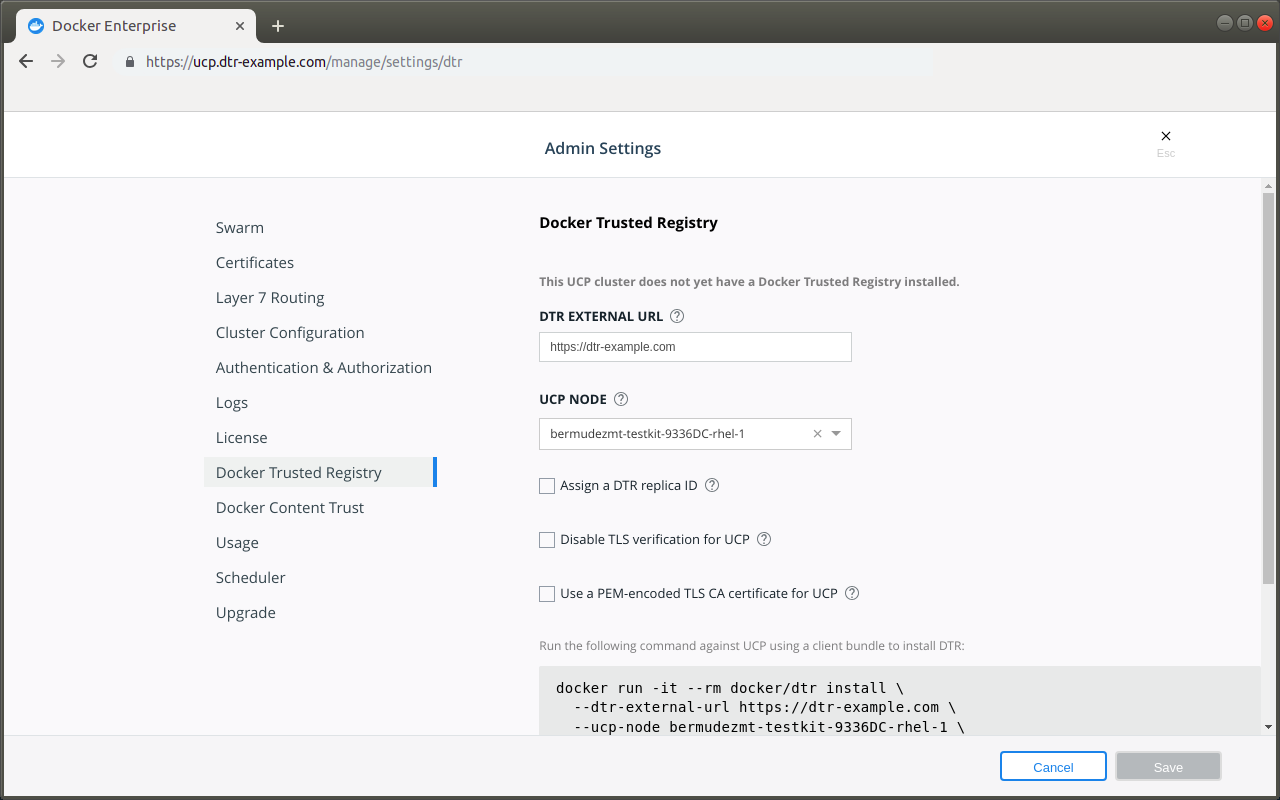

Once UCP is installed, navigate to the UCP web UI. In the Admin Settings, choose Docker Trusted Registry.

After you configure all the options, you’ll have a snippet that you can use to deploy DTR. It should look like this:

```shell
# Pull the latest version of DTR
$ docker pull docker/dtr:2.6.15

# Install DTR
$ docker run -it --rm \
  docker/dtr:2.6.15 install \
  --ucp-node <ucp-node-name> \
  --ucp-insecure-tls
```

You can run that snippet on any node where Docker is installed. For example, you can SSH into a UCP node and run the DTR installer from there. By default, the installer runs in interactive mode and prompts you for any additional information it needs.

By default, DTR is deployed with self-signed certificates, so your UCP deployment might not be able to pull images from DTR. Use the optional `--dtr-external-url <dtr-domain>:<port>` flag when deploying DTR so that UCP is automatically reconfigured to trust DTR. Because the HSTS (HTTP Strict-Transport-Security) header is included in all API responses, make sure to specify the FQDN (Fully Qualified Domain Name) of your DTR, or your browser may refuse to load the web interface.
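The external URL and FQDN advice can be folded into the install step. The following is a sketch of a hypothetical wrapper, `dtr_install`, that refuses bare hostnames and IPv4 addresses and then runs the same installer image as the snippet in Step 3 with `--dtr-external-url` added. The FQDN test is only a rough pattern check, and the `DRY_RUN` variable belongs to this sketch, not to DTR.

```shell
# Hypothetical wrapper (not part of DTR): install DTR with an external URL so
# UCP is reconfigured to trust DTR's certificate. Node name and URL are
# placeholders. DRY_RUN=1 prints the command instead of running it.
# Usage: dtr_install UCP_NODE DTR_HOST[:PORT]
dtr_install() (
  ucp_node=$1 external_url=$2
  host=${external_url%%:*}
  # Rough FQDN check for HSTS: require a dot, and at least one character
  # that is not a digit or dot (this rejects IPv4 addresses).
  case $host in
    *.*) ;;
    *) echo "error: '$host' is not a fully qualified domain name" >&2; exit 2 ;;
  esac
  case $host in
    *[!0-9.]*) ;;
    *) echo "error: '$host' looks like an IP address, not an FQDN" >&2; exit 2 ;;
  esac
  set -- docker run -it --rm docker/dtr:2.6.15 install \
    --ucp-node "$ucp_node" \
    --dtr-external-url "$external_url" \
    --ucp-insecure-tls
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
)
```

For example, `DRY_RUN=1 dtr_install node-1 dtr.example.com:443` prints the full install command, while `dtr_install node-1 dtr:443` stops with an error before contacting UCP.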

Step 4. Check that DTR is running

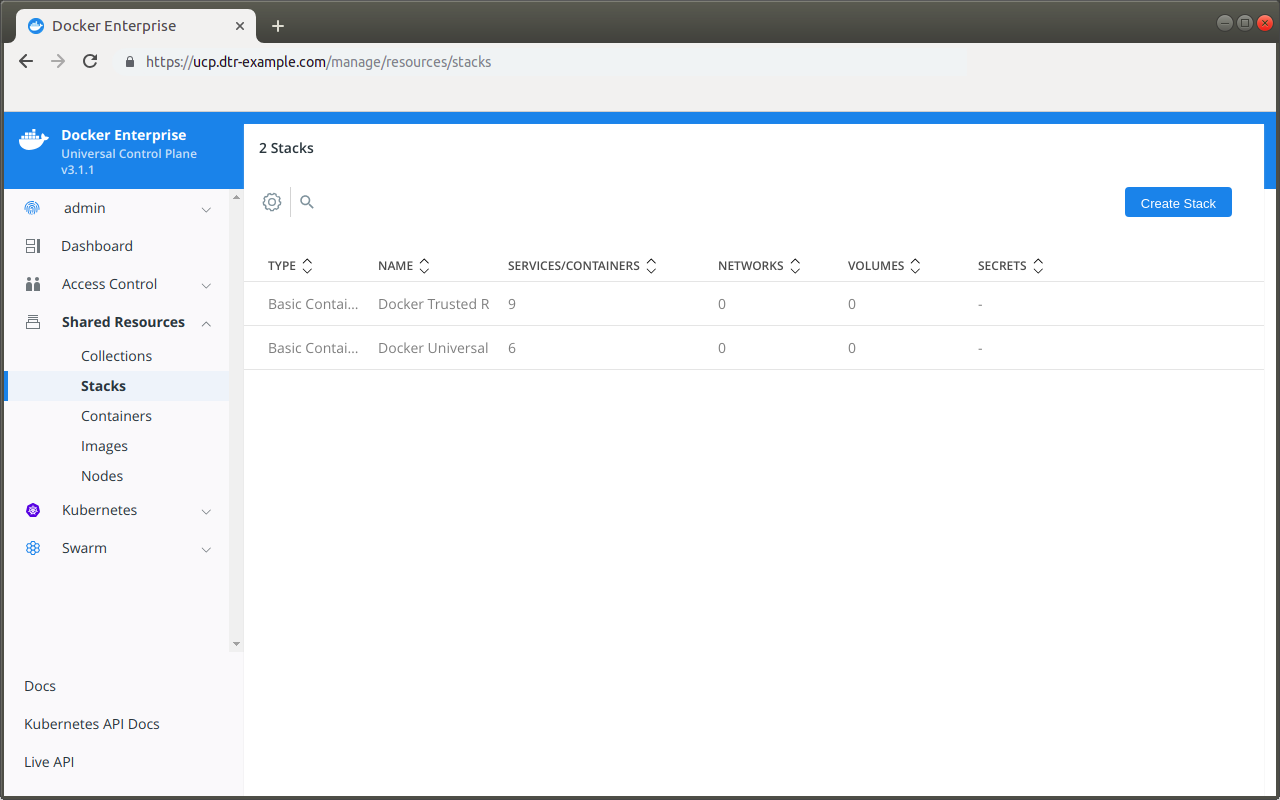

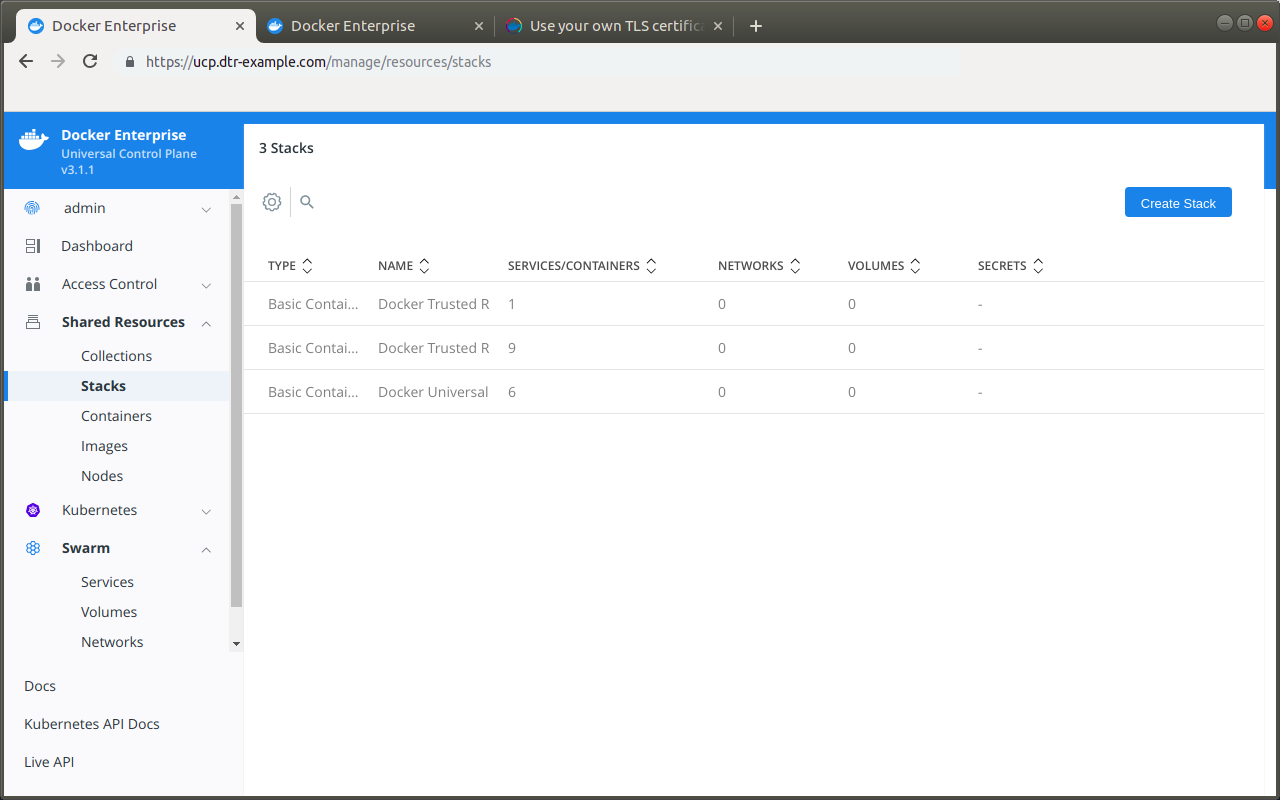

In your browser, open the Docker Universal Control Plane web interface and navigate to Shared Resources > Stacks. DTR should be listed as an application.

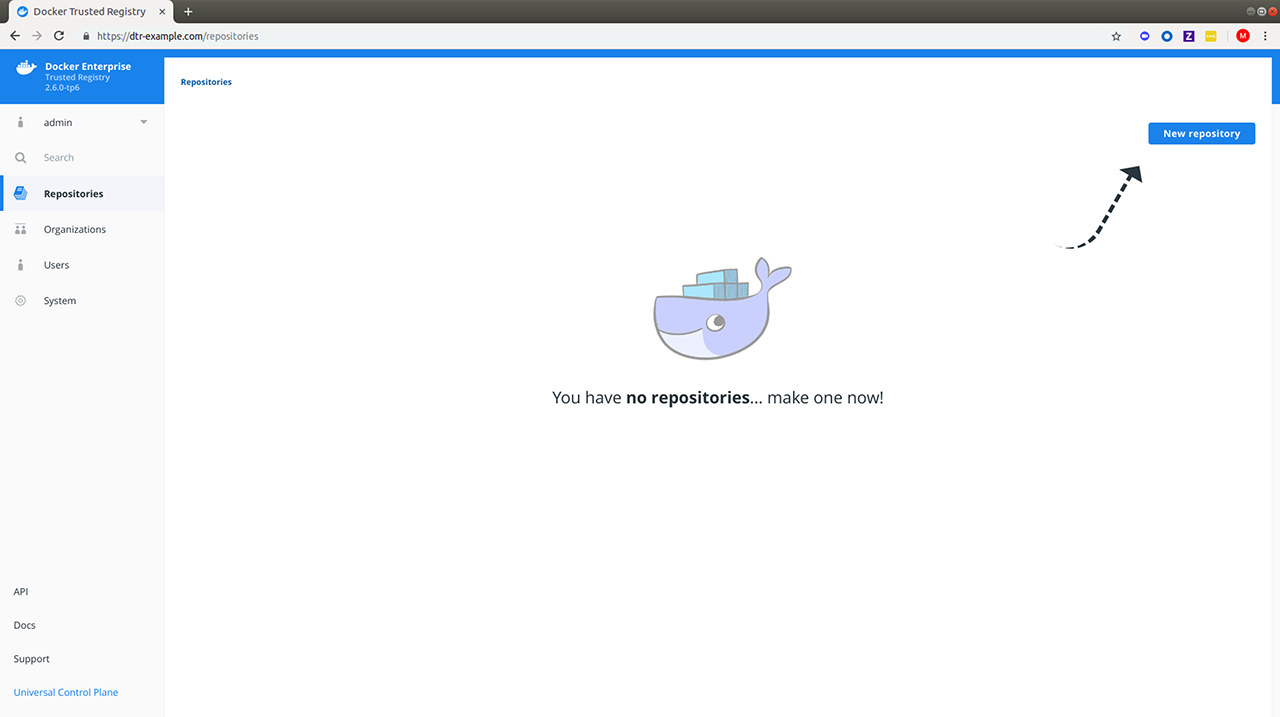

You can also access the DTR web interface to make sure it is working. In your browser, navigate to the address where you installed DTR.
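To script this check, you can query a replica's health endpoint instead of opening a browser. This sketch assumes, based on the DTR load-balancing documentation, that each replica answers `GET /_ping` with HTTP 200 and a JSON body containing `"Healthy": true` when it is healthy; confirm this for your DTR version. The function names and the example URL are hypothetical.

```shell
# Hypothetical health check (not part of DTR). Assumes GET /_ping returns
# HTTP 200 and a JSON body with "Healthy": true on a healthy replica.
# dtr_ping_ok STATUS BODY succeeds only if both conditions hold.
dtr_ping_ok() (
  status=$1 body=$2
  [ "$status" = 200 ] || exit 1
  printf '%s\n' "$body" | grep -q '"Healthy": *true'
)

# Usage: check_dtr https://dtr.example.com   (placeholder URL)
check_dtr() (
  url=$1
  # -k skips certificate verification while DTR still uses self-signed
  # certificates; -w appends the HTTP status code on its own line.
  resp=$(curl -ks -w '\n%{http_code}' "$url/_ping") || exit 1
  status=$(printf '%s\n' "$resp" | tail -n 1)
  body=$(printf '%s\n' "$resp" | sed '$d')
  dtr_ping_ok "$status" "$body"
)
```

For example, `check_dtr https://dtr.example.com && echo healthy`. Drop `-k` once DTR serves trusted certificates.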

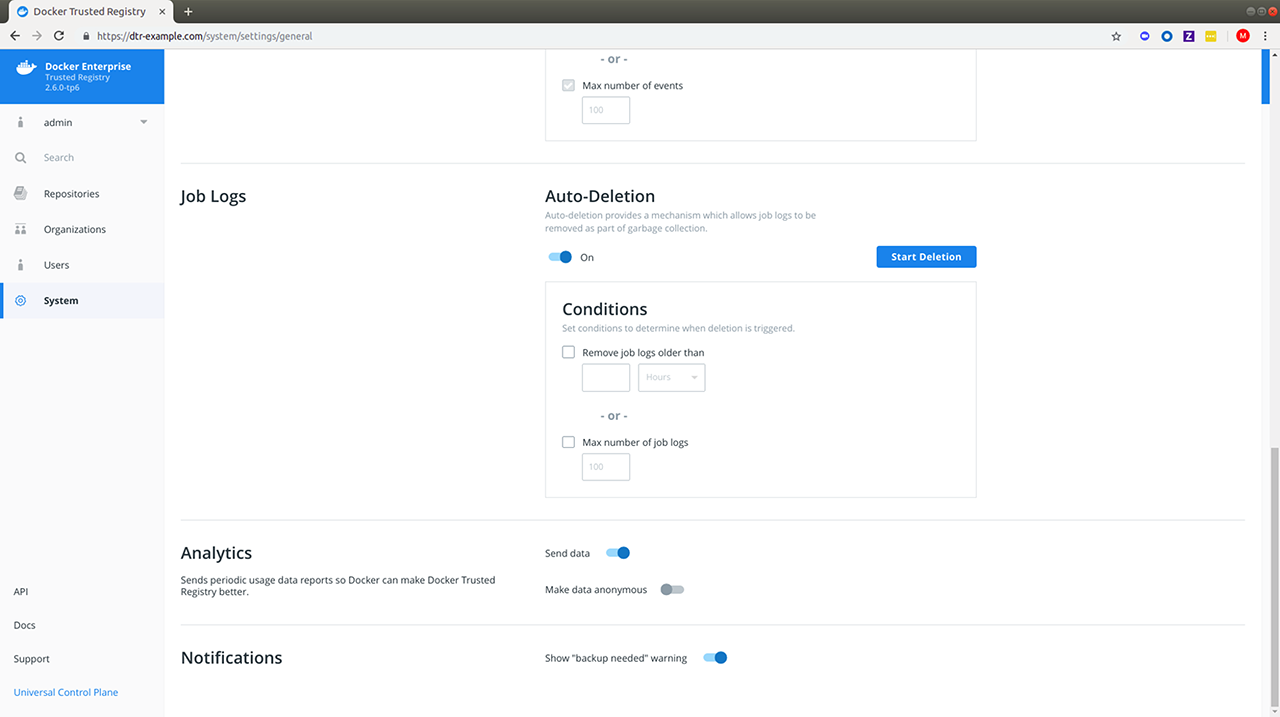

Step 5. Configure DTR

After installing DTR, you should configure:

- The certificates used for TLS communication. Learn more.

- The storage backend to store the Docker images. Learn more.

To configure these, go to the Settings page of the DTR web interface.

Step 6. Test pushing and pulling

Now that you have a working installation of DTR, you should test that you can push and pull images to it:

- Configure your local Docker Engine

- Create a repository

- Push and pull images
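The three checks above can be exercised with a short smoke test. The hypothetical `dtr_smoke_test` function below logs in, tags a small public image (`busybox`, which assumes the client can reach Docker Hub) into a DTR repository, pushes it, deletes the local tag, and pulls it back. It assumes your local Docker Engine already trusts the DTR certificate and that the repository exists; the namespace, repository name and tag are placeholders.

```shell
# Hypothetical smoke test (not part of DTR). DRY_RUN=1 prints the commands
# instead of running them.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# Usage: dtr_smoke_test DTR_HOST [NAMESPACE] [REPO]
dtr_smoke_test() (
  host=$1 namespace=${2:-admin} repo=${3:-smoke-test}
  # DTR image references take the form <dtr-host>/<namespace>/<repo>:<tag>.
  ref="$host/$namespace/$repo:1.0"
  run docker login "$host" || exit 1
  run docker pull busybox:latest || exit 1
  run docker tag busybox:latest "$ref" || exit 1
  run docker push "$ref" || exit 1
  # Delete the local tag so the final pull has to go back to DTR.
  run docker image rm "$ref" || exit 1
  run docker pull "$ref"
)
```

Run `DRY_RUN=1 dtr_smoke_test dtr.example.com` first to review the six commands.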

Step 7. Join replicas to the cluster

This step is optional.

To set up DTR for high availability, you can add more replicas to your DTR cluster. Adding more replicas allows you to load-balance requests across all replicas, and keep DTR working if a replica fails.

For high availability, use 3, 5, or 7 DTR replicas. The nodes where you install these replicas must also be managed by UCP.
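The odd replica counts follow from quorum arithmetic. Assuming DTR needs a majority of its replicas, floor(N/2) + 1 out of N, to stay available, a cluster of N replicas survives floor((N-1)/2) failures. The sketch below prints that table for 1 to 7 replicas; the function names are hypothetical.

```shell
# Worked example, assuming DTR needs a majority (quorum) of replicas.
# Shell arithmetic uses integer division, which rounds down.
dtr_quorum() { echo $(( $1 / 2 + 1 )); }
dtr_fault_tolerance() { echo $(( $1 - $1 / 2 - 1 )); }

for n in 1 2 3 4 5 6 7; do
  printf '%s replicas: quorum %s, tolerates %s failure(s)\n' \
    "$n" "$(dtr_quorum "$n")" "$(dtr_fault_tolerance "$n")"
done
```

The output shows that 4 replicas tolerate only one failure, the same as 3, and 6 tolerate two, the same as 5, so an even count adds nodes without adding resilience.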

To add replicas to a DTR cluster, use the docker/dtr join command:

1. Load your UCP user bundle.

2. Run the join command.

   When you join a replica to a DTR cluster, you need to specify the ID of a replica that is already part of the cluster. You can find an existing replica ID on the Shared Resources > Stacks page in UCP.

   Then run:

   ```shell
   docker run -it --rm \
     docker/dtr:2.7.6 join \
     --ucp-node <ucp-node-name> \
     --existing-replica-id <existing-replica-id> \
     --ucp-insecure-tls
   ```

   Caution

   The `<ucp-node-name>` following the `--ucp-node` flag is the target node on which to install the DTR replica. This is NOT the UCP Manager URL.

3. Check that all replicas are running.

   In your browser, open the Docker Universal Control Plane web interface and navigate to Shared Resources > Stacks. All replicas should be displayed.
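When you add several replicas, the join step is repeated once per node. The hypothetical `dtr_join_all` function below runs those joins one after another and stops at the first failure. It assumes your UCP client bundle is loaded, passes the existing replica ID with the `--existing-replica-id` flag from the join command reference, and defaults to the `docker/dtr:2.7.6` image used in the join command; set `DTR_IMAGE` to match the version your cluster actually runs. Node names and the replica ID are placeholders.

```shell
# Hypothetical helper (not part of DTR): join worker nodes as DTR replicas,
# one at a time. DRY_RUN=1 prints the commands instead of running them.
DTR_IMAGE=${DTR_IMAGE:-docker/dtr:2.7.6}

run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# Usage: dtr_join_all EXISTING_REPLICA_ID NODE...
dtr_join_all() (
  existing_id=$1; shift
  for node in "$@"; do
    # Joins run sequentially; stop at the first failure.
    run docker run -it --rm "$DTR_IMAGE" join \
      --ucp-node "$node" \
      --existing-replica-id "$existing_id" \
      --ucp-insecure-tls || exit 1
  done
)
```

For example, `DRY_RUN=1 dtr_join_all <replica-id> node-2 node-3` prints the two join commands so you can review them before running.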

Install DTR offline

The procedure to install Docker Trusted Registry on a host is the same, whether that host has access to the internet or not.

The only difference when installing on an offline host is that instead of pulling the DTR images from Docker Hub, you use a computer that is connected to the internet to download a single package with all the images. Then you copy that package to the host where you’ll install DTR.

Download the offline package

Use a computer with internet access to download a package with all DTR images:

```shell
$ wget <package-url> -O dtr.tar.gz
```

Now that you have the package on your local machine, you can transfer it to the machines where you want to install DTR.

For each machine where you want to install DTR:

1. Copy the DTR package to that machine.

   ```shell
   $ scp dtr.tar.gz <user>@<host>:
   ```

2. Use SSH to log in to the host where you transferred the package.

3. Load the DTR images.

   Once the package is transferred to the host, you can use the `docker load` command to load the Docker images from the tar archive:

   ```shell
   $ docker load -i dtr.tar.gz
   ```
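With many offline hosts, the copy-and-load steps can be looped. The sketch below is a hypothetical `dtr_distribute` function; it assumes key-based SSH access as the given user and that this user can run `docker` on each host. `docker load` accepts gzip-compressed archives, so the package does not need to be unpacked first.

```shell
# Hypothetical helper (not part of DTR): copy the offline package to each
# host and load it there. DRY_RUN=1 prints the commands instead.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# Usage: dtr_distribute USER HOST...
dtr_distribute() (
  user=$1; shift
  for host in "$@"; do
    # The trailing colon makes scp copy into the remote home directory.
    run scp dtr.tar.gz "$user@$host:" || exit 1
    # docker load reads gzip-compressed archives directly.
    run ssh "$user@$host" docker load -i dtr.tar.gz || exit 1
  done
)
```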

Install DTR

Now that the offline hosts have all the images needed to install DTR, you can install DTR on them by following the same steps as for an online installation.

Preventing outgoing connections

DTR makes outgoing connections to:

- Report analytics

- Check for new versions

- Check online licenses

- Update the vulnerability scanning database

All of these online connections are optional. You can disable, or choose not to use, any or all of these features on the admin settings page.

Upgrade DTR

DTR uses semantic versioning and Docker aims to achieve specific guarantees while upgrading between versions. While downgrades are not supported, Docker supports upgrades according to the following rules:

- When upgrading from one patch version to another, you can skip patch versions because no data migration is done for patch versions.

- When upgrading between minor versions, you *cannot* skip versions, but you can upgrade from any patch version of the previous minor version to any patch version of the current minor version.

- When upgrading between major versions, make sure to upgrade one major version at a time, and upgrade to the earliest available minor version of the new major version. We strongly recommend upgrading to the latest minor/patch version of your current major version first.

| Description | From | To | Supported |
|---|---|---|---|
| patch upgrade | `x.y.0` | `x.y.1` | yes |
| skip patch version | `x.y.0` | `x.y.2` | yes |
| patch downgrade | `x.y.2` | `x.y.1` | no |
| minor upgrade | `x.y.*` | `x.y+1.*` | yes |
| skip minor version | `x.y.*` | `x.y+2.*` | no |
| minor downgrade | `x.y.*` | `x.y-1.*` | no |
| skip major version | `x.*.*` | `x+2.*.*` | no |
| major downgrade | `x.*.*` | `x-1.*.*` | no |
| major upgrade | `x.y.z` | `x+1.0.0` | yes |
| major upgrade skipping minor version | `x.y.z` | `x+1.y+1.z` | no |
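The rules in this table can be checked mechanically before you schedule an upgrade. The hypothetical `dtr_upgrade_supported` function below takes two plain `MAJOR.MINOR.PATCH` versions and succeeds only for the supported rows. It reads "upgrade to the earliest available minor version" as "minor version 0 of the next major version, any patch", which is an interpretation rather than an official rule.

```shell
# Hypothetical helper (not part of DTR): exit status 0 if upgrading from
# version $1 to version $2 follows the rules in the table above.
# Usage: dtr_upgrade_supported FROM TO   e.g. 2.5.3 2.6.8
dtr_upgrade_supported() (
  IFS=.
  # Split both "MAJOR.MINOR.PATCH" strings into six positional parameters.
  set -- $1 $2
  fmaj=$1 fmin=$2 fpat=$3 tmaj=$4 tmin=$5 tpat=$6
  if [ "$tmaj" -eq "$fmaj" ]; then
    if [ "$tmin" -eq "$fmin" ]; then
      # Patch upgrade: skipping patch versions is fine, downgrades are not.
      [ "$tpat" -gt "$fpat" ]
    else
      # Minor upgrade: exactly one minor version, any patch on either side.
      [ "$tmin" -eq "$((fmin + 1))" ]
    fi
  elif [ "$tmaj" -eq "$((fmaj + 1))" ]; then
    # Major upgrade: one major version, to its first minor version.
    [ "$tmin" -eq 0 ]
  else
    # Skipped major versions and major downgrades are unsupported.
    false
  fi
)
```

For example, `dtr_upgrade_supported 2.5.3 2.6.8 && echo supported` prints `supported`, while going from `2.5.3` to `2.7.0` fails because it skips a minor version.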

There may be at most a few seconds of interruption during the upgrade of a DTR cluster. Schedule the upgrade to take place outside of peak hours to avoid any business impacts.

2.5 to 2.6 upgrade

There are [important changes to the upgrade process](/ee/upgrade) that, if not followed correctly, can affect the availability of applications running on the Swarm during upgrades. These constraints apply to any upgrade from a version earlier than 18.09 to version 18.09 or later. Additionally, to ensure high availability during the DTR upgrade, you can drain the DTR replicas and move their workloads to updated workers. To do this, join new workers as DTR replicas to your existing cluster and then remove the old replicas. See the docker/dtr join and docker/dtr remove command references for command options and details.
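The replace-the-replicas approach works because new replicas are joined before old ones are removed, so the cluster never has fewer replicas than it started with. The sketch below is a hypothetical `dtr_rotate_replicas` function that encodes that ordering. The image must match the version the cluster currently runs, and `remove` is left interactive so that it prompts for which replica to remove; check the `docker/dtr remove` reference for the flags that make it non-interactive.

```shell
# Hypothetical helper (not part of DTR): join new workers as replicas first,
# then remove the same number of old replicas. DRY_RUN=1 prints commands.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# Usage: dtr_rotate_replicas IMAGE OLD_REPLICA_COUNT NEW_NODE...
dtr_rotate_replicas() (
  image=$1 old_count=$2; shift 2
  # Phase 1: join every new worker as a replica, one at a time.
  for node in "$@"; do
    run docker run -it --rm "$image" join --ucp-node "$node" --ucp-insecure-tls || exit 1
  done
  # Phase 2: remove the old replicas, one at a time (interactive prompts).
  i=0
  while [ "$i" -lt "$old_count" ]; do
    run docker run -it --rm "$image" remove --ucp-insecure-tls || exit 1
    i=$((i + 1))
  done
)
```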

Minor upgrade

Before starting your upgrade, make sure that:

- The version of UCP you are using is supported by the version of DTR you are trying to upgrade to. Check the compatibility matrix.

- You have a recent DTR backup.

- You disable Docker Content Trust in UCP.

- You meet all system requirements.

Step 1. Upgrade DTR to 2.5 if necessary

Make sure you are running DTR 2.5. If this is not the case, upgrade your installation to version 2.5 first.

Step 2. Upgrade DTR

Then pull the latest version of DTR:

```shell
docker pull docker/dtr:2.6.8
```

Make sure you have at least 16GB of available RAM on the node you are running the upgrade on. If the DTR node does not have access to the Internet, you can follow the Install DTR offline documentation to get the images.

Once you have the latest image on your machine (and the images on the target nodes if upgrading offline), run the upgrade command.

Note

The upgrade command can be run from any available node, as UCP is aware of which worker nodes have replicas.

```shell
docker run -it --rm \
  docker/dtr:2.6.8 upgrade \
  --ucp-insecure-tls
```

By default, the upgrade command runs in interactive mode and prompts you for any necessary information. See the upgrade command reference for other available flags.

The upgrade command will start replacing every container in your DTR cluster, one replica at a time. It will also perform certain data migrations. If anything fails or the upgrade is interrupted for any reason, you can rerun the upgrade command and it will resume from where it left off.
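Because the upgrade resumes where it left off, rerunning it after a failure is safe, which makes a bounded retry loop reasonable. The hypothetical `retry` function below reruns a command until it succeeds or a maximum number of attempts is reached.

```shell
# Hypothetical helper (not part of DTR): run a command up to MAX times,
# stopping at the first success.
# Usage: retry MAX COMMAND [ARG...]
retry() (
  max=$1; shift
  attempt=1
  until "$@"; do
    if [ "$attempt" -ge "$max" ]; then
      echo "giving up after $attempt attempts" >&2
      exit 1
    fi
    attempt=$((attempt + 1))
    echo "retrying ($attempt/$max)..." >&2
  done
)
```

To use it unattended with the upgrade, you would drop `-it` and pass the answers to the prompts as flags (for example `--ucp-url`, `--ucp-username` and `--ucp-password`); check the upgrade command reference for the full set your version requires.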

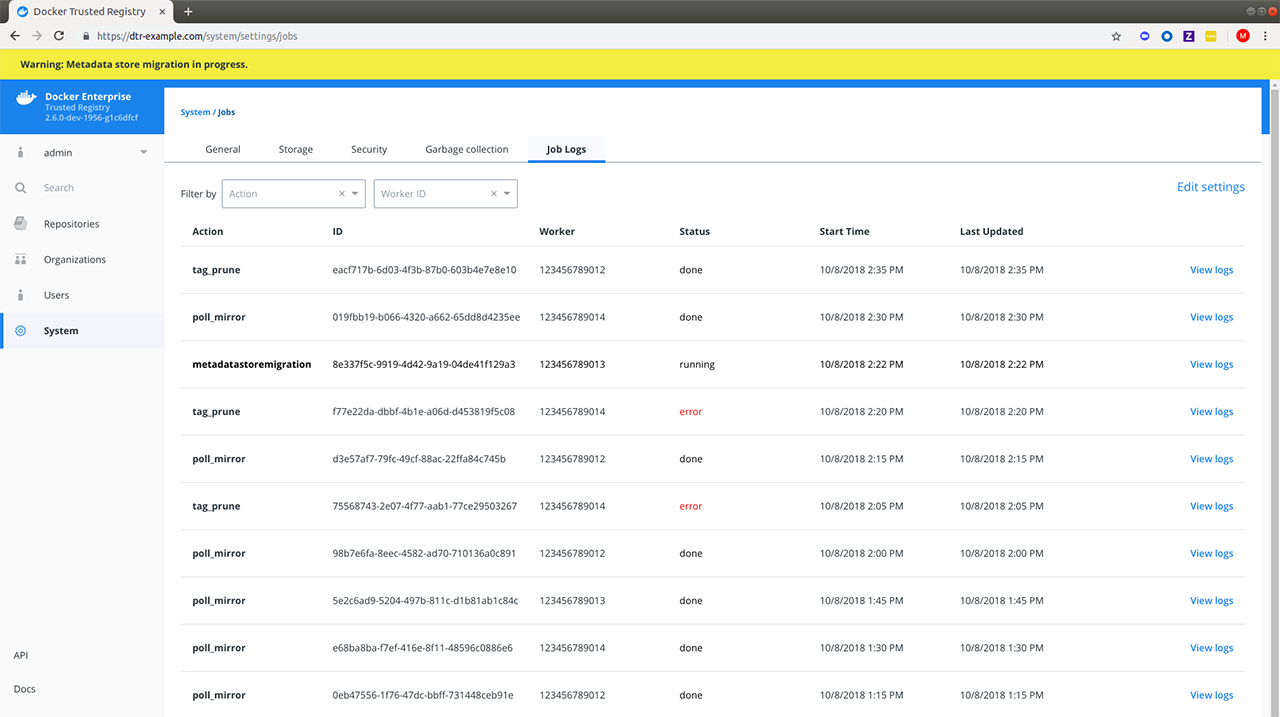

Metadata Store Migration

When upgrading from 2.5 to 2.6, the system runs a metadatastoremigration job after a successful upgrade. This job migrates the blob links for your images, which is necessary for online garbage collection. With 2.6, you can log in to the DTR web interface and navigate to System > Job Logs to check the status of the metadatastoremigration job. Refer to Audit Jobs via the Web Interface for more details.

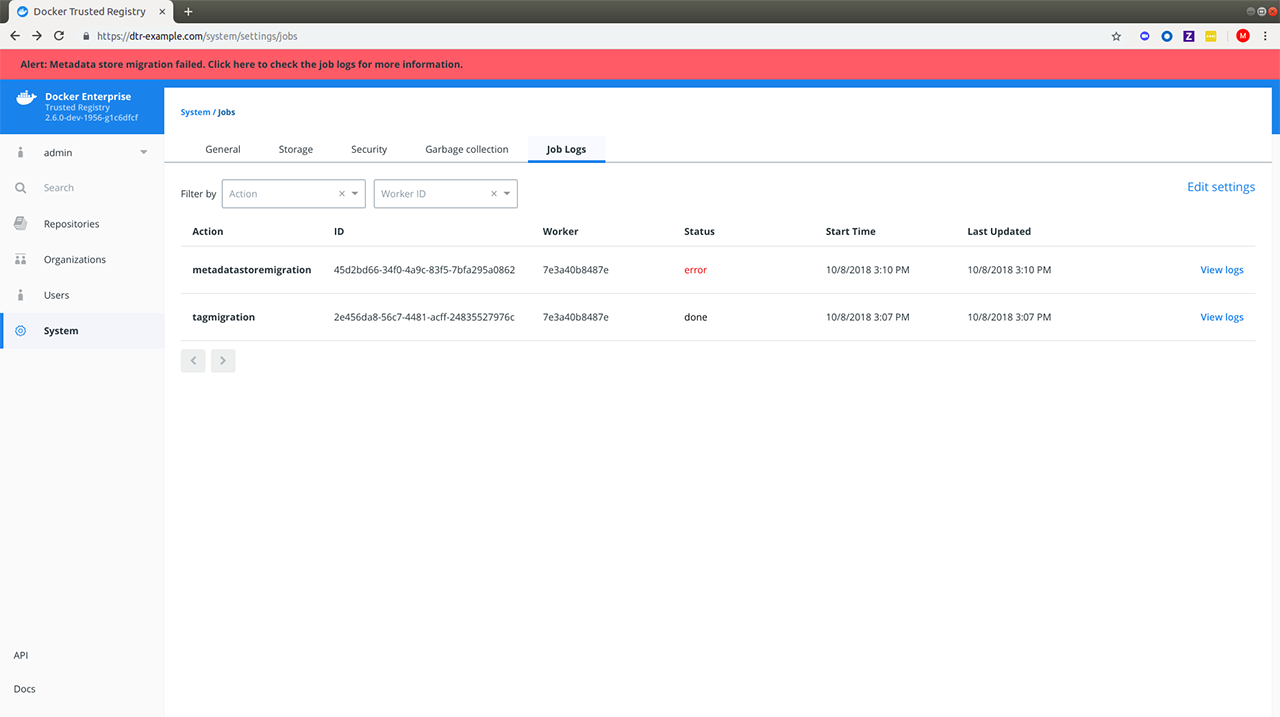

Garbage collection is disabled while the migration is running. If the metadatastoremigration job fails, the system retries it twice.

If all three attempts fail, you will have to retrigger the metadatastoremigration job manually. To do so, send a POST request to the /api/v0/jobs endpoint:

```shell
curl https://<dtr-external-url>/api/v0/jobs -X POST \
  -u username:accesstoken -H 'Content-Type: application/json' -d \
  '{"action": "metadatastoremigration"}'
```
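The request can be wrapped so that credentials come from the environment instead of being typed into the command. In the hypothetical `dtr_retrigger_migration` function below, `DTR_URL`, `DTR_USER` and `DTR_TOKEN` are assumed variable names, and `DRY_RUN=1` prints the request instead of sending it.

```shell
# Hypothetical wrapper (not part of DTR) around the POST to /api/v0/jobs.
dtr_retrigger_migration() (
  # Fail early with a message if any required variable is missing.
  : "${DTR_URL:?set DTR_URL, e.g. https://dtr.example.com}"
  : "${DTR_USER:?set DTR_USER}"
  : "${DTR_TOKEN:?set DTR_TOKEN to an access token}"
  payload='{"action": "metadatastoremigration"}'
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "POST $DTR_URL/api/v0/jobs $payload"
    exit 0
  fi
  # --fail makes curl exit non-zero on HTTP errors such as 401 or 404.
  curl --fail -sS -X POST "$DTR_URL/api/v0/jobs" \
    -u "$DTR_USER:$DTR_TOKEN" \
    -H 'Content-Type: application/json' \
    -d "$payload"
)
```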

Alternatively, select API from the bottom left navigation pane of the DTR web interface and use the Swagger UI to send your API request.

Patch upgrade

A patch upgrade changes only the DTR containers and is always safer than a minor version upgrade. The command is the same as for a minor upgrade.

DTR cache upgrade

If you have previously deployed a cache, make sure to upgrade the node dedicated to your cache to keep it in sync with your upstream DTR replicas. This prevents authentication errors and other unexpected behavior.

Download the vulnerability database

After upgrading DTR, you need to re-download the vulnerability database. Learn how to update your vulnerability database.

Uninstall DTR

To uninstall DTR, remove all data associated with each replica by running the destroy command once per replica:

```shell
docker run -it --rm \
  docker/dtr:2.7.6 destroy \
  --ucp-insecure-tls
```

You will be prompted for the UCP URL, UCP credentials, and which replica to destroy.

To see what options are available in the destroy command, check the destroy command reference documentation.
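Since destroy runs once per replica, removing a whole cluster is a loop. This sketch assumes the destroy command accepts `--replica-id`, as listed in its reference documentation; verify that for your version before relying on it. `destroy` permanently deletes replica data, so use the `DRY_RUN=1` mode of this hypothetical `dtr_destroy_all` helper to review the commands first. Replica IDs are placeholders.

```shell
# Hypothetical helper (not part of DTR): destroy several replicas in turn.
# Assumes destroy accepts --replica-id. DRY_RUN=1 prints the commands only.
DTR_IMAGE=${DTR_IMAGE:-docker/dtr:2.7.6}

run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# Usage: dtr_destroy_all REPLICA_ID...
dtr_destroy_all() (
  for id in "$@"; do
    # Stop at the first failure so later replicas are left untouched.
    run docker run -it --rm "$DTR_IMAGE" destroy \
      --replica-id "$id" \
      --ucp-insecure-tls || exit 1
  done
)
```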