Introduction¶

Warning

In correlation with the end of life (EOL) date for MSR 2.8.x, Mirantis stopped maintaining this documentation version as of 2022-05-27. The latest MSR product documentation is available here.

This documentation provides information on how to deploy and operate a Mirantis Secure Registry (MSR). The documentation is intended to help operators to understand the core concepts of the product. The documentation provides sufficient information to deploy and operate the solution.

The information provided in this documentation set is being constantly improved and amended based on the feedback and kind requests from the consumers of MSR.

Product Overview¶

Mirantis Secure Registry (MSR) is a solution that enables enterprises to store and manage their container images on-premises or in their virtual private clouds. Built-in security enables you to verify and trust the provenance and content of your applications and ensure secure separation of concerns. Using MSR, you can meet security and regulatory compliance requirements. In addition, the automated operations and integration with CI/CD speed up application testing and delivery. The most common use cases for MSR include:

- Helm chart repositories

Deploying applications to Kubernetes can be complex. Setting up a single application can involve creating multiple interdependent Kubernetes resources, such as pods, services, deployments, and replica sets. Each of these requires manual creation of a detailed YAML manifest file as well. This is a lot of work and time invested. With Helm charts (packages that consist of a few YAML configuration files and some templates that are rendered into Kubernetes manifest files) you can save time and install the software you need with all the dependencies, upgrade, and configure it.

- Automated development

Easily create an automated workflow where you push a commit that triggers a build on a CI provider, which pushes a new image into your registry. Then, the registry fires off a webhook and triggers deployment on a staging environment, or notifies other systems that a new image is available.

- Secure and vulnerability-free images

When an industry requires applications to comply with certain security standards to meet regulatory requirements, your applications are only as secure as the images that run them. To ensure that your images are secure and free of known vulnerabilities, track your images using a binary image scanner that detects components in images and identifies associated CVEs. In addition, you can run image enforcement policies to prevent vulnerable or inappropriate images from being pulled and deployed from your registry.

Reference Architecture¶

The Mirantis Secure Registry (MSR) Reference Architecture provides comprehensive technical information on MSR, including component particulars, infrastructure specifications, and networking and volumes detail.

Introduction to MSR¶

Mirantis Secure Registry (MSR) is Mirantis’s enterprise-grade image storage solution. Installed behind the firewall, either on-premises or on a virtual private cloud, MSR provides a secure environment from which users can store and manage Docker images.

Image and job management

MSR has a web-based user interface that you can use to browse images and audit repository events. With the UI, you can see which Dockerfile lines produced an image and, if security scanning is enabled, a list of all of the software installed in that image. You can also audit jobs with the web interface.

MSR can serve as a Continuous Integration and Continuous Delivery (CI/CD) component, in the building, shipping, and running of applications.

Availability

MSR is highly available through the use of multiple replicas of all containers and metadata. As such, MSR will continue to operate in the event of machine failure, thus allowing for repair.

Efficiency

MSR is able to reduce the bandwidth used when pulling Docker images by caching images closer to users. In addition, MSR can clean up unreferenced manifests and layers.

Built-in access control

As with Mirantis Kubernetes Engine (MKE), MSR uses Role Based Access Control (RBAC), which allows you to manage image access, either manually, with LDAP, or with Active Directory.

Security scanning

A security scanner is built into MSR, which can be used to discover the versions of the software that is in use in your images. This tool scans each layer and aggregates the results, offering a complete picture of what is being shipped as a part of your stack. Most importantly, as the security scanner is kept up-to-date by tapping into a periodically updated vulnerability database, it is able to provide unprecedented insight into your exposure to known security threats.

Image signing

MSR ships with Notary, which allows you to sign and verify images using Docker Content Trust. For more information on managing Notary data in MSR, refer to Using Notary to sign an image.

Components¶

Mirantis Secure Registry (MSR) is a containerized application that runs on a Mirantis Kubernetes Engine cluster.

Once you have MSR deployed, you use your Docker CLI client to log in, push, and pull images.

For high-availability you can deploy multiple MSR replicas, one on each MKE worker node.

All MSR replicas run the same set of services and changes to their configuration are automatically propagated to other replicas.

When you install MSR on a node, the following containers are started:

| Name | Description |
|---|---|
| dtr-api-<replica_id> | Executes the MSR business logic. It serves the MSR web application and API |
| dtr-garant-<replica_id> | Manages MSR authentication |
| dtr-jobrunner-<replica_id> | Runs cleanup jobs in the background |
| dtr-nginx-<replica_id> | Receives http and https requests and proxies them to other MSR components. By default it listens to ports 80 and 443 of the host |
| dtr-notary-server-<replica_id> | Receives, validates, and serves content trust metadata, and is consulted when pushing or pulling to MSR with content trust enabled |
| dtr-notary-signer-<replica_id> | Performs server-side timestamp and snapshot signing for content trust metadata |
| dtr-registry-<replica_id> | Implements the functionality for pulling and pushing Docker images. It also handles how images are stored |
| dtr-rethinkdb-<replica_id> | A database for persisting repository metadata |
| dtr-scanningstore-<replica_id> | Stores security scanning data |

All these components are for internal use of MSR. Don’t use them in your applications.

System requirements¶

Mirantis Secure Registry can be installed on-premises or on a cloud provider. Before installing, make sure your infrastructure meets these requirements.

To install MSR, all nodes must:

- Be a worker node managed by MKE (Mirantis Kubernetes Engine)
- Have a fixed hostname

Minimum requirements:

- 16GB of RAM for nodes running MSR
- 4 vCPUs for nodes running MSR
- 25GB of free disk space

Recommended production requirements:

- 32GB of RAM for nodes running MSR
- 4 vCPUs for nodes running MSR
- 100GB of free disk space

Note that Windows container images are typically larger than Linux ones and for this reason, you should consider provisioning more local storage for Windows nodes and for MSR setups that will store Windows container images.

When the image scanning feature is used, we recommend that you have at least 32 GB of RAM. As developers and teams push images into MSR, the repository grows over time. As such, you should regularly inspect RAM, CPU, and disk usage on MSR nodes, and increase resources whenever resource saturation is seen to occur on a regular basis.
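The minimum requirements listed above can be checked on a candidate Linux node before installation. The following is a minimal sketch, not an official Mirantis tool: the function names are hypothetical, and it assumes that Docker's data root is /var/lib/docker (falling back to / when that directory does not exist).

```shell
#!/bin/sh
# Minimum values taken from the requirements above; raise them for production sizing.
MIN_RAM_GB=16
MIN_VCPUS=4
MIN_DISK_GB=25

# at_least VALUE MINIMUM: succeeds when VALUE >= MINIMUM (integers only).
at_least() {
    [ "$1" -ge "$2" ]
}

# report LABEL VALUE MINIMUM: print a pass/fail line for one resource.
report() {
    if at_least "$2" "$3"; then
        echo "OK   $1: $2 (minimum $3)"
    else
        echo "FAIL $1: $2 (minimum $3)"
    fi
}

# MemTotal is reported in kB. Round to the nearest GB, because a machine sold
# as 16GB usually reports slightly less than 16 GiB of usable memory.
ram_gb=$(awk '/^MemTotal:/ {printf "%d", $2 / 1024 / 1024 + 0.5}' /proc/meminfo)
vcpus=$(nproc)

# Free space (in GB, rounded down) on the filesystem holding Docker's data.
data_root=/var/lib/docker
[ -d "$data_root" ] || data_root=/
disk_gb=$(df -Pk "$data_root" | awk 'NR == 2 {printf "%d", $4 / 1024 / 1024}')

report "RAM (GB)" "$ram_gb" "$MIN_RAM_GB"
report "vCPUs" "$vcpus" "$MIN_VCPUS"
report "Free disk (GB)" "$disk_gb" "$MIN_DISK_GB"
```

Running the script on each prospective MSR node prints one line per resource. A FAIL line only indicates that the node is below the documented minimum; it does not account for image scanning or Windows image storage, which call for more headroom.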

Networking¶

To allow containers to communicate, when installing MSR the following networks are created:

| Name | Type | Description |
|---|---|---|
| dtr-ol | overlay | Allows MSR components running on different nodes to communicate, to replicate MSR data |

When installing MSR on a node, make sure the following ports are open on that node:

| Direction | Port | Purpose |
|---|---|---|
| in | 80/tcp | Web app and API client access to MSR. |
| in | 443/tcp | Web app and API client access to MSR. |

These ports are configurable when installing MSR.

Volumes¶

MSR uses these named volumes for persisting data:

| Volume name | Description |
|---|---|
| dtr-ca-<replica_id> | Root key material for the MSR root CA that issues certificates |
| dtr-notary-<replica_id> | Certificate and keys for the Notary components |
| dtr-postgres-<replica_id> | Vulnerability scans data |
| dtr-registry-<replica_id> | Docker images data, if MSR is configured to store images on the local filesystem |
| dtr-rethink-<replica_id> | Repository metadata |
| dtr-nfs-registry-<replica_id> | Docker images data, if MSR is configured to store images on NFS |

You can customize the volume driver used for these volumes, by creating the volumes before installing MSR. During the installation, MSR checks which volumes don’t exist in the node, and creates them using the default volume driver.

By default, the data for these volumes can be found at /var/lib/docker/volumes/<volume-name>/_data.
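Pre-creating the volumes with a custom driver, as described above, can be sketched as follows. This is an illustration under stated assumptions: the function names, the replica ID, and the driver name are hypothetical, and the sketch assumes you know the replica ID before installation (the install command is documented to accept a --replica-id option for this, but check the CLI reference for your version). The NFS volume is left out because MSR only uses it when configured for NFS storage.

```shell
# Volume name prefixes taken from the volumes table above.
MSR_VOLUME_PREFIXES="dtr-ca dtr-notary dtr-postgres dtr-registry dtr-rethink"

# msr_volume_names REPLICA_ID: print one volume name per line.
msr_volume_names() {
    for prefix in $MSR_VOLUME_PREFIXES; do
        echo "${prefix}-$1"
    done
}

# create_msr_volumes REPLICA_ID DRIVER: create each volume with the given driver.
create_msr_volumes() {
    msr_volume_names "$1" | while read -r name; do
        docker volume create --driver "$2" "$name"
    done
}

# Example (hypothetical replica ID and driver name), run on the target node:
# create_msr_volumes 0123456789ab my-volume-driver
```

Because the installer only creates volumes that do not already exist on the node, volumes created this way keep the driver you chose.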

Storage¶

By default, Mirantis Secure Registry stores images on the filesystem of the node where it is running, but you should configure it to use a centralized storage backend.

MSR supports the following storage systems:

- Persistent volume: NFS, bind mount, volume
- Cloud storage providers: Amazon S3, Microsoft Azure, OpenStack Swift, Google Cloud Storage

Note

MSR cannot be deployed to Windows nodes.

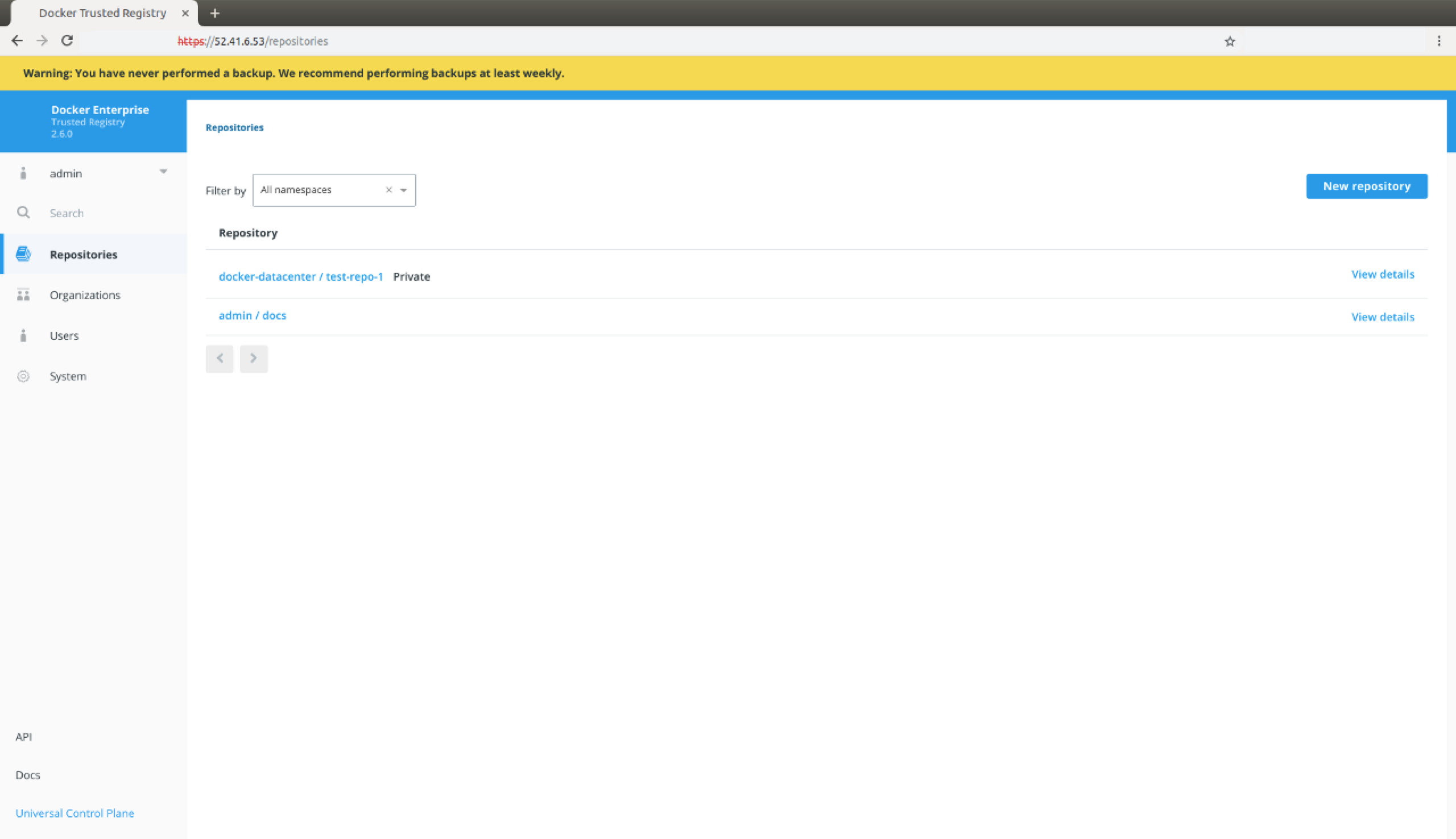

MSR Web UI¶

MSR has a web UI where you can manage settings and user permissions.

You can push and pull images using the standard Docker CLI client or other tools that can interact with a Docker registry.

Rule engine¶

MSR uses a rule engine to evaluate policies, such as tag pruning and image enforcement.

The rule engine supports the following operators:


Note

The matches operator tests subject fields against a user-provided regular expression (regex). The regex for matches must follow the specification in the official Go documentation: Package syntax.

Each of the following policies uses the rule engine:

Installation Guide¶

Targeted to deployment specialists and QA engineers, the MSR Installation Guide provides the detailed information and procedures you need to install and configure Mirantis Secure Registry (MSR).

Pre-configure MKE¶

When installing or backing up MSR on an MKE cluster, administrators need to be able to deploy containers on “MKE manager nodes or nodes running MSR”. This setting can be adjusted in the MKE Settings menu.

The MSR installation or backup will fail with the following error message if administrators are unable to deploy on “MKE manager nodes or nodes running MSR”.

Error response from daemon: {"message":"could not find any nodes on which the container could be created"}

See also

compatibility-matrix

Install MSR online¶

Mirantis Secure Registry (MSR) is a containerized application that runs on a swarm managed by the Mirantis Kubernetes Engine (MKE). It can be installed on-premises or on a cloud infrastructure.

Step 1. Validate the system requirements¶

Before installing MSR, make sure your infrastructure meets the MSR system requirements.

Step 2. Install MKE¶

MSR requires Mirantis Kubernetes Engine (MKE) to run.

Note

Prior to installing MSR:

- When upgrading, upgrade MKE before MSR for each major version. For example, if you are upgrading four major versions, upgrade one major version at a time, first MKE, then MSR, and then repeat for the remaining three versions.
- Upgrade MKE to the most recent version before an initial install of MSR.
- Update Mirantis Container Runtime to the most recent version before installing or updating MKE.

MKE and MSR must not be installed on the same node, due to the potential for resource and port conflicts. Instead, install MSR on worker nodes that will be managed by MKE. Note also that MSR cannot be installed on a standalone Mirantis Container Runtime.

Step 3. Install MSR¶

Once MKE is installed, navigate to the MKE web interface as an admin. Expand your profile on the left-side navigation panel, and select Admin Settings > Mirantis Secure Registry.

After you configure all the options, you should see a Docker CLI command that you can use to install MSR. Before you run the command, take note of the --dtr-external-url parameter:

$ docker run -it --rm \
  mirantis/dtr:2.8.13 install \
  --dtr-external-url <msr.example.com> \
  --ucp-node <mke-node-name> \
  --ucp-username admin \
  --ucp-url <mke-url>

If you want to point this parameter to a load balancer that uses HTTP for health probes over port 80 or 443, temporarily reconfigure the load balancer to use TCP over a known open port. Once MSR is installed, you can configure the load balancer however you need to.

Run the MSR install command on any node connected to the MKE cluster, and with Mirantis Container Runtime installed. MSR will not be installed on the node you run the install command on. MSR will be installed on the MKE worker defined by the --ucp-node flag.

For example, you could SSH into a MKE node and run the MSR install command from there. Running the installation command in interactive TTY or -it mode means you will be prompted for any required additional information.

Here are some useful options you can set during installation:

- To install a different version of MSR, replace 2.8.13 with your desired version in the installation command above.
- MSR is deployed with self-signed certificates by default, so MKE might not be able to pull images from MSR. Use the --dtr-external-url <msr-domain>:<port> optional flag during installation, or during a reconfiguration, so that MKE is automatically reconfigured to trust MSR.
- Starting with MSR 2.7, you can enable browser authentication via client certificates at install time. This bypasses the MSR login page and hides the logout button, thereby skipping the need for entering your username and password.

Verify that MSR is installed. Either:

- See https://<mke-fqdn>/manage/settings/dtr, or
- Navigate to Admin Settings > Mirantis Secure Registry from the MKE web UI.

Under the hood, MKE modifies /etc/docker/certs.d for each host and adds MSR’s CA certificate. MKE can then pull images from MSR because the Mirantis Container Runtime for each node in the MKE swarm has been configured to trust MSR.

Reconfigure your load balancer back to your desired protocol and port.

Step 4. Check that MSR is running¶

In your browser, navigate to the MKE web interface.

Select Shared Resources > Stacks from the left-side navigation panel. You should see MSR listed as a stack.

To verify that MSR is accessible from the browser, enter your MSR IP address or FQDN on the address bar. Since HSTS (HTTP Strict-Transport-Security) header is included in all API responses, make sure to specify the FQDN (Fully Qualified Domain Name) of your MSR prefixed with https://, or your browser may refuse to load the web interface.
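The same accessibility check can be made from a terminal. The sketch below is an illustration only: the function names are hypothetical, and it assumes that the root URL of your MSR returns the HSTS header described above. The -k option skips certificate verification, which is needed while MSR still uses its default self-signed certificate.

```shell
# has_hsts: read HTTP response headers on stdin and succeed if an
# HTTP Strict-Transport-Security header is present (case-insensitive).
has_hsts() {
    grep -qi '^strict-transport-security:'
}

# check_msr_https FQDN: request only the headers over HTTPS and look for HSTS.
check_msr_https() {
    curl -ksI "https://$1/" | has_hsts
}

# Example (hypothetical FQDN):
# check_msr_https msr.example.com && echo "MSR answers over HTTPS with HSTS"
```

If the check fails, confirm that you are using the FQDN rather than an IP address, and that port 443 is reachable from where you run the command.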

Step 5. Configure MSR¶

After installing MSR, you should configure:

The certificates used for TLS communication

The storage back end to store the Docker images

Web interface¶

To update your TLS certificates, access MSR from the browser and navigate to System > General.

To configure your storage back end, navigate to System > Storage.

Command line interface¶

To reconfigure MSR using the CLI, refer to MSR Operations Guide: CLI reference.

Step 6. Test pushing and pulling¶

Now that you have a working installation of MSR, you should test that you can push and pull images:

Configure your local Mirantis Container Runtime

Create a repository

Push and pull images

Step 7. Join replicas to the cluster¶

This step is optional.

To set up MSR for high availability, you can add more replicas to your MSR cluster. Adding more replicas allows you to load-balance requests across all replicas, and keep MSR working if a replica fails.

For high-availability, you should set 3 or 5 MSR replicas. The replica nodes also need to be managed by the same MKE.

To add replicas to a MSR cluster, use the join command:

Load your MKE user bundle.

Run the join command.

docker run -it --rm \
  mirantis/dtr:2.8.13 join \
  --ucp-node <mke-node-name> \
  --ucp-insecure-tls

Important

The <mke-node-name> following the --ucp-node flag is the target node to install the MSR replica. This is NOT the MKE Manager URL.

When you join a replica to a MSR cluster, you need to specify the ID of a replica that is already part of the cluster. You can find an existing replica ID by going to the Shared Resources > Stacks page in the MKE web UI.

Check that all replicas are running.

In your browser, navigate to the MKE web UI.

Select Shared Resources > Stacks. All replicas should be displayed.
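The join step above prompts for the existing replica ID. As a sketch, the ID can instead be passed on the command line, assuming your MSR version accepts the --existing-replica-id option on join (verify this against the CLI reference). The function name, node name, and replica ID below are hypothetical.

```shell
MSR_IMAGE="mirantis/dtr:2.8.13"

# join_replica TARGET_NODE EXISTING_REPLICA_ID
# TARGET_NODE is the MKE worker that will host the new replica.
# EXISTING_REPLICA_ID identifies a replica that is already in the cluster.
join_replica() {
    docker run -it --rm "$MSR_IMAGE" join \
        --ucp-node "$1" \
        --existing-replica-id "$2" \
        --ucp-insecure-tls
}

# Example (hypothetical values); the command still prompts for the MKE URL
# and credentials because they are not passed as options here:
# join_replica worker-2 0123456789ab
```

Wrapping the command in a function keeps the image tag in one place, so every replica joins with the same MSR version.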

Install MSR offline¶

The procedure to install Mirantis Secure Registry on a host is the same, whether that host has access to the internet or not.

The only difference when installing on an offline host is that instead of pulling the MSR images from Docker Hub, you use a computer that is connected to the internet to download a single package with all the images. Then you copy that package to the host where you’ll install MSR.

Versions available¶

Download the offline package¶

Use a computer with internet access to download a package with all MSR images:

$ wget <package-url> -O dtr.tar.gz

Now that you have the package in your local machine, you can transfer it to the machines where you want to install MSR.

For each machine where you want to install MSR:

Copy the MSR package to that machine.

$ scp dtr.tar.gz <user>@<host>:

Use ssh to log into the hosts where you transferred the package.

Load the MSR images.

Once the package is transferred to the hosts, you can use the docker load command to load the Docker images from the tar archive:

$ docker load -i dtr.tar.gz
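When several hosts need the package, the copy and load steps above can be combined. This is a minimal sketch with a hypothetical function name, user, and host names; it assumes SSH access to each host and that the remote user is allowed to run docker.

```shell
PACKAGE=dtr.tar.gz

# push_and_load USER HOST: copy the package to the remote user's home
# directory, then load the images from it on that host.
push_and_load() {
    scp "$PACKAGE" "$1@$2:" &&
        ssh "$1@$2" "docker load -i $PACKAGE"
}

# Example (hypothetical user and hosts):
# for host in msr-node-1 msr-node-2 msr-node-3; do
#     push_and_load admin "$host"
# done
```

The trailing colon in the scp destination matters: without it, scp treats the argument as a local file name instead of a remote host.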

Install MSR¶

Now that the offline hosts have all the images needed to install MSR, you can install MSR on that host.

Preventing outgoing connections¶

MSR makes outgoing connections to:

report analytics,

check for new versions,

check online licenses,

update the vulnerability scanning database

All of these uses of online connections are optional. You can choose to disable or not use any or all of these features on the admin settings page.

Obtain the license¶

After you install MSR, download your new MSR license and apply it using the MSR web UI.

Warning

Users are not authorized to run MSR on production workloads without a valid license. Refer to Mirantis Agreements and Terms for more information.

To download your MSR license:

Open an email from Mirantis Support with the subject Welcome to Mirantis’ CloudCare Portal and follow the instructions for logging in.

If you did not receive the CloudCare Portal email, you likely have not yet been added as a Designated Contact and should contact your Designated Administrator.

In the top navigation bar, click Environments.

Click the Cloud Name associated with the license you want to download.

Scroll down to License Information and click the License File URL. A new tab opens in your browser.

Click View file to download your license file.

To update your license settings in the MSR web UI:

Log in to your MSR instance as an administrator.

In the left-side navigation panel, click Settings.

On the General tab, click Apply new license. A file browser dialog displays.

Navigate to where you saved the license key (.lic) file, select it, and click Open. MSR automatically updates with the new settings.

Uninstall MSR¶

Uninstalling MSR can be done by simply removing all data associated with each replica. To do that, you just run the destroy command once per replica:

docker run -it --rm \

mirantis/dtr:2.8.13 destroy \

--ucp-insecure-tls

You will be prompted for the MKE URL, MKE credentials, and which replica to destroy.

Operations Guide¶

The MSR Operations Guide provides the detailed information you need to store and manage images on-premises or in a virtual private cloud, to meet security or regulatory compliance requirements.

Access MSR¶

Configure your Mirantis Container Runtime¶

By default Mirantis Container Runtime uses TLS when pushing and pulling images to an image registry like Mirantis Secure Registry (MSR).

If MSR is using the default configurations or was configured to use self-signed certificates, you need to configure your Mirantis Container Runtime to trust MSR. Otherwise, when you try to log in, push to, or pull images from MSR, you’ll get an error:

docker login msr.example.org

x509: certificate signed by unknown authority

The first step to make your Mirantis Container Runtime trust the certificate authority used by MSR is to get the MSR CA certificate. Then you configure your operating system to trust that certificate.
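On Linux hosts, an alternative to changing the operating system trust store is the per-registry certificate directory that the Docker daemon reads, /etc/docker/certs.d/<registry-host>/ca.crt. The sketch below uses hypothetical function names and assumes that MSR is reached on the default HTTPS port; if you use a non-default port, the directory name must include it (for example msr.example.org:4443).

```shell
# certs_dir REGISTRY: print the per-registry certificate directory for a registry host.
certs_dir() {
    echo "/etc/docker/certs.d/$1"
}

# trust_msr_ca REGISTRY: download the MSR CA certificate and store it as ca.crt.
# curl -k is needed for this first download because the CA is not trusted yet.
trust_msr_ca() {
    dir=$(certs_dir "$1")
    sudo mkdir -p "$dir" &&
        curl -ks "https://$1/ca" | sudo tee "$dir/ca.crt" > /dev/null
}

# Example (hypothetical registry host):
# trust_msr_ca msr.example.org
```

This approach limits the trust to Docker and to this one registry, whereas the operating system procedures in the following sections make every TLS client on the host trust the MSR CA.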

Configure your host¶

macOS¶

In your browser navigate to https://<msr-url>/ca to download the TLS

certificate used by MSR. Then add that certificate to macOS

Keychain.

After adding the CA certificate to Keychain, restart Docker Desktop for Mac.

Windows¶

In your browser navigate to https://<msr-url>/ca to download the TLS

certificate used by MSR. Open Windows Explorer, right-click the file

you’ve downloaded, and choose Install certificate.

Then, select the following options:

Store location: local machine

Check place all certificates in the following store

Click Browse, and select Trusted Root Certification Authorities

Click Finish

Learn more about managing TLS certificates.

After adding the CA certificate to Windows, restart Docker Desktop for Windows.

Ubuntu/ Debian¶

# Download the MSR CA certificate

sudo curl -k https://<msr-domain-name>/ca -o /usr/local/share/ca-certificates/<msr-domain-name>.crt

# Refresh the list of certificates to trust

sudo update-ca-certificates

# Restart the Docker daemon

sudo service docker restart

RHEL/ CentOS¶

# Download the MSR CA certificate

sudo curl -k https://<msr-domain-name>/ca -o /etc/pki/ca-trust/source/anchors/<msr-domain-name>.crt

# Refresh the list of certificates to trust

sudo update-ca-trust

# Restart the Docker daemon

sudo /bin/systemctl restart docker.service

Boot2Docker¶

Log into the virtual machine with ssh:

docker-machine ssh <machine-name>

Create the bootsync.sh file, and make it executable:

sudo touch /var/lib/boot2docker/bootsync.sh
sudo chmod 755 /var/lib/boot2docker/bootsync.sh

Add the following content to the bootsync.sh file. You can use nano or vi for this.

#!/bin/sh
cat /var/lib/boot2docker/server.pem >> /etc/ssl/certs/ca-certificates.crt

Add the MSR CA certificate to the server.pem file:

curl -k https://<msr-domain-name>/ca | sudo tee -a /var/lib/boot2docker/server.pem

Run bootsync.sh and restart the Docker daemon:

sudo /var/lib/boot2docker/bootsync.sh
sudo /etc/init.d/docker restart

Log into MSR¶

To validate that your Docker daemon trusts MSR, try authenticating against MSR.

docker login msr.example.org

Where to go next¶

Configure your Notary client¶

Configure your Notary client as described in Delegations for content trust.

Use a cache¶

Mirantis Secure Registry can be configured to have one or more caches. This allows you to choose which cache to pull images from for faster download times.

If an administrator has set up caches, you can choose which cache to use when pulling images.

In the MSR web UI, navigate to your Account, and check the Content Cache options.

Once you save, your images are pulled from the cache instead of the central MSR.

Manage access tokens¶

Mirantis Secure Registry lets you create and distribute access tokens to enable programmatic access to MSR. Access tokens are linked to a particular user account and duplicate whatever permissions that account has at the time of use. If the account changes permissions, so will the token.

Access tokens are useful in cases such as building integrations since you can issue multiple tokens – one for each integration – and revoke them at any time.

Create an access token¶

To create an access token for the first time, log in to https://<msr-url> with your MKE credentials.

Expand your Profile from the left-side navigation panel and select Profile > Access Tokens.

Add a description for your token. Use something that indicates where the token is going to be used, or set a purpose for the token. Administrators can also create tokens for other users.

Modify an access token¶

Once the token is created, you will not be able to see it again. You do have the option to rename, deactivate, or delete the token as needed. You can delete the token by selecting it and clicking Delete, or you can click View Details.

Use the access token¶

You can use an access token anywhere that requires your MSR password. As

an example you can pass your access token to the --password or

-p option when logging in from your Docker CLI client:

docker login dtr.example.org --username <username> --password <token>

To use the MSR API to list the repositories your user has access to:

curl --silent --insecure --user <username>:<token> dtr.example.org/api/v0/repositories
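Passing a token with --password places it in the shell history and the process list. As a sketch, the Docker CLI option --password-stdin reads the secret from standard input instead. The function names, user name, and token file below are hypothetical, and list_repos assumes that MSR presents a certificate your host already trusts (add --insecure to the curl call otherwise, as in the example above).

```shell
# msr_login REGISTRY USERNAME: log in with an access token read from stdin.
msr_login() {
    docker login "$1" --username "$2" --password-stdin
}

# repos_url REGISTRY: build the repositories API URL used in the example above.
repos_url() {
    echo "https://$1/api/v0/repositories"
}

# list_repos REGISTRY USERNAME TOKEN: list repositories visible to the user.
list_repos() {
    curl --silent --user "$2:$3" "$(repos_url "$1")"
}

# Example, assuming the token is stored in a file named token.txt:
# msr_login dtr.example.org jdoe < token.txt
# list_repos dtr.example.org jdoe "$(cat token.txt)"
```

Keeping one token per integration, each in its own file or secret store entry, makes it straightforward to revoke a single integration without affecting the others.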

Configure MSR¶

Use your own TLS certificates¶

Mirantis Secure Registry (MSR) services are exposed using HTTPS by default. This ensures encrypted communications between clients and your trusted registry. If you do not pass a PEM-encoded TLS certificate during installation, MSR will generate a self-signed certificate. This leads to an insecure site warning when accessing MSR through a browser. Additionally, MSR includes an HSTS (HTTP Strict-Transport-Security) header in all API responses which can further lead to your browser refusing to load MSR’s web interface.

You can configure MSR to use your own TLS certificates, so that it is automatically trusted by your users’ browser and client tools. As of v2.7, you can also enable user authentication via client certificates provided by your organization’s public key infrastructure (PKI).

You can upload your own TLS certificates and keys using the web interface, or pass them as CLI options when installing or reconfiguring your MSR instance.

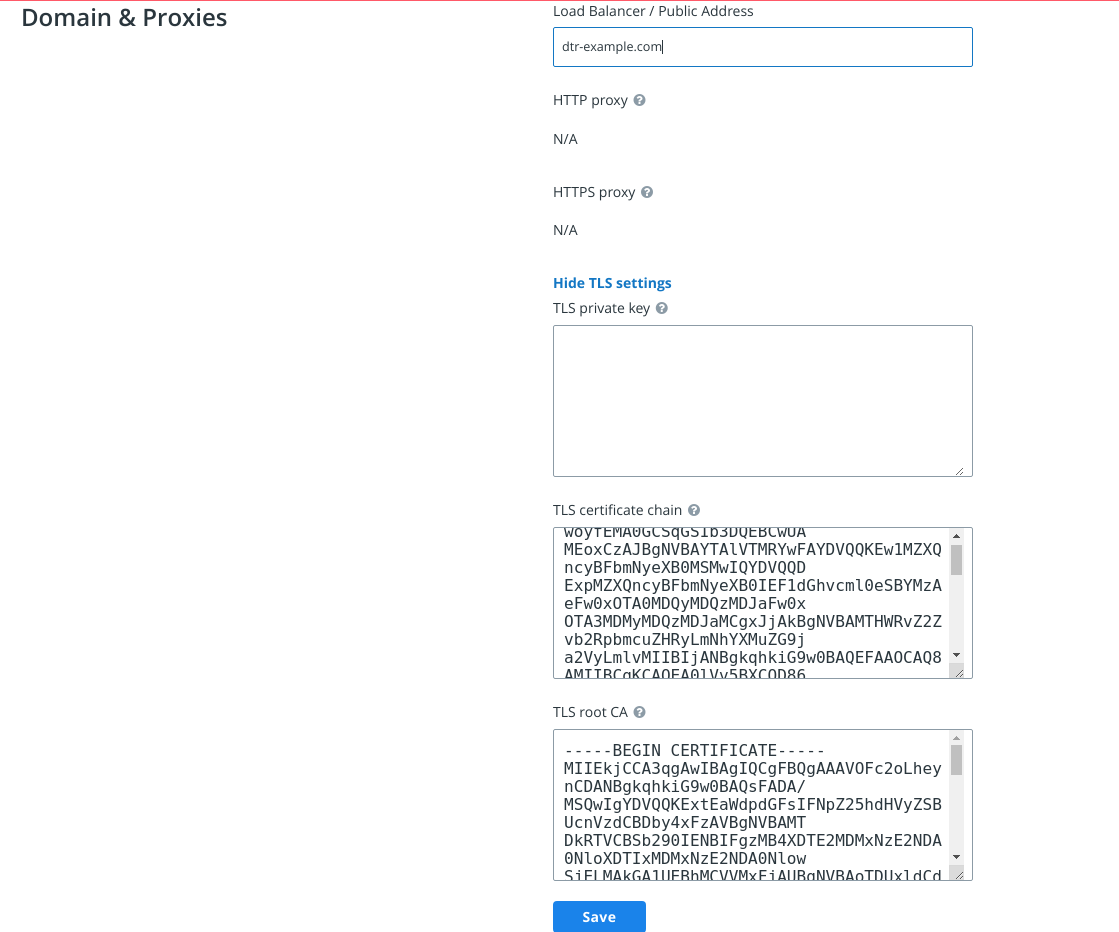

Use the web interface to replace the server certificates¶

Navigate to https://<msr-url> and log in with your credentials.

Select System from the left-side navigation panel, and scroll down to Domain & Proxies.

Enter your MSR domain name and upload or copy and paste the certificate details:

Load balancer/public address. The domain name clients will use to access MSR.

TLS private key. The server private key.

TLS certificate chain. The server certificate and any intermediate public certificates from your certificate authority (CA). This certificate needs to be valid for the MSR public address, and have SANs for all addresses used to reach the MSR replicas, including load balancers.

TLS CA. The root CA public certificate.

Click Save to apply your changes.

At this point, if you’ve added certificates issued by a globally trusted CA, any web browser or client tool should now trust MSR. If you’re using an internal CA, you will need to configure the client systems to trust that CA.

Use the command line interface to replace the server certificates¶

See install and reconfigure for TLS certificate options and usage.

Enable single sign-on¶

Users are shared between MKE and MSR by default, but the applications have separate browser-based interfaces which require authentication.

To only authenticate once, you can configure MSR to have single sign-on (SSO) with MKE.

Note

After configuring single sign-on with MSR, users accessing MSR via docker

login should create an access token and use it to authenticate.

At install time¶

When installing MSR, pass --dtr-external-url <url> to enable SSO.

Specify the Fully Qualified Domain Name (FQDN) of your MSR, or a load

balancer, to load-balance requests across multiple MSR replicas.

docker run --rm -it \

mirantis/dtr:2.8.13 install \

--dtr-external-url msr.example.com \

--dtr-cert "$(cat cert.pem)" \

--dtr-ca "$(cat dtr_ca.pem)" \

--dtr-key "$(cat key.pem)" \

--ucp-url mke.example.com \

--ucp-username admin \

--ucp-ca "$(cat ucp_ca.pem)"

With this configuration, when you access MSR’s web user interface, you are redirected to the MKE login page for authentication. After you log in successfully, you are redirected to the MSR external URL that you specified during installation.

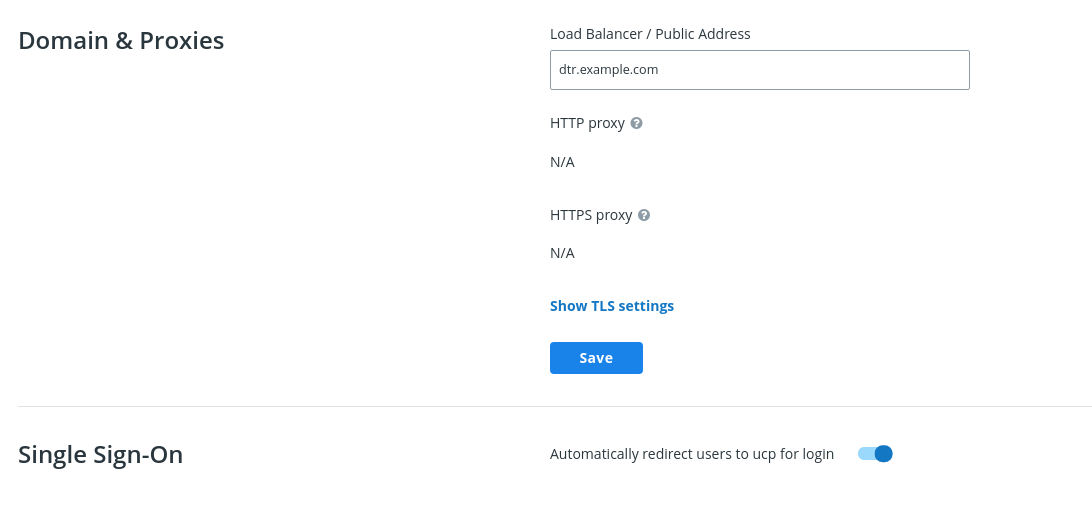

Post-installation¶

Web user interface¶

Navigate to https://<msr-url> and log in with your credentials.

Select System from the left-side navigation panel, and scroll down to Domain & Proxies.

Update the Load balancer / Public Address field with the external URL where users should be redirected once they are logged in. Click Save to apply your changes.

Toggle Single Sign-on to automatically redirect users to MKE for logging in.

Command line interface¶

You can also enable single sign-on from the command line by reconfiguring your MSR. To do so, run the following:

docker run --rm -it \

mirantis/dtr:2.8.13 reconfigure \

--dtr-external-url msr.example.com \

--dtr-cert "$(cat cert.pem)" \

--dtr-ca "$(cat dtr_ca.pem)" \

--dtr-key "$(cat key.pem)" \

--ucp-url mke.example.com \

--ucp-username admin \

--ucp-ca "$(cat ucp_ca.pem)"

Enable MSR telemetry¶

You can set MSR to automatically record and transmit data to Mirantis through an encrypted channel for monitoring and analysis purposes. The data collected provides the Mirantis Customer Success Organization with information that helps us to better understand the operational use of MSR by our customers. It also provides key feedback in the form of product usage statistics, which enable our product teams to enhance Mirantis products and services.

Caution

To send the telemetry, verify that dockerd and the MSR application container

can resolve api.segment.io and create a TCP (HTTPS) connection on port

443.

Log in to the MSR Web UI as an administrator.

Click System in the left-side navigation panel to open the System page.

Click the General tab in the detail pane.

Scroll down in the detail pane to the Analytics section.

Toggle the Send data slider to the right.

External storage¶

Configure MSR image storage¶

Configure your storage back end¶

By default MSR uses the local filesystem of the node where it is running to store your Docker images. You can configure MSR to use an external storage back end, for improved performance or high availability.

If your MSR deployment has a single replica, you can continue using the local filesystem for storing your Docker images. If your MSR deployment has multiple replicas, make sure all replicas are using the same storage back end for high availability. Whenever a user pulls an image, the MSR node serving the request needs to have access to that image.

MSR supports the following storage systems:

Local filesystem

NFS

Bind Mount

Volume

Cloud Storage Providers

Amazon S3

Microsoft Azure

OpenStack Swift

Google Cloud Storage

Note

Some of the previous links are meant to be informative and are not representative of MSR’s implementation of these storage systems.

To configure the storage back end, log in to the MSR web interface as an admin, and navigate to System > Storage.

The storage configuration page gives you the most common configuration options, but you also have the option to upload a configuration file in .yml, .yaml, or .txt format.

Local filesystem¶

By default, MSR creates a volume named dtr-registry-<replica-id> to store your images using the local filesystem. You can customize the name and path of the volume by using mirantis/dtr install --dtr-storage-volume or mirantis/dtr reconfigure --dtr-storage-volume.

Important

When running 2.6.0 to 2.6.3 (with experimental online garbage collection), there is an issue with reconfiguring MSR with --nfs-storage-url which leads to erased tags. Make sure to back up your MSR metadata before you proceed. To work around the --nfs-storage-url flag issue, manually create a storage volume on each MSR node. If MSR is already installed in your cluster, reconfigure MSR with the --dtr-storage-volume flag using your newly-created volume.

If you’re deploying MSR with high-availability, you need to use NFS or any other centralized storage back end so that all your MSR replicas have access to the same images.

To check how much space your images are utilizing in the local filesystem, SSH into the MSR node and run:

# Find the path to the volume
docker volume inspect dtr-registry-<replica-id>

# Check the disk usage
sudo du -hs \
$(dirname $(docker volume inspect --format '{{.Mountpoint}}' dtr-registry-<replica-id>))

You can configure your MSR replicas to store images on an NFS partition, so that all replicas can share the same storage back end.

Cloud Storage¶

MSR supports Amazon S3 or other storage systems that are S3-compatible like Minio.

Switching storage back ends¶

Switching storage back ends initializes a new metadata store and erases your existing tags. This helps facilitate online garbage collection. In earlier versions, MSR would subsequently start a tagmigration job to rebuild tag metadata from the file layout in the image layer store. This job has been discontinued for DTR 2.5.x (with garbage collection) and DTR 2.6, as your storage back end could get out of sync with your MSR metadata, like your manifests and existing repositories. As a best practice, MSR storage back ends and metadata should always be moved, backed up, and restored together.

The --storage-migrated flag in reconfigure lets you indicate the migration status of your storage data during a reconfigure. If you are not worried about losing your existing tags, you can skip the recommended steps below and perform a reconfigure.

Note

Starting with MSR 2.9.0, switching your storage back end does not initialize a new metadata store or erase your existing storage. MSR now requires the new storage back end to contain an exact copy of the prior configuration’s data. If this requirement is not met, the storage must be reinitialized using the --reinitialize-storage flag with the dtr reconfigure command, which reinitializes a new metadata store and erases your existing tags.

It is a best practice to always move, back up, and restore your storage back ends with your metadata.

Best practice for data migration¶

Disable garbage collection by selecting “Never” under System > Garbage Collection, so blobs referenced in the backup that you create continue to exist. Make sure to keep it disabled while you’re performing the metadata backup and migrating your storage data.

Back up your existing metadata.

Migrate the contents of your current storage back end to the new one you are switching to. For example, upload your current storage data to your new NFS server.

Restore MSR from your backup and specify your new storage back end.

With MSR restored from your backup and your storage data migrated to your new back end, garbage collect any dangling blobs using the following API request:

curl -u <username>:$TOKEN -X POST "https://<msr-url>/api/v0/jobs" -H "accept: application/json" -H "content-type: application/json" -d "{ \"action\": \"onlinegc_blobs\" }"

On success, you should get a 202 Accepted response with a job id and other related details. This ensures any blobs which are not referenced in your previously created backup get destroyed.
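The nested escaping in the request body is easy to get wrong, so the following is a minimal sketch of one way to build the payload without backslashes. The helper names job_payload and start_blob_gc, the DRY_RUN switch, and the MSR_URL and MSR_USER variables are assumptions made for illustration and are not part of MSR. Only the endpoint and the action value come from the step above.

```shell
#!/usr/bin/env bash
# Build the JSON body for POST /api/v0/jobs.
# job_payload is a hypothetical helper; printf with single quotes avoids
# escaping the inner double quotes by hand.
job_payload() {
  printf '{ "action": "%s" }\n' "$1"
}

# Print (dry run, the default) or send the garbage-collection request.
# MSR_URL, MSR_USER and TOKEN are placeholders that you must set yourself.
start_blob_gc() {
  local payload
  payload=$(job_payload onlinegc_blobs)
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "POST https://${MSR_URL}/api/v0/jobs ${payload}"
  else
    curl -u "${MSR_USER}:${TOKEN}" -X POST "https://${MSR_URL}/api/v0/jobs" \
      -H "accept: application/json" -H "content-type: application/json" \
      -d "${payload}"
  fi
}
```

Running start_blob_gc with DRY_RUN unset only prints the request, which lets you review the URL and body before setting DRY_RUN=0 to send it.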

Alternative option for data migration¶

If you have a long maintenance window, you can skip some steps from above and do the following:

Put MSR in “read-only” mode using the following API request:

curl -u <username>:$TOKEN -X POST "https://<msr-url>/api/v0/meta/settings" -H "accept: application/json" -H "content-type: application/json" -d "{ \"readOnlyRegistry\": true }"

On success, you should get a 202 Accepted response.

Migrate the contents of your current storage back end to the new one you are switching to. For example, upload your current storage data to your new NFS server.

Reconfigure MSR while specifying the --storage-migrated flag to preserve your existing tags.

Regarding previous versions…

Make sure to perform a backup before you change your storage back end when running DTR 2.5 (with online garbage collection) and 2.6.0-2.6.3.

Upgrade to DTR 2.6.4 and follow best practice for data migration to avoid the wiped tags issue when moving from one NFS server to another.

Configuring MSR for S3¶

You can configure MSR to store Docker images on Amazon S3, or other file servers with an S3-compatible API like Cleversafe or Minio.

Amazon S3 and compatible services store files in “buckets”, and users have permissions to read, write, and delete files from those buckets. When you integrate MSR with Amazon S3, MSR sends all read and write operations to the S3 bucket so that the images are persisted there.

Create a bucket on Amazon S3¶

Before configuring MSR you need to create a bucket on Amazon S3. To get faster pulls and pushes, you should create the S3 bucket on a region that’s physically close to the servers where MSR is running.

Start by creating a bucket. Then, as a best practice you should create a new IAM user just for the MSR integration and apply an IAM policy that ensures the user has limited permissions.

This user only needs permissions to access the bucket that you’ll use to store images, and be able to read, write, and delete files.

Here’s an example of a user policy:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": "s3:ListAllMyBuckets",

"Resource": "arn:aws:s3:::*"

},

{

"Effect": "Allow",

"Action": [

"s3:ListBucket",

"s3:GetBucketLocation",

"s3:ListBucketMultipartUploads"

],

"Resource": "arn:aws:s3:::<bucket-name>"

},

{

"Effect": "Allow",

"Action": [

"s3:PutObject",

"s3:GetObject",

"s3:DeleteObject",

"s3:ListBucketMultipartUploads"

],

"Resource": "arn:aws:s3:::<bucket-name>/*"

}

]

}
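If you manage several buckets, it can help to render the policy from a template instead of editing the bucket name by hand. The following is a minimal sketch that reproduces the statements of the example policy above for a given bucket. The helper name render_policy and the bucket name msr-images are hypothetical.

```shell
#!/usr/bin/env bash
# Render the example IAM policy for one bucket.
# render_policy is a hypothetical helper; the statements mirror the example policy.
render_policy() {
  local bucket=$1
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    { "Effect": "Allow", "Action": "s3:ListAllMyBuckets", "Resource": "arn:aws:s3:::*" },
    { "Effect": "Allow",
      "Action": ["s3:ListBucket", "s3:GetBucketLocation", "s3:ListBucketMultipartUploads"],
      "Resource": "arn:aws:s3:::${bucket}" },
    { "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject", "s3:ListBucketMultipartUploads"],
      "Resource": "arn:aws:s3:::${bucket}/*" }
  ]
}
EOF
}

# Usage (bucket name is an assumption): write the policy to a file for review.
# render_policy msr-images > msr-policy.json
```

The first bucket-level statement targets the bucket ARN itself, while the object-level statement targets the same ARN with a trailing /*, which is why the bucket name appears twice.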

Configure MSR¶

Once you’ve created a bucket and user, you can configure MSR to use it.

In your browser, navigate to https://<msr-url>. Select System > Storage.

Select the S3 option, and fill in the information about the bucket and user.

| Field | Description |
|---|---|
| Root directory | The path in the bucket where images are stored. |
| AWS Region name | The region where the bucket is. |
| S3 bucket name | The name of the bucket to store the images. |
| AWS access key | The access key to use to access the S3 bucket. This can be left empty if you’re using an IAM policy. |
| AWS secret key | The secret key to use to access the S3 bucket. This can be left empty if you’re using an IAM policy. |
| Region endpoint | The endpoint name for the region you’re using. |

There are also some advanced settings.

| Field | Description |
|---|---|
| Signature version 4 auth | Authenticate the requests using AWS signature version 4. |
| Use HTTPS | Secure all requests with HTTPS, or make requests in an insecure way. |
| Skip TLS verification | Encrypt all traffic, but don’t verify the TLS certificate used by the storage back end. |
| Root CA certificate | The public key certificate of the root certificate authority that issued the storage back end certificate. |

Once you click Save, MSR validates the configurations and saves the changes.

Configure your clients¶

If you’re using a TLS certificate in your storage back end that’s not globally trusted, you’ll have to configure all Mirantis Container Runtimes that push or pull from MSR to trust that certificate. When you push or pull an image MSR redirects the requests to the storage back end, so if clients don’t trust the TLS certificates of both MSR and the storage back end, they won’t be able to push or pull images.

And if you’ve configured MSR to skip TLS verification, you also need to configure all Mirantis Container Runtimes that push or pull from MSR to skip TLS verification. You do this by adding MSR to the list of insecure registries when starting Docker.

Supported regions¶

MSR supports the following S3 regions:

| S3 Regions | | | |
|---|---|---|---|
| us-east-1 | us-east-2 | us-west-1 | us-west-2 |
| eu-west-1 | eu-west-2 | eu-central-1 | ap-south-1 |
| ap-southeast-1 | ap-southeast-2 | ap-northeast-1 | ap-northeast-2 |
| sa-east-1 | cn-north-1 | us-gov-west-1 | ca-central-1 |

Update your S3 settings on the web interface¶

When running 2.6.0 to 2.6.4 (with experimental online garbage collection), there is an issue with changing your S3 settings on the web interface which leads to erased metadata. Make sure to back up your MSR metadata before you proceed.

Restore MSR with S3¶

To restore MSR using your previously configured S3 settings, use restore with --dtr-use-default-storage to keep your metadata.

Configuring MSR for NFS¶

You can configure MSR to store Docker images in an NFS directory. Starting in DTR 2.6, changing storage back ends involves initializing a new metadata store instead of reusing an existing volume. This helps facilitate online garbage collection. See changes to NFS reconfiguration below if you have previously configured MSR to use NFS.

Before installing or configuring MSR to use an NFS directory, make sure that:

The NFS server has been correctly configured

The NFS server has a fixed IP address

All hosts running MSR have the correct NFS libraries installed

To confirm that the hosts can connect to the NFS server, try to list the directories exported by your NFS server:

showmount -e <nfsserver>

You should also try to mount one of the exported directories:

mkdir /tmp/mydir && sudo mount -t nfs <nfs server>:<directory> /tmp/mydir

Install MSR with NFS¶

One way to configure MSR to use an NFS directory is at install time:

docker run -it --rm mirantis/dtr:2.8.13 install \
--nfs-storage-url <nfs-storage-url> \
<other options>

Use the format nfs://<nfs server>/<directory> for the NFS storage URL.
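As an illustration of that format, the following sketch assembles the URL from a server address and an exported directory. The helper nfs_storage_url and the sample values are hypothetical, and the treatment of a leading slash in the directory is an assumption: the helper produces a single slash between server and directory.

```shell
#!/usr/bin/env bash
# Build an NFS storage URL of the form nfs://<nfs server>/<directory>.
# nfs_storage_url is a hypothetical helper, not part of the MSR CLI.
nfs_storage_url() {
  local server=$1 dir=$2
  dir=${dir#/}   # drop one leading slash so the URL has exactly one separator
  echo "nfs://${server}/${dir}"
}

# Sample values are assumptions for illustration only.
nfs_storage_url 10.0.0.5 /exports/msr
```

You can pass the resulting value to --nfs-storage-url once you have confirmed, with showmount as described earlier, that the directory is exported.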

To support NFS v4, you can now specify additional options when running install with --nfs-storage-url.

When joining replicas to a MSR cluster, the replicas will pick up your storage configuration, so you will not need to specify it again.

You can use the --storage-migrated flag with the reconfigure CLI command to indicate the migration status of your storage data during a reconfigure.

To reconfigure MSR using an NFSv4 volume as a storage back end:

docker run --rm -it \
mirantis/dtr:2.8.13 reconfigure \
--ucp-url <mke_url> \
--ucp-username <mke_username> \
--nfs-storage-url <msr-registry-nf> \
--async-nfs \
--storage-migrated

To reconfigure MSR to stop using NFS storage, leave the --nfs-storage-url option blank:

docker run -it --rm mirantis/dtr:2.8.13 reconfigure \
--nfs-storage-url ""

Set up high availability¶

Mirantis Secure Registry is designed to scale horizontally as your usage increases. You can add more replicas to make MSR scale to your demand and for high availability.

All MSR replicas run the same set of services and changes to their configuration are automatically propagated to other replicas.

To make MSR tolerant to failures, add additional replicas to the MSR cluster.

| MSR replicas | Failures tolerated |
|---|---|
| 1 | 0 |
| 3 | 1 |
| 5 | 2 |
| 7 | 3 |
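The values in the table are consistent with a majority rule, where a cluster of N replicas tolerates floor((N - 1) / 2) failures. The following sketch computes that number. The formula is inferred from the table rather than taken from an MSR command, and failures_tolerated is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Failures tolerated by a cluster of N replicas: floor((N - 1) / 2).
# Assumption: inferred from the table, not produced by any MSR tool.
failures_tolerated() {
  local replicas=$1
  echo $(( (replicas - 1) / 2 ))   # shell arithmetic truncates, which gives the floor here
}

for n in 1 2 3 5 7; do
  echo "$n replicas tolerate $(failures_tolerated "$n") failure(s)"
done
```

Under this rule an even replica count adds no tolerance over the next lower odd count, which is why a two-replica cluster tolerates no failures.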

When sizing your MSR installation for high-availability, follow these rules of thumb:

Don’t create a MSR cluster with just two replicas. Your cluster won’t tolerate any failures, and it’s possible that you experience performance degradation.

When a replica fails, the number of failures tolerated by your cluster decreases. Don’t leave that replica offline for long.

Adding too many replicas to the cluster might also lead to performance degradation, as data needs to be replicated across all replicas.

To have high-availability on MKE and MSR, you need a minimum of:

3 dedicated nodes to install MKE with high availability,

3 dedicated nodes to install MSR with high availability,

As many nodes as you want for running your containers and applications.

You also need to configure the MSR replicas to share the same object storage.

Join more MSR replicas¶

To add replicas to an existing MSR deployment:

Use ssh to log into any node that is already part of MKE.

Run the MSR join command:

docker run -it --rm \
mirantis/dtr:2.8.13 join \
--ucp-node <mke-node-name> \
--ucp-insecure-tls

Where --ucp-node is the hostname of the MKE node where you want to deploy the MSR replica, and --ucp-insecure-tls tells the command to trust the certificates used by MKE.

If you have a load balancer, add this MSR replica to the load balancing pool.

Remove existing replicas¶

To remove a MSR replica from your deployment:

Use ssh to log into any node that is part of MKE.

Run the MSR remove command:

docker run -it --rm \
mirantis/dtr:2.8.13 remove \
--ucp-insecure-tls

You will be prompted for:

Existing replica id: the id of any healthy MSR replica of that cluster

Replica id: the id of the MSR replica you want to remove. It can be the id of an unhealthy replica

MKE username and password: the administrator credentials for MKE

If you’re load-balancing user requests across multiple MSR replicas, don’t forget to remove this replica from the load balancing pool.

Use a load balancer¶

Once you’ve joined multiple MSR replica nodes for high availability, you can configure your own load balancer to balance user requests across all replicas.

This allows users to access MSR using a centralized domain name. If a replica goes down, the load balancer can detect that and stop forwarding requests to it, so that the failure goes unnoticed by users.

MSR exposes several endpoints you can use to assess if a MSR replica is healthy or not:

/_ping: An unauthenticated endpoint that checks if the MSR replica is healthy. This is useful for load balancing or other automated health check tasks.

/nginx_status: Returns the number of connections being handled by the NGINX front-end used by MSR.

/api/v0/meta/cluster_status: Returns extensive information about all MSR replicas.

Load balance MSR¶

MSR does not provide a load balancing service. You can use an on-premises or cloud-based load balancer to balance requests across multiple MSR replicas.

Important

Additional load balancer requirements for MKE

If you are also using MKE, there are additional requirements if you plan to load balance both MKE and MSR using the same load balancer.

You can use the unauthenticated /_ping endpoint on each MSR replica to check if the replica is healthy and if it should remain in the load balancing pool or not.

Also, make sure you configure your load balancer to:

Load balance TCP traffic on ports 80 and 443.

Not terminate HTTPS connections.

Not buffer requests.

Forward the Host HTTP header correctly.

Have no timeout for idle connections, or set it to more than 10 minutes.

The /_ping endpoint returns a JSON object for the replica being queried of the form:

{

"Error": "error message",

"Healthy": true

}

A response of "Healthy": true means the replica is suitable for taking requests. It is also sufficient to check whether the HTTP status code is 200.

An unhealthy replica will return 503 as the status code and populate "Error" with more details on any one of these services:

Storage container (registry)

Authorization (garant)

Metadata persistence (rethinkdb)

Content trust (notary)

Note that this endpoint is for checking the health of a single replica. To get the health of every replica in a cluster, querying each replica individually is the preferred way to do it in real time.
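The per-replica check described above can be sketched as follows. The helper names ping_verdict and check_replica, the five-second timeout, and the sample addresses are assumptions for illustration. The -k option skips certificate verification and is only appropriate for test setups.

```shell
#!/usr/bin/env bash
# Map the HTTP status code of GET /_ping to a pool decision.
# 200 means healthy; 503, or any other code, is treated as unhealthy.
ping_verdict() {
  case "$1" in
    200) echo "healthy" ;;
    *)   echo "unhealthy" ;;
  esac
}

# Query one replica and print its verdict. The argument is a host name or IP.
# If curl cannot connect, it reports code 000, which also counts as unhealthy.
check_replica() {
  local replica=$1 code
  code=$(curl -ks -o /dev/null -w '%{http_code}' --max-time 5 "https://${replica}/_ping")
  echo "${replica}: $(ping_verdict "$code")"
}

# Usage (hypothetical addresses): check every replica individually.
# for r in 10.0.0.11 10.0.0.12 10.0.0.13; do check_replica "$r"; done
```

Relying on the status code alone keeps the check free of JSON parsing, in line with the statement above that checking for a 200 status is sufficient.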

Configuration examples¶

Use the following examples to configure your load balancer for MSR.

NGINX (nginx.conf):

user nginx;

worker_processes 1;

error_log /var/log/nginx/error.log warn;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

stream {

upstream dtr_80 {

server <MSR_REPLICA_1_IP>:80 max_fails=2 fail_timeout=30s;

server <MSR_REPLICA_2_IP>:80 max_fails=2 fail_timeout=30s;

server <MSR_REPLICA_N_IP>:80 max_fails=2 fail_timeout=30s;

}

upstream dtr_443 {

server <MSR_REPLICA_1_IP>:443 max_fails=2 fail_timeout=30s;

server <MSR_REPLICA_2_IP>:443 max_fails=2 fail_timeout=30s;

server <MSR_REPLICA_N_IP>:443 max_fails=2 fail_timeout=30s;

}

server {

listen 443;

proxy_pass dtr_443;

}

server {

listen 80;

proxy_pass dtr_80;

}

}

HAProxy (haproxy.cfg):

global

log /dev/log local0

log /dev/log local1 notice

defaults

mode tcp

option dontlognull

timeout connect 5s

timeout client 50s

timeout server 50s

timeout tunnel 1h

timeout client-fin 50s

### frontends

# Optional HAProxy Stats Page accessible at http://<host-ip>:8181/haproxy?stats

frontend dtr_stats

mode http

bind 0.0.0.0:8181

default_backend dtr_stats

frontend dtr_80

mode tcp

bind 0.0.0.0:80

default_backend dtr_upstream_servers_80

frontend dtr_443

mode tcp

bind 0.0.0.0:443

default_backend dtr_upstream_servers_443

### backends

backend dtr_stats

mode http

option httplog

stats enable

stats admin if TRUE

stats refresh 5m

backend dtr_upstream_servers_80

mode tcp

option httpchk GET /_ping HTTP/1.1\r\nHost:\ <MSR_FQDN>

server node01 <MSR_REPLICA_1_IP>:80 check weight 100

server node02 <MSR_REPLICA_2_IP>:80 check weight 100

server node03 <MSR_REPLICA_N_IP>:80 check weight 100

backend dtr_upstream_servers_443

mode tcp

option httpchk GET /_ping HTTP/1.1\r\nHost:\ <MSR_FQDN>

server node01 <MSR_REPLICA_1_IP>:443 weight 100 check check-ssl verify none

server node02 <MSR_REPLICA_2_IP>:443 weight 100 check check-ssl verify none

server node03 <MSR_REPLICA_N_IP>:443 weight 100 check check-ssl verify none

AWS load balancer (example load balancer description):

{

"Subnets": [

"subnet-XXXXXXXX",

"subnet-YYYYYYYY",

"subnet-ZZZZZZZZ"

],

"CanonicalHostedZoneNameID": "XXXXXXXXXXX",

"CanonicalHostedZoneName": "XXXXXXXXX.us-west-XXX.elb.amazonaws.com",

"ListenerDescriptions": [

{

"Listener": {

"InstancePort": 443,

"LoadBalancerPort": 443,

"Protocol": "TCP",

"InstanceProtocol": "TCP"

},

"PolicyNames": []

}

],

"HealthCheck": {

"HealthyThreshold": 2,

"Interval": 10,

"Target": "HTTPS:443/_ping",

"Timeout": 2,

"UnhealthyThreshold": 4

},

"VPCId": "vpc-XXXXXX",

"BackendServerDescriptions": [],

"Instances": [

{

"InstanceId": "i-XXXXXXXXX"

},

{

"InstanceId": "i-XXXXXXXXX"

},

{

"InstanceId": "i-XXXXXXXXX"

}

],

"DNSName": "XXXXXXXXXXXX.us-west-2.elb.amazonaws.com",

"SecurityGroups": [

"sg-XXXXXXXXX"

],

"Policies": {

"LBCookieStickinessPolicies": [],

"AppCookieStickinessPolicies": [],

"OtherPolicies": []

},

"LoadBalancerName": "ELB-MSR",

"CreatedTime": "2017-02-13T21:40:15.400Z",

"AvailabilityZones": [

"us-west-2c",

"us-west-2a",

"us-west-2b"

],

"Scheme": "internet-facing",

"SourceSecurityGroup": {

"OwnerAlias": "XXXXXXXXXXXX",

"GroupName": "XXXXXXXXXXXX"

}

}

You can deploy your load balancer using:

# Create the nginx.conf file, then
# deploy the load balancer
docker run --detach \
--name dtr-lb \
--restart=unless-stopped \
--publish 80:80 \
--publish 443:443 \
--volume ${PWD}/nginx.conf:/etc/nginx/nginx.conf:ro \
nginx:stable-alpine

# Create the haproxy.cfg file, then
# deploy the load balancer
docker run --detach \
--name dtr-lb \
--publish 443:443 \
--publish 80:80 \
--publish 8181:8181 \
--restart=unless-stopped \
--volume ${PWD}/haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg:ro \
haproxy:1.7-alpine haproxy -d -f /usr/local/etc/haproxy/haproxy.cfg

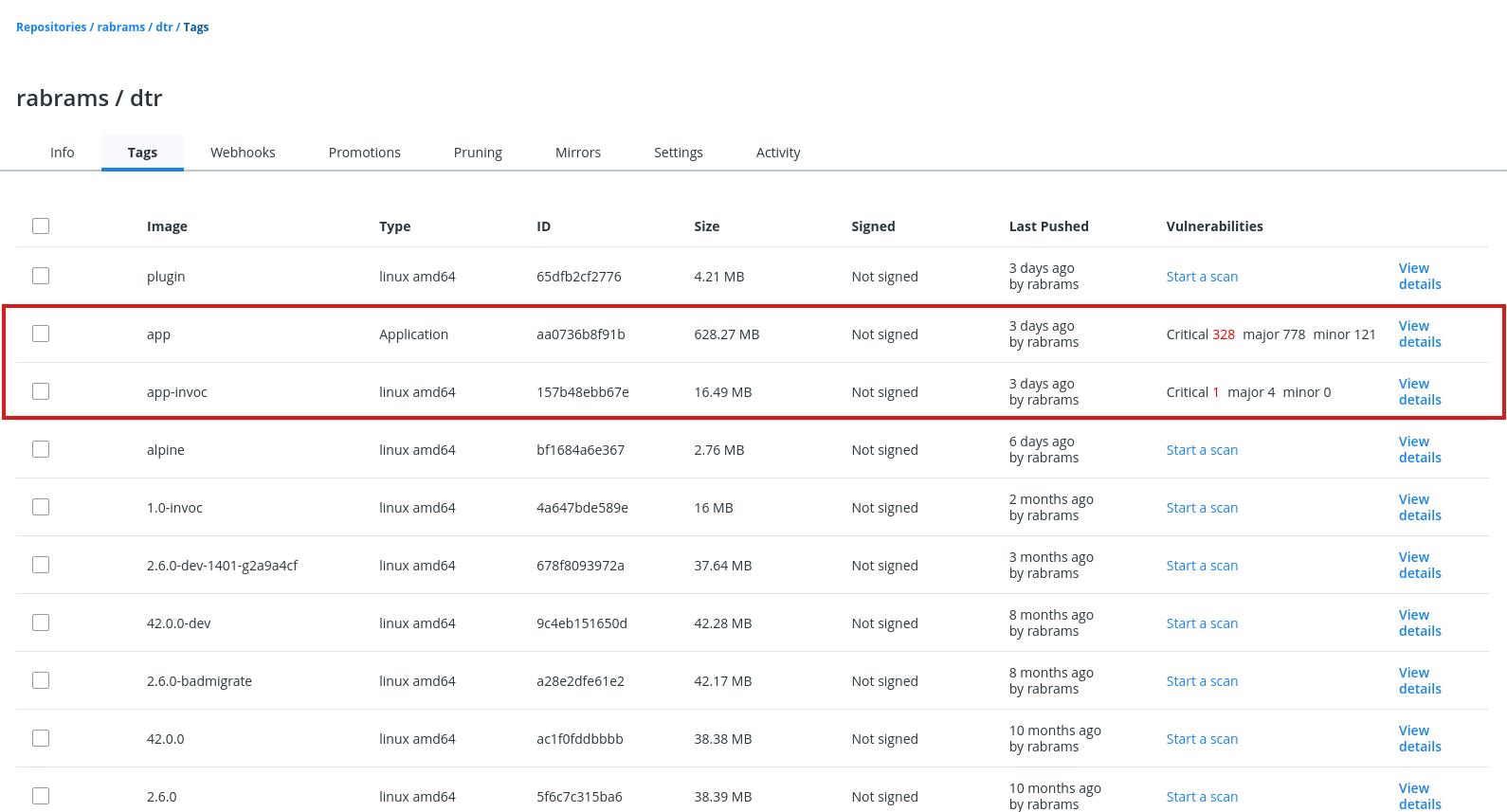

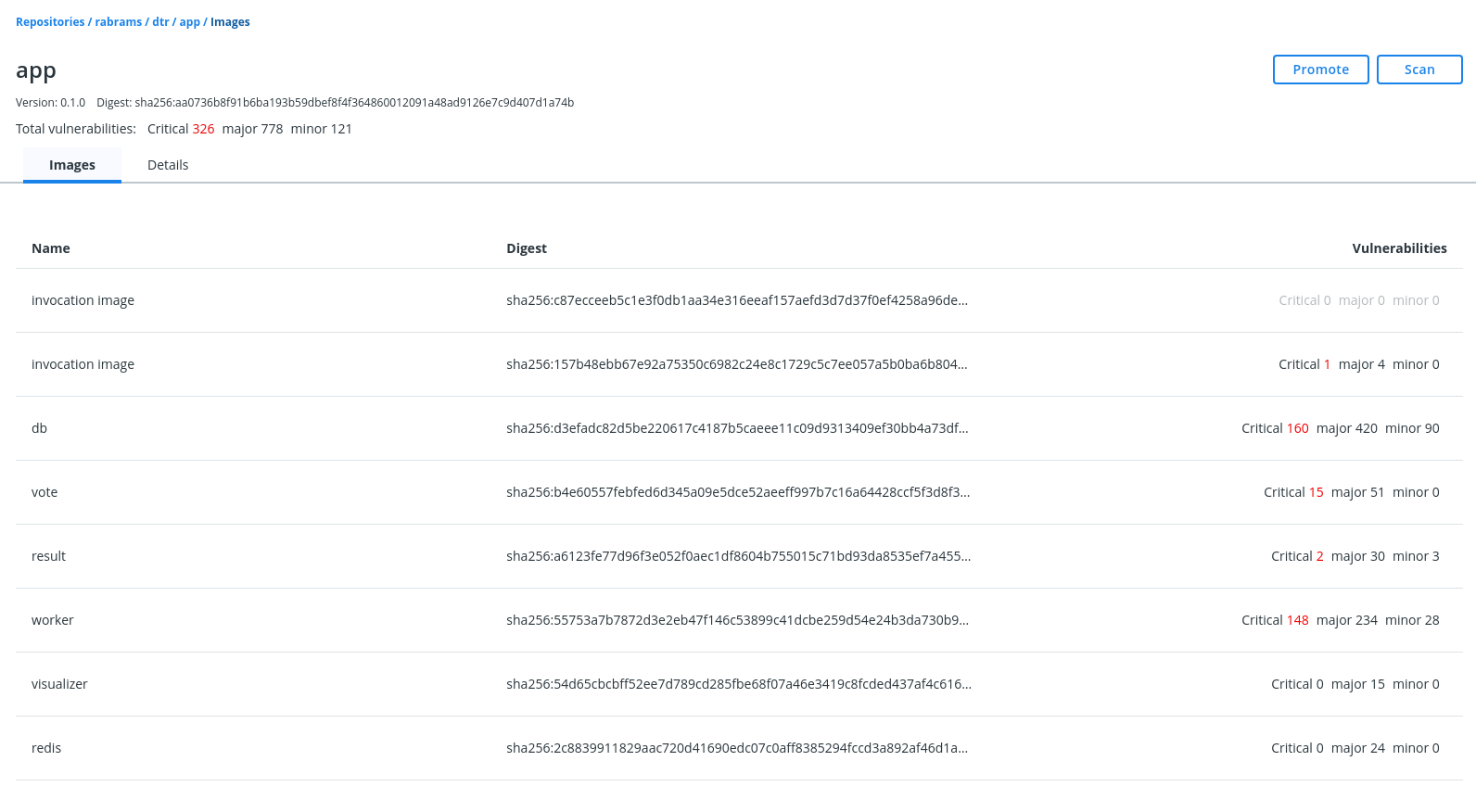

Set up security scanning in MSR¶

This page explains how to set up and enable Docker Security Scanning on an existing installation of Mirantis Secure Registry.

Prerequisites¶

These instructions assume that you have already installed Mirantis Secure Registry (MSR), and have access to an account on the MSR instance with administrator access.

Before you begin, make sure that you or your organization has purchased a MSR license that includes Docker Security Scanning, and that your Docker ID can access and download this license from the Docker Hub.

If you are using a license associated with an individual account, no additional action is needed. If you are using a license associated with an organization account, you may need to make sure your Docker ID is a member of the Owners team. Only Owners team members can download license files for an Organization.

If you will be allowing the Security Scanning database to update itself automatically, make sure that the server hosting your MSR instance can access both http://license.mirantis.com and https://dss-cve-updates.mirantis.com/ on the standard https port 443.

Get the security scanning license¶

If your MSR instance already has a license that includes Security Scanning, skip this section and proceed to Enable MSR security scanning.

Tip

To check if your existing MSR license includes scanning, navigate to the MSR Settings page, and click Security. If an Enable scanning toggle appears, the license includes scanning.

If your current MSR license doesn’t include scanning, you must first download the new license.

Search for an email from Mirantis Support with the subject Welcome to Mirantis’ CloudCare Portal, and follow the instructions for logging in.

If you did not receive the CloudCare Portal email, it is likely that you have not yet been added as a Designated Contact. To remedy this, contact your Designated Administrator.

Click Environments in the top navigation bar.

Click the Cloud Name associated with the license you want to download.

Scroll down to License Information and click the License File URL. A new tab opens in your browser.

Click View file to download your license file.

Next, install the new license on the MSR instance.

Log in to your MSR instance using an administrator account.

Click Settings in the left-side navigation panel.

On the General tab click Apply new license.

A file browser dialog displays.

Navigate to where you saved the license key (.lic) file, select it, and click Open.

Enable MSR security scanning¶

To enable security scanning in MSR:

Log in to your MSR instance with an administrator account.

Click Settings in the left-side navigation panel.

Click the Security tab.

Click the Enable scanning toggle so that it turns blue and says “on”.

Next, provide a security database for the scanner. Security scanning will not function until MSR has a security database to use.

By default, security scanning is enabled in Online mode. In this mode, MSR attempts to download a security database from a Docker server. If your installation cannot access https://dss-cve-updates.docker.com/ you must manually upload a .tar file containing the security database.

If you are using Online mode, the MSR instance will contact a Docker server, download the latest vulnerability database, and install it. Scanning can begin once this process completes.

If you are using Offline mode, use the instructions in Update scanning database - offline mode to upload an initial security database.

By default, when Security Scanning is enabled, new repositories will automatically scan on docker push. If you had existing repositories before you enabled security scanning, you might want to change repository scanning behavior.

Set repository scanning mode¶

Two modes are available when Security Scanning is enabled:

Scan on push & Scan manually: the image is re-scanned on each docker push to the repository, and whenever a user with write access clicks the Start Scan links or Scan button.

Scan manually: the image is scanned only when a user with write access clicks the Start Scan links or Scan button.

By default, new repositories are set to Scan on push & Scan manually, but you can change this setting during repository creation.

Any repositories that existed before scanning was enabled are set to Scan manually mode by default. If these repositories are still in use, you can change this setting from each repository’s Settings page.

Note

To change the scanning mode of an individual repository, you must have write or admin access to the repository.

To change an individual repository’s scanning mode:

Navigate to the repository, and click the Settings tab.

Scroll down to the Image scanning section.

Select the desired scanning mode.

Update the CVE scanning database¶

Docker Security Scanning indexes the components in your MSR images and compares them against a known CVE database. When new vulnerabilities are reported, Docker Security Scanning matches the components in new CVE reports to the indexed components in your images, and quickly generates an updated report.

Users with administrator access to MSR can check when the CVE database was last updated from the Security tab in the MSR Settings pages.

Update CVE database - online mode¶

By default Docker Security Scanning checks automatically for updates to the vulnerability database, and downloads them when available. If your installation does not have access to the public internet, use the Offline mode instructions below.

To ensure that MSR can access these updates, confirm that the host can reach both http://license.mirantis.com and https://dss-cve-updates.mirantis.com/ on port 443 using https.

MSR checks for new CVE database updates at 3:00 AM UTC every day. If an update is found it is downloaded and applied without interrupting any scans in progress. Once the update is complete, the security scanning system looks for new vulnerabilities in the indexed components.

To set the update mode to Online:

Log in to MSR as a user with administrator rights.

Click Settings in the left-side navigation panel and click Security.

Click Online.

Your choice is saved automatically.

Note

MSR also checks for CVE database updates when scanning is first enabled, and when you switch update modes. If you need to check for a CVE database update immediately, you can briefly switch modes from online to offline and back again.

Update CVE database - offline mode¶

To update the CVE database for your MSR instance when connection to the update server is not possible, download and install a .tar file that contains the database updates.

Run the following command to download the most recent CVE database:

Note

The example command specifies default values. It assumes that you want the container to output the database file to ~/Downloads and that the volume should map from the local machine into the container. If the destination for the database is in a separate directory, you must define an additional volume.

docker run -it --rm \
-v ${HOME}/Downloads:/data \
-e CVE_DB_URL_ONLY=false \
-e CLOBBER_FILE=false \
-e DATABASE_OUTPUT="/data" \
-e DATABASE_SCHEMA=3 \
-e DEBUG=false \
-e VERSION_ONLY=false \
mirantis/get-dtr-cve-db:latest

| Variable | Default | Override detail |
|---|---|---|
| CLOBBER_FILE | false | Set to |
| CVE_DB_URL_ONLY | false | Set to |
| DATABASE_OUTPUT | /data | Indicates the database download directory inside the container. |
| DATABASE_SCHEMA | 3 | |
| DEBUG | false | Set to |
| VERSION_ONLY | false | Set to |

To manually update the MSR CVE database using the downloaded .tar file:

Log in to MSR as a user with administrator rights.

Click Settings in the left-side navigation panel and click Security.

Click Upload .tar database file.

Browse to the latest .tar file that you received, and click Open.

MSR installs the new CVE database, and begins checking already indexed images for components that match new or updated vulnerabilities.

Note

The Upload button is unavailable while MSR applies CVE database updates.

Enable or disable automatic database updates¶

To change the update mode:

Log in to MSR as a user with administrator rights.

Click Settings in the left-side navigation panel and click Security.

Click Online/Offline.

Your choice is saved automatically.

Caches¶

MSR cache fundamentals¶

The further away you are from the geographical location where MSR is deployed, the longer it will take to pull and push images. This happens because the files being transferred from MSR to your machine need to travel a longer distance, across multiple networks.

To decrease the time to pull an image, you can deploy MSR caches geographically closer to users.

Caches are transparent to users, since users still log in and pull images using the MSR URL address. MSR checks if users are authorized to pull the image, and redirects the request to the cache.

In this example, MSR is deployed on a datacenter in the United States, and a cache is deployed in the Asia office.

Users in the Asia office update their user profile within MSR to fetch from the cache in their office. They pull an image using:

# Log in to MSR

docker login msr.example.org

# Pull image

docker image pull msr.example.org/website/ui:3-stable

MSR authenticates the request and checks if the user has permission to pull the image they are requesting. If they have permissions, they get an image manifest containing the list of image layers to pull and redirecting them to pull the images from the Asia cache.

When users request those image layers from the Asia cache, the cache pulls them from MSR and keeps a copy that can be used to serve to other users without having to pull the image layers from MSR again.

Caches or mirroring policies¶

Use caches if you:

Want to make image pulls faster for users in different geographical regions.

Want to manage user permissions from a central place.

If you need users to be able to push images faster, or you want to implement RBAC policies based on different regions, do not use caches. Instead, deploy multiple MSR clusters and implement mirroring policies between them.

With mirroring policies you can set up a development pipeline where images are automatically pushed between different MSR repositories, or across MSR deployments.

As an example you can set up a development pipeline with three different stages. Developers can push and pull images from the development environment, only pull from QA, and have no access to Production.

With multiple MSR deployments you can control the permissions developers have for each deployment, and you can create policies to automatically push images from one deployment to the next.

Cache deployment strategy¶

The main reason to use a MSR cache is so that users can pull images from a service that’s geographically closer to them.

For example, a company has developers spread across three locations: United States, Asia, and Europe. Developers working in the US office can pull their images from MSR without problem, but developers in the Asia and Europe offices complain that it takes them a long time to pull images.

To address that, you can deploy MSR caches in the Asia and Europe offices, so that developers working from there can pull images much faster.

Deployment overview¶

To deploy the MSR caches for the example scenario, you need three datacenters:

The US datacenter runs MSR configured for high availability.

The Asia datacenter runs a MSR cache.

The Europe datacenter runs another MSR cache.

Both caches are configured to fetch images from MSR.

System requirements¶

Before deploying a MSR cache in a datacenter, make sure you:

Provision multiple nodes and install Docker on them.

Join the nodes into a Swarm.

Have one or more dedicated worker nodes just for running the MSR cache.

Have TLS certificates to use for securing the cache.

Have a shared storage system, if you want the cache to be highly available.

Ports used¶

You can customize the port used by the MSR cache, so you’ll have to configure your firewall rules to make sure users can access the cache using the port you chose.

By default the documentation guides you in deploying caches that are exposed on port 443/TCP using the swarm routing mesh.

Deploy a MSR cache with Swarm¶

This example guides you in deploying a MSR cache, assuming that you’ve got a MSR deployment up and running. It also assumes that you’ve provisioned multiple nodes and joined them into a swarm.

The MSR cache is going to be deployed as a Docker service, so that Docker automatically takes care of scheduling and restarting the service if something goes wrong.

We’ll manage the cache configuration using a Docker configuration, and the TLS certificates using Docker secrets. This allows you to manage the configurations securely and independently of the node where the cache is actually running.

Dedicate a node for the cache¶

To make sure the MSR cache is performant, it should be deployed on a node dedicated just for it. Start by labelling the node where you want to deploy the cache, so that you target the deployment to that node.

Use SSH to log in to a manager node of the swarm where you want to deploy the MSR cache. If you’re using MKE to manage that swarm, use a client bundle to configure your Docker CLI client to connect to the swarm.

docker node update --label-add dtr.cache=true <node-hostname>
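To confirm that the label was applied before you deploy, you can read it back from the node. The following is a minimal sketch: the function name cache_label is hypothetical, and it relies only on the standard docker node inspect command with a Go template.

```shell
# Print the value of the dtr.cache label on a node (empty output if the label is unset).
# cache_label is a hypothetical helper name, not part of Docker or MSR.
cache_label() {
  docker node inspect --format '{{ index .Spec.Labels "dtr.cache" }}' "$1"
}

# Usage: cache_label <node-hostname>
```

For the node labelled in the previous step, the function should print true.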

Prepare the cache deployment¶

Create a file structure, as illustrated below:

├── docker-stack.yml    # Stack file to deploy cache with a single command
├── config.yml          # The cache configuration file
└── certs
    ├── cache.cert.pem  # The cache public key certificate
    ├── cache.key.pem   # The cache private key
    └── dtr.cert.pem    # MSR CA certificate

Add the following content to each of the two YAML files, docker-stack.yml and config.yml:

docker-stack.yml

version: "3.3"
services:
  cache:
    image: mirantis/dtr-content-cache:2.8.2
    entrypoint:
      - /start.sh
      - "/config.yml"
    ports:
      - 443:443
    deploy:
      replicas: 1
      placement:
        constraints: [node.labels.dtr.cache == true]
      restart_policy:
        condition: on-failure
    configs:
      - config.yml
    secrets:
      - dtr.cert.pem
      - cache.cert.pem
      - cache.key.pem
configs:
  config.yml:
    file: ./config.yml
secrets:
  dtr.cert.pem:
    file: ./certs/dtr.cert.pem
  cache.cert.pem:
    file: ./certs/cache.cert.pem
  cache.key.pem:
    file: ./certs/cache.key.pem

config.yml

version: 0.1
log:
  level: info
storage:
  delete:
    enabled: true
  filesystem:
    rootdirectory: /var/lib/registry
http:
  addr: 0.0.0.0:443
  secret: generate-random-secret
  host: https://<cache-url>
  tls:
    certificate: /run/secrets/cache.cert.pem
    key: /run/secrets/cache.key.pem
middleware:
  registry:
    - name: downstream
      options:
        blobttl: 24h
        upstreams:
          - https://<msr-url>:<msr-port>
        cas:
          - /run/secrets/dtr.cert.pem


Next, add content to the three cert pem files, as described.

| pem file | Content to add |
|---|---|
| cache.cert.pem | Add the public key certificate for the cache. If the certificate has been signed by an intermediate certificate authority, append its public key certificate at the end of the file. |
| cache.key.pem | Add the unencrypted private key for the cache. |
| dtr.cert.pem | The cache communicates with MSR using TLS. If you’ve customized MSR to use TLS certificates issued by a globally trusted certificate authority, the cache automatically trusts MSR. If, though, you are using the default MSR configuration, or MSR is using TLS certificates signed by your own certificate authority, you need to configure the cache to trust MSR. To do this, add the MSR CA certificate to the certs/dtr.cert.pem file, for example by running: curl -sk https://<msr-url>/ca > certs/dtr.cert.pem |

With this configuration, the cache fetches image layers from MSR and keeps a local copy for 24 hours. After that, if a user requests that image layer, the cache fetches it again from MSR.

The cache is configured to persist data inside its container. If something goes wrong with the cache service, Docker automatically redeploys a new container, but previously cached data is not persisted. You can customize the storage parameters, if you want to store the image layers using a persistent storage backend.

Also, the cache is configured to use port 443. If you’re already using that port in the swarm, update the deployment and configuration files to use another port. Don’t forget to create firewall rules for the port you choose.
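The http.secret value in config.yml above is shown as generate-random-secret, which reads as a placeholder rather than a value to use as-is. One way to produce a random value is sketched below. The helper name generate_secret and the choice of 32 random bytes are assumptions, not MSR requirements.

```shell
# Print 32 random bytes as a 64-character lowercase hexadecimal string.
# generate_secret is a hypothetical helper name; the length is an arbitrary choice.
generate_secret() {
  head -c 32 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Usage: run generate_secret and paste the output into the
# http.secret field of config.yml before deploying the stack.
```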

Deploy the cache¶

Now that everything is set up, you can deploy the cache by running:

docker stack deploy --compose-file docker-stack.yml dtr-cache

You can check if the cache has been successfully deployed by running:

docker stack ps dtr-cache

Docker should show the dtr-cache stack is running.

Register the cache with MSR¶

Now that you’ve deployed a cache, you need to configure MSR to know about it. This is done using the POST /api/v0/content_caches API. You can use the MSR interactive API documentation to use this API.

In the MSR web UI, click the top-right menu, and choose API docs. Navigate to the POST /api/v0/content_caches line and click it to expand. In the body field include:

{
  "name": "region-asia",
  "host": "https://<cache-url>:<cache-port>"
}

Click the Try it out! button to make the API call.
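If you prefer to make the same call from a terminal rather than from the interactive API documentation, a sketch using curl follows. The endpoint and body fields come from the step above. The environment variables MSR_URL, MSR_USER, and MSR_TOKEN, the helper names, and the use of basic authentication with an access token are assumptions to adapt to your environment. If MSR uses certificates that your machine does not trust, curl also needs the MSR CA certificate, for example through its --cacert option.

```shell
# Build the JSON body for POST /api/v0/content_caches.
# $1 is the cache name, $2 is the cache URL including the port.
cache_payload() {
  printf '{"name": "%s", "host": "%s"}' "$1" "$2"
}

# Send the registration request to MSR.
# MSR_URL, MSR_USER and MSR_TOKEN are assumed environment variables.
register_cache() {
  curl --silent --show-error --fail \
    --user "${MSR_USER}:${MSR_TOKEN}" \
    --header "Content-Type: application/json" \
    --request POST \
    --data "$(cache_payload "$1" "$2")" \
    "https://${MSR_URL}/api/v0/content_caches"
}

# Usage: register_cache region-asia "https://<cache-url>:<cache-port>"
```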

Configure your user account¶

Now that you’ve registered the cache with MSR, users can configure their user profile to pull images from MSR or the cache.

In the MSR web UI, navigate to your Account, click the Settings tab, and change the Content Cache settings to use the cache you deployed.

If you need to set this for multiple users at the same time, use the /api/v0/accounts/{username}/settings API endpoint.

Now when you pull images, you’ll be using the cache.

Test that the cache is working¶

To validate that the cache is working as expected:

Push an image to MSR.

Make sure your user account is configured to use the cache.

Delete the image from your local system.

Pull the image from MSR.
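From the Docker CLI, the push, delete, and pull steps above might look like the following sketch. The helper name is hypothetical and the image reference is a placeholder. Configuring your account to use the cache is done in the MSR web UI and is not covered by the script.

```shell
# Push an image to MSR, remove the local copy, then pull it again.
# check_cache_pull is a hypothetical helper; pass a full image reference,
# for example <msr-url>/<namespace>/<repository>:<tag>.
check_cache_pull() {
  docker push "$1" &&
  docker image rm "$1" &&
  docker pull "$1"
}
```

The function stops at the first failing step, so a failed push is not followed by a misleading pull.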

To validate that the cache is actually serving your request, and to troubleshoot misconfigurations, check the logs for the cache service by running:

docker service logs --follow dtr-cache_cache

The most common causes of misconfiguration are related to TLS authentication:

MSR not trusting the cache TLS certificates.

The cache not trusting MSR TLS certificates.

Your machine not trusting MSR or the cache.

When this happens, check the cache logs to troubleshoot the misconfiguration.

Clean up sensitive files¶

The certificates and private keys are now managed by Docker in a secure way. Don’t forget to delete sensitive files you’ve created on disk, like the private keys for the cache:

rm -rf certs

Deploy a MSR cache with Kubernetes¶

This example guides you through deploying a MSR cache, assuming that you’ve got a MSR deployment up and running.

The MSR cache is going to be deployed as a Kubernetes Deployment, so that Kubernetes automatically takes care of scheduling and restarting the service if something goes wrong.

We’ll manage the cache configuration using a Kubernetes Config Map, and the TLS certificates using Kubernetes secrets. This allows you to manage the configurations securely and independently of the node where the cache is actually running.

Prepare the cache deployment¶

At the end of this exercise you should have the following file structure on your workstation:

├── dtrcache.yaml       # YAML file to deploy cache with a single command
├── config.yaml         # The cache configuration file
└── certs
    ├── cache.cert.pem  # The cache public key certificate, including any intermediaries
    ├── cache.key.pem   # The cache private key
    └── dtr.cert.pem    # MSR CA certificate

The MSR cache will be deployed with a TLS endpoint. For this you will need to obtain a TLS certificate and key from a certificate authority. The way you expose the MSR cache determines the SANs required for this certificate.

For example:

If you are deploying the MSR Cache with an Ingress Object you will need to use an external MSR cache address which resolves to your ingress controller as part of your certificate.

If you are exposing the MSR cache through a Kubernetes Cloud Provider then you will need the external Loadbalancer address as part of your certificate.

If you are exposing the MSR Cache through a Node Port or a Host Port you will need to use a node’s FQDN as a SAN in your certificate.
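Before deploying, you can check which SANs a certificate actually contains. The sketch below uses the openssl x509 command. The helper name cert_sans is hypothetical, and the file path is wherever you saved the certificate.

```shell
# Print the Subject Alternative Name section of a PEM certificate.
# cert_sans is a hypothetical helper name.
cert_sans() {
  openssl x509 -in "$1" -noout -text | grep -A1 'Subject Alternative Name'
}

# Usage: cert_sans certs/cache.cert.pem
```

The address that clients use to reach the cache should appear among the DNS entries printed.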

On your workstation, create a directory called certs. Within it, place the newly created certificate cache.cert.pem and key cache.key.pem for your MSR cache. Also place there the certificate authority certificate (including any intermediate certificate authorities) for the certificate used by your MSR deployment. You can retrieve it from the main MSR deployment using curl:

curl -s https://<dtr-fqdn>/ca -o certs/dtr.cert.pem

The MSR cache takes its configuration from a file mounted into the container. Below is an example configuration file for the MSR cache. Customize this YAML for your environment with the relevant external MSR cache, worker node, or external load balancer FQDN.

With this configuration, the cache fetches image layers from MSR and keeps a local copy for 24 hours. After that, if a user requests that image layer, the cache will fetch it again from MSR.