Introduction¶

Mirantis provides the MSR 4 documentation to help you understand the core concepts of Mirantis Secure Registry 4, and to provide information on how to deploy and operate the product.

Product Overview¶

Mirantis Secure Registry (MSR) 4 is an Enterprise-grade container registry solution that can be integrated easily with standard Kubernetes distributions to provide tight security controls for cloud native development. Based on Harbor, which is open source and the only CNCF graduated container registry, this Mirantis product can serve as the core of an effective secure software supply chain.

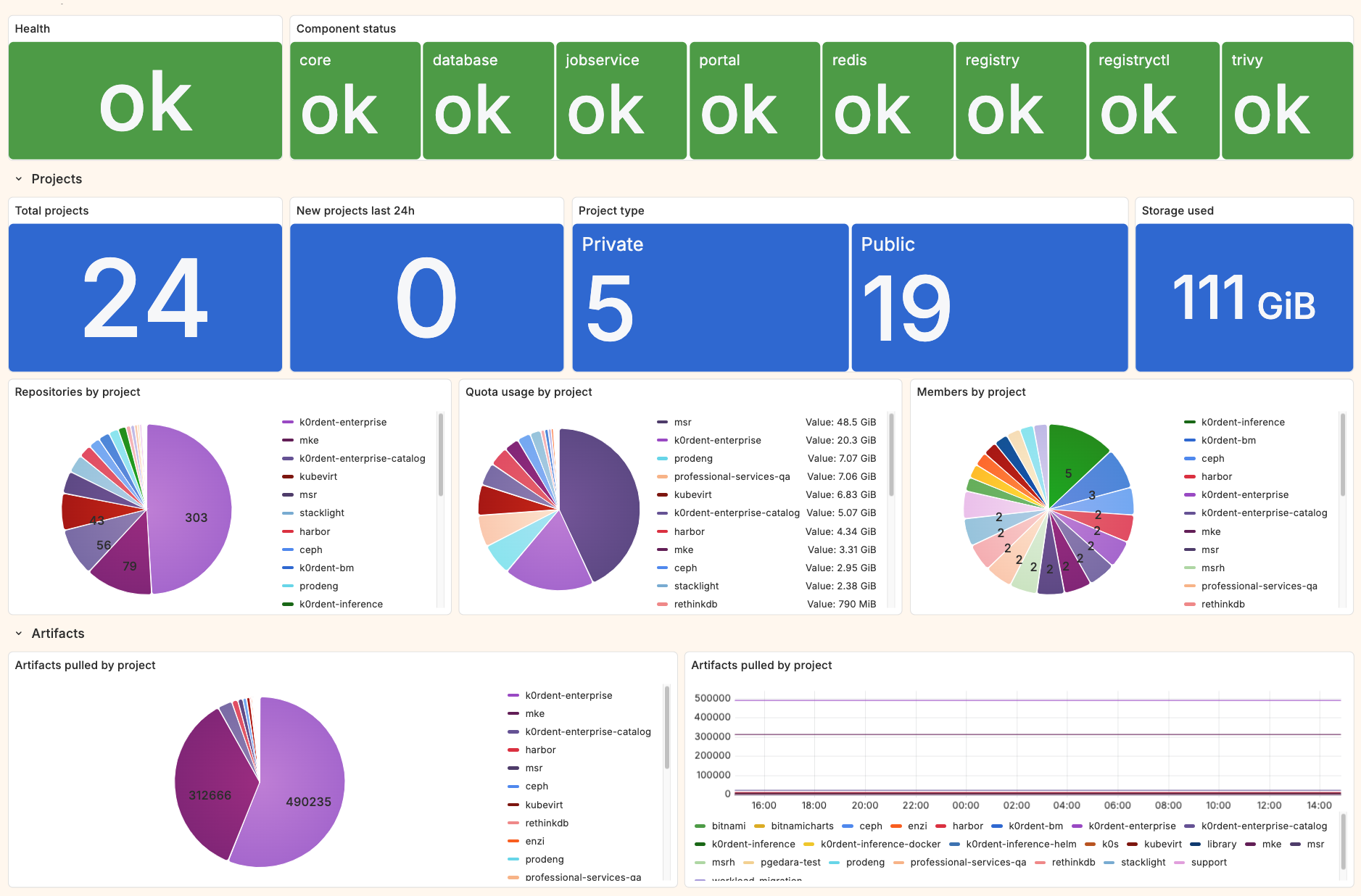

Using MSR 4, you can securely store, share, and manage images in your own private container registry, automating the security of your software supply chain.

With MSR 4, you can:

Run the software alongside your other applications on any standard Kubernetes version from 1.10 and up using a Helm chart, or on a single host using Docker Compose.

Secure artifacts through policies and role-based access control (RBAC), helping to ensure that your container images are free from vulnerabilities.

Improve DevOps collaboration while maintaining clear boundaries, by creating and pushing multiservice applications and images and making these resources accessible within your company.

Accelerate image distribution using peer-to-peer (P2P) preheating capabilities.

Automatically promote images from testing through to production in a controlled manner, thus ensuring that they comply with your defined security minimums, before mirroring containerized content to distributed teams using policy-based controls.

Integrate the software into your development pipeline using webhooks. In this way, policy-based promotion automates compliance checks to secure your application supply chain.
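Webhook integration can be pictured as a small handler that inspects the JSON body MSR 4 posts to a configured endpoint and reacts to push events. The sketch below assumes the upstream Harbor default payload shape (`type`, `event_data.repository.repo_full_name`, and `event_data.resources[]` with `tag` and `digest`); verify the fields against your MSR 4 version. The function name, registry host, and payload values are hypothetical.

```python
import json
from typing import List, Tuple

def pushed_artifacts(body: str) -> List[Tuple[str, str]]:
    """Return (repository, tag-or-digest) pairs from a push event.

    Assumes the upstream Harbor default webhook payload shape; any other
    event type (for example, a delete or scan event) yields no results.
    """
    event = json.loads(body)
    if event.get("type") != "PUSH_ARTIFACT":
        return []
    data = event.get("event_data", {})
    repo = data.get("repository", {}).get("repo_full_name", "")
    results = []
    for resource in data.get("resources", []):
        # Prefer the tag; fall back to the digest for untagged pushes.
        ref = resource.get("tag") or resource.get("digest", "")
        results.append((repo, ref))
    return results

# Illustrative payload; all values are made up.
sample = json.dumps({
    "type": "PUSH_ARTIFACT",
    "occur_at": 1700000000,
    "operator": "ci-bot",
    "event_data": {
        "resources": [{
            "digest": "sha256:abc123",
            "tag": "1.4.2",
            "resource_url": "msr.example.com/payments/api:1.4.2",
        }],
        "repository": {
            "name": "api",
            "namespace": "payments",
            "repo_full_name": "payments/api",
            "repo_type": "private",
        },
    },
})
print(pushed_artifacts(sample))  # [('payments/api', '1.4.2')]
```

A pipeline listening on the endpoint could use the returned pairs to trigger follow-up jobs, such as an additional compliance check, for each newly pushed artifact.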

MSR 4 Key Changes¶

Mirantis Secure Registry 4 marks a major evolution in our container image management solution. With a new foundation based on the CNCF Harbor project, MSR 4 delivers improved performance, scalability, and flexibility for modern DevOps workflows.

This section outlines the key changes and improvements introduced in MSR 4 and highlights the differences compared to MSR 2 and MSR 3.

Product highlights¶

This section summarizes the major architectural and functional improvements introduced in MSR 4. These enhancements are designed to increase performance, improve scalability, simplify operations, and align the platform with current cloud-native standards.

Quota Management¶

Introduces project- and repository-level quotas.

Administrators can enforce storage usage limits across teams.

Helps prevent uncontrolled registry growth in shared environments.

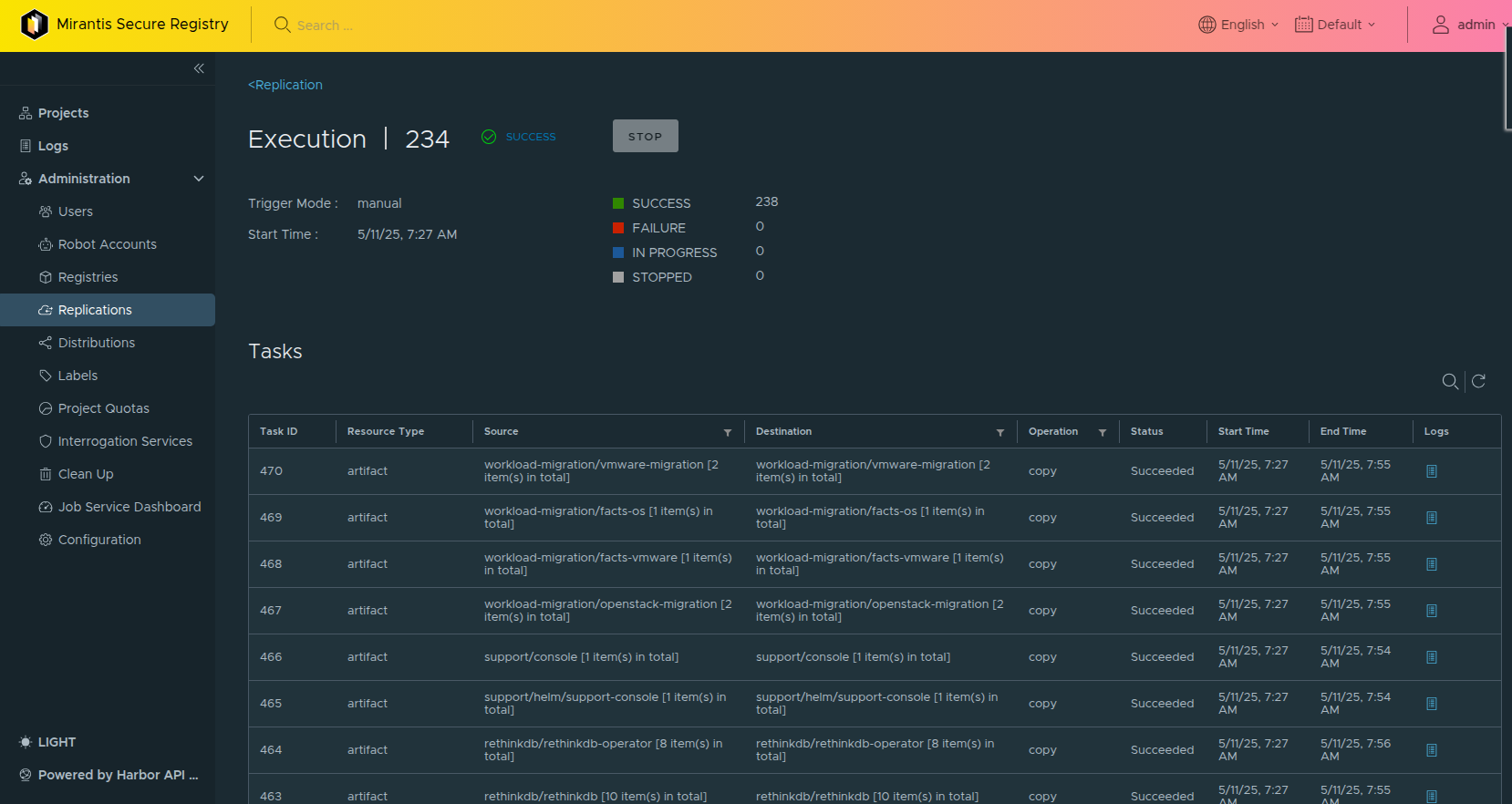
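Quota enforcement comes down to comparing current usage plus the size of an incoming push against a limit. The minimal sketch below assumes a per-project byte limit in which -1 means "unlimited", the convention upstream Harbor uses for storage quotas. The `ProjectQuota` type and `can_push` function are hypothetical names for illustration, not MSR 4 internals.

```python
from dataclasses import dataclass

UNLIMITED = -1  # assumed sentinel for "no limit", as in upstream Harbor

@dataclass
class ProjectQuota:
    limit_bytes: int  # storage limit for the project, or UNLIMITED
    used_bytes: int   # storage already consumed by the project

def can_push(quota: ProjectQuota, incoming_bytes: int) -> bool:
    """Return True if a push of `incoming_bytes` stays within the quota."""
    if quota.limit_bytes == UNLIMITED:
        return True
    return quota.used_bytes + incoming_bytes <= quota.limit_bytes

GiB = 1024 ** 3
team_a = ProjectQuota(limit_bytes=10 * GiB, used_bytes=9 * GiB)
print(can_push(team_a, 512 * 1024 ** 2))  # True: 9.5 GiB fits in 10 GiB
print(can_push(team_a, 2 * GiB))          # False: 11 GiB exceeds 10 GiB
```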

Expanded Replication Targets¶

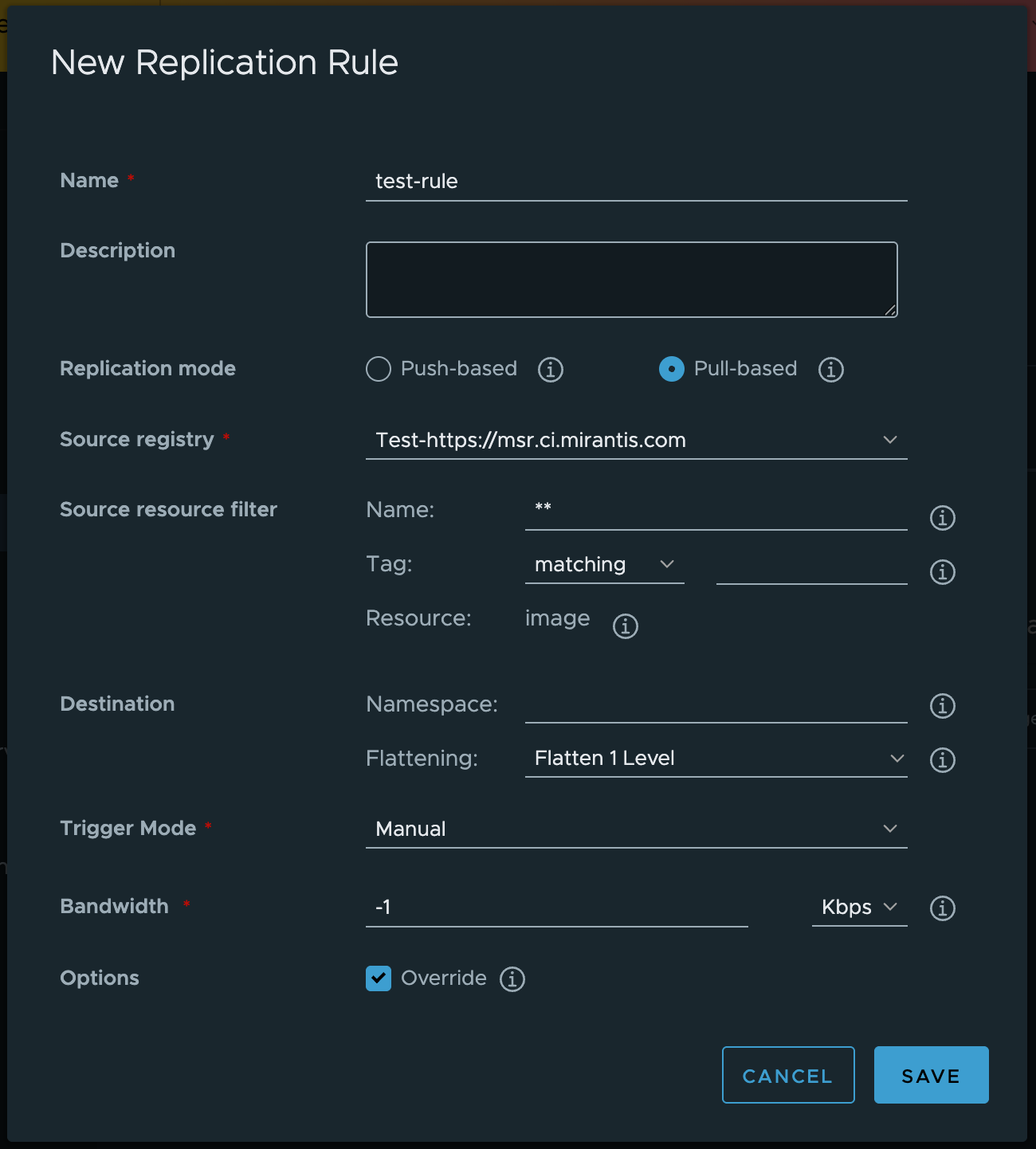

MSR 4 extends support for replicating images and artifacts to and from OCI-compatible registries.

Supported targets include:

Docker Hub, Docker Registry v2

AWS Elastic Container Registry (ECR), Azure ACR

Google Container Registry (GCR), Google Artifact Registry

AliCloud Container Registry, Huawei SWR, GitLab, Quay, JFrog Artifactory

Enables hybrid and multi-cloud workflows with minimal configuration.
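Replication rules typically select which repositories to copy by name pattern. The sketch below matches repository names against a simplified glob in the style of upstream Harbor's name filter, where `*` does not cross `/` and `**` does. Brace alternatives such as `{a,b}` are omitted, and the repository names are hypothetical; the code illustrates the matching semantics only, since MSR 4 evaluates its own filters server-side.

```python
import re

def glob_to_regex(pattern: str) -> re.Pattern:
    """Translate a simplified doublestar glob into a compiled regex.

    Assumed semantics (modeled on common doublestar globbing):
      **  any characters, including "/"
      *   any characters except "/"
      ?   exactly one character except "/"
    """
    parts = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("**", i):
            parts.append(".*")
            i += 2
        elif pattern[i] == "*":
            parts.append("[^/]*")
            i += 1
        elif pattern[i] == "?":
            parts.append("[^/]")
            i += 1
        else:
            parts.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(parts))

def select_for_replication(repos, pattern):
    """Return the repositories whose full name matches the pattern."""
    rx = glob_to_regex(pattern)
    return [r for r in repos if rx.fullmatch(r)]

repos = ["library/nginx", "library/tools/curl", "payments/api"]
print(select_for_replication(repos, "library/*"))   # ['library/nginx']
print(select_for_replication(repos, "library/**"))  # both library repositories
```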

Modern Image Signing with Cosign and Notary v2¶

Replaces Docker Content Trust (DCT) and Notary v1 with Cosign and Notary v2.

Cosign and Notary v2 support OCI-native signature formats and validation.

Signatures are stored alongside artifacts, improving integrity enforcement.

Enables keyless signing using OIDC identities.

Aligns with modern DevSecOps practices and cloud-native toolchains.
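Storing signatures alongside artifacts means a signature lives in the same repository as the image it signs. With Cosign's tag-based convention, the signature for a digest-pinned image is stored under a tag derived from that digest, as the minimal sketch below shows. The registry host and repository are hypothetical, and Notary v2 (Notation) uses a different, referrers-based scheme that this sketch does not cover.

```python
def cosign_signature_ref(image_ref: str) -> str:
    """Map a digest-pinned image reference to its Cosign signature tag.

    Cosign's tag-based convention stores the signature in the same
    repository under the tag "<algorithm>-<hex>.sig".
    """
    repo, sep, digest = image_ref.partition("@")
    if not sep or ":" not in digest:
        raise ValueError("expected a reference of the form repo@algo:hex")
    algorithm, hex_digest = digest.split(":", 1)
    return f"{repo}:{algorithm}-{hex_digest}.sig"

ref = "msr.example.com/payments/api@sha256:" + "ab" * 32
print(cosign_signature_ref(ref))
```

Because the signature is an ordinary tagged artifact in the same repository, it is replicated and garbage-collected with the image it belongs to rather than living in a separate trust server.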

Enhanced Backup and Restore with Velero¶

Integrates Velero for backup and disaster recovery.

Supports full and selective repository restoration.

Compatible with AWS, GCP, Azure, and S3-compatible storage.

Enables point-in-time recovery and incremental backups.

SBOM Support (SPDX and CycloneDX)¶

Supports storage and distribution of Software Bills of Materials (SBOMs).

SPDX and CycloneDX formats are treated as first-class OCI artifacts.

Enables automated compliance checks and dependency transparency.
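To make the SBOM support concrete, the sketch below builds a minimal CycloneDX JSON document listing two components with their package URLs (purls). The package names and versions are illustrative. Attaching the resulting file to an image as an OCI artifact is done with a client tool such as ORAS or Cosign, a step not shown here.

```python
import json

def minimal_cyclonedx(components):
    """Build a minimal CycloneDX JSON SBOM from (name, version, purl) tuples."""
    return {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        "version": 1,
        "components": [
            {"type": "library", "name": name, "version": version, "purl": purl}
            for name, version, purl in components
        ],
    }

sbom = minimal_cyclonedx([
    ("openssl", "3.0.13", "pkg:deb/debian/openssl@3.0.13"),
    ("requests", "2.31.0", "pkg:pypi/requests@2.31.0"),
])
print(json.dumps(sbom, indent=2))
```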

Proxy Caching with Bandwidth Throttling¶

Integrates Harbor’s proxy cache for upstream image caching.

Reduces bandwidth usage and improves image pull performance.

Administrators can apply speed limits to control network usage.
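A speed limit is typically enforced with a rate limiter. The sketch below uses a token bucket, a generic technique chosen for illustration that is not necessarily how MSR 4 implements throttling, to show how a per-second byte limit caps throughput while allowing a bounded burst. The 1 MiB/s limit is a hypothetical value, and the clock is passed in explicitly so that the example is deterministic.

```python
class TokenBucket:
    """Allow at most `rate` bytes per second, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float, now: float = 0.0):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.last = now

    def _refill(self, now: float) -> None:
        # Add tokens for the time elapsed since the last call, up to capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now

    def try_consume(self, nbytes: float, now: float) -> bool:
        """Consume tokens for `nbytes` if enough are available."""
        self._refill(now)
        if nbytes <= self.tokens:
            self.tokens -= nbytes
            return True
        return False

    def wait_time(self, nbytes: float, now: float) -> float:
        """Seconds until `nbytes` can be sent (chunks must not exceed capacity)."""
        self._refill(now)
        return max(0.0, (nbytes - self.tokens) / self.rate)

MiB = 1024 * 1024
bucket = TokenBucket(rate=MiB, capacity=MiB)
print(bucket.try_consume(MiB, now=0.0))  # True: uses the burst allowance
print(bucket.try_consume(MiB, now=0.5))  # False: only 0.5 MiB refilled
print(bucket.wait_time(MiB, now=0.5))    # 0.5
```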

Enhanced Audit Logging and Observability¶

Captures detailed audit logs for UI and API-level actions.

Logs include user activity, system events, and admin operations.

Uses structured formats compatible with centralized logging and SIEM tools.

CloudNativeAI Model Registry Support¶

Supports OCI-format storage for ML models via the CloudNativeAI format.

Unifies container and model artifact management under a single registry.

Enables version control and secure distribution of AI/ML models.

Image Preheating with Dragonfly or Kraken¶

Supports image preheating using Dragonfly or Kraken.

Frequently used images are pulled to nodes in advance.

Reduces deployment startup times for large workloads.

Dragonfly provides peer-to-peer distribution across clusters.

Differences between MSR versions¶

Mirantis Secure Registry (MSR) 4 is now based on CNCF Harbor, bringing increased stability, an expanded feature set, and a broader ecosystem of integrations. This document outlines key changes and considerations between MSR versions.

For more information, refer to the full documentation or contact Mirantis.

API¶

API and webhook behavior has been updated to reflect Harbor’s implementation. These changes support better compatibility with ecosystem tools and simplify DevOps automation.

Architecture¶

MSR 4 introduces a Kubernetes-native architecture that is more scalable and easier to operate than the legacy Swarm-based design. Legacy components such as RethinkDB and embedded services have been removed or refactored, improving performance and simplifying upgrades.

Artifact Management and CI/CD Pipelines¶

Helm Support

Helm chart support in MSR 4 is now OCI-compliant. Charts are stored and managed as OCI artifacts rather than through a dedicated Helm repository. Use OCI commands:

helm push oci:///

helm pull oci:///

The helm search repo command is no longer supported. Instead, use the Harbor UI or the forthcoming Harbor CLI.

This change improves compatibility with OCI tooling but may require minor adjustments to traditional Helm workflows.
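In Helm's OCI workflow, you push a packaged chart to a project-level `oci://` target. The chart name becomes the repository and the chart version becomes the tag. Because OCI tags cannot contain `+`, Helm stores build metadata with `_` instead. The sketch below derives these references; the host `msr.example.com`, the project `charts`, and the chart name are hypothetical placeholders.

```python
def helm_oci_targets(host: str, project: str, chart: str, version: str) -> dict:
    """Describe where `helm push` stores a chart in an OCI registry."""
    return {
        "push_target": f"oci://{host}/{project}",        # argument to helm push
        "pull_ref": f"oci://{host}/{project}/{chart}",   # argument to helm pull
        "repository": f"{project}/{chart}",              # repository in the registry
        "tag": version.replace("+", "_"),                # "+" is not valid in OCI tags
    }

refs = helm_oci_targets("msr.example.com", "charts", "payments-api", "1.4.2+build.7")
print(f"helm push payments-api-1.4.2+build.7.tgz {refs['push_target']}")
print(f"helm pull {refs['pull_ref']} --version 1.4.2+build.7")
print(refs["tag"])  # 1.4.2_build.7
```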

Promotion Policies

Promotion policies are not supported in MSR 4. You must adapt CI/CD pipelines to reflect this change.
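Without promotion policies, the promotion decision moves into the pipeline itself. The sketch below shows one possible gate. It assumes the pipeline has already obtained per-severity vulnerability counts for the image, for example from its scan report. The severity ordering and the "Medium" threshold are assumptions, and the `may_promote` helper is hypothetical. If the gate passes, the pipeline would then copy or replicate the artifact into the production project.

```python
# Assumed ordering, from least to most severe.
SEVERITY_ORDER = ["Unknown", "Negligible", "Low", "Medium", "High", "Critical"]

def may_promote(vuln_counts: dict, max_allowed: str = "Medium") -> bool:
    """Return True if no finding is more severe than `max_allowed`."""
    limit = SEVERITY_ORDER.index(max_allowed)
    for severity, count in vuln_counts.items():
        if count > 0 and SEVERITY_ORDER.index(severity) > limit:
            return False
    return True

print(may_promote({"Critical": 0, "High": 0, "Medium": 3}))  # True
print(may_promote({"Critical": 1, "Low": 12}))              # False
```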

Authentication and Access Control¶

OpenID Connect (OIDC) Authentication

MSR 4 replaces legacy SAML support with OpenID Connect (OIDC). OIDC is more suitable for modern applications due to its lightweight protocol, better mobile and microservices compatibility, and broader support across enterprise Identity Providers (IdPs) such as Azure AD, Okta, Google Identity Platform, Amazon Cognito, Ping Identity, IBM Security Verify, OneLogin, and VMware Workspace ONE.

Customers using SAML must configure an IdP that supports SAML-to-OIDC bridging (e.g., Okta, Keycloak, Azure AD).

Role-Based Access Control (RBAC)

MSR 4 removes the legacy Teams and Enzi components. You must now add users manually to projects to configure access. Group-based access is supported only through AD Groups, which requires integration with LDAP/AD and OIDC.

For more information, refer to Authentication Configuration.
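Project-level access can be pictured as a lookup from a user's role in a project to the actions that role permits. The mapping below is a simplified, illustrative subset based on upstream Harbor's project roles (Limited Guest, Guest, Developer, Maintainer, Project Admin). The action names and the `is_allowed` helper are hypothetical, and the sketch ignores public projects and system administrators. It does reflect the point above: a user who has not been added to a project has no access to it.

```python
# Simplified, illustrative subset of a project role matrix.
ROLE_PERMISSIONS = {
    "limited_guest": {"pull"},
    "guest": {"pull"},
    "developer": {"pull", "push"},
    "maintainer": {"pull", "push", "delete"},
    "project_admin": {"pull", "push", "delete", "manage_members"},
}

def is_allowed(memberships: dict, user: str, action: str) -> bool:
    """Check whether `user` may perform `action` in a single project.

    `memberships` maps user names to their role in that project; users who
    were not added to the project are denied.
    """
    role = memberships.get(user)
    return role is not None and action in ROLE_PERMISSIONS[role]

payments = {"alice": "project_admin", "bob": "developer", "carol": "guest"}
print(is_allowed(payments, "bob", "push"))    # True
print(is_allowed(payments, "carol", "push"))  # False
print(is_allowed(payments, "dave", "pull"))   # False: not a member
```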

Database¶

MSR 4 replaces the legacy RethinkDB backend with PostgreSQL, an industry-standard relational database known for stability and scalability. This transition improves data consistency, query performance, and operational resilience in high-demand environments. PostgreSQL also simplifies administrative tasks and aligns MSR 4 with enterprise database best practices.

Deployment and Infrastructure Support¶

Swarm Support and HA

Upstream Harbor does not support Swarm. You can deploy MSR 4 as a single-node instance using Docker Compose. High availability (HA) requires Kubernetes. Most customers with HA needs already have Kubernetes and can use it for production deployments.

Backup and Disaster Recovery

MSR 2 and MSR 3 included built-in backup capabilities. MSR 4 requires external backup management using Velero, an open-source tool widely used in enterprise Kubernetes environments, including Azure.

Velero supports backup and restore, but it requires a Kubernetes-based deployment. Unlike earlier versions, MSR 4 does not provide native backup functionality.
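Velero backups are usually driven by `Backup` custom resources or by the `velero backup create` command. The sketch below builds a `Backup` resource for the namespace MSR 4 runs in. It assumes that namespace is `msr` and that Velero is installed in its default `velero` namespace; both names, the backup name, and the 30-day retention are illustrative. Refer to the Backup Guide for the supported procedure.

```python
import json

def velero_backup_manifest(name: str, namespace: str, ttl: str = "720h0m0s") -> dict:
    """Build a Velero Backup custom resource covering one namespace."""
    return {
        "apiVersion": "velero.io/v1",
        "kind": "Backup",
        "metadata": {"name": name, "namespace": "velero"},  # Velero's namespace
        "spec": {
            "includedNamespaces": [namespace],  # namespace where MSR 4 runs
            "snapshotVolumes": True,            # also snapshot PersistentVolumes
            "ttl": ttl,                         # how long Velero keeps the backup
        },
    }

manifest = velero_backup_manifest("msr-nightly", "msr")
print(json.dumps(manifest, indent=2))
```

Because kubectl accepts JSON manifests, the output can be saved to a file and applied with `kubectl apply -f`. A roughly equivalent one-off command is `velero backup create msr-nightly --include-namespaces msr --ttl 720h0m0s`.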

For more information, refer to Backup Guide.

Upgrades¶

All MSR 4 upgrades are supported as in-place operations. You no longer need to use disruptive blue-green or backup-restore strategies. Administrators can apply version updates with less downtime and lower operational complexity.

For more information, refer to Upgrade Guide.

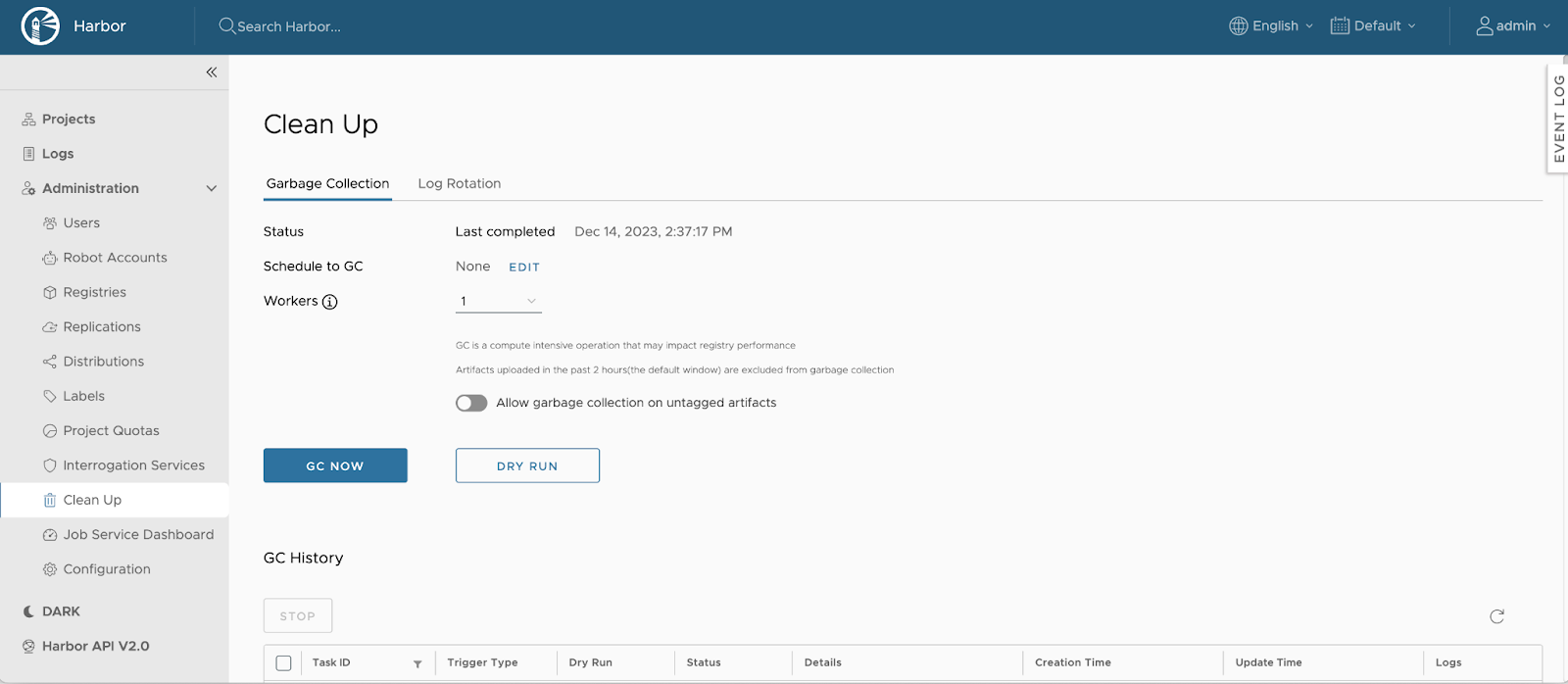

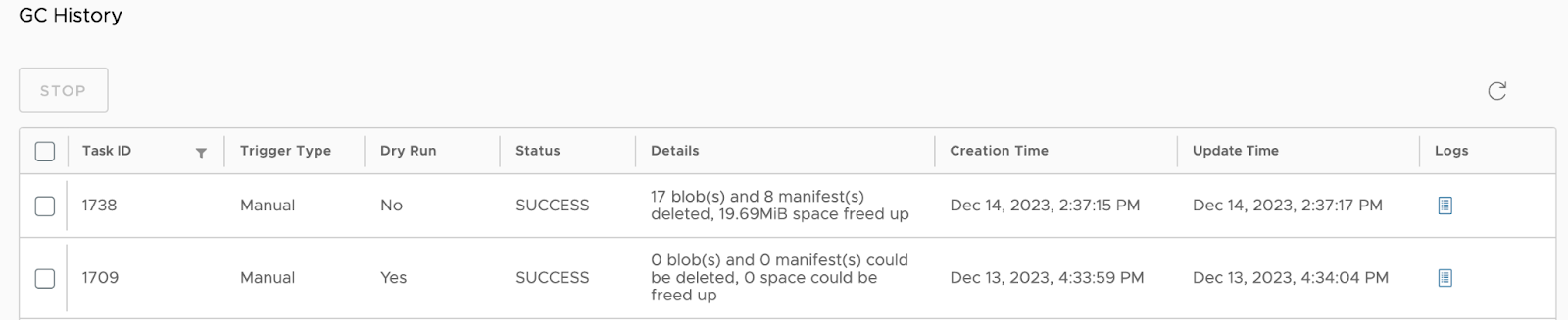

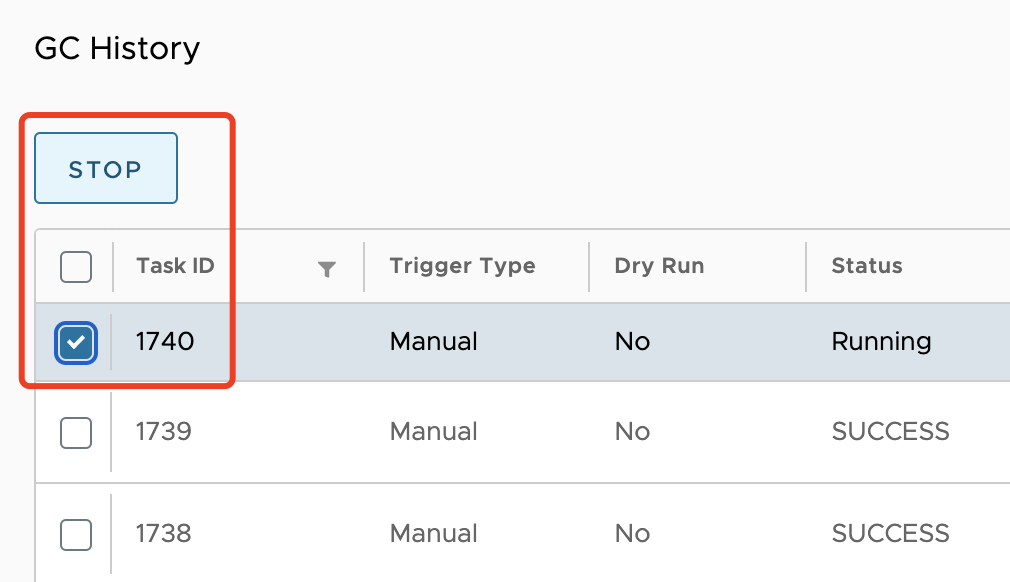

Job Runner for Background Task Execution¶

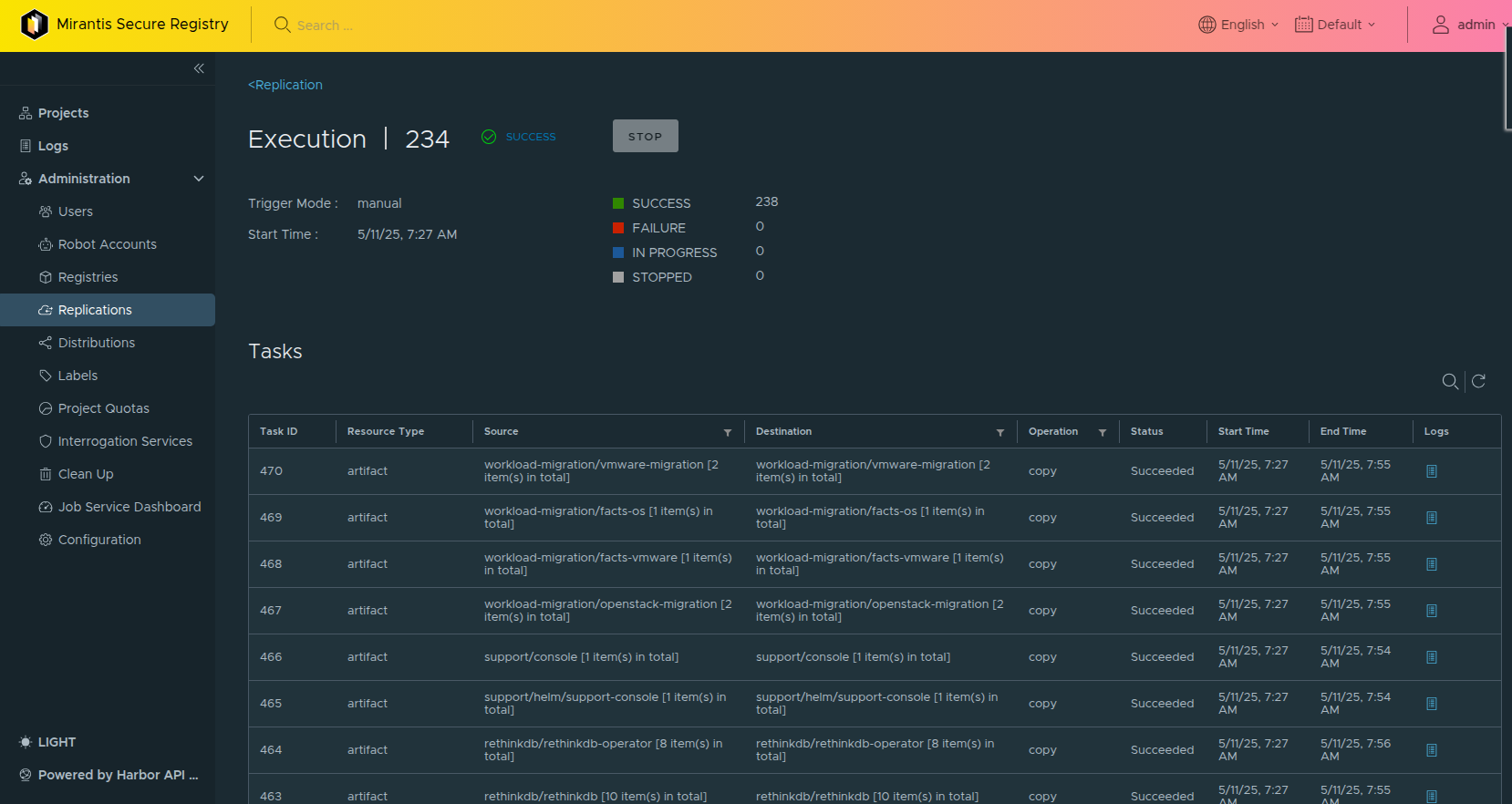

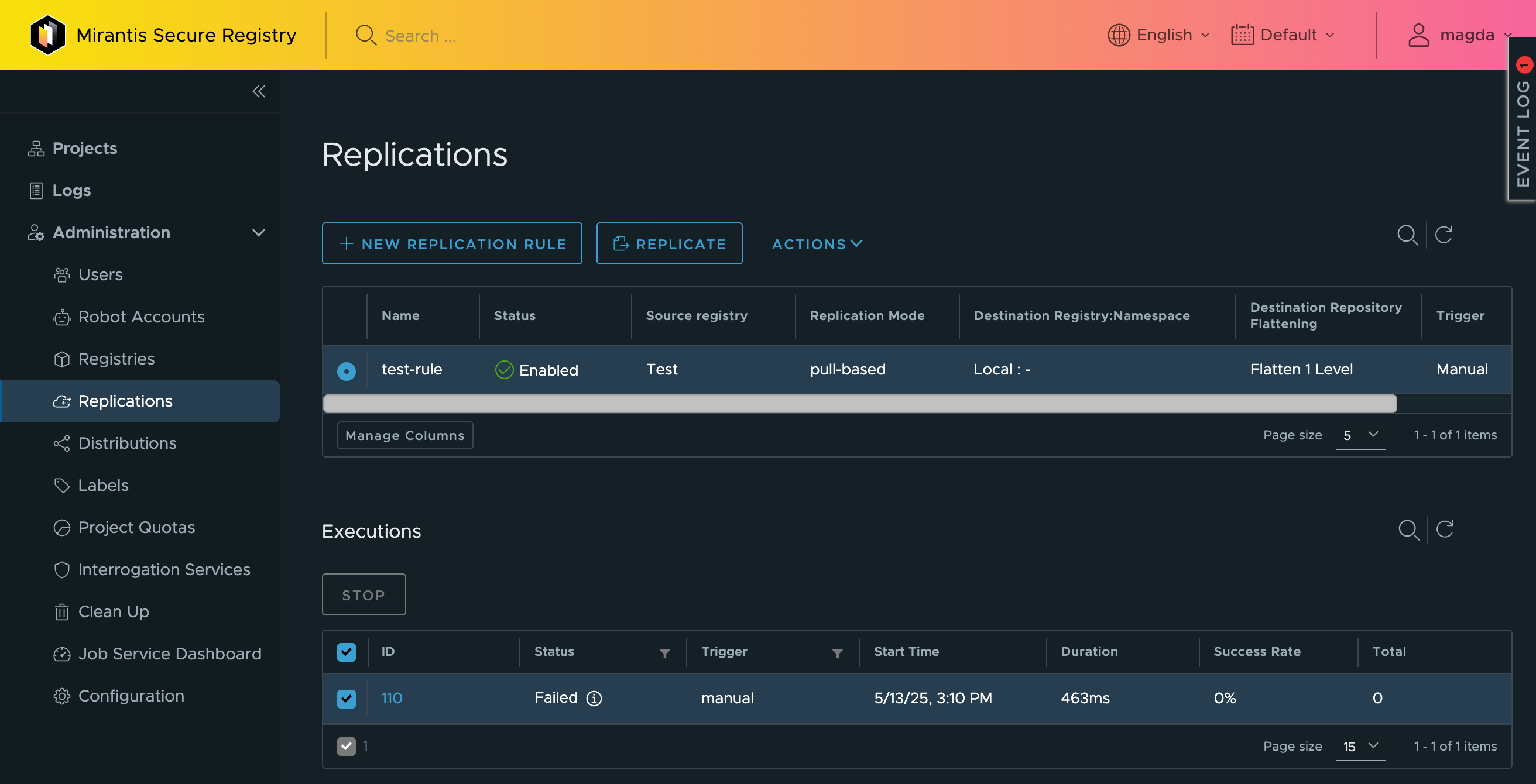

The MSR 4 job runner, inherited from Harbor’s job service, provides a modernized mechanism for executing background tasks such as garbage collection, replication, scanning, and retention.

Compared to MSR 2, the new job runner supports distributed execution, automatic retry policies, improved error reporting, and detailed job history. These features improve observability and reliability for registry operations.
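The retry and history behavior can be illustrated with a small runner that re-executes a failing task with exponential backoff and records every attempt. This is a generic sketch, not the job service's implementation. The attempt limit, delays, and the flaky task are hypothetical, and the sleep function is injectable so that the example runs instantly.

```python
import time
from typing import Callable, List, Tuple

def run_with_retry(task: Callable[[], str], max_attempts: int = 3,
                   base_delay: float = 1.0,
                   sleep: Callable[[float], None] = time.sleep,
                   ) -> Tuple[str, List[str]]:
    """Run `task`, retrying on exceptions with exponential backoff.

    Returns the final status ("Success" or "Error") and a per-attempt history.
    """
    history: List[str] = []
    for attempt in range(1, max_attempts + 1):
        try:
            result = task()
            history.append(f"attempt {attempt}: ok ({result})")
            return "Success", history
        except Exception as exc:
            # Record the failure, then back off before the next attempt.
            history.append(f"attempt {attempt}: error ({exc})")
            if attempt < max_attempts:
                sleep(base_delay * 2 ** (attempt - 1))
    return "Error", history

# A hypothetical replication task that fails twice, then succeeds.
calls = {"n": 0}
def flaky_replication() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("remote registry unavailable")
    return "12 artifacts copied"

delays = []
status, log = run_with_retry(flaky_replication, sleep=delays.append)
print(status, delays)  # Success [1.0, 2.0]
for line in log:
    print(line)
```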

Long Repository Names (256 Characters)¶

MSR 4 supports repository path lengths up to 256 characters, aligning with OCI registry specifications. This support enables deeper project namespaces and more descriptive naming, which are common in enterprise CI/CD pipelines.
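A client-side check for repository names can combine the name grammar from the OCI Distribution Specification with the 256-character limit stated above. This sketch assumes the limit applies to the full repository path, including the project name. The example names are hypothetical.

```python
import re

# Repository name grammar from the OCI Distribution Specification.
NAME_RE = re.compile(
    r"[a-z0-9]+((\.|_|__|-+)[a-z0-9]+)*(/[a-z0-9]+((\.|_|__|-+)[a-z0-9]+)*)*"
)
MAX_REPO_NAME = 256  # limit stated for MSR 4, assumed to cover the full path

def valid_repository_name(name: str) -> bool:
    return len(name) <= MAX_REPO_NAME and NAME_RE.fullmatch(name) is not None

deep = "platform/team-a/service-x/component-y/build-artifacts"
print(valid_repository_name(deep))              # True
print(valid_repository_name("Team/App"))        # False: uppercase letters
print(valid_repository_name("a/" * 200 + "b"))  # False: 401 characters
```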

Migration¶

MSR 4 supports mirroring-based migration from MSR 2 and MSR 3. The following elements transfer automatically:

Repositories

Images

Permissions

Push and Pull Mirroring Policies

Roles

Helm Charts

This migration method uses mirroring, which reduces the need for extended downtime or manual migration tools like MMT. MSR 2 or 3 can remain active alongside MSR 4, allowing teams to update pipelines while maintaining system availability.

For more information, refer to Migration Guide.
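Because MSR 2 or 3 can stay active during a mirroring-based migration, a useful check before cutting pipelines over is whether every source tag has arrived in MSR 4 with the same digest. The minimal sketch below assumes that tag-to-digest maps have already been fetched from both registries; fetching them is out of scope here, and the data is hypothetical.

```python
def migration_gaps(source: dict, target: dict) -> dict:
    """Compare tag->digest maps from the old registry and MSR 4."""
    missing = sorted(t for t in source if t not in target)
    mismatched = sorted(
        t for t in source if t in target and source[t] != target[t]
    )
    return {"missing": missing, "mismatched": mismatched}

msr3 = {"1.0": "sha256:aaa", "1.1": "sha256:bbb", "latest": "sha256:bbb"}
msr4 = {"1.0": "sha256:aaa", "latest": "sha256:aaa"}
print(migration_gaps(msr3, msr4))
# {'missing': ['1.1'], 'mismatched': ['latest']}
```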

Summary¶

Migrating to MSR 4 improves performance, simplifies upgrades, and expands feature capabilities. However, functional changes may require you to adjust authentication, promotion workflows, and backup strategies.

Review the changes outlined in this document and plan your migration accordingly.

Removed features¶

The following capabilities available in previous MSR versions are not included in MSR 4:

SAML Support: MSR 4 no longer supports SAML authentication and instead uses OpenID Connect (OIDC), a more modern and flexible standard that better aligns with cloud-native environments and improves security and scalability. Refer to OIDC Authentication for more information on configuring OIDC.

Promotion Policies: Automated promotion policies are no longer included. Customers can adapt their CI/CD pipelines to achieve similar workflows.

Swarm Support: Swarm-based HA clusters are no longer supported. Customers in Swarm environments can run MSR 4 as a single instance instead.

| Feature | MSR 4 (Harbor-Based) | MSR 2 | MSR 3 |
|---|---|---|---|
| Distribution | CNCF Harbor | Proprietary | Proprietary |
| Database | PostgreSQL | RethinkDB | RethinkDB |
| Redis (Caching) | Yes | Yes | Yes |
| Orchestration | Kubernetes-native | Docker Swarm | Docker Swarm |
| OCI Compliance | Full OCI and Helm OCI support | Limited support | Limited support |
| User Interface | Modern and intuitive | Basic | Improved |
| Quotas | Fully supported | Not available | Not available |
| Vulnerability Scanning | Trivy, Grype, Aqua, Anchore, and others | Synopsys (built-in) | Synopsys (built-in) |
| Backup Integration | Velero-based backup/restore | Manual/internal | Manual/internal |
| Promotion Policies | Not available | Available | Available |
| SAML Support | Replaced by OIDC | Available | Available |
| In-Place Upgrades | Yes | No | No |
| Image Signing | Uses Cosign for image signing and verification | Uses Docker Content Trust (DCT) based on Notary v1 | Uses Docker Content Trust (DCT) based on Notary v1 |
| Long Repository Names | 256 characters | Limited | Limited |
| SBOM Support | SPDX, CycloneDX | Not available | Not available |
| Artifact Replication | Docker Hub, AWS ECR, GCR, GitLab, Quay, etc. | Limited | Limited |
| Proxy Cache and Throttling | Full support with bandwidth control | Not available | Not available |
| Audit Logging | Extended API/UI activity tracking | Basic | Basic |
| AI Model Registry | Supported via CloudNativeAI | Not available | Not available |
| Preheat | Dragonfly-based P2P preheat | Not available | Not available |

Architecture¶

The Mirantis Secure Registry (MSR) Reference Architecture provides comprehensive technical information on MSR, including component specifics, infrastructure specifications, and networking and volume details.

Reference Architecture¶

The diagram shown below is the high-level architecture of the MSR 4 solution.

As per the diagram, the MSR 4 solution contains the following layers:

Consumers Layer

Fundamental Services Layer

Data Access Layer

MSR can also be integrated with various auxiliary services. For more information, refer to Integration.

Consumers Layer¶

MSR 4 natively supports various related clients, including the Docker CLI, the Cosign client, and OCI-compatible clients such as ORAS and Helm. In addition to these clients, MSR 4 features a web portal that enables administrators to manage and monitor all artifacts.

The MSR 4 Web Portal is a graphical user interface that helps users manage images on the Registry.

Fundamental Services Layer¶

These are the core functional services of MSR 4, including Proxy, Core, and Job services, all built on Harbor. This layer can also accommodate third-party services installed and integrated to enhance functionality, such as improved replication, advanced logging capabilities, and additional integration drivers.

Core¶

Harbor’s core service, which provides the following functions, is illustrated in the diagram below:

Function |

Description |

|---|---|

API Server |

An HTTP server that accepts REST API requests and responds by utilizing its submodules, including Authentication and Authorization, Middleware, and API Handlers, to process and manage the requests effectively. |

Authentication and Authorization |

The authentication service can secure requests, which can be powered by a local database, AD/LDAP, or OIDC. The RBAC (Role-Based Access Control) mechanism authorizes actions such as pulling or pushing images. The Token service issues tokens for each Docker push/pull command based on the user’s role within a project. If a request from a Docker client lacks a token, the Registry redirects the request to the Token service for token issuance. |

Middleware |

This component preprocesses incoming requests to determine whether they meet the required criteria before passing them to backend services for further processing. Various functions, including quota management, signature verification, vulnerability severity checks, and robot account parsing, are implemented as middleware. MSR4 supports Cosign for image signing and verification. Cosign is part of the Sigstore project. Cosign allows signing without relying on a separate, heavyweight service like Notary and supports keyless signing with OIDC identities. Harbor integrates this natively, providing better interoperability with Kubernetes-native tools and workflows. |

API Handlers |

These handle the corresponding REST API requests, primarily parsing and validating request parameters. They execute the business logic associated with the relevant API controller and generate a response, which is then written back to the client. |

API Controller |

The API controller plays a critical role in orchestrating the processing of REST API requests. It’s a key component within the system’s architecture that manages the interaction between the user’s requests and the backend services. |

Configuration Manager |

Manages all system configurations, including settings for authentication types, email configurations, certificates, and other essential parameters. |

Project Management |

Oversees the core data and associated metadata of projects, which are created to isolate and manage the artifacts effectively. |

Quota Manager |

Manages project quota settings and validates quotas whenever new pushes are made, ensuring that usage limits are followed. |

Chart Controller |

Acts as a proxy for chart-related requests to the OCI-compatible registry backend and provides various extensions to enhance the chart management experience. |

Retention Manager |

Manages tag retention policies and oversees the execution and monitoring of tag retention processes, ensuring efficient storage management. |

Content Trust |

Enhances the trust capabilities provided by the backend Cosign, facilitating a seamless content trust process for secure and verified operations. |

Replication Controller |

Manages replication policies and registry adapters while also triggering and monitoring concurrent replication processes to ensure consistency and reliability across systems. |

Scan Manager |

Oversees multiple configured scanners from different providers and generates scan summaries and reports for specified artifacts, ensuring comprehensive security and vulnerability assessments. |

Label Manager |

The Label Manager is responsible for the creation and management of labels that can be applied to projects and resources within the registry. |

P2P Manager |

This component is crucial for enhancing the efficiency of image distribution across different instances using peer-to-peer (P2P) technology. It’s role involves setting up and managing P2P preheat provider instances. These instances allow specified images to be preheated into a P2P network, facilitating faster access and distribution across various nodes. |

Notification Manager (Webhook) |

A mechanism configured in Harbor that sends artifact status changes to designated webhook endpoints. Interested parties can trigger follow-up actions by listening to related webhook events, such as HTTP POST requests or Slack notifications. |

OCI Artifact Manager |

The core component manages the entire lifecycle of OCI artifacts across the Harbor registry, ensuring efficient storage, retrieval, and management. |

Registry Driver |

Implemented as a registry client SDK, it facilitates communication with the underlying registry (currently Docker Distribution), enabling seamless interaction and data management. |

Robot Manager |

The Robot Manager manages robot accounts, which are used to automate operations through APIs without requiring interactive user login. These accounts facilitate automated workflows such as CI/CD pipelines, allowing tasks like pushing or pulling images and Helm charts, among other operations, through command-line interfaces (CLI) like Docker and Helm. |

Log Collector |

Responsible for aggregating logs from various modules into a centralized location, ensuring streamlined access and management of log data. |

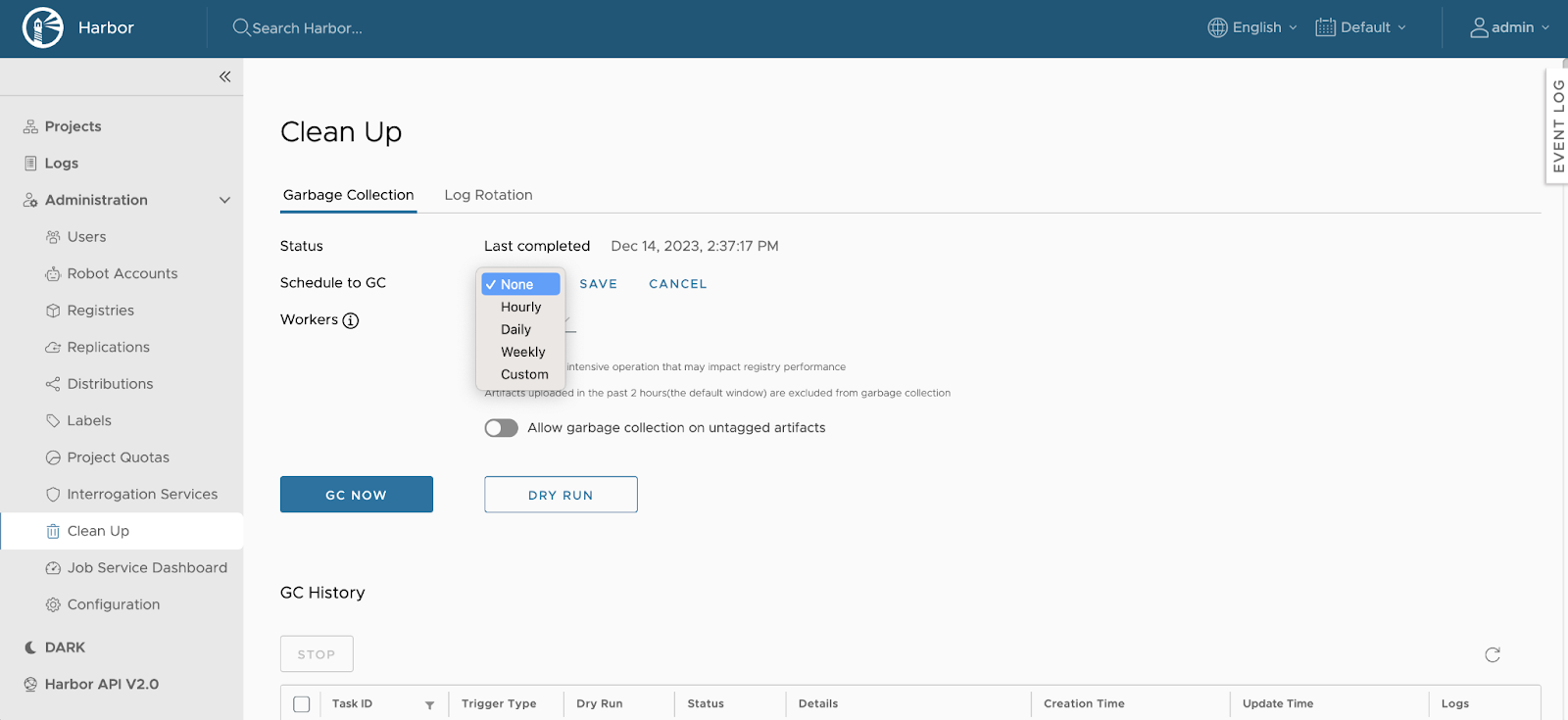

GC Controller |

Manages the online garbage collection (GC) schedule, initiating and tracking the progress of GC tasks to ensure efficient resource utilization and cleanup. |

Traffic Proxy |

The Traffic Proxy in Harbor primarily functions through its Proxy Cache feature, which allows Harbor to act as a middleman between users and external Docker registries. |
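The token flow described under Authentication and Authorization follows the Docker Registry token authentication scheme. An unauthenticated request receives an HTTP 401 response whose `WWW-Authenticate` header names the token service (`realm`), the `service`, and the requested `scope`. The client then calls that URL with its credentials to obtain a bearer token. The sketch below turns such a challenge into the token request URL. The host, realm path, and service name in the example are illustrative values.

```python
import re
from urllib.parse import urlencode

def token_request_url(www_authenticate: str) -> str:
    """Build the token-service URL from a registry's Bearer challenge."""
    scheme, _, params = www_authenticate.partition(" ")
    if scheme.lower() != "bearer":
        raise ValueError("expected a Bearer challenge")
    # key="value" pairs; quoted values may contain commas (e.g. "pull,push").
    fields = dict(re.findall(r'(\w+)="([^"]*)"', params))
    query = {k: fields[k] for k in ("service", "scope") if k in fields}
    return f"{fields['realm']}?{urlencode(query)}"

challenge = ('Bearer realm="https://msr.example.com/service/token",'
             'service="harbor-registry",'
             'scope="repository:payments/api:pull,push"')
print(token_request_url(challenge))
```

The scope string `repository:<name>:<actions>` is what the Token service evaluates against the user's project role before issuing a token.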

Job Service¶

The MSR 4 Job Service is a general-purpose job execution queue that lets other components and services submit asynchronous tasks for concurrent execution through a simple RESTful API.

Trivy¶

Trivy is a powerful and versatile security scanner with tools to detect security vulnerabilities across various targets, ensuring comprehensive scans for potential issues. However, if customers prefer to use a different scanner, MSR 4 allows such customization in the configuration.

Data Access Layer¶

The MSR 4 Data Access Layer manages data storage, retrieval, and caching within the system. It encompasses Key-Value storage for caching, an SQL database for storing metadata such as project details, user information, policies, and image data, and Data Storage, which serves as the backend for the registry.

| Data Access Layer Elements | Description |
|---|---|
| Key Value Storage | MSR 4 Key-Value (K-V) storage, powered by Redis, provides data caching functionality and temporarily persists job metadata for the Job Service. |
| Database | The MSR 4 database stores essential metadata for Harbor models, including information on projects, users, roles, replication policies, tag retention policies, scanners, charts, and images. PostgreSQL is used as the database solution. |
| Data Storage | Multiple storage options are supported for data persistence, serving as backend storage for the OCI-compatible registry. |

Integration¶

Functional services can be integrated with various auxiliary services, including publicly available providers and locally hosted corporate services.

Identity providers¶

Identity providers are centralized Identity and Access Management solutions, such as AD/LDAP or OIDC, that can be seamlessly integrated with MSR 4.

Metrics Observability¶

MSR 4 can be integrated with Prometheus to centralize the collection and management of metrics.

Scan providers¶

MSR 4 supports integration with multiple scanning providers. As mentioned in the core services, Trivy is used by default.

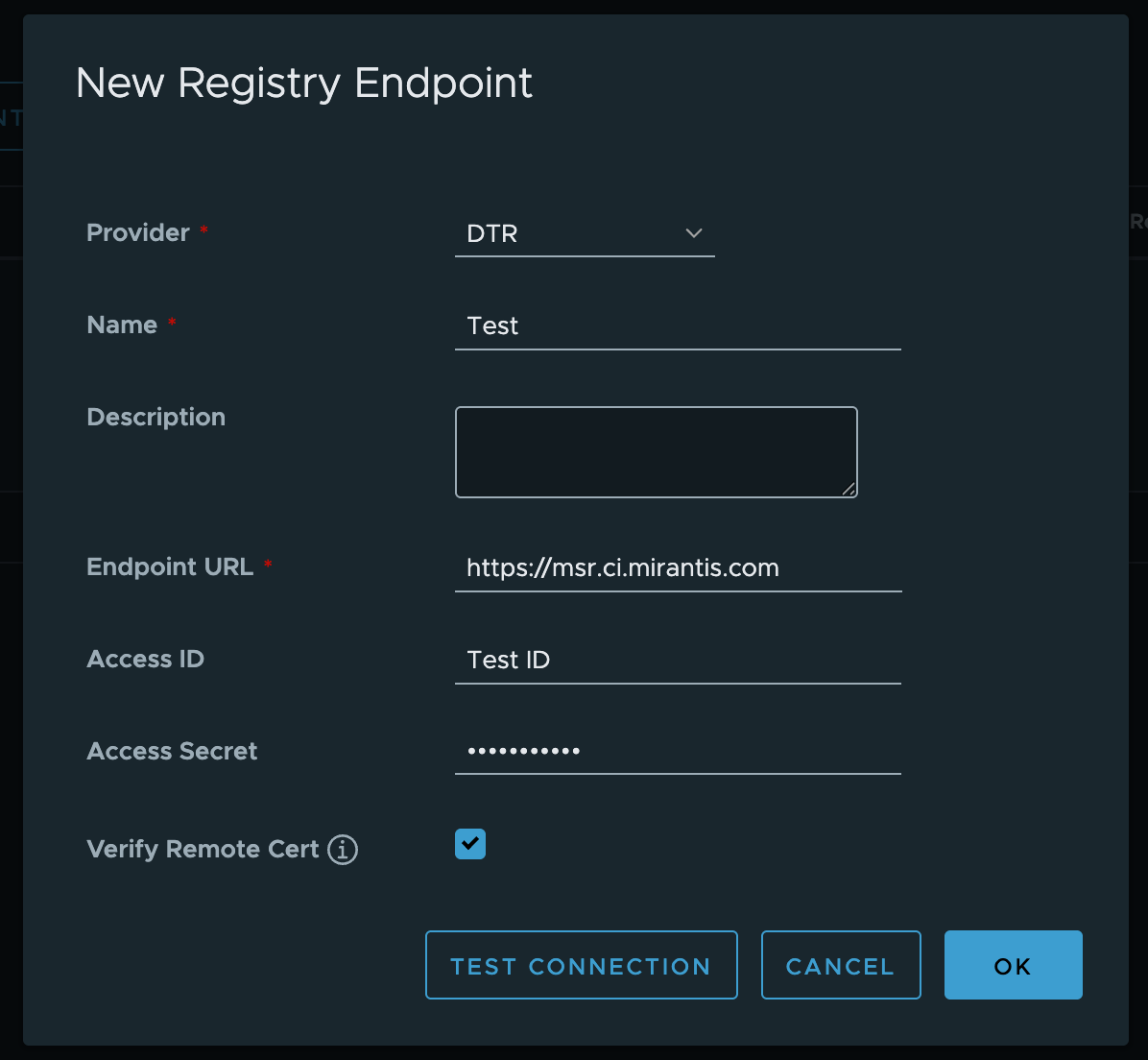

Registry providers¶

Multiple providers can support image storage in MSR 4. By default, MSR 4 uses an internal registry that stores data on Data Storage, as outlined in the Data Access Layer. Alternatively, various registry providers can be enabled, including:

Distribution (Docker Registry)

Docker Hub

Huawei SWR

Amazon ECR

Google GCR

Azure ACR

Ali ACR

Helm Hub

Quay

Artifactory

GitLab Registry

Once a provider is attached, MSR 4 can use it as a replication endpoint for pushing and pulling images. For more information about replication and backend registry configuration, refer to Configuring Replication.

Deployment¶

MSR 4 is deployed using Helm charts and supports two primary deployment options to address different operational and scalability needs:

All-in-One on a Single Node

Multi-Node High Availability (HA)

Explore the sections below to learn more about each deployment model and how to get started.

Deployment Options¶

MSR 4 offers two primary deployment options, each with the flexibility to accommodate various modifications. For instance, in the all-in-one deployment, local storage can be replaced with shared storage, and databases or key-value stores can be made remote. This adaptability allows MSR 4 to support various configurations and deployment scenarios.

However, to establish a standardized approach, we propose two primary deployment options tailored for specific use cases:

All-in-One on a Single Node – Ideal for testing and development

Multi-Node HA Deployment – Designed for production environments

Since MSR 4 operates as a Kubernetes workload, all of its core services run as Kubernetes pods. As a result, we consider one worker node the minimum footprint for an all-in-one MSR 4 deployment, and three worker nodes the minimum footprint for an HA deployment. Master nodes are not included in this count, giving you the flexibility to design and deploy the underlying Kubernetes cluster according to your needs.

All-in-one Deployment¶

The All-in-One Deployment consolidates all services onto a single worker node, making it the most straightforward way to deploy MSR 4. In this setup, all services run as single-instance components without high availability (HA) or replication. This approach is not suitable for production use, but it is useful for testing or a proof of concept. For installation guidance, refer to Install MSR on a Single Host using Docker Compose in the MSR 4 documentation. Alternatively, you can use the Helm chart approach described for the HA deployment, setting the replica count of each service to 1 in the chart values.

While this deployment effectively showcases MSR 4’s capabilities and functionality, it is not intended for production use due to its lack of redundancy. Instead, it is a lightweight option suitable for demonstrations, training, testing, and development.

The following diagram illustrates a single worker node running all MSR 4-related services.

There are two methods for installing the all-in-one MSR 4:

Using Kubernetes Helm

Using Docker Compose

Each approach has its own advantages. The Kubernetes method is similar to High Availability (HA) mode and allows for easy scaling from a single-node to a multi-node deployment. On the other hand, Docker Compose is ideal for those not using Kubernetes in their infrastructure, enabling them to leverage MSR 4’s capabilities by running all services in containers.

High Availability Deployment¶

The Highly Available (HA) Deployment of MSR 4 is distributed across three or more worker nodes, ensuring resilience and reliability through multiple service instances. For installation guidance, refer to Install MSR with High Availability.

A key aspect of this deployment is that Job Service and Registry utilize a shared volume, which should be backed by a non-local, shared file system or external storage cluster, such as Ceph (CephFS). Additionally, Redis and PostgreSQL run in a replicated mode within this example, co-hosted on the same worker nodes as MSR 4’s core services. However, it is also possible to integrate existing corporate Redis and PostgreSQL instances outside of these nodes, leveraging an enterprise-grade key-value store and database infrastructure.

The following diagram illustrates the service placement in an HA deployment. Dashed boxes indicate potential additional replicas for certain services. As a reference, we recommend deploying at least two instances of Portal, Core, Job Service, Registry, and Trivy—though this number can be adjusted based on specific requirements, workload, and use cases. These services are not quorum-based.
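Replica counts for the non-quorum services are set through Helm chart values. The sketch below generates a values override that uses one replica for an all-in-one deployment and at least two replicas for HA. It assumes the `<component>.replicas` keys used by the upstream Harbor Helm chart (`portal`, `core`, `jobservice`, `registry`, `trivy`); verify these keys against the MSR 4 chart's `values.yaml`. The `replica_overrides` helper is hypothetical.

```python
import json

# Component keys as used by the upstream Harbor Helm chart (assumption).
STATELESS_COMPONENTS = ["portal", "core", "jobservice", "registry", "trivy"]

def replica_overrides(ha: bool, replicas: int = 2) -> dict:
    """Return Helm values that set the replica count of non-quorum services."""
    count = replicas if ha else 1
    if ha and count < 2:
        raise ValueError("HA deployments should run at least two replicas")
    return {name: {"replicas": count} for name in STATELESS_COMPONENTS}

# JSON is valid YAML, so the output can be saved and passed to `helm install -f`.
print(json.dumps(replica_overrides(ha=True), indent=2))
```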

While the number of replicas for these services can scale as needed, Redis and PostgreSQL must always have a minimum of three replicas to ensure proper replication and fault tolerance. This requirement should be carefully considered when planning a production deployment. Redis and PostgreSQL are quorum-based services, so the number of replicas should always be odd: 3, 5, and so on in an HA deployment, or a single instance in an All-in-One deployment.
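The preference for odd replica counts follows from majority-quorum arithmetic. A cluster of n members needs ⌊n/2⌋ + 1 of them to agree, so it tolerates n − (⌊n/2⌋ + 1) failures. The sketch below tabulates this relationship. The exact failover mechanism depends on how Redis and PostgreSQL are configured, for example through Redis Sentinel or pgpool.

```python
def quorum(replicas: int) -> int:
    """Smallest majority of `replicas` members."""
    return replicas // 2 + 1

def tolerated_failures(replicas: int) -> int:
    """Members that can fail while a majority is still reachable."""
    return replicas - quorum(replicas)

for n in range(1, 7):
    print(f"replicas={n} quorum={quorum(n)} tolerated_failures={tolerated_failures(n)}")
```

Going from three to four replicas raises the quorum from 2 to 3 but still tolerates only one failure. Going to five tolerates two failures. An even member therefore adds cost without adding fault tolerance.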

The reference HA deployment of an MSR 4 is presented in the following diagram.

Components Deployment¶

As previously emphasized, MSR 4 components operate as a Kubernetes workload. This section provides a reference visualization of the resources involved in deploying each component. Additionally, it outlines how service deployment differs between a single-node and a highly available (HA) setup, highlighting key structural changes in each approach.

MSR 4 deployment includes the following components:

Web Portal

Proxy (API Routing)

Core

Job Service

Registry

Trivy

K-V storage

SQL Database

The relationships between these components are illustrated in the following diagram:

Web Portal¶

The Web Portal is a graphical user interface designed to help users manage images within the Registry. To ensure scalability and redundancy, it is deployed as a ReplicaSet, with a single instance in an All-in-One deployment and multiple instances in a Highly Available (HA) setup. These replicas are not quorum-based, meaning there are no limits on the number of replicas. The instance count should be determined by your specific use case and load requirements. To ensure high availability, it is recommended to have at least two replicas.

Proxy (API Routing)¶

An API proxy, specifically NGINX, runs as a ReplicaSet. It can operate with a single instance in All-in-One deployments or scale with multiple instances in an HA deployment. The proxy uses a ConfigMap to store the nginx.conf and a Secret to provide and manage TLS certificates.

If services are exposed through an Ingress, the NGINX proxy is not used. The Kubernetes Ingress controller, which is often NGINX-based, handles tasks such as load balancing and SSL termination, so all of the API routing proxy's functionality is handed over to the Ingress.

Core¶

The Core is a monolithic application that encompasses multiple controller and manager functions; the Core section under Fundamental Services Layer describes them in detail. It is deployed as a ReplicaSet, with a single instance for All-in-One deployments and multiple replicas for HA deployments. These replicas are not quorum-based, meaning there are no limits on the number of replicas. The instance count should be determined by your specific use case and load requirements. To ensure high availability, it is recommended to have at least two replicas. The Core uses a ConfigMap to store non-sensitive configuration and a Secret to store sensitive parameters, such as passwords.

Job Service¶

The Harbor Job Service runs as a ReplicaSet, with a single replica in All-in-One deployments and multiple replicas in HA deployments. These replicas are not quorum-based, meaning there are no limits on the number of replicas. The instance count should be determined by your specific use case and load requirements. To ensure high availability, it is recommended to have at least two replicas. It utilizes a PVC to store job-related data, which can be configured using local or remote shared storage. Please refer to the separate Storage section for more details on storage options. The Job Service also uses a ConfigMap to retrieve the config.yaml and a Secret to access sensitive parameters, such as keys and passwords.

Registry¶

The Harbor Registry is deployed as a ReplicaSet, running as a single instance in All-in-One deployments and supporting multiple replicas in HA mode. These replicas are not quorum-based, meaning there are no limits on the number of replicas. The instance count should be determined by your specific use case and load requirements. To ensure high availability, it is recommended to have at least two replicas. Like the Job Service, it utilizes a PVC to store registry data, using either local or shared backend storage. For more details on storage options, please refer to the Storage section. The Registry workload relies on a ConfigMap to store the config.yaml and uses Secrets to manage sensitive parameters, such as keys and passwords.

Trivy¶

The Trivy service is deployed as a StatefulSet and utilizes a PVC, with a separate volume for each Trivy instance. The number of instances can range from a single instance in All-in-One deployments to multiple instances in HA deployments. These replicas are not quorum-based, meaning there are no limits on the number of replicas. The instance count should be determined by your specific use case and load requirements. To ensure high availability, it is recommended to have at least two replicas. Trivy also uses a Secret to store connection details for the Key-Value store.

K-V storage¶

Unlike other fundamental services in MSR 4, K-V storage is part of the Data Access Layer. It can either be installed as a simplified, single-instance setup using the same Harbor Helm Chart suitable for All-in-One deployments or deployed in HA mode using a separate Redis Helm Chart. Alternatively, an individual instance of K-V storage can be used and integrated into MSR 4 as an independent storage service. In this case, it is not considered part of the deployment footprint but rather a dependency managed by a dedicated corporate team. While a remote service is an option, it is not part of the reference architecture and is more suited for specific customization in particular deployment scenarios.

Single Node Deployment Redis¶

This is a simplified, single-instance Redis deployment that runs as a StatefulSet and utilizes a PVC for storage.

HA Deployment Redis¶

Unlike the previous single-instance deployment, this setup is more robust and comprehensive. It involves deploying K-V Redis storage in replication mode, distributed across multiple worker nodes. This configuration includes two types of pods: replicas and master. Each pod uses a PVC for storage and a ConfigMap to store scripts and configuration files, while sensitive data, such as passwords, is securely stored in a Secret.

Redis is a quorum-based service, so the number of replicas should always be odd—specifically 1, 3, 5, and so on.
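The odd-number rule follows from majority voting: with 3 members a majority is 2, so one member can fail; adding a 4th raises the majority to 3 without tolerating any additional failure. A hypothetical helper that checks a planned replica count against this rule:

```shell
#!/usr/bin/env bash
# Check a replica count against the odd-number rule for quorum-based
# services. Returns 0 for 1, 3, 5, ...; returns 1 for even, zero,
# or non-numeric input.
is_valid_quorum_size() {
  local n="$1"
  [[ "$n" =~ ^[0-9]+$ ]] || return 1          # reject empty or non-numeric input
  (( 10#$n > 0 && 10#$n % 2 == 1 ))           # force base 10 so "08" is not octal
}

for n in 1 2 3 4 5; do
  if is_valid_quorum_size "$n"; then
    echo "$n: ok"
  else
    echo "$n: choose an odd number"
  fi
done
```

The same check applies to the PostgreSQL replica count described later in this section.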

SQL Database¶

Like K-V Storage, the SQL Database service is not part of the Fundamental Services but is included in the Data Access Layer. It can be installed as a simplified, single-instance setup using the same Harbor Helm Chart, making it suitable for All-in-One deployments, or deployed in HA mode using a separate PostgreSQL Helm Chart. Alternatively, a separate SQL Database instance can be integrated into MSR 4 as an independent storage service. In this case, it is considered a dependency rather than part of the deployment footprint and is managed by a dedicated corporate team. While a remote service is an option, it is not part of the reference architecture and is more suited for custom deployments based on specific needs.
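When a separately managed database is used, the upstream Harbor Helm chart points to it through `database.type: external` and the `database.external.*` keys. A minimal sketch, assuming MSR 4 keeps those names; the host, user, and database names are placeholders, and the password is omitted on purpose.

```shell
#!/usr/bin/env bash
# Point Harbor at a separately managed PostgreSQL instance.
# ASSUMPTIONS: upstream Harbor chart keys under `database.external`;
# host, username, and coreDatabase values are placeholders.
DB_VALUES="${DB_VALUES:-external-db-values.yaml}"

cat > "$DB_VALUES" <<'EOF'
database:
  type: external
  external:
    host: "postgres.corp.example"
    port: "5432"
    username: "harbor"
    coreDatabase: "registry"
    # verify-full makes the client check the server certificate and host name.
    sslmode: "verify-full"
EOF
```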

Single Node Deployment¶

This is a streamlined, single-instance PostgreSQL deployment that runs as a StatefulSet and utilizes a PVC for storage.

HA Deployment¶

Unlike the previous single-node deployment, this setup is more robust and comprehensive. It involves deploying PostgreSQL in replication mode across multiple worker nodes. The configuration includes two types of pods: replicas, managed as a StatefulSet, and pgpool, running as a ReplicaSet. Each pod uses a PVC for storage and a ConfigMap to store scripts and configuration files, while sensitive data, such as passwords, is securely stored in a Secret.

Pgpool operates as an efficient middleware positioned between PostgreSQL servers and PostgreSQL database clients. It maintains and reuses connections to PostgreSQL servers. When a new connection request with identical properties (such as username, database, and protocol version) is made, Pgpool reuses the existing connection. This minimizes connection overhead and significantly improves the system’s overall throughput.
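The reuse rule can be illustrated with a toy model. This is not pgpool code: it only shows the idea of caching a backend connection under the key (user, database, protocol version) and handing it back when an identical request arrives. All names are hypothetical, and the script needs bash 4 or later for associative arrays.

```shell
#!/usr/bin/env bash
# Toy model of pgpool-style connection reuse (not pgpool itself).
declare -A POOL=()   # "user|database|protocol" -> connection id
NEXT_CONN_ID=1

get_connection() {
  local key="$1|$2|$3"   # user|database|protocol version
  if [[ -n "${POOL[$key]:-}" ]]; then
    LAST_RESULT="reuse ${POOL[$key]}"          # identical properties: reuse
  else
    POOL[$key]="conn-${NEXT_CONN_ID}"          # new properties: open a backend
    NEXT_CONN_ID=$((NEXT_CONN_ID + 1))
    LAST_RESULT="open ${POOL[$key]}"
  fi
  echo "$LAST_RESULT"
}

get_connection harbor registry 3.0    # open conn-1
get_connection harbor registry 3.0    # reuse conn-1
get_connection harbor other_db 3.0    # open conn-2 (different database)
```

The function is called directly rather than through `$(...)` so that updates to the pool persist in the current shell.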

PostgreSQL is a quorum-based service, so the number of replicas should always be odd—specifically 1, 3, 5, and so on.

Deployment Resources¶

MSR 4 is deployed using Helm charts. The resources described in the following tables are expected to be present in the environment after deployment.

Harbor Helm Chart¶

Please note that the type and number of resources may vary based on the deployment configuration and the inclusion of additional services.

Secret¶

| Name | Namespace | Description |
|---|---|---|
| msr-4-harbor-core | default | Stores data needed for integration with other fundamental and data storage services, as well as API-related keys, certificates, and passwords for DB integration. |
| msr-4-harbor-database | default | Contains a DB password. |
| msr-4-harbor-jobservice | default | Contains a job service secret and a registry credential password. |
| msr-4-harbor-nginx | default | Contains TLS certs for the API proxy. |
| msr-4-harbor-registry | default | Contains a registry secret and Redis password. |
| msr-4-harbor-registry-htpasswd | default | Contains the registry password. |
| msr-4-harbor-registryctl | default | Contains registry-controller sensitive configuration. |
| msr-4-harbor-trivy | default | Contains Trivy reference to Redis K-V storage. |

ConfigMap¶

| Name | Namespace | Description |
|---|---|---|
| msr-4-harbor-core | default | Stores configuration for core services, defining integrations, databases, URLs, ports, and other non-sensitive settings (excluding passwords, keys, and certs). |
| msr-4-harbor-jobservice-env | default | Job service configuration parameters such as URLs, ports, users, proxy configuration, etc. |
| msr-4-harbor-jobservice | default | A job service config.yaml. |
| msr-4-harbor-nginx | default | NGINX configuration. |
| msr-4-harbor-portal | default | Portal virtual host HTTP config. |
| msr-4-harbor-registry | default | Registry config.yaml. |
| msr-4-harbor-registryctl | default | Registry controller configuration. |

PersistentVolumeClaim¶

| Name | Namespace | Description |
|---|---|---|
| msr-4-harbor-jobservice | default | PVC for job service. |
| msr-4-harbor-registry | default | PVC for registry. |

Service¶

| Name | Namespace | Description |
|---|---|---|
| msr-4-harbor-core | default | Service for Core. |
| msr-4-harbor-database | default | Service for DB. |
| msr-4-harbor-jobservice | default | Service for Job Service. |
| harbor | default | Service for Harbor. |
| msr-4-harbor-portal | default | Service for Portal. |
| msr-4-harbor-redis | default | Service for K-V storage (Redis). |
| msr-4-harbor-registry | default | Service for Registry. |
| msr-4-harbor-trivy | default | Service for Trivy. |

Deployment¶

| Name | Namespace | Description |
|---|---|---|
| msr-4-harbor-core | default | A Deployment configuration for Core. |
| msr-4-harbor-jobservice | default | A Deployment configuration for Job Service. |
| msr-4-harbor-nginx | default | A Deployment configuration for Proxy. |
| msr-4-harbor-portal | default | A Deployment configuration for Portal. |
| msr-4-harbor-registry | default | A Deployment configuration for Registry. |

ReplicaSet¶

| Name | Namespace | Description |
|---|---|---|
| msr-4-harbor-core | default | A ReplicaSet configuration for Core. |
| msr-4-harbor-jobservice | default | A ReplicaSet configuration for Job Service. |
| msr-4-harbor-nginx | default | A ReplicaSet configuration for Proxy. |
| msr-4-harbor-portal | default | A ReplicaSet configuration for Portal. |
| msr-4-harbor-registry | default | A ReplicaSet configuration for Registry. |

StatefulSet¶

| Name | Namespace | Description |
|---|---|---|
| msr-4-harbor-database | default | A StatefulSet configuration for DB. |
| msr-4-harbor-redis | default | A StatefulSet configuration for K-V storage (Redis). |
| msr-4-harbor-trivy | default | A StatefulSet configuration for Trivy. |

Redis Helm Chart¶

For a Highly Available (HA) deployment, a dedicated Redis Helm chart can be used to deploy a Redis instance, ensuring distribution across nodes for replication and enhanced reliability.

NetworkPolicy¶

| Name | Namespace | Description |
|---|---|---|
| redis | default | A NetworkPolicy for Redis that declares an ingress port for exposure. |

PodDisruptionBudget¶

| Name | Namespace | Description |
|---|---|---|
| redis-master | default | Helps maintain the availability of applications during voluntary disruptions, such as node drains during maintenance or cluster upgrades. It specifies the minimum number or percentage of redis-master pods that must remain available during a disruption. |
| redis-replicas | default | The same as redis-master, for redis-replicas pods. |

ServiceAccount¶

| Name | Namespace | Description |
|---|---|---|
| redis-master | default | Service account configuration for redis-master. |
| redis-replicas | default | Service account configuration for redis-replicas. |

Secrets¶

| Name | Namespace | Description |
|---|---|---|
| redis | default | Contains the Redis password. |

ConfigMaps¶

| Name | Namespace | Description |
|---|---|---|
| redis-configuration | default | master.conf, redis.conf, and replica.conf. |
| redis-health | default | Multiple .sh files with health checks. |
| redis-scripts | default | start-master.sh and start-replica.sh. |

Services¶

| Name | Namespace | Description |
|---|---|---|
| redis-headless | default | Service for redis-headless. |
| redis-master | default | Service for redis-master. |
| redis-replicas | default | Service for redis-replicas. |

StatefulSet¶

| Name | Namespace | Description |
|---|---|---|
| redis-master | default | StatefulSet configuration for redis-master. |
| redis-replicas | default | StatefulSet configuration for redis-replicas. |

PostgreSQL Helm Chart¶


For a Highly Available (HA) deployment, a dedicated PostgreSQL Helm chart can be used to deploy a PostgreSQL instance, ensuring distribution across nodes for replication and enhanced reliability.

NetworkPolicy¶

| Name | Namespace | Description |
|---|---|---|
| postgresql-ha-pgpool | default | A NetworkPolicy for PostgreSQL pgpool that declares an ingress port for exposure. |
| postgresql-ha-postgresql | default | A NetworkPolicy for PostgreSQL that declares an ingress port for exposure. |

PodDisruptionBudget¶

| Name | Namespace | Description |
|---|---|---|
| postgresql-ha-pgpool | default | Helps maintain the availability of applications during voluntary disruptions, such as node drains during maintenance or cluster upgrades. It specifies the minimum number or percentage of pgpool pods that must remain available during a disruption. |
| postgresql-ha-postgresql | default | The same as postgresql-ha-pgpool, for PostgreSQL replica pods. |
| postgresql-ha-postgresql-witness | default | The same as postgresql-ha-pgpool, for PostgreSQL witness pods. |

ServiceAccount¶

| Name | Namespace | Description |
|---|---|---|
| postgresql-ha | default | A Service Account configuration for PostgreSQL. |

Secrets¶

| Name | Namespace | Description |
|---|---|---|
| postgresql-ha-pgpool | default | Contains sensitive credentials, such as passwords, for PostgreSQL pgpool. |
| postgresql-ha-postgresql | default | Contains sensitive credentials, such as passwords, for the PostgreSQL replicas. |

ConfigMaps¶

| Name | Namespace | Description |
|---|---|---|
| postgresql-ha-postgresql-hooks-scripts | default | pre-stop.sh and readiness-probe.sh. |

Services¶

| Name | Namespace | Description |
|---|---|---|
| postgresql-ha-pgpool | default | A Service configuration for PostgreSQL pgpool. |
| postgresql-ha-postgresql-headless | default | A Service configuration for PostgreSQL headless. |
| postgresql-ha-postgresql | default | A Service configuration for PostgreSQL replicas. |

Deployments¶

| Name | Namespace | Description |
|---|---|---|
| postgresql-ha-pgpool | default | A Deployment configuration for PostgreSQL pgpool. |

StatefulSet¶

| Name | Namespace | Description |
|---|---|---|
| postgresql-ha-postgresql | default | A StatefulSet configuration for PostgreSQL replicas. |

System requirements¶

To learn more about Mirantis Secure Registry (MSR) system requirements, go to Prerequisites in the Installation Guide.

Storage¶

Storage is a critical component of the MSR 4 deployment, serving multiple purposes, such as temporary job-related data and image storage. It can be configured as local storage on the worker nodes or as shared storage, utilizing a remote standalone storage cluster like Ceph, or by attaching a dedicated storage application license.

Local¶

Local storage is used for non-critical data that can be safely discarded during development, testing, or when service instances are reinitialized. This setup is primarily applicable in All-in-One deployments or when storage redundancy is provided through hardware solutions, such as RAID arrays on the worker nodes.

Volumes¶

Refer to the Volume access type outlined in the installation section. Volumes used in All-in-One deployments can use the ReadWriteOnce access mode, while volumes backed by shared storage may be configured with the ReadWriteMany access mode. This allows the same volume to be accessed by multiple replicas of services such as Job Service or Registry.
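The access-mode guidance above reduces to a rule of thumb, sketched here as a hypothetical helper. It is a simplification: ReadWriteOnce restricts a volume to a single node, so it can still serve several pods scheduled on that same node, but replicas spread across workers need ReadWriteMany on shared storage.

```shell
#!/usr/bin/env bash
# Suggest a PVC access mode from a service's replica count.
# One replica: ReadWriteOnce is enough (local storage, All-in-One).
# Several replicas across nodes: ReadWriteMany on shared storage.
access_mode_for_replicas() {
  local replicas="$1"
  if (( replicas <= 1 )); then
    echo "ReadWriteOnce"
  else
    echo "ReadWriteMany"
  fi
}

access_mode_for_replicas 1   # ReadWriteOnce
access_mode_for_replicas 3   # ReadWriteMany
```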

External¶

Please be aware that Harbor also offers the capability to integrate with external object storage solutions, allowing data to be stored directly on these platforms without the need for configuring Volumes and Persistent Volume Claims (PVCs). This integration remains optional.
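In the upstream Harbor Helm chart, external object storage is selected under `persistence.imageChartStorage`. A minimal sketch for an S3-compatible bucket follows, assuming MSR 4 keeps these upstream key names. The bucket and region are placeholders, and credentials are deliberately left out so they can be supplied separately rather than committed to a values file.

```shell
#!/usr/bin/env bash
# Write a values override that stores images in an S3-compatible bucket
# instead of on a PVC.
# ASSUMPTIONS: upstream Harbor chart keys under persistence.imageChartStorage;
# bucket and region are placeholders; credentials are intentionally omitted.
S3_VALUES="${S3_VALUES:-s3-storage-values.yaml}"

cat > "$S3_VALUES" <<'EOF'
persistence:
  imageChartStorage:
    type: s3
    s3:
      region: us-east-1
      bucket: msr-images-example
EOF
```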

Networking¶

MSR 4 is deployed as a workload within a Kubernetes (K8s) cluster and offers multiple deployment options. The diagram below illustrates the network communication between the MSR 4 components.

Network communication between the MSR 4 components varies depending on the deployment configuration.

In a closed deployment, where all components—including Data Layer services—are deployed within the same Kubernetes cluster (either as an all-in-one or high-availability setup), communication occurs over the internal workload network. These components interact through Kubernetes Service resources, with the only externally exposed endpoints belonging to MSR 4. To ensure security, these endpoints must be protected with proper firewall configurations and TLS encryption.

For deployments where Data Layer components are remote, as depicted in the diagram, communication must be secured between the Cluster IP network used by Kubernetes worker nodes and the external endpoints of the key-value (K-V) and database (DB) storage systems.

For a comprehensive list of ports requiring security configurations, refer to Network requirements.

Security¶

Securing MSR 4 requires a comprehensive approach that encompasses all its components, including Harbor, Redis, and PostgreSQL running on Kubernetes, along with additional services such as Trivy and others if enabled. Ensuring the integrity, confidentiality, and availability of data and services is paramount.

This section provides guidance on securing both individual system components and the broader Kubernetes environment.

By implementing security best practices for Kubernetes, Harbor, Redis, and PostgreSQL, you can enhance the security, reliability, and resilience of MSR 4 against potential threats. Continuous monitoring and proactive assessment of your security posture are essential to staying ahead of emerging risks.

Kubernetes Security¶

Kubernetes serves as the foundation for MSR 4, making its security a top priority. Adhering to best practices and maintaining vigilance over the underlying infrastructure that supports MSR 4 is essential.

Since MSR 4 is deployed as a workload within Kubernetes, the following sections outline best practices and recommendations for strengthening the security of the underlying infrastructure.

Access Control¶

To ensure security, the MSR 4 workload should be isolated from other services within the cluster. Ideally, it should be the only workload running on a dedicated Kubernetes cluster. However, if it is co-hosted with other applications, strict access control becomes essential.

A well-configured Role-Based Access Control (RBAC) system is crucial in such cases. Kubernetes RBAC should be enabled and carefully configured to enforce the principle of least privilege, ensuring that each component has only the necessary permissions.

Additionally, using dedicated service accounts for each MSR 4 component, such as Harbor, Redis, and PostgreSQL, helps minimize the attack surface and prevent unnecessary cross-service access.

Securing the Kubernetes platform itself is equally important. The API server must be protected against unauthorized access by implementing strong authentication mechanisms, such as certificate-based or token-based authentication. These measures help safeguard MSR 4 and its infrastructure from potential threats.

Network Policies¶

Defining proper Network Policies is essential to restrict traffic between pods and ensure that only authorized components, such as Redis and PostgreSQL, can communicate with each other and with Harbor.

As outlined in the deployment resources, specific NetworkPolicies are provided for Redis and PostgreSQL when they are deployed separately from the Harbor core. The same level of attention must be given to securing remote data storage solutions if they are used, ensuring that communication remains controlled and protected from unauthorized access.

Secrets Management¶

Kubernetes Secrets store sensitive information such as passwords and tokens, making their protection a critical aspect of security.

Enabling encryption of secrets at rest using Kubernetes’ built-in encryption feature ensures that even if an attacker gains access to the backend storage, they cannot easily retrieve the secrets’ contents.
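A minimal sketch of the built-in mechanism: an `EncryptionConfiguration` file that the kube-apiserver loads through its `--encryption-provider-config` flag. The file name and key name are placeholders. On managed platforms such as MKE, check how the platform expects this configuration to be supplied before applying it by hand.

```shell
#!/usr/bin/env bash
# Generate an EncryptionConfiguration that encrypts Secrets at rest with AES-CBC.
# ASSUMPTIONS: file name and key name "key1" are placeholders.
ENC_CONFIG="${ENC_CONFIG:-encryption-config.yaml}"
ENC_KEY="$(head -c 32 /dev/urandom | base64)"   # 32 random bytes: an AES-256 key

cat > "$ENC_CONFIG" <<EOF
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      # The first provider encrypts new writes.
      - aescbc:
          keys:
            - name: key1
              secret: ${ENC_KEY}
      # identity still lets the API server read Secrets written before encryption.
      - identity: {}
EOF
chmod 600 "$ENC_CONFIG"   # the file contains the encryption key
```

Only Secrets written after the change are encrypted. Existing ones can be rewritten with `kubectl get secrets --all-namespaces -o json | kubectl replace -f -`.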

For environments with more complex security requirements, integrating an external secrets management solution like HashiCorp Vault can provide an additional layer of protection, offering enhanced control and security for sensitive data.

TLS Encryption¶

All internal communications within the Kubernetes cluster must be encrypted using TLS to protect data in transit.

Kubernetes’ native support for TLS certificates should be utilized, or alternatively, integration with a service like cert-manager can streamline certificate management through automation.
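With cert-manager, a `Certificate` resource asks an issuer for a certificate and keeps the resulting TLS Secret renewed. The sketch assumes cert-manager is already installed and that a ClusterIssuer exists; the issuer, DNS, and Secret names are hypothetical.

```shell
#!/usr/bin/env bash
# Write a cert-manager Certificate that keeps a TLS Secret issued and renewed.
# ASSUMPTIONS: cert-manager is installed; names below are placeholders.
MSR_CERT="${MSR_CERT:-msr-certificate.yaml}"

cat > "$MSR_CERT" <<'EOF'
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: msr-4-tls
  namespace: default
spec:
  secretName: msr-4-tls          # Secret that receives tls.crt and tls.key
  dnsNames:
    - msr.example.com
  issuerRef:
    name: corporate-ca-issuer    # an existing ClusterIssuer
    kind: ClusterIssuer
EOF
```

After `kubectl apply -f msr-certificate.yaml`, the resulting Secret can be referenced by the workload that terminates TLS. In the upstream Harbor chart this is done through the `expose.tls` settings; confirm the equivalent keys for MSR 4.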

Implementing these measures ensures secure communication between components and reduces the risk of unauthorized access or data interception.

Harbor Security¶

Harbor serves as the container registry in MSR 4, making its security crucial for safeguarding both container images and their associated metadata. Ensuring proper security measures are in place helps protect against unauthorized access, image tampering, and potential vulnerabilities within the registry.

Image Signing and Scanning¶

Cosign is used to sign images stored in Harbor, ensuring their authenticity and providing a layer of trust.

In addition, vulnerability scanning via Trivy is enabled by default for all images pushed to Harbor. This helps identify potential security flaws before the images are deployed, ensuring that only secure and trusted images are used in production environments.

Secure Communication¶

It is crucial to configure Harbor to use HTTPS with strong SSL/TLS certificates to secure client-server communications.

For production environments, corporate-signed certificates should be used rather than self-signed ones. Self-signed certificates are acceptable only for testing purposes and should not be used in production, as they do not provide the same level of trust and security as certificates issued by a trusted certificate authority.

Registry Hardening¶

For added security, it is important to assess your specific use case and disable any unused features in Harbor, such as unnecessary APIs, to reduce the attack surface. Regularly reviewing and disabling non-essential functionalities can help minimize potential vulnerabilities.

Additionally, credentials used to access Harbor—such as API tokens and system secrets—should be rotated regularly to enhance security.

Since these credentials are not managed by the internal MSR 4 mechanism, it is recommended to use third-party CI tools or scripts to automate and manage the credential rotation process, ensuring that sensitive resources are updated and protected consistently.

K-V Storage (Redis) Security¶

Redis is an in-memory data store, and securing its configuration and access is critical to maintaining the integrity of cached data. While Redis is often part of MSR 4 installations, it’s important to note that in some cases, a corporate key-value (K-V) storage solution may be used instead. In such scenarios, the responsibility for securing the K-V storage is transferred to the corresponding corporate service team, which must ensure the storage is appropriately configured and protected against unauthorized access or data breaches.

Authentication¶

To secure Redis, it is essential to enable authentication by setting a strong password using the requirepass directive in the Redis configuration. This ensures that only authorized clients can access the Redis instance.

Additionally, TLS/SSL encryption should be enabled to secure communication between Redis clients and the Redis server. This helps protect sensitive data in transit, preventing unauthorized interception or tampering of the information being exchanged.
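On a self-managed Redis, both settings map to standard redis.conf directives (TLS requires Redis 6 or later). The sketch below generates a random password and writes a configuration fragment; the certificate paths are placeholders. When Redis is deployed through a Helm chart, set the chart's equivalent values instead. Before disabling the plaintext port, confirm that every client of this Redis instance supports TLS.

```shell
#!/usr/bin/env bash
# Generate a strong random password and a redis.conf fragment that
# enables authentication and TLS-only connections.
# ASSUMPTION: certificate paths under /etc/redis/tls are placeholders.
REDIS_CONF="${REDIS_CONF:-redis-security.conf}"
# 32 random bytes, base64-encoded, with characters that need quoting removed.
REDIS_PASSWORD="$(head -c 32 /dev/urandom | base64 | tr -d '/+=')"

cat > "$REDIS_CONF" <<EOF
# Require clients to authenticate before running commands.
requirepass ${REDIS_PASSWORD}
# Disable the plaintext port and serve TLS on the standard port instead.
port 0
tls-port 6379
tls-cert-file /etc/redis/tls/redis.crt
tls-key-file /etc/redis/tls/redis.key
tls-ca-cert-file /etc/redis/tls/ca.crt
# Require clients to present a certificate signed by the CA above.
tls-auth-clients yes
EOF
chmod 600 "$REDIS_CONF"   # the file now contains a secret
```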

Network Security¶

Because the K-V Storage service may be co-hosted on the same cluster, accessed from another cluster, or deployed entirely separately, it is crucial to bind Redis to a private network to prevent unauthorized external access. Redis should be accessible only from trusted sources, and access should be restricted to the minimum necessary.

To achieve this, Kubernetes Network Policies should be used to enforce strict controls on which pods can communicate with the Redis service. This ensures that only authorized pods within the cluster can access Redis, further minimizing the attack surface and enhancing security.
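A minimal NetworkPolicy sketch that admits traffic to Redis on TCP 6379 only from Harbor pods. The pod labels are assumptions (`app.kubernetes.io/name: redis` as used by common Redis charts, `app: harbor` as used by the Harbor chart); confirm them with `kubectl get pods --show-labels`. NetworkPolicies are enforced only if the cluster's network plugin supports them.

```shell
#!/usr/bin/env bash
# Write a NetworkPolicy that admits Redis traffic only from Harbor pods.
# ASSUMPTIONS: pod labels and the policy name are placeholders to verify.
REDIS_NETPOL="${REDIS_NETPOL:-redis-from-harbor-netpol.yaml}"

cat > "$REDIS_NETPOL" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: redis-allow-harbor-only
  namespace: default
spec:
  podSelector:                 # the Redis pods this policy protects
    matchLabels:
      app.kubernetes.io/name: redis
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:         # only pods carrying the Harbor label
            matchLabels:
              app: harbor
      ports:
        - protocol: TCP
          port: 6379
EOF
```

Allowed traffic is the union of all policies that select a pod. This policy therefore narrows access only if no other policy, including one shipped with the Redis chart, already allows broader ingress.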

Redis Configuration¶

To enhance security, the CONFIG command should be disabled in Redis to prevent unauthorized users from making changes to the Redis configuration. This reduces the risk of malicious users altering critical settings.

Additionally, for Redis instances that should not be exposed to the internet, consider enabling Redis’ protected mode. This mode ensures that Redis only accepts connections from trusted sources, blocking any unauthorized access attempts from external networks.
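Both measures are redis.conf directives. Note that protected mode takes effect only when Redis has no explicit `bind` address and no password, so an explicit `bind` to private interfaces and `requirepass` remain the primary controls. In Redis 6 and later, ACL rules are an alternative to renaming commands. The bind address below is a placeholder.

```shell
#!/usr/bin/env bash
# Write redis.conf hardening directives.
# ASSUMPTION: 10.0.0.15 is a placeholder private interface address.
REDIS_HARDENING_CONF="${REDIS_HARDENING_CONF:-redis-hardening.conf}"

cat > "$REDIS_HARDENING_CONF" <<'EOF'
# Remove the CONFIG command entirely by renaming it to an empty string.
rename-command CONFIG ""
# Refuse external clients if no bind address and no password are configured.
protected-mode yes
# Listen only on loopback and a private interface.
bind 127.0.0.1 10.0.0.15
EOF
```

Redis Sentinel and some chart scripts issue CONFIG themselves, so check that nothing in the deployment depends on it before renaming the command.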

DB Service (PostgreSQL) Security¶

PostgreSQL is a relational database, and its security is vital for ensuring data protection and maintaining compliance with regulations. Securing PostgreSQL helps safeguard sensitive information from unauthorized access, tampering, and potential breaches, ensuring that both the integrity and confidentiality of the data are preserved. Proper security measures are essential for both operational efficiency and regulatory adherence.

Data Encryption¶

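To protect sensitive data stored on disk, enable data-at-rest encryption for PostgreSQL. Community PostgreSQL has no built-in transparent data encryption, so this is typically provided at the storage layer, for example through encrypted volumes or disks. This ensures that data stored in the database remains secure even if the underlying storage is compromised.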

Additionally, use SSL/TLS for data-in-transit encryption to secure communications between PostgreSQL and application components. This ensures that data exchanged between the database and clients is encrypted, preventing interception or tampering during transit.
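A minimal sketch of both sides: a postgresql.conf fragment that enables TLS, and a libpq connection URI in which `sslmode=verify-full` makes the client check the server certificate and host name. File names, host, user, and database are placeholders; `ssl_min_protocol_version` requires PostgreSQL 12 or later.

```shell
#!/usr/bin/env bash
# Write a postgresql.conf TLS fragment and print a verifying client URI.
# ASSUMPTIONS: certificate file names, host, user, and database are placeholders.
PG_TLS_CONF="${PG_TLS_CONF:-postgresql-tls.conf}"

cat > "$PG_TLS_CONF" <<'EOF'
ssl = on
ssl_cert_file = 'server.crt'
ssl_key_file = 'server.key'
ssl_min_protocol_version = 'TLSv1.2'
EOF

# verify-full: check the CA chain and that the host name matches the certificate.
PG_CLIENT_URI="postgresql://harbor@postgres.corp.example:5432/registry?sslmode=verify-full"
echo "$PG_CLIENT_URI"
```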

Access Control¶

To enhance security, ensure that PostgreSQL is not directly accessible from the public internet. Use Kubernetes Network Policies to restrict access to authorized services only, ensuring that only trusted internal services can communicate with the database.

Additionally, apply restrictions to limit access based on IP addresses, allowing only trusted sources to connect to PostgreSQL. Furthermore, configure client authentication methods, such as certificate-based authentication, to further secure access and ensure that only authenticated clients can interact with the database.
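IP-based restrictions and certificate authentication are both expressed in pg_hba.conf. The file is evaluated top to bottom and the first matching rule wins, so the catch-all reject must come last. The database, user, and pod network CIDR below are placeholders.

```shell
#!/usr/bin/env bash
# Write pg_hba.conf rules: TLS plus client-certificate authentication for
# the Harbor user from the pod network, then reject everything else.
# ASSUMPTIONS: "registry", "harbor", and 10.244.0.0/16 are placeholders.
PG_HBA="${PG_HBA:-pg_hba-msr.conf}"

cat > "$PG_HBA" <<'EOF'
# TYPE    DATABASE  USER    ADDRESS        METHOD
hostssl   registry  harbor  10.244.0.0/16  cert
host      all       all     0.0.0.0/0      reject
EOF
```

With the `cert` method, the client certificate's common name must match the database user name unless a user name map is configured.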

Backups and Disaster Recovery¶

Regularly backing up the PostgreSQL database is crucial to ensure data integrity and availability. It is essential that backup files are stored securely, preferably in an encrypted format, to protect them from unauthorized access or tampering.

Additionally, enable point-in-time recovery (PITR) to provide the ability to recover the database to a specific state in case of corruption or failure. PITR ensures minimal data loss and allows for quick recovery in the event of an incident.
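Point-in-time recovery builds on continuous WAL archiving. The fragment below uses the archive command pattern from the PostgreSQL documentation. The archive directory is a placeholder, and in the HA chart these settings may need to be applied through the chart's own configuration values rather than by editing postgresql.conf.

```shell
#!/usr/bin/env bash
# Write a postgresql.conf fragment that enables continuous WAL archiving.
# ASSUMPTION: /mnt/wal-archive is a placeholder for durable, access-controlled storage.
PG_PITR_CONF="${PG_PITR_CONF:-postgresql-pitr.conf}"

cat > "$PG_PITR_CONF" <<'EOF'
wal_level = replica
archive_mode = on
# Copy each completed WAL segment, refusing to overwrite an existing file.
archive_command = 'test ! -f /mnt/wal-archive/%f && cp %p /mnt/wal-archive/%f'
EOF
```

Changing `archive_mode` requires a server restart. Recovery then starts from a base backup (for example, one taken with `pg_basebackup`) and replays archived WAL up to a target such as `recovery_target_time`. Archived WAL contains the same sensitive data as backups and should be protected the same way.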

Logging and Monitoring¶

Proper logging and monitoring are crucial for identifying and responding to security incidents in a timely manner. By capturing detailed logs of database activity, access attempts, and system events, you can detect anomalies and potential security threats. Implementing comprehensive monitoring allows you to track system health, performance, and security metrics, providing visibility into any suspicious behavior. This enables a proactive response to mitigate risks and maintain the integrity and security of the system.

Centralized Logging¶

Implementing centralized logging for Harbor, Redis, PostgreSQL, and Kubernetes is essential for maintaining visibility into system activity and detecting potential security incidents. By aggregating logs from all components in a centralized location, you can more easily monitor and analyze events, track anomalies, and respond to threats quickly.

To achieve this, consider using tools like Fluentd, Elasticsearch, and Kibana (EFK stack). Fluentd can collect and aggregate logs, Elasticsearch stores and indexes the logs, and Kibana provides a user-friendly interface for visualizing and analyzing log data. This setup allows for efficient log management and better insights into system behavior, enabling prompt detection of security incidents.

Security Monitoring¶

Setting up Prometheus and Grafana is an effective way to monitor the health and performance of the system, as well as detect any unusual behavior. Prometheus can collect and store metrics from various components, while Grafana provides powerful dashboards for visualizing those metrics in real-time.
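Prometheus can scrape Harbor only if its metrics endpoints are enabled. A sketch, assuming the upstream Harbor chart keys `metrics.enabled` and `metrics.serviceMonitor.enabled` also apply to MSR 4. The second key creates a ServiceMonitor resource, which requires the Prometheus Operator CRDs to be installed.

```shell
#!/usr/bin/env bash
# Write a values override that exposes Harbor metrics for Prometheus.
# ASSUMPTION: upstream Harbor chart keys; ServiceMonitor needs Prometheus Operator.
METRICS_VALUES="${METRICS_VALUES:-metrics-values.yaml}"

cat > "$METRICS_VALUES" <<'EOF'
metrics:
  enabled: true
  serviceMonitor:
    enabled: true
EOF
```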

For enhanced security, integrating with external monitoring solutions like Falco or Sysdig is recommended for runtime security monitoring. These tools help detect suspicious activity and provide real-time alerts for potential security breaches, ensuring a comprehensive security monitoring strategy.

Supply Chain¶

Mirantis hosts and controls all sources of MSR 4 that are delivered to the environment, ensuring a secure supply chain. This controlled process is essential for preventing any malware injections or unauthorized modifications to the system infrastructure. By maintaining tight control over the software delivery pipeline, Mirantis helps safeguard the integrity and security of the environment from the outset.

Platform Sources¶

Helm charts and images used for building MSR 4 are hosted and maintained by Mirantis. These resources are regularly scanned and updated according to Mirantis’ corporate schedule, ensuring that they remain secure and up-to-date.

To ensure the security of the environment, the customer must establish a secure communication channel between their infrastructure and Mirantis’ repositories and registries. This can be achieved through specific proxy configurations, which ensure a direct and controlled connection, minimizing the risk of unauthorized access or data breaches.

Patch Management¶

Regularly applying security patches to all components—such as Harbor, Redis, PostgreSQL, and Kubernetes—is essential to mitigate vulnerabilities promptly and maintain a secure environment. Keeping components up-to-date with the latest security patches helps protect the system from known threats and exploits.

It is also important to monitor security bulletins and advisories for updates and fixes relevant to your stack. Staying informed about new vulnerabilities and their corresponding patches allows for quick action when necessary.

While Mirantis handles the security of sources delivered from its repositories and registries, third-party integrations require additional security measures. These must be secured with proper scanning and a regular patching schedule to ensure they meet the same security standards as internal components, reducing the risk of introducing vulnerabilities into the environment.

Compliance Standards¶

Implementing audit trails is essential for tracking and monitoring system activity, enabling you to detect and respond to potential security incidents. Audit logs should capture all critical events, such as access attempts, configuration changes, and data modifications, ensuring accountability and traceability.

Additionally, sensitive data must be encrypted both at rest and in transit. Encryption at rest protects stored data from unauthorized access, while encryption in transit ensures that data exchanged between systems remains secure during transmission. This dual-layer approach helps safeguard sensitive information from potential breaches and attacks.

Mirantis actively checks the sources for Common Vulnerabilities and Exposures (CVEs) and malware injections. This proactive approach ensures that the software and components delivered from Mirantis repositories are thoroughly vetted for security risks, helping to prevent vulnerabilities and malicious code from being introduced into the environment. By conducting these checks, Mirantis maintains a secure supply chain for MSR 4 deployments.

Ensure that the environment adheres to relevant compliance standards such as GDPR, HIPAA, or PCI-DSS, depending on your use case.

Installation Guide¶

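Mirantis Secure Registry (MSR) supports two installation scenarios designed to meet most customer needs: a high availability deployment on Kubernetes using Helm charts, and a single-host deployment using Docker Compose.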

The following comparison highlights key differences to help you choose the most appropriate option for your environment.

| Installation Scenario | Detail |
|---|---|
| High Availability | Deployment of MSR in a high availability configuration on Kubernetes using Helm charts. Use case: Production environments of medium to large enterprises, where uptime is critical. |
| Single host using Docker Compose | High availability is not supported. Thus, if the MSR instance becomes unavailable, there is no orchestrator to provide redundancy. Use case: Non-production environments, or smaller enterprises or office sites. Also suitable for non-Kubernetes environments. |

Some organizations may have unique infrastructure requirements or prefer custom deployment strategies that extend beyond the scope outlined here. While Mirantis supports a wide range of use cases, official support is limited to the configurations described above.

Contact Mirantis Professional Services for assistance with specialized installations or custom deployments.

Note

The full set of installation options for MSR follows the Harbor upstream documentation.

Prerequisites¶

Before proceeding, verify that your environment meets the system requirements.

Hardware requirements¶

The following hardware requirements outline the resources that must be available on the worker node to run MSR 4 services effectively.

| Resource | Minimum | Recommended |
|---|---|---|
| CPU | 2 CPU | 4 CPU |
| RAM | 4 GB | 8 GB |
| Disk | 40 GB | 160 GB |

Software requirements¶

The following software requirements must be met to run the MSR 4 workload successfully.

| Software | Version and Comment |
|---|---|
| Kubernetes | 1.21+ |
| Helm | 3.7+ |
| Redis | Applies only if remote and not part of the deployment |
| PostgreSQL | Applies only if remote and not part of the deployment |
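A quick way to compare installed versions against these minimums is a version-aware sort. The helper below is a hypothetical sketch that relies on `sort -V` from GNU coreutils. The installed versions in the loop are placeholders. On a workstation, the Helm version can be read with `helm version --template '{{.Version}}'` after stripping the leading `v`.

```shell
#!/usr/bin/env bash
# version_ge A B succeeds when dotted version A is greater than or equal to B.
# Relies on `sort -V` (version sort) from GNU coreutils.
version_ge() {
  local lowest
  lowest="$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)"
  [ "$lowest" = "$2" ]
}

# Compare placeholder installed versions against the minimums in the table.
for check in "kubernetes 1.29.3 1.21" "helm 3.14.0 3.7"; do
  read -r name have need <<< "$check"
  if version_ge "$have" "$need"; then
    echo "$name $have: OK (needs $need+)"
  else
    echo "$name $have: too old (needs $need+)"
  fi
done
```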

Network requirements¶

Certain services will be exposed through the following ports. These ports must be accessible and configured correctly in the firewall.

| Port | Protocol | Description |
|---|---|---|
| 80 | HTTP | The Harbor portal and core API accept HTTP requests on this port. You can change this port in the configuration file. |
| 443 | HTTPS | The Harbor portal and core API accept HTTPS requests on this port. You can change this port in the configuration file. |

Prepare MKE 3.x for MSR Installation¶

Important

This procedure applies only to Kubernetes environments running MKE 3.x. If you are using MKE 4.x, no additional preparation is required before installing MSR.

To install MSR on MKE, you must first configure both the default:postgres-operator user account and the default:postgres-pod service account in MKE 3.x with the privileged permission.

To prepare MKE 3.x for MSR installation:

Log in to the MKE web UI.

In the left-side navigation panel, click the <username> drop-down to display the available options.

Click Admin Settings > Privileges.

Navigate to the User account privileges section.

Enter <namespace-name>:postgres-operator into the User accounts field.

Note

You can replace <namespace-name> with default to indicate the use of the default namespace.

Select the privileged check box.

Scroll down to the Service account privileges section.

Enter <namespace-name>:postgres-pod into the Service accounts field.

Note

You can replace <namespace-name> with default to indicate the use of the default namespace.

Select the privileged check box.

Click Save.

Important

For already deployed MSR instances, issue a rolling restart of the postgres-operator deployment:

kubectl rollout restart deploy/postgres-operator
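The following minimal sketch prints the two entries to enter on the MKE 3.x Admin Settings > Privileges page for a given namespace. It also wraps the rolling restart so that the command waits until the new operator pod is ready. The helper names are hypothetical. The sketch assumes that the operator was installed into the namespace you pass (default: `default`). `kubectl rollout status --timeout` is a standard kubectl flag.

```shell
#!/usr/bin/env bash
# Minimal sketch: entries for the MKE 3.x privileges page, plus a restart
# wrapper for already deployed MSR instances.

# msr_privileged_entries [NAMESPACE]
#   line 1 -> value for the "User accounts" field
#   line 2 -> value for the "Service accounts" field
msr_privileged_entries() {
  local ns="${1:-default}"
  printf '%s:postgres-operator\n%s:postgres-pod\n' "$ns" "$ns"
}

# restart_postgres_operator [NAMESPACE] -> rolling restart, then wait for it.
restart_postgres_operator() {
  local ns="${1:-default}"
  kubectl -n "$ns" rollout restart deploy/postgres-operator &&
    kubectl -n "$ns" rollout status deploy/postgres-operator --timeout=180s
}

# Example (the restart requires cluster access; not run here):
#   msr_privileged_entries msr
#   restart_postgres_operator msr
```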

Install MSR with High Availability¶

This section provides a comprehensive guide for installing MSR with High Availability (HA) into an existing Kubernetes cluster.

Prerequisites¶

To deploy MSR with High Availability (HA), ensure that your environment meets the following requirements.

Host environment¶

- Kubernetes 1.21+ cluster

HA MSR runs on an existing MKE or other Kubernetes cluster, preferably with a highly available control plane (at least three controllers), a minimum of three worker nodes, and highly available ingress.

- Kubernetes storage backend with ReadWriteMany (RWX) support

A storage backend that allows a Persistent Volume Claim to be shared across all worker nodes in the host cluster (for example, CephFS, AWS EFS, Azure Files).

- Highly available PostgreSQL 9.6+

A relational database for metadata storage.

- Highly available Redis

An in-memory cache and message/job queue.
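The host environment requirements above call for at least three worker nodes. A quick way to count healthy nodes is to filter the STATUS column of `kubectl get nodes`. The following minimal sketch does this. The `count_ready_nodes` helper is hypothetical, and it counts controllers as well as workers because role labels vary between distributions. Compare the result against your expected total node count.

```shell
#!/usr/bin/env bash
# Minimal sketch: count Ready nodes in `kubectl get nodes --no-headers`
# output read from stdin. Controllers and workers are counted alike.

count_ready_nodes() {
  # Column 2 is STATUS. An exact "Ready" match excludes "NotReady" and
  # "Ready,SchedulingDisabled" (cordoned nodes).
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Example (requires cluster access; not run here):
#   kubectl get nodes --no-headers | count_ready_nodes
```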

Management workstation¶

Use a laptop or virtual machine running Linux, Windows, or macOS, configured to manage Kubernetes and install MSR and its dependencies:

Helm 3.7+ - Required for installing databases (PostgreSQL, Redis), MSR components, and other dependencies.

kubectl - Install a kubectl version that matches your Kubernetes cluster.

Kubernetes client access¶

Obtain and install a Kubernetes client bundle or a kubeconfig file with embedded certificates on your management workstation so that kubectl and Helm can manage your cluster.

The procedure for obtaining these credentials depends on your Kubernetes distribution and configuration.

For an MKE 3.8 host cluster, refer to Download the client bundle for more information.

Install Helm¶

To install Helm, run the following command:

curl -fsSL https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

To learn more about Helm, refer to the Quickstart Guide in the official Helm documentation.

Create PVC across Kubernetes workers¶

HA MSR requires a Persistent Volume Claim (PVC) that can be shared across all worker nodes.

Note

MSR 4 can use any StorageClass and PVC that you configure on your Kubernetes cluster. The following examples set up cephfs or NFS as your default StorageClass. For more information, see Storage Classes in the official Kubernetes documentation.

Configure cephfs¶

The following example shows how to configure persistent storage for Kubernetes using cephfs. You can adapt these steps for your environment.

Create a StorageClass. The specifics depend on the storage backend you are using. The following example illustrates how to create a StorageClass with a CephFS backend and Ceph CSI:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: cephfs
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: cephfs.csi.ceph.com
parameters:
  clusterID: <cluster-id>
```

Run kubectl apply to apply the StorageClass configuration to the cluster. A StorageClass is a cluster-scoped resource, so you do not need to specify a namespace.

Create the PVC:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: shared-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
  storageClassName: cephfs
```

Note

The .spec.storageClassName field references the name of the StorageClass you created above.

Run kubectl apply to apply the PVC to the cluster, in the namespace where you will install MSR.
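If you create the same claim in several environments, you may prefer to render the manifest from a few parameters rather than edit YAML by hand. The following minimal sketch does this. The `render_shared_pvc` helper is hypothetical. It emits the same PVC as the example above, with the name, size, and StorageClass supplied as arguments, so you could also use it with an NFS-backed class. The usage comment pipes the output to `kubectl apply -f -` and then reads the claim's `.status.phase`, which should report `Bound` once provisioning succeeds.

```shell
#!/usr/bin/env bash
# Minimal sketch: render the shared RWX PVC manifest from parameters.

# render_shared_pvc NAME SIZE STORAGECLASS -> prints a PVC manifest.
render_shared_pvc() {
  local name="$1" size="$2" sc="$3"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: ${size}
  storageClassName: ${sc}
EOF
}

# Example (requires cluster access; not run here):
#   render_shared_pvc shared-pvc 10Gi cephfs | kubectl apply -f -
#   kubectl get pvc shared-pvc -o jsonpath='{.status.phase}'
```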

Configure NFS¶

The following example shows how to configure persistent storage for Kubernetes using NFS. You can adapt these steps for your environment.

Add the Helm repository for the NFS subdirectory external provisioner:

```shell
helm repo add nfs-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/
helm repo update
```

Install the NFS client provisioner. Replace the placeholders with values for your environment.

```shell
helm install nfs-client-provisioner nfs-provisioner/nfs-subdir-external-provisioner \
  --set nfs.server=<NFS-SERVER-IP> \
  --set nfs.path=</DIRECTORY/YOU/WANT/TO/USE> \
  --set storageClass.name=nfs-storage \
  --set storageClass.defaultClass=true
```
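Both the cephfs and the NFS examples mark their StorageClass as the cluster default. If you apply both to the same cluster, more than one class carries the default annotation, and the Kubernetes documentation recommends keeping only one. The following minimal sketch lists the classes that are marked as default. The `default_storageclasses` helper is hypothetical. The kubectl JSONPath in the usage comment escapes the dots in the annotation key, as kubectl JSONPath requires.

```shell
#!/usr/bin/env bash
# Minimal sketch: print the StorageClasses marked as the cluster default.
# Input lines are "<name> <is-default-class annotation value>".

default_storageclasses() {
  # Classes without the annotation have an empty second field and are skipped.
  awk '$2 == "true" { print $1 }'
}

# Example (requires cluster access; not run here):
#   kubectl get storageclass -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.metadata.annotations.storageclass\.kubernetes\.io/is-default-class}{"\n"}{end}' \
#     | default_storageclasses
```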

Install highly available PostgreSQL¶

Install the Zalando Postgres Operator:

helm install postgres-operator postgres-operator --repo https://opensource.zalando.com/postgres-operator/charts/postgres-operator --set configGeneral.docker_image=registry.mirantis.com/msr/spilo:17-4.0-p3-20251117010013

Note

Alternatively, you can configure the deployment to use the default image provided in the upstream Zalando Postgres Operator community release:

helm install postgres-operator postgres-operator --repo https://opensource.zalando.com/postgres-operator/charts/postgres-operator

Create and configure the msr-postgres-manifest.yaml file:

OpenShift deployments only

While the default PostgreSQL Operator settings allow the operator to run in a general Kubernetes environment, they are not sufficient for OpenShift. To ensure proper functionality, enable the kubernetes_use_configmaps parameter. For details, refer to the Zalando Postgres Operator quickstart guide.

Note

Adjust numberOfInstances to match your desired cluster size.

```yaml
apiVersion: "acid.zalan.do/v1"
kind: postgresql
metadata:
  name: msr-postgres
spec:
  teamId: "msr"
  volume:
    size: 1Gi
  numberOfInstances: 3
  users:
    msr:  # database owner
    - superuser
    - createdb
  databases:
    registry: msr  # dbname: owner
  postgresql:
    version: "17"
```

If you are running RHEL 9.4 or later, exclude the bg_mon module from the PostgreSQL configuration as shown below. Refer to the Known issues for more details.

```yaml
apiVersion: "acid.zalan.do/v1"
kind: postgresql
metadata:
  name: msr-postgres
spec:
  teamId: "msr"
  volume:
    size: 1Gi
  numberOfInstances: 3
  users:
    msr:  # database owner
    - superuser
    - createdb
  databases:
    registry: msr  # dbname: owner
  postgresql:
    version: "17"
    parameters:
      shared_preload_libraries: "pg_stat_statements,pgextwlist,pg_auth_mon,set_user,timescaledb,pg_cron,pg_stat_kcache"
```

Deploy the Postgres instance:

kubectl create -f msr-postgres-manifest.yaml

Retrieve connection details for the Postgres service:

Get the service's IP address:

```shell
kubectl get svc \
  -l application=spilo,cluster-name=msr-postgres,spilo-role=master \
  -o jsonpath='{.items..spec.clusterIP}'
```

Get the service's port number:

```shell
kubectl get svc \
  -l application=spilo,cluster-name=msr-postgres,spilo-role=master \
  -o jsonpath='{.items..spec.ports..port}'
```
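To connect MSR to this database, you typically also need the password for the msr user. The following minimal sketch combines the two lookups above and reads the password from the secret that the operator generates. It assumes the Zalando operator's default secret naming template, `<user>.<cluster>.credentials.postgresql.acid.zalan.do`, with `username` and `password` keys. If your operator configuration overrides that template, adjust the name. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Minimal sketch: gather the PostgreSQL connection details MSR needs.
# Assumes the Zalando operator's default credentials secret naming.

# pg_secret_name USER CLUSTER -> prints the operator-generated secret name.
pg_secret_name() {
  printf '%s.%s.credentials.postgresql.acid.zalan.do\n' "$1" "$2"
}

# pg_endpoint CLUSTER -> prints "<clusterIP>:<port>" of the master service.
pg_endpoint() {
  local sel="application=spilo,cluster-name=$1,spilo-role=master" ip port
  ip="$(kubectl get svc -l "$sel" -o jsonpath='{.items..spec.clusterIP}')"
  port="$(kubectl get svc -l "$sel" -o jsonpath='{.items..spec.ports..port}')"
  printf '%s:%s\n' "$ip" "$port"
}

# pg_password USER CLUSTER -> prints the decoded password (handle with care).
pg_password() {
  kubectl get secret "$(pg_secret_name "$1" "$2")" \
    -o jsonpath='{.data.password}' | base64 -d
}

# Example (requires cluster access; not run here):
#   pg_endpoint msr-postgres
#   PG_PASSWORD="$(pg_password msr msr-postgres)"
```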

Upgrade highly available PostgreSQL¶

Verify the Zalando Postgres Operator image:

kubectl get operatorconfiguration.acid.zalan.do -o=jsonpath='{.items[0].configuration.docker_image}'

Perform the upgrade:

helm upgrade postgres-operator postgres-operator --repo https://opensource.zalando.com/postgres-operator/charts/postgres-operator --set configGeneral.docker_image=registry.mirantis.com/msr/spilo:17-4.0-p3-20251117010013
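After the operator has rolled the database pods to the new image, you can confirm that every Spilo pod reports the expected image. The following is a minimal sketch. The `all_images_match` helper is hypothetical. The sketch assumes that the cluster pods run no sidecar containers, because the JSONPath in the usage comment lists every container image in each pod.

```shell
#!/usr/bin/env bash
# Minimal sketch: succeed only if every listed image equals the expected one.

# all_images_match EXPECTED IMAGE...
all_images_match() {
  local expected="$1" img
  shift
  [ "$#" -gt 0 ] || return 1   # no pods found counts as a failure
  for img in "$@"; do
    [ "$img" = "$expected" ] || return 1
  done
}

# Example (requires cluster access; not run here):
#   expected=registry.mirantis.com/msr/spilo:17-4.0-p3-20251117010013
#   images=$(kubectl get pods -l application=spilo,cluster-name=msr-postgres \
#     -o jsonpath='{.items[*].spec.containers[*].image}')
#   all_images_match "$expected" $images && echo "all Postgres pods upgraded"
```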

Install highly available Redis¶

Install the Redis Operator from the OT-Container-Kit Helm repository:

```shell
helm install redis-operator redis-operator \
  --repo https://ot-container-kit.github.io/helm-charts
```

Generate a strong, random password for authenticating with Redis:

PASSWORD=$(LC_ALL=C tr -dc A-Za-z0-9 </dev/urandom | head -c 24)

Create a Kubernetes secret to securely store the password:

```shell
kubectl create secret generic msr-redis-secret \
  --from-literal=REDIS_PASSWORD=${PASSWORD}
```

Deploy the Redis instance:

Note

Set clusterSize to the desired number of Redis nodes.

```shell
helm install msr-redis redis-replication \
  --repo https://ot-container-kit.github.io/helm-charts \
  --set redisReplication.clusterSize=3 \
  --set redisReplication.redisSecret.secretName=msr-redis-secret \
  --set redisReplication.redisSecret.secretKey=REDIS_PASSWORD \
  --set redisReplication.image=quay.io/opstree/redis \
  --set redisReplication.tag=v8.2.2
```

Retrieve the connection details for the Redis service:

Get the service’s port number:

kubectl get svc msr-redis -o jsonpath={.spec.ports..port}
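The following minimal sketch wraps the Redis steps above. It generates the password with the same `tr`/`head` pipeline, reads the password back from `msr-redis-secret`, and builds an in-cluster address from the namespace and the port you retrieved. The helper names are hypothetical. The address format assumes the default cluster DNS domain `cluster.local`.

```shell
#!/usr/bin/env bash
# Minimal sketch: Redis password handling and in-cluster address.

# gen_password [LENGTH] -> random alphanumeric string (default 24 chars).
gen_password() {
  LC_ALL=C tr -dc 'A-Za-z0-9' </dev/urandom | head -c "${1:-24}"
}

# redis_password [SECRET] -> decoded REDIS_PASSWORD value from the secret.
redis_password() {
  kubectl get secret "${1:-msr-redis-secret}" \
    -o jsonpath='{.data.REDIS_PASSWORD}' | base64 -d
}

# redis_addr NAMESPACE PORT -> "msr-redis.<ns>.svc.cluster.local:<port>",
# assuming the default cluster domain "cluster.local".
redis_addr() {
  printf 'msr-redis.%s.svc.cluster.local:%s\n' "$1" "$2"
}

# Example (requires cluster access; not run here):
#   redis_addr default "$(kubectl get svc msr-redis -o jsonpath='{.spec.ports..port}')"
```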

Upgrade highly available Redis¶

Verify the Redis version:

kubectl get pod <REDIS-POD-NAME> -o jsonpath='{.spec.containers[*].image}'

Upgrade Redis:

```shell
helm upgrade msr-redis redis-replication \
  --repo https://ot-container-kit.github.io/helm-charts \
  --set redisReplication.clusterSize=3 \
  --set redisReplication.redisSecret.secretName=msr-redis-secret \
  --set redisReplication.redisSecret.secretKey=REDIS_PASSWORD \
  --set redisReplication.image=quay.io/opstree/redis \
  --set redisReplication.tag=v8.2.2
```

Note

Mirantis recommends installing Redis version 8.2.2 or the latest validated release. For more options, refer to the official redis-operator page.
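To compare the running Redis image against the recommended tag, you can extract the tag from the image reference that kubectl reports. The following minimal sketch does this. The `image_tag` helper is hypothetical. It removes any `@sha256` digest and looks only at the final path component, so a registry port such as `localhost:5000` is not mistaken for a tag. An untagged reference is reported as `latest`. The usage comment reads the first container of the pod, which assumes that Redis is the first container and no exporter sidecar comes before it.

```shell
#!/usr/bin/env bash
# Minimal sketch: print the tag of a container image reference.

# image_tag IMAGE -> prints the tag ("latest" if none is given).
image_tag() {
  local ref="${1%%@*}"       # drop any @sha256 digest
  local last="${ref##*/}"    # keep only the final path component
  case "$last" in
    *:*) printf '%s\n' "${last##*:}" ;;
    *)   printf 'latest\n' ;;
  esac
}

# Example (requires cluster access; not run here):
#   image_tag "$(kubectl get pod <REDIS-POD-NAME> -o jsonpath='{.spec.containers[0].image}')"
```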

Install highly available MSR¶

Generate a configuration values file for the chart:

helm show values oci://registry.mirantis.com/harbor/helm/msr --version <MSR-VERSION> > msr-values.yaml

By default, the Helm chart generates certificates automatically. To create your own certificates instead, follow these steps:

Create a directory for certificates named certs:

mkdir certs

Create a certs.conf text file in the certs directory:

```ini
[req]
distinguished_name = req_distinguished_name
x509_extensions = v3_req
prompt = no

[req_distinguished_name]
C = US
ST = State
L = City
O = Organization
OU = Organizational Unit
CN = msr

[v3_req]
keyUsage = digitalSignature, keyEncipherment, dataEncipherment
extendedKeyUsage = serverAuth
subjectAltName = @alt_names

[alt_names]
IP.1 = <IP-ADDRESS-OF-WORKERNODE> # Replace with your actual IP address
```

From within the certs directory, generate the certificate and the key using the certs.conf file you just created:

openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -config certs.conf

If you are using the certificates that Helm generates, skip this step. If you manually created your own certificates, create the Kubernetes secret by running the following command from outside the certs directory:

```shell
kubectl create secret tls <NAME-OF-YOUR-SECRET> \
  --cert=certs/tls.crt \
  --key=certs/tls.key
```

Modify the msr-values.yaml file to configure MSR:

Set the expose type:

```yaml
expose:
  # Set how to expose the service. Set the type as "ingress", "clusterIP", "nodePort" or "loadBalancer"
  # and fill the information in the corresponding section
  type: nodePort
```

In the expose.tls section, set the certificate source to secret and specify the secret name:

```yaml
certSource: secret
secret:
  # The name of secret which contains keys named:
  # "tls.crt" - the certificate
  # "tls.key" - the private key
  secretName: "<NAME-OF-YOUR-SECRET>"
```

Set the nodePort ports to allow nodePort ingress. Each node port must fall within the node port range configured for your cluster, and some Kubernetes distributions restrict that range: upstream Kubernetes defaults to 30000-32767, while MKE defaults to 32768-35535.

```yaml
nodePort:
  # The name of NodePort service
  name: harbor
  ports:
    http:
      # The service port Harbor listens on when serving HTTP
      port: 80
      # The node port Harbor listens on when serving HTTP
      nodePort: <httpNodePort>
    https:
      # The service port Harbor listens on when serving HTTPS
      port: 443
      # The node port Harbor listens on when serving HTTPS
      nodePort: <httpsNodePort>
```

Set the external URL. If you are using nodePort, use a worker node IP address (the same one that you used when generating the certificate):

externalURL: https://<A-WORKER-NODE-EXTERNAL-IP>:<httpsNodePort>

Enable data persistence:

```yaml
persistence:
  enabled: true
```

If you are using a named StorageClass rather than the default StorageClass, specify it as shown in the following sample: