Hardware requirements for Cloud Provider Infrastructure


The reference architecture for the MCP Cloud Provider Infrastructure (CPI) use case requires 9 infrastructure nodes to run the control plane services.

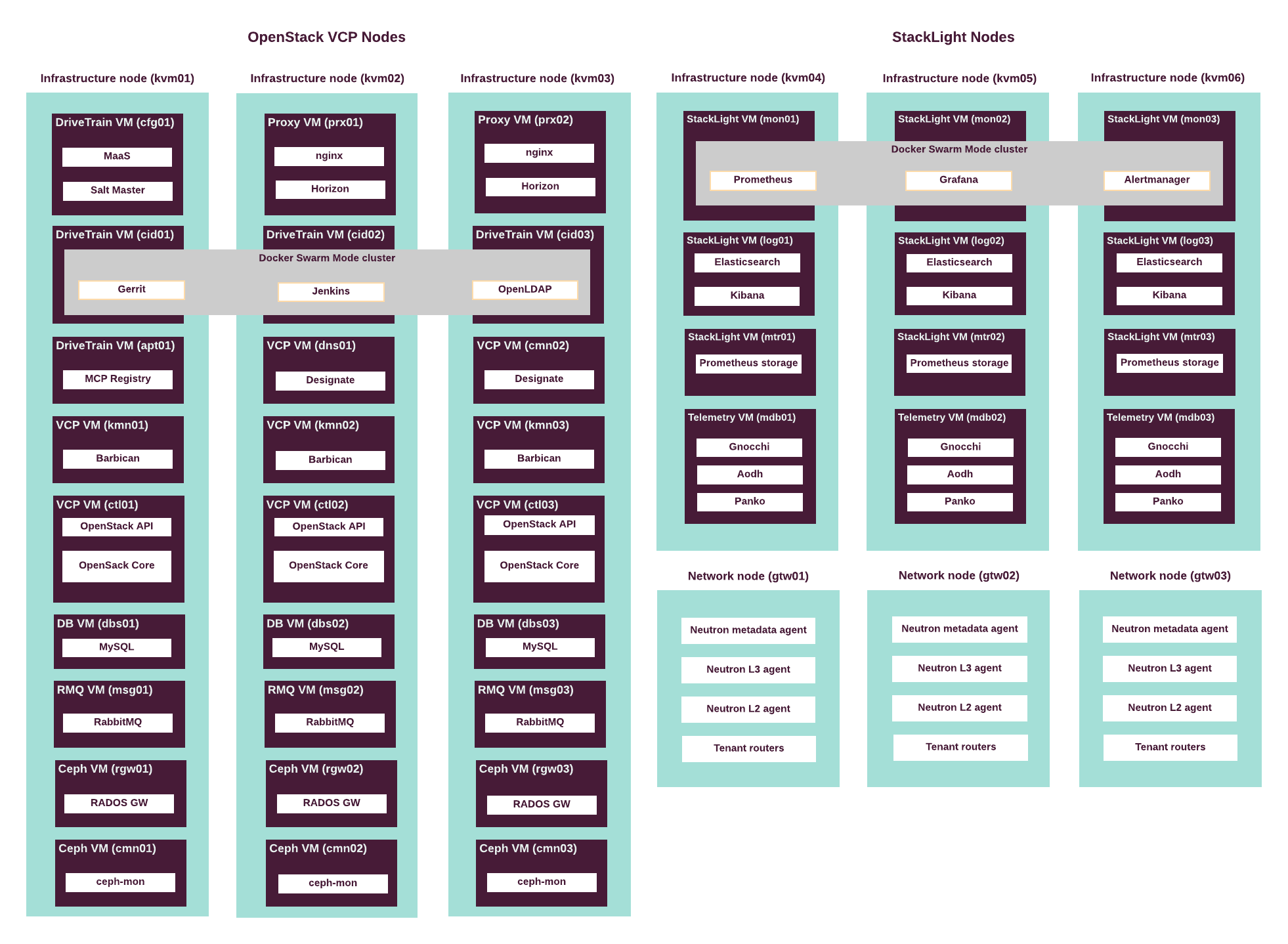

The following diagram displays the components mapping of the infrastructure nodes in the CPI reference architecture.

Hardware requirements for the CPI reference architecture are based on the capacity requirements of the control plane virtual machines and services. See details in Virtualized control plane layout.

The following table summarizes the actual configuration of the hardware infrastructure nodes that Mirantis used to validate and verify the CPI reference architecture. Use it as a reference to plan the hardware bill of materials for your installation of MCP.

| Server role | Number of servers | Server model | CPU model | Number of CPUs | Number of vCores | RAM, GB | Storage, GB | NIC model | Number of NICs |
|---|---|---|---|---|---|---|---|---|---|
| Infrastructure node (VCP) | 3 | Supermicro SYS-6018R-TDW | Intel E5-2650v4 | 2 | 48 | 256 | 1900 [1] | Intel X520-DA2 | 2 |
| Infrastructure node (StackLight LMA) | 3 | Supermicro SYS-6018R-TDW | Intel E5-2650v4 | 2 | 48 | 256 | 5700 [2] | Intel X520-DA2 | 2 |
| Tenant gateway | 3 | Supermicro SYS-6018R-TDW | Intel E5-2620v4 | 1 | 16 | 96 | 960 [3] | Intel X520-DA2 | 2 |
| Compute node | 50 to 150 | Supermicro SYS-6018R-TDW | [4] | [4] | [4] | [4] | 960 [3] [5] | Intel X520-DA2 | 2 |
| Ceph OSD | 9+ [6] | Supermicro SYS-6018R-TDW | Intel E5-2620v4 | 1 | 16 | 96 | 960 [3] [7] | Intel X520-DA2 | 2 |

[1] One SSD, Micron 5200 MAX or similar.

[2] Three SSDs, 1900 GB each, Micron 5200 MAX or similar.

[3] Two SSDs, 480 GB each, WD Blue 3D (WDS500G2B0A) or similar.

[4] Depends on capacity requirements and compute planning. See details in Compute nodes planning.

[5] Minimal system storage. Additional storage for virtual server instances might be required.

[6] Minimal recommended number of Ceph OSD nodes for production deployment is 9. See details in Additional Ceph considerations.

[7] Minimal system storage. Additional devices are required for Ceph storage, cache, and journals. For more details on Ceph storage configuration, see Ceph OSD hardware considerations.
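When planning a bill of materials from the table above, it can help to total the per-server figures across roles. The following Python snippet is a minimal sketch under stated assumptions, not part of MCP tooling: the `Role` class and `totals` function are hypothetical names, the per-server values are copied from the table, compute nodes are left out because their CPU and RAM depend on compute planning, and Ceph OSD is counted at its minimum of 9 nodes with system storage only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Role:
    """Per-server figures for one server role (hypothetical helper type)."""
    name: str
    servers: int
    cpus: int
    vcores: int
    ram_gb: int
    storage_gb: int
    nics: int


# Values taken from the table above. Compute nodes are omitted because
# their CPU and RAM depend on compute planning. Ceph OSD uses the minimum
# recommended node count and lists system storage only.
ROLES = [
    Role("Infrastructure node (VCP)", 3, 2, 48, 256, 1900, 2),
    Role("Infrastructure node (StackLight LMA)", 3, 2, 48, 256, 5700, 2),
    Role("Tenant gateway", 3, 1, 16, 96, 960, 2),
    Role("Ceph OSD", 9, 1, 16, 96, 960, 2),
]


def totals(roles):
    """Sum each per-server figure multiplied by the number of servers."""
    return {
        "servers": sum(r.servers for r in roles),
        "cpus": sum(r.servers * r.cpus for r in roles),
        "vcores": sum(r.servers * r.vcores for r in roles),
        "ram_gb": sum(r.servers * r.ram_gb for r in roles),
        "storage_gb": sum(r.servers * r.storage_gb for r in roles),
        "nics": sum(r.servers * r.nics for r in roles),
    }


if __name__ == "__main__":
    for key, value in totals(ROLES).items():
        print(f"{key}: {value}")
```

The first three roles add up to the 9 infrastructure nodes that the reference architecture requires. Add the compute nodes and the Ceph data devices separately once their sizing is known.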

Note

RAM capacity of this hardware configuration includes overhead for GlusterFS servers running on the infrastructure nodes (kvm01, kvm02, and kvm03).

As a rule of thumb for capacity planning, each infrastructure node should have at least 10% more RAM than the combined RAM planned for all virtual machines on that host. StackLight LMA applies the same rule and starts sending alerts if less than 10% or 8 GB of RAM is free on an infrastructure node.
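The rule of thumb can be expressed as simple arithmetic. The following Python snippet is a minimal sketch, not StackLight LMA code: the function names are hypothetical, and it assumes that an alert fires when either condition holds (free RAM below 10% of the total, or below 8 GB), which is one reading of the sentence above.

```python
def min_host_ram_gb(vm_ram_gb, headroom_pct=10):
    """Smallest whole number of GB that is at least `headroom_pct` percent
    more than the combined RAM of all virtual machines on the host."""
    total = sum(vm_ram_gb)
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-total * (100 + headroom_pct) // 100)


def fits_on_host(host_ram_gb, vm_ram_gb, headroom_pct=10):
    """True if the host satisfies the rule of thumb for the planned VMs."""
    return host_ram_gb >= min_host_ram_gb(vm_ram_gb, headroom_pct)


def low_ram_alert(total_gb, free_gb, pct_threshold=10, abs_threshold_gb=8):
    """Assumed alert condition: free RAM is below 10% of the total
    OR below 8 GB. The OR semantics are an assumption."""
    return free_gb * 100 < total_gb * pct_threshold or free_gb < abs_threshold_gb


if __name__ == "__main__":
    planned_vms = [16, 32, 64, 32]  # hypothetical VM sizes in GB
    print(min_host_ram_gb(planned_vms))    # minimum host RAM for these VMs
    print(fits_on_host(256, planned_vms))  # check against a 256 GB node
    print(low_ram_alert(256, 20))          # 20 GB free on a 256 GB node
```

Under this rule, a 256 GB infrastructure node can host virtual machines with up to 232 GB of combined RAM, before accounting for the GlusterFS overhead mentioned in the note.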
