MCP Ceph cluster overview

MCP uses Ceph as the primary solution for all storage types, including image storage, ephemeral storage, persistent block storage, and object storage. When planning your Ceph cluster, consider the following:

- Ceph version

- Supported versions of Ceph are listed in the MCP Release Notes: Release artifacts section.

- Daemon colocation

Ceph uses the Ceph Monitor (

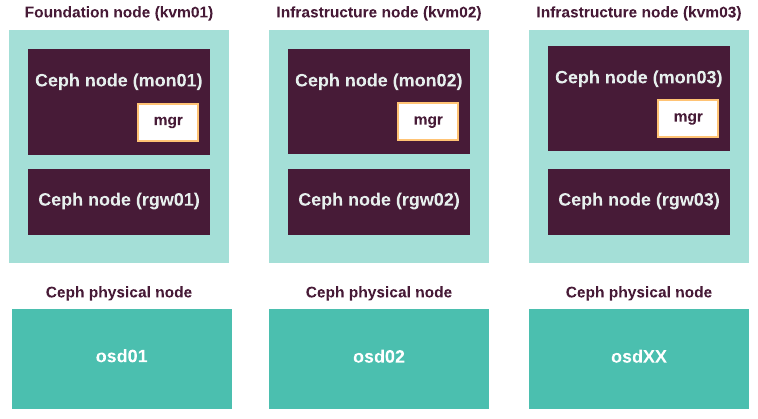

ceph.mon), object storage (ceph.osd), Ceph Manager (ceph-mgr) and RADOS Gateway (ceph.radosgw) daemons to handle the data and cluster state. Each daemon requires different computing capacity and hardware optimization.Mirantis recommends running Ceph Monitors and RADOS Gateway daemons on dedicated virtual machines or physical nodes. Colocating the daemons with other services may negatively impact cluster operations. Three Ceph Monitors are required for a cluster of any size. If you have to install more than three, the number of Monitors must be odd.

Ceph Manager is installed on every node (virtual or physical) that runs a Ceph Monitor, so that Ceph Manager has the same level of availability as the Monitors.

Note

MCP Reference Architecture uses 3 instances of RADOS Gateway and 3 Ceph Monitors. The daemons run in dedicated virtual machines, one VM of each type per infrastructure node, on 3 infrastructure nodes.
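The odd-number rule follows from how Ceph Monitors form a quorum: they use Paxos and need a strict majority of Monitors to agree on the cluster map. The following Python sketch computes the majority size and the number of Monitor failures a cluster can survive. The function name is hypothetical and is not part of any Ceph or MCP tool.

```python
def monitor_quorum(num_monitors: int) -> tuple[int, int]:
    """Return (quorum_size, tolerated_failures) for a strict-majority quorum."""
    if num_monitors < 3:
        raise ValueError("at least three Ceph Monitors are required")
    quorum = num_monitors // 2 + 1      # strict majority of all Monitors
    tolerated = num_monitors - quorum   # Monitors that may fail while quorum holds
    return quorum, tolerated


for n in (3, 4, 5):
    q, f = monitor_quorum(n)
    print(f"{n} monitors: quorum {q}, tolerates {f} failure(s)")
```

Four Monitors need three for a quorum and still tolerate only one failure, the same as three Monitors. An even count therefore adds a daemon to maintain without adding fault tolerance.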

- Store type

Ceph can use either the BlueStore or the FileStore backend. BlueStore typically provides better performance than FileStore because its journaling is internal and more lightweight. Mirantis supports BlueStore only for Ceph versions starting from Luminous.

For more information about Ceph backends, see Storage devices.

- BlueStore configuration

BlueStore uses a Write-Ahead Log (WAL) and a database (DB) to store the internal journal and other metadata. The WAL and DB may reside on a dedicated device or on the same device as the actual data (the primary device).

- Dedicated

Mirantis recommends using a dedicated WAL/DB device whenever possible, because it typically results in better storage performance. Use one write-optimized SSD for WAL/DB metadata per five primary HDDs.

- Colocated

WAL and Database metadata are stored on the primary device. Ceph will allocate space for data and metadata storage automatically. This configuration may result in slower storage performance in some environments.

- FileStore configuration

Mirantis recommends using dedicated write-optimized SSD devices for Ceph journal partitions. Use one journal device per five data storage devices.

It is possible to store the journal on the data storage devices. However, Mirantis does not recommend it unless special circumstances preclude the use of dedicated SSD journal devices.
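Both store types follow the same sizing rule of thumb: one write-optimized SSD per five primary HDDs. A minimal Python sketch, assuming that 1:5 ratio and hypothetical device names, that computes how many SSDs a node needs and assigns each HDD to an SSD:

```python
import math

# Ratio from the recommendations above: one write-optimized SSD per five
# primary HDDs (BlueStore WAL/DB) or data devices (FileStore journal).
HDDS_PER_SSD = 5


def ssds_needed(primary_hdds: int, hdds_per_ssd: int = HDDS_PER_SSD) -> int:
    """Return the number of dedicated SSDs needed for the given HDD count."""
    if primary_hdds < 0 or hdds_per_ssd < 1:
        raise ValueError("invalid device counts")
    return math.ceil(primary_hdds / hdds_per_ssd)


def assign_hdds(hdds: list[str], ssds: list[str],
                hdds_per_ssd: int = HDDS_PER_SSD) -> dict[str, str]:
    """Map each HDD to an SSD, placing at most hdds_per_ssd HDDs on each SSD."""
    if len(ssds) < ssds_needed(len(hdds), hdds_per_ssd):
        raise ValueError("not enough SSDs for the requested ratio")
    return {hdd: ssds[i // hdds_per_ssd] for i, hdd in enumerate(hdds)}


# Hypothetical node with 12 HDDs (/dev/sdb to /dev/sdm) and 3 NVMe SSDs.
hdds = [f"/dev/sd{letter}" for letter in "bcdefghijklm"]
ssds = ["/dev/nvme0n1", "/dev/nvme1n1", "/dev/nvme2n1"]
print(ssds_needed(len(hdds)))  # 3
for hdd, ssd in assign_hdds(hdds, ssds).items():
    print(hdd, "->", ssd)
```

Rounding up means a partially filled SSD still counts as a whole device; in this example, the third SSD serves only two HDDs.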

Note

MCP Reference Configuration uses the BlueStore store type by default.

- Ceph cluster networking

A Ceph cluster requires at least the front-side network, which is used for client connections (the public network in terms of Ceph documentation). Ceph Monitors and OSDs are always connected to the front-side network.

To improve the performance of replication operations, you can additionally set up the back-side network (the cluster network in terms of Ceph documentation), which is used for communication between OSDs. Mirantis recommends assigning a dedicated interface to the cluster network. For more details on Ceph cluster networking, see Ceph Network Configuration Reference.
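In upstream Ceph terms, the front-side and back-side networks correspond to the public_network and cluster_network options in the [global] section of ceph.conf. The Python sketch below renders such a section with the standard library. The subnets and the function name are hypothetical, and the check that the two networks do not overlap is an assumption of this sketch rather than a stated MCP requirement.

```python
import configparser
import io
import ipaddress
from typing import Optional


def render_network_config(public: str, cluster: Optional[str] = None) -> str:
    """Render a ceph.conf [global] section with public and cluster networks."""
    public_net = ipaddress.ip_network(public)
    cfg = configparser.ConfigParser()
    cfg["global"] = {"public_network": str(public_net)}
    if cluster is not None:
        cluster_net = ipaddress.ip_network(cluster)
        # Assumption: keep OSD replication traffic on a separate subnet.
        if public_net.overlaps(cluster_net):
            raise ValueError("public and cluster networks must not overlap")
        cfg["global"]["cluster_network"] = str(cluster_net)
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


# Front-side network only, then with an optional back-side network.
print(render_network_config("10.10.0.0/24"))
print(render_network_config("10.10.0.0/24", "10.20.0.0/24"))
```

Omitting the cluster argument yields a public-only configuration, which matches the minimum requirement described above.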

- Pool parameters

Set up each pool according to expected usage. Consider at least the following pool parameters:

- min_size sets the minimum number of replicas required to perform I/O on the pool.

- size sets the number of replicas for objects in the pool.

- type sets the pool type, which can be either replicated or erasure.
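A minimal Python sketch, assuming plain ceph CLI usage rather than the MCP deployment tooling, that validates these three parameters and prints the commands that would create and tune a pool. The PoolSpec class, the pool names, and the placement group count (pg_num) are hypothetical illustration values.

```python
from dataclasses import dataclass


@dataclass
class PoolSpec:
    """Planned settings for one Ceph pool (all values are illustrative)."""
    name: str
    pool_type: str      # "replicated" or "erasure"
    size: int           # replicas (for erasure pools: k+m of the profile)
    min_size: int       # replicas required to serve I/O
    pg_num: int = 128   # hypothetical placement group count

    def validate(self) -> None:
        if self.pool_type not in ("replicated", "erasure"):
            raise ValueError(f"unknown pool type: {self.pool_type}")
        if not 1 <= self.min_size <= self.size:
            raise ValueError("min_size must be between 1 and size")

    def commands(self) -> list[str]:
        """Return ceph CLI commands that would create and tune the pool."""
        self.validate()
        cmds = [f"ceph osd pool create {self.name} {self.pg_num} "
                f"{self.pg_num} {self.pool_type}"]
        # Erasure pools take their size from the erasure code profile,
        # so size is set explicitly only for replicated pools.
        if self.pool_type == "replicated":
            cmds.append(f"ceph osd pool set {self.name} size {self.size}")
        cmds.append(f"ceph osd pool set {self.name} min_size {self.min_size}")
        return cmds


for cmd in PoolSpec("volumes", "replicated", size=3, min_size=2).commands():
    print(cmd)
```

With size 3 and min_size 2, the pool keeps serving I/O while one replica is unavailable; lowering min_size to 1 trades that safety margin for availability.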

The following diagram describes the Reference Architecture of the Ceph cluster in MCP: