OpenStack compact cloud

The Compact Cloud is an OpenStack-based reference architecture for MCP. It is designed to give cloud tenants a generic public cloud user experience in terms of available virtual infrastructure capabilities and expectations. It features a reduced control plane footprint at the cost of reduced maximum capacity.

The compact reference architecture is designed to support up to 500 virtual servers or 50 hypervisor hosts. In addition to the desired number of hypervisors, three physical infrastructure servers are required for the control plane. These three servers host the OpenStack virtualized control plane (VCP), StackLight services, and virtual Neutron gateway nodes.

Note

Out of the box, the compact reference architecture supports only Neutron OVS networking for OpenStack. DVR is enabled by default.

OpenContrail is not supported out of the box in the compact reference architecture.

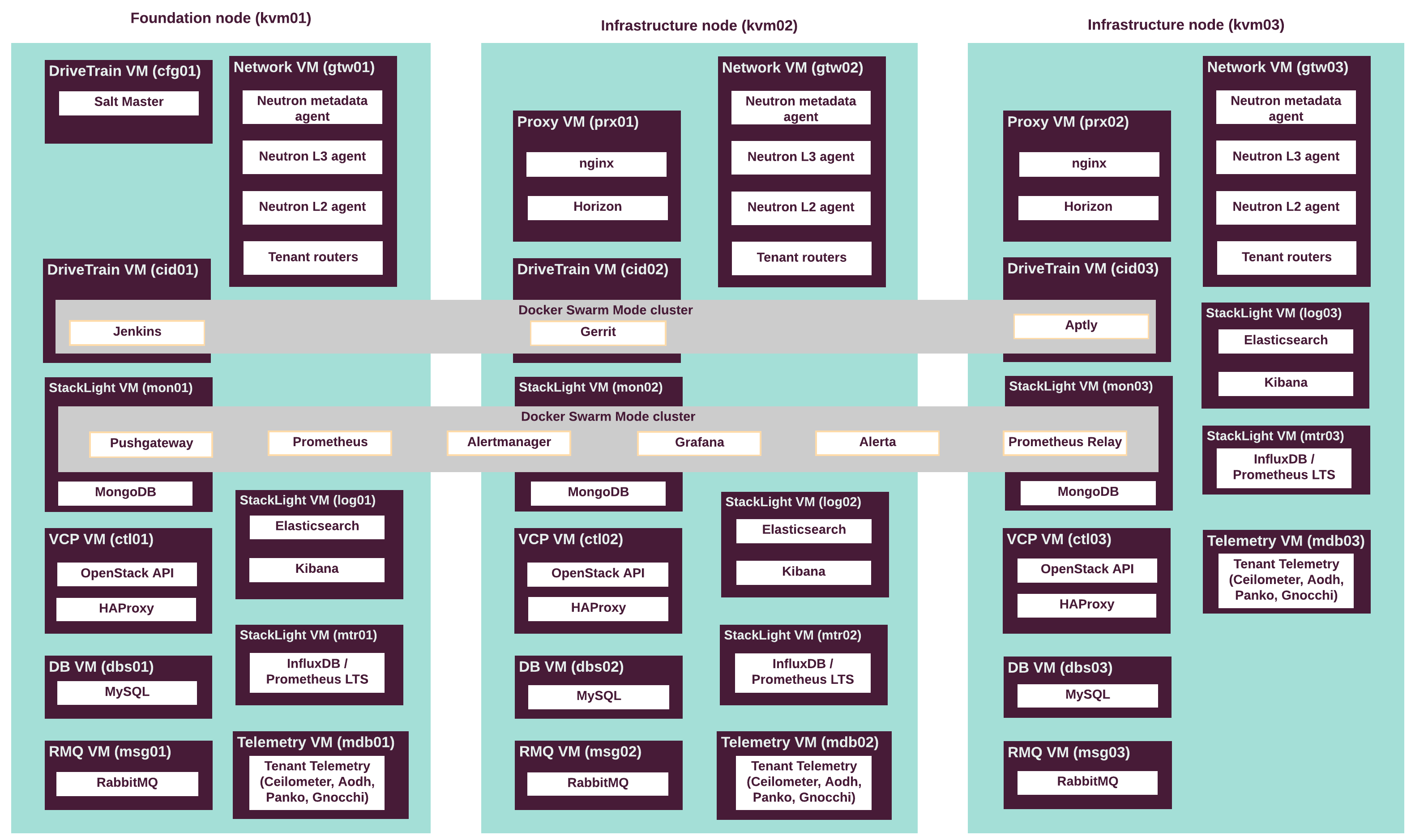

The following diagram describes the distribution of VCP and other services throughout the infrastructure nodes.

The following table describes the hardware nodes in the CPI reference architecture, roles assigned to them, and the number of nodes of each type.

| Node type | Role name | Number of servers |
|---|---|---|
| Infrastructure nodes (VCP) | kvm | 3 |
| OpenStack compute nodes | cmp | up to 50 |
| Staging infrastructure nodes | kvm | 3 |
| Staging compute nodes | cmp | 2 - 5 |
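The node counts above can be turned into a quick planning check. The following is a minimal sketch in Python, not part of MCP tooling: the function names are hypothetical, and it assumes that both documented limits (500 virtual servers and 50 compute nodes) must hold at the same time.

```python
# Limits and node counts copied from the text and the table above.
MAX_VIRTUAL_SERVERS = 500
MAX_COMPUTE_NODES = 50
INFRA_NODES = 3                 # kvm nodes hosting the VCP
STAGING_INFRA_NODES = 3         # kvm nodes of the staging environment
STAGING_COMPUTE_RANGE = (2, 5)  # cmp nodes of the staging environment


def fits_compact_cloud(virtual_servers, compute_nodes):
    """Return True if the planned workload stays within both limits.

    Treating the two limits as jointly required is an assumption.
    """
    return (virtual_servers <= MAX_VIRTUAL_SERVERS
            and compute_nodes <= MAX_COMPUTE_NODES)


def physical_server_count(compute_nodes, staging_compute_nodes=None):
    """Count physical servers; pass staging_compute_nodes to add staging."""
    if not 0 <= compute_nodes <= MAX_COMPUTE_NODES:
        raise ValueError("compute node count exceeds the compact cloud limit")
    total = INFRA_NODES + compute_nodes
    if staging_compute_nodes is not None:
        low, high = STAGING_COMPUTE_RANGE
        if not low <= staging_compute_nodes <= high:
            raise ValueError("staging compute node count must be 2 to 5")
        total += STAGING_INFRA_NODES + staging_compute_nodes
    return total
```

For example, `physical_server_count(50)` covers a production environment at maximum size, and `physical_server_count(50, 5)` adds the largest staging environment from the table.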

The following table summarizes the VCP virtual machines mapped to physical servers.

| Virtual server roles | Physical servers | # of instances | CPU vCores per instance | Memory (GB) per instance | Disk space (GB) per instance |
|---|---|---|---|---|---|
| ctl | kvm01, kvm02, kvm03 | 3 | 8 | 32 | 100 |
| msg | kvm01, kvm02, kvm03 | 3 | 8 | 32 | 100 |
| dbs | kvm01, kvm02, kvm03 | 3 | 8 | 16 | 100 |
| prx | kvm02, kvm03 | 2 | 4 | 8 | 50 |
| cfg | kvm01 | 1 | 2 | 8 | 50 |
| mon | kvm01, kvm02, kvm03 | 3 | 4 | 16 | 500 |
| mtr | kvm01, kvm02, kvm03 | 3 | 4 | 32 | 1000 |
| log | kvm01, kvm02, kvm03 | 3 | 4 | 32 | 2000 |
| cid | kvm01, kvm02, kvm03 | 3 | 8 | 32 | 100 |
| gtw | kvm01, kvm02, kvm03 | 3 | 4 | 16 | 50 |
| mdb | kvm01, kvm02, kvm03 | 3 | 4 | 8 | 150 |
| TOTAL | | 30 | 166 | 672 | 12450 |
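The TOTAL row is the sum over all instances, not over per-instance values. As a rough illustration, the following Python sketch recomputes the totals from the table and also breaks the load down per infrastructure node. The function names are hypothetical, and the per-host breakdown assumes that each physical server listed for a role hosts exactly one instance of that role.

```python
from collections import defaultdict

ALL_KVM = ("kvm01", "kvm02", "kvm03")

# (role, physical servers, vCPU, memory GB, disk GB); per-instance values
# copied from the table above.
VCP = [
    ("ctl", ALL_KVM, 8, 32, 100),
    ("msg", ALL_KVM, 8, 32, 100),
    ("dbs", ALL_KVM, 8, 16, 100),
    ("prx", ("kvm02", "kvm03"), 4, 8, 50),
    ("cfg", ("kvm01",), 2, 8, 50),
    ("mon", ALL_KVM, 4, 16, 500),
    ("mtr", ALL_KVM, 4, 32, 1000),
    ("log", ALL_KVM, 4, 32, 2000),
    ("cid", ALL_KVM, 8, 32, 100),
    ("gtw", ALL_KVM, 4, 16, 50),
    ("mdb", ALL_KVM, 4, 8, 150),
]


def cluster_totals(vcp):
    """Sum instances and resources over every VCP virtual machine."""
    total = {"instances": 0, "vcpu": 0, "memory_gb": 0, "disk_gb": 0}
    for _role, hosts, vcpu, memory_gb, disk_gb in vcp:
        count = len(hosts)  # assumption: one instance per listed server
        total["instances"] += count
        total["vcpu"] += count * vcpu
        total["memory_gb"] += count * memory_gb
        total["disk_gb"] += count * disk_gb
    return total


def per_host_load(vcp):
    """Sum the resources of the VMs placed on each physical server."""
    load = defaultdict(lambda: {"vcpu": 0, "memory_gb": 0, "disk_gb": 0})
    for _role, hosts, vcpu, memory_gb, disk_gb in vcp:
        for host in hosts:
            load[host]["vcpu"] += vcpu
            load[host]["memory_gb"] += memory_gb
            load[host]["disk_gb"] += disk_gb
    return dict(load)
```

Under that placement assumption, `cluster_totals(VCP)` reproduces the TOTAL row, and `per_host_load(VCP)` gives 224 GB of memory and 4150 GB of disk per infrastructure node, with 54 vCPUs on kvm01 and 56 on kvm02 and kvm03.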

Note

- The `gtw` VM should have four separate NICs for the following interfaces: `dhcp`, `primary`, `tenant`, and `external`. It simplifies the host networking as you do not need to pass VLANs to VMs.
- The `prx` VM should have an additional NIC for the proxy network.
- All other nodes should have two NICs for DHCP and primary networks.
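The NIC rules in this note can be expressed as a small lookup. This is only an illustrative Python sketch: the helper name and the `proxy` interface label are hypothetical, and it reads "additional NIC" as one NIC on top of the two that every node has.

```python
# Every VCP node gets these two NICs according to the note above.
BASE_NICS = ("dhcp", "primary")

# Extra NICs per role. The "proxy" label is a placeholder name for the
# NIC attached to the proxy network, not an identifier from MCP.
EXTRA_NICS = {
    "gtw": ("tenant", "external"),
    "prx": ("proxy",),
}


def nics_for_role(role):
    """Return the tuple of NICs expected for a VCP role."""
    return BASE_NICS + EXTRA_NICS.get(role, ())
```

With this mapping, `nics_for_role("gtw")` lists four interfaces, `nics_for_role("prx")` lists three, and any other role such as `ctl` gets the two base interfaces.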

See also

- Control plane virtual machines for the details on the functions of nodes of each type.

- Hardware requirements for Cloud Provider Infrastructure for the reference hardware configuration for each type of node.