Types of networks

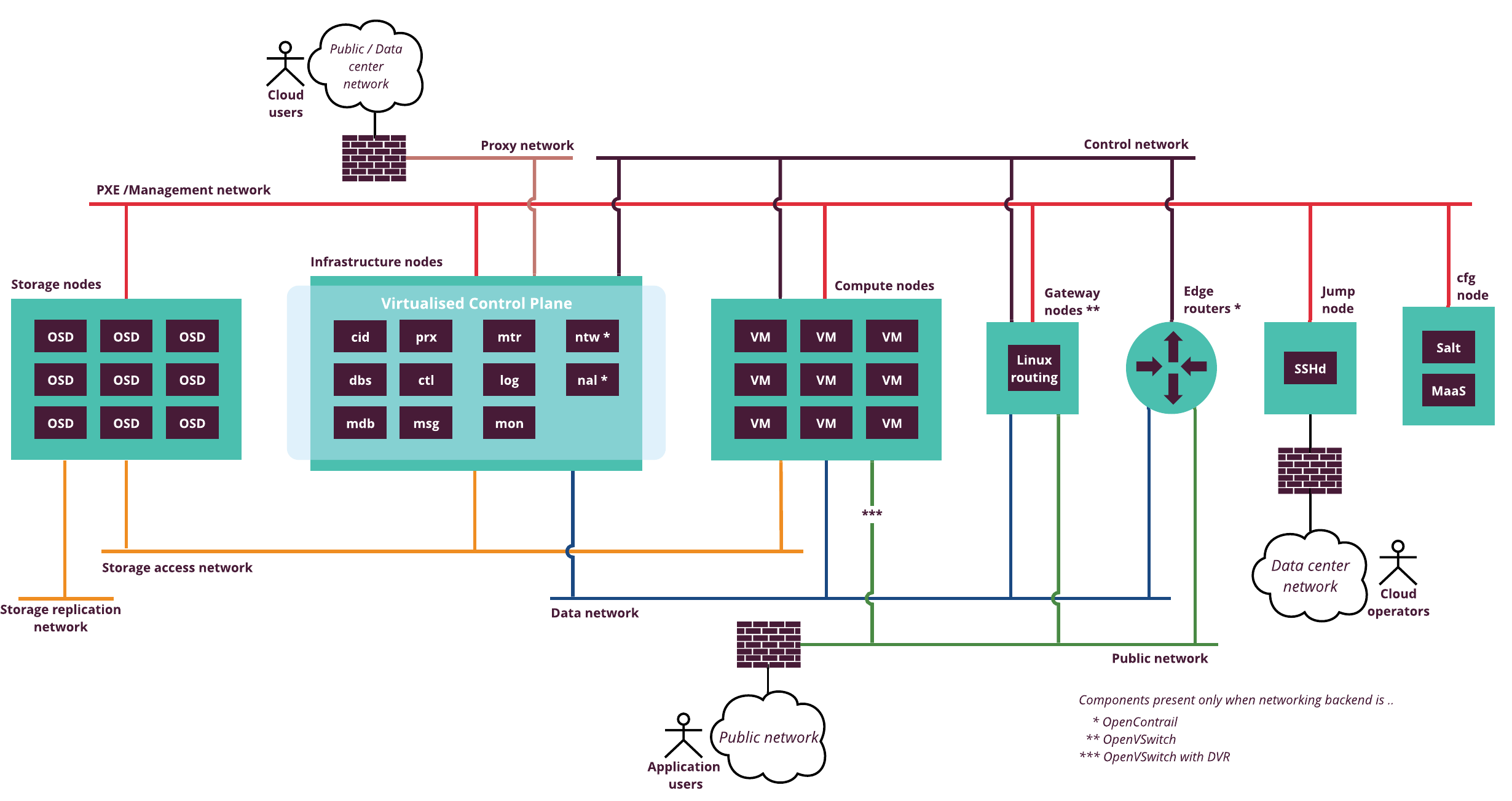

The following diagram provides an overview of the underlay networks in an OpenStack environment:

An OpenStack environment typically uses the following types of networks:

Underlay networks for OpenStack are required to build the network infrastructure and related components. For details on the physical network infrastructure for the CPI reference architecture, see Networking.

PXE / Management

This network is used by SaltStack and MAAS to serve deployment and provisioning traffic, as well as to communicate with nodes after deployment. After an OpenStack environment is deployed, this network carries little traffic. Therefore, a dedicated 1 Gbit network interface is sufficient. The size of the network also depends on the number of hosts managed by MAAS and SaltStack.
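As a rough illustration of how the host count drives the size of the PXE / Management network, the following sketch computes the smallest IPv4 subnet that fits a given number of managed hosts. The function name and the single reserved address (for example, a gateway) are assumptions made for this example and do not come from MAAS or SaltStack.

```python
def smallest_ipv4_prefix(host_count, reserved=1):
    """Return the longest IPv4 prefix length that fits host_count hosts.

    `reserved` is a hypothetical allowance for extra addresses such as a
    gateway; adjust it to match your own addressing plan.
    """
    needed = host_count + reserved
    for prefix in range(30, -1, -1):
        # Usable addresses exclude the network and broadcast addresses.
        usable = 2 ** (32 - prefix) - 2
        if usable >= needed:
            return prefix
    raise ValueError("too many hosts for a single IPv4 subnet")


print(smallest_ipv4_prefix(200))  # 24
```

In practice, you would likely add headroom for planned growth before choosing the prefix.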

Public

Virtual machines access the Internet through the Public network, which provides connectivity to the globally routed address space for VMs. In addition, the Public network provides a neighboring address range for floating IPs that are assigned to individual VM instances by the project administrator.

Proxy

This network is used for network traffic created by Horizon and for OpenStack API access. The Proxy network requires routing. Typically, two proxy nodes with Keepalived VIPs are present in this network, so a /29 network is sufficient. In some use cases, you can use the Proxy network as the Public network.
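The /29 sizing can be checked with simple arithmetic: a /29 contains eight addresses, six of which are usable. The sketch below uses the Python standard library `ipaddress` module. The example subnet, the number of VIPs, and the single gateway address are assumptions for illustration only.

```python
import ipaddress


def fits(cidr, nodes, vips, gateways=1):
    """Check whether a subnet has enough usable addresses.

    Assumes one address per node, per VIP, and per gateway.
    """
    network = ipaddress.ip_network(cidr)
    # Exclude the network and broadcast addresses.
    usable = network.num_addresses - 2
    return nodes + vips + gateways <= usable


# Hypothetical documentation subnet; two proxy nodes, two Keepalived VIPs.
print(fits("192.0.2.0/29", nodes=2, vips=2))  # True
print(fits("192.0.2.0/30", nodes=2, vips=2))  # False
```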

Control

This network is used for internal communication between the components of the OpenStack environment. All nodes are connected to this network, including the VCP virtual machines and KVM nodes. OpenStack control services communicate through the Control network. This network requires routing.

Data

This network is used to build a network overlay. All tenant networks, including floating IP, fixed with RT, and private networks, are carried over this underlay network. VXLAN encapsulation is used by default in the CPI reference architecture.

The Data network does not require external routing by default. Routing for tenant networks is handled by Neutron gateway nodes. Routing for the Data underlay network may be required if you want to access your workloads directly from the corporate network (not through floating IP addresses). In this case, external routers that are not managed by MCP are required.
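Because tenant traffic is VXLAN-encapsulated on the Data network, the MTU available to tenant networks is smaller than the underlay MTU. The following sketch shows the arithmetic, assuming an untagged inner frame and standard header sizes. The function names are illustrative, and the MTU values are examples rather than settings taken from the CPI reference architecture.

```python
# Bytes added inside each underlay IP packet by VXLAN encapsulation.
INNER_ETHERNET = 14  # encapsulated tenant Ethernet header (no VLAN tag)
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20
OUTER_IPV6 = 40


def vxlan_overhead(outer_ipv6=False):
    """Return the per-packet VXLAN overhead in bytes."""
    outer_ip = OUTER_IPV6 if outer_ipv6 else OUTER_IPV4
    return outer_ip + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET


def tenant_mtu(underlay_mtu, outer_ipv6=False):
    """Return the largest tenant MTU that fits in the underlay MTU."""
    return underlay_mtu - vxlan_overhead(outer_ipv6)


print(tenant_mtu(1500))  # 1450
print(tenant_mtu(9000))  # 8950
```

Raising the underlay MTU on the Data network (for example, with jumbo frames) is one way to keep a 1500-byte MTU inside tenant networks.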

Storage access (optional)

This network is used to access Ceph storage servers (OSD nodes). The network does not need to be accessible from outside the cloud environment. However, Mirantis recommends that you reserve a dedicated and redundant 10 Gbit network connection to ensure low latency and fast access to the block storage volumes. You can configure this network with routing for L3 connectivity or without routing. If you configure this network without routing, you must ensure additional L2 connectivity to nodes that use Ceph.

Storage replication (optional)

This network is used for copying data between OSD nodes in a Ceph cluster. The network does not require access from outside the OpenStack environment. However, Mirantis recommends that you reserve a dedicated and redundant 10 Gbit network connection to accommodate high replication traffic. Use routing only if a rack-level L2 boundary is required or if you want to configure smaller broadcast domains (subnets).

Virtual networks inside OpenStack

Virtual networks inside OpenStack include virtual public and internal networks. Virtual public network connects to the underlay public network. Virtual internal networks exist within the underlay data network. Typically, you need multiple virtual networks of both types to address the requirements of your workloads.

In the reference architecture for Cloud Provider Infrastructure, isolated virtual or physical networks must be configured for PXE and Proxy traffic. For the PXE network, a 1 GbE interface is required. For the Proxy, Data, and Control networks, CPI uses 10 GbE interfaces bonded together and split into VLANs, one VLAN per network. For the Storage networks, CPI requires a dedicated pair of 10 GbE interfaces bonded together for increased performance and resiliency. The Storage access and Storage replication networks may be divided into separate VLANs on top of the dedicated bond interface.
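The interface layout described above can be written down as a small data structure and checked for consistency. This is only a sketch: the interface names (`eno1`, `bond0`, `bond1`), the VLAN IDs, and the `check_layout` helper are hypothetical and are not part of MCP or its deployment models.

```python
from collections import Counter

# Hypothetical interface names and VLAN IDs, chosen only for illustration.
LAYOUT = {
    "pxe": {"parent": "eno1", "vlan": None},
    "proxy": {"parent": "bond0", "vlan": 100},
    "control": {"parent": "bond0", "vlan": 101},
    "data": {"parent": "bond0", "vlan": 102},
    "storage_access": {"parent": "bond1", "vlan": 200},
    "storage_replication": {"parent": "bond1", "vlan": 201},
}

SHARED = {"proxy", "control", "data"}
STORAGE = {"storage_access", "storage_replication"}


def check_layout(layout):
    """Return a list of problems found in the layout (empty if none)."""
    problems = []
    # Proxy, Control, and Data: one VLAN per network on the shared bond.
    pairs = Counter(
        (layout[name]["parent"], layout[name]["vlan"])
        for name in SHARED if name in layout
    )
    for (parent, vlan), count in pairs.items():
        if count > 1:
            problems.append(f"{parent} VLAN {vlan} is used by {count} networks")
    shared_parents = {layout[n]["parent"] for n in SHARED if n in layout}
    storage_parents = {layout[n]["parent"] for n in STORAGE if n in layout}
    # Storage networks need their own dedicated bond.
    if shared_parents & storage_parents:
        problems.append("storage networks share a bond with proxy/control/data")
    # PXE needs its own interface.
    if "pxe" in layout and layout["pxe"]["parent"] in (shared_parents | storage_parents):
        problems.append("PXE is not on its own interface")
    return problems


print(check_layout(LAYOUT))  # []
```

The storage networks are deliberately excluded from the VLAN uniqueness check, because separating Storage access and Storage replication into different VLANs is optional.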
