CephOsdRemoveRequest OSD removal status

Warning

This procedure is valid for MOSK clusters that use the MiraCeph custom resource (CR), which has been available since MOSK 25.2 and replaces the deprecated KaaSCephCluster CR. For the equivalent procedure with the KaaSCephCluster CR, refer to the corresponding KaaSCephCluster section of the documentation.

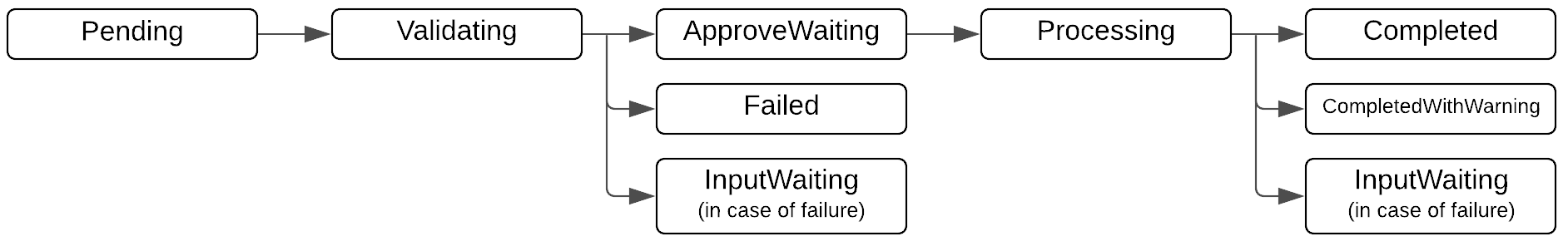

This section describes the status fields of the

CephOsdRemoveRequest CR that you can use to review a Ceph OSD or node

removal phases. The following diagram represents the phases flow:

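| Parameter | Description |
|---|---|
| phase | Describes the current request phase. The possible phases are shown in the phase flow diagram above. |
| removeInfo | The overall information about the Ceph OSDs to remove: final removal map, issues, and warnings. After the request is processed, it also carries the removal status of each node and Ceph OSD, as the examples below show. |
| | Informational messages describing the reason for the request transition to the next phase. |
| | History of spec updates for the request. |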

Example of status.removeInfo after successful Validation

    removeInfo:
      cleanUpMap:
        "node-a":
          completeCleanUp: true
          osdMapping:
            "2":
              deviceMapping:
                "sdb":
                  path: "/dev/disk/by-path/pci-0000:00:0a.0"
                  partition: "/dev/ceph-a-vg_sdb/osd-block-b-lv_sdb"
                  type: "block"
                  class: "hdd"
                  zapDisk: true
            "6":
              deviceMapping:
                "sdc":
                  path: "/dev/disk/by-path/pci-0000:00:0c.0"
                  partition: "/dev/ceph-a-vg_sdc/osd-block-b-lv_sdc-1"
                  type: "block"
                  class: "hdd"
                  zapDisk: true
            "11":
              deviceMapping:
                "sdc":
                  path: "/dev/disk/by-path/pci-0000:00:0c.0"
                  partition: "/dev/ceph-a-vg_sdc/osd-block-b-lv_sdc-2"
                  type: "block"
                  class: "hdd"
                  zapDisk: true
        "node-b":
          osdMapping:
            "1":
              deviceMapping:
                "sdb":
                  path: "/dev/disk/by-path/pci-0000:00:0a.0"
                  partition: "/dev/ceph-b-vg_sdb/osd-block-b-lv_sdb"
                  type: "block"
                  class: "ssd"
                  zapDisk: true
            "15":
              deviceMapping:
                "sdc":
                  path: "/dev/disk/by-path/pci-0000:00:0b.1"
                  partition: "/dev/ceph-b-vg_sdc/osd-block-b-lv_sdc"
                  type: "block"
                  class: "ssd"
                  zapDisk: true
            "25":
              deviceMapping:
                "sdd":
                  path: "/dev/disk/by-path/pci-0000:00:0c.2"
                  partition: "/dev/ceph-b-vg_sdd/osd-block-b-lv_sdd"
                  type: "block"
                  class: "ssd"
                  zapDisk: true
        "node-c":
          osdMapping:
            "0":
              deviceMapping:
                "sdb":
                  path: "/dev/disk/by-path/pci-0000:00:1t.9"
                  partition: "/dev/ceph-c-vg_sdb/osd-block-c-lv_sdb"
                  type: "block"
                  class: "hdd"
                  zapDisk: true
            "8":
              deviceMapping:
                "sde":
                  path: "/dev/disk/by-path/pci-0000:00:1c.5"
                  partition: "/dev/ceph-c-vg_sde/osd-block-c-lv_sde"
                  type: "block"
                  class: "hdd"
                  zapDisk: true
                "sdf":
                  path: "/dev/disk/by-path/pci-0000:00:5a.5"
                  partition: "/dev/ceph-c-vg_sdf/osd-db-c-lv_sdf-1"
                  type: "db"
                  class: "ssd"

The example above is based on the example spec provided in CephOsdRemoveRequest OSD removal specification. During the Validation phase, the provided information was validated and now reflects the final map of the Ceph OSDs to remove:

- For node-a, the Ceph OSDs with IDs 2, 6, and 11 will be removed along with the related disk information: all block devices, names, paths, and disk class.
- For node-b, the Ceph OSDs with IDs 1, 15, and 25 will be removed along with the related disk information.
- For node-c, the Ceph OSDs with IDs 0 and 8 will be removed, which are placed on the sdb and sde devices, respectively. The related partition on the sdf disk, which is used as the BlueStore metadata device, will be cleaned up, keeping the disk itself untouched. Other partitions on that device will not be touched.
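When a removal map covers many nodes, it can help to condense it before approving the request. The following Python sketch is an illustration only: it assumes that status.removeInfo has already been loaded into a dictionary (for example, parsed from the JSON or YAML output of the CR), and summarize_removal_map is a hypothetical helper name, not part of any MOSK tooling. The sample data is an abridged copy of the node-c part of the example above.

```python
def summarize_removal_map(remove_info):
    """Return {node: {osd_id: [(device_name, device_type), ...]}}."""
    summary = {}
    for node, node_info in remove_info.get("cleanUpMap", {}).items():
        osds = {}
        for osd_id, osd_info in node_info.get("osdMapping", {}).items():
            devices = osd_info.get("deviceMapping", {})
            # Keep only the device name and its role (block, db, ...).
            osds[osd_id] = [(name, dev.get("type")) for name, dev in devices.items()]
        summary[node] = osds
    return summary


# Abridged copy of the node-c entry from the validated removeInfo example.
remove_info = {
    "cleanUpMap": {
        "node-c": {
            "osdMapping": {
                "0": {"deviceMapping": {
                    "sdb": {"type": "block", "class": "hdd", "zapDisk": True},
                }},
                "8": {"deviceMapping": {
                    "sde": {"type": "block", "class": "hdd", "zapDisk": True},
                    "sdf": {"type": "db", "class": "ssd"},
                }},
            }
        }
    }
}

for node, osds in summarize_removal_map(remove_info).items():
    for osd_id, devices in osds.items():
        print(node, osd_id, devices)
```

The summary makes it easy to spot Ceph OSDs that span more than one device, such as a block device plus a separate metadata partition.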

Example of removeInfo with removeStatus succeeded

    removeInfo:
      cleanUpMap:
        "node-a":
          completeCleanUp: true
          hostRemoveStatus:
            status: Removed
          osdMapping:
            "2":
              removeStatus:
                osdRemoveStatus:
                  status: Removed
                deploymentRemoveStatus:
                  status: Removed
                  name: "rook-ceph-osd-2"
                deviceCleanUpJob:
                  status: Finished
                  name: "job-name-for-osd-2"
              deviceMapping:
                "sdb":
                  path: "/dev/disk/by-path/pci-0000:00:0a.0"
                  partition: "/dev/ceph-a-vg_sdb/osd-block-b-lv_sdb"
                  type: "block"
                  class: "hdd"
                  zapDisk: true

Example of removeInfo with removeStatus failed

    removeInfo:
      cleanUpMap:
        "node-a":
          completeCleanUp: true
          osdMapping:
            "2":
              removeStatus:
                osdRemoveStatus:
                  errorReason: "retries for cmd 'ceph osd ok-to-stop 2' exceeded"
                  status: Failed
              deviceMapping:
                "sdb":
                  path: "/dev/disk/by-path/pci-0000:00:0a.0"
                  partition: "/dev/ceph-a-vg_sdb/osd-block-b-lv_sdb"
                  type: "block"
                  class: "hdd"
                  zapDisk: true

Example of removeInfo with removeStatus failed by timeout

    removeInfo:
      cleanUpMap:
        "node-a":
          completeCleanUp: true
          osdMapping:
            "2":
              removeStatus:
                osdRemoveStatus:
                  errorReason: Timeout (30m0s) reached for waiting pg rebalance for osd 2
                  status: Failed
              deviceMapping:
                "sdb":
                  path: "/dev/disk/by-path/pci-0000:00:0a.0"
                  partition: "/dev/ceph-a-vg_sdb/osd-block-b-lv_sdb"
                  type: "block"
                  class: "hdd"
                  zapDisk: true

Note

In case of failures similar to the examples above, review the ceph-request-controller logs and the Ceph cluster status. Such failures may simply indicate timeout and retry issues. If no other issues are found, re-create the request with a new name and omit the Ceph OSDs or Ceph nodes that were already removed successfully.
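To decide which Ceph OSDs to carry over into the re-created request, the removal statuses can be filtered programmatically. The following Python sketch is a rough illustration under stated assumptions: status.removeInfo is already loaded into a dictionary, the value Removed is treated as the only successful osdRemoveStatus (as in the examples above; other values may exist), and find_unfinished_osds is a hypothetical helper name. The entry for OSD 6 in the sample data is invented for the illustration.

```python
def find_unfinished_osds(remove_info):
    """Return {node: {osd_id: reason}} for OSDs not reported as Removed."""
    unfinished = {}
    for node, node_info in remove_info.get("cleanUpMap", {}).items():
        for osd_id, osd_info in node_info.get("osdMapping", {}).items():
            osd_status = osd_info.get("removeStatus", {}).get("osdRemoveStatus", {})
            # Assumption: "Removed" is the only value that means success.
            if osd_status.get("status") != "Removed":
                reason = osd_status.get("errorReason", "no removal status reported")
                unfinished.setdefault(node, {})[osd_id] = reason
    return unfinished


# OSD 2 mirrors the timeout example above; OSD 6 is hypothetical sample data.
remove_info = {
    "cleanUpMap": {
        "node-a": {
            "osdMapping": {
                "2": {"removeStatus": {"osdRemoveStatus": {
                    "status": "Failed",
                    "errorReason": "Timeout (30m0s) reached for waiting pg rebalance for osd 2",
                }}},
                "6": {"removeStatus": {"osdRemoveStatus": {"status": "Removed"}}},
            }
        }
    }
}

for node, osds in find_unfinished_osds(remove_info).items():
    for osd_id, reason in osds.items():
        print(f"{node}: osd {osd_id} not removed ({reason})")
```

Only the Ceph OSDs returned by the helper would need to appear in the new request. Review the reported reasons against the controller logs before retrying.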