OpenStack cluster

OpenStack and auxiliary services are running as containers in the `kind: Pod` Kubernetes resources. All long-running services are governed by one of the ReplicationController-enabled Kubernetes resources, which include either `kind: Deployment`, `kind: StatefulSet`, or `kind: DaemonSet`.

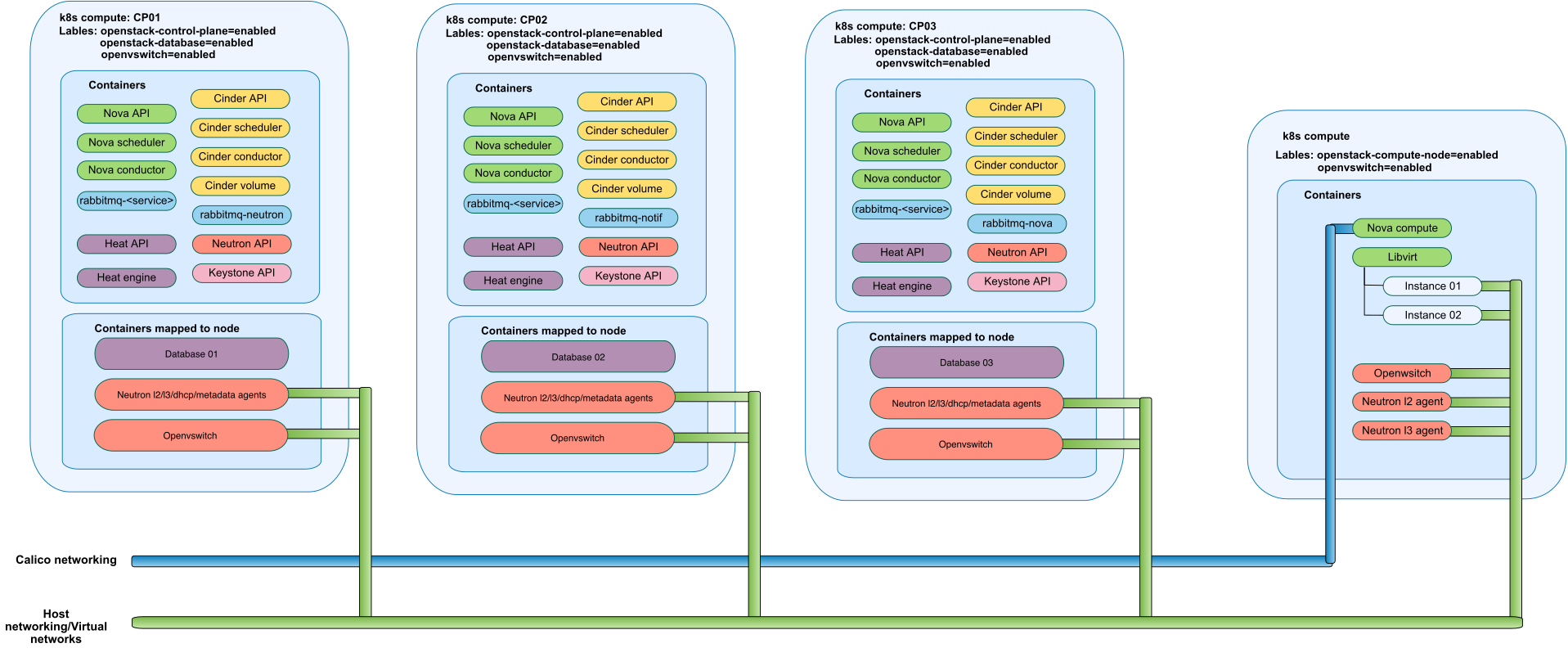

The placement of the services is mostly governed by the Kubernetes node labels. The labels affecting the OpenStack services include:

- `openstack-control-plane=enabled` - the node hosting most of the OpenStack control plane services.
- `openstack-compute-node=enabled` - the node serving as a hypervisor for Nova. The virtual machines with tenant workloads are created there.
- `openvswitch=enabled` - the node hosting Neutron L2 agents and Open vSwitch pods that manage L2 connection of the OpenStack networks.
- `openstack-gateway=enabled` - the node hosting Neutron L3, Metadata, and DHCP agents, and the Octavia Health Manager, Worker, and Housekeeping components.
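As an illustration of how these labels drive placement, the following Python sketch maps a node's labels to the roles it takes on and builds the `nodeSelector` fragment a pod spec could use to target a role. The label names come from the list above; the function names and the role descriptions are hypothetical and are not part of MOSK.

```python
# Label names are taken from the documentation; descriptions are paraphrased.
ROLE_BY_LABEL = {
    "openstack-control-plane": "OpenStack control plane services",
    "openstack-compute-node": "Nova hypervisor (tenant virtual machines)",
    "openvswitch": "Neutron L2 agents and Open vSwitch pods",
    "openstack-gateway": "Neutron L3, Metadata, and DHCP agents; Octavia components",
}


def roles_for_node(labels):
    """Return the roles a node takes on, given its Kubernetes labels as a dict."""
    return [
        role
        for label, role in ROLE_BY_LABEL.items()
        if labels.get(label) == "enabled"
    ]


def node_selector(label):
    """Build a nodeSelector fragment that targets nodes carrying the given label."""
    if label not in ROLE_BY_LABEL:
        raise ValueError(f"unknown OpenStack node label: {label}")
    return {"nodeSelector": {label: "enabled"}}


if __name__ == "__main__":
    # Hypothetical node that acts as both a compute and an Open vSwitch node.
    node_labels = {"openstack-compute-node": "enabled", "openvswitch": "enabled"}
    print(roles_for_node(node_labels))
    print(node_selector("openstack-gateway"))
```

A node can carry several of these labels at once, which is why the lookup returns a list rather than a single role.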

Note

OpenStack is an infrastructure management platform. Mirantis OpenStack for Kubernetes (MOSK) uses Kubernetes mostly for orchestration and dependency isolation. As a result, multiple OpenStack services are running as privileged containers with host PIDs and Host Networking enabled. You must ensure that at least the user with the credentials used by Helm/Tiller (administrator) is capable of creating such Pods.
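To make the note concrete, the sketch below inspects a pod spec (as a plain Python dict) and reports which elevated settings it requests. The field names `hostPID`, `hostNetwork`, and `securityContext.privileged` are standard Kubernetes pod spec fields; the function name, the container name, and the image reference are hypothetical placeholders.

```python
def elevated_settings(pod_spec):
    """Return the elevated settings requested by a pod spec dict."""
    reasons = []
    if pod_spec.get("hostPID"):
        reasons.append("hostPID")
    if pod_spec.get("hostNetwork"):
        reasons.append("hostNetwork")
    # Privileged mode is set per container, so check regular and init containers.
    containers = pod_spec.get("containers", []) + pod_spec.get("initContainers", [])
    if any(c.get("securityContext", {}).get("privileged") for c in containers):
        reasons.append("privileged")
    return reasons


if __name__ == "__main__":
    # Hypothetical spec resembling the kind of pod described in the note.
    spec = {
        "hostPID": True,
        "hostNetwork": True,
        "containers": [
            {
                "name": "example-agent",          # placeholder name
                "image": "example/image:tag",     # placeholder image
                "securityContext": {"privileged": True},
            }
        ],
    }
    print(elevated_settings(spec))
```

A non-empty result means the account that creates the pod needs permission to run pods with those settings in the cluster.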

Infrastructure services

| Service | Description |
|---|---|
| Storage | While the underlying Kubernetes cluster is configured to use Ceph CSI for providing persistent storage for container workloads, for some types of workloads such networked storage is suboptimal due to latency. This is why the separate |
| Database | A single WSREP (Galera) cluster of MariaDB is deployed as the SQL database to be used by all OpenStack services. It uses the storage class provided by Local Volume Provisioner to store the actual database files. The service is deployed as |
| Messaging | RabbitMQ is used as a messaging bus between the components of the OpenStack services. A separate instance of RabbitMQ is deployed for each OpenStack service that needs a messaging bus for intercommunication between its components. An additional, separate RabbitMQ instance is deployed to serve as a notification message bus where OpenStack services post their own notifications and listen to notifications from other services. StackLight also uses this message bus to collect notifications for monitoring purposes. Each RabbitMQ instance is a single node and is deployed as |
| Caching | A single multi-instance Memcached service is deployed to be used by all OpenStack services that need caching, which are mostly HTTP API services. |
| Coordination | A separate instance of etcd is deployed to be used by Cinder, which requires Distributed Lock Management for coordination between its components. |
| Ingress | Is deployed as |
| Image pre-caching | A special This is especially useful for containers used in |

OpenStack services

| Service | Description |
|---|---|
| Identity (Keystone) | Uses MySQL backend by default. |
| Image (Glance) | Supported backend is RBD (Ceph is required). |
| Volume (Cinder) | Supported backend is RBD (Ceph is required). |
| Network (Neutron) | Supported backends are Open vSwitch, Open Virtual Network, and Tungsten Fabric. |
| Placement | |
| Compute (Nova) | Supported hypervisor is QEMU/KVM through the libvirt library. |
| Dashboard (Horizon) | |
| DNS (Designate) | Supported backend is PowerDNS. |
| Load Balancer (Octavia) | |
| Ceph Object Gateway (SWIFT) | Provides the object storage, the Ceph Object Gateway S3 API, and the Ceph Object Gateway Swift API that is compatible with the OpenStack Swift API. You can manually enable the service in the |
| Instance HA (Masakari) | An OpenStack service that ensures high availability of instances running on a host. You can manually enable Masakari in the |
| Orchestration (Heat) | |
| Key Manager (Barbican) | The supported backends include: |
| Tempest | Runs tests against a deployed OpenStack cloud. You can manually enable Tempest in the |
| Shared Filesystems (OpenStack Manila) | Provides Shared Filesystems as a service that enables you to create and manage shared filesystems in multi-project cloud environments. For details, refer to Shared Filesystems service. |

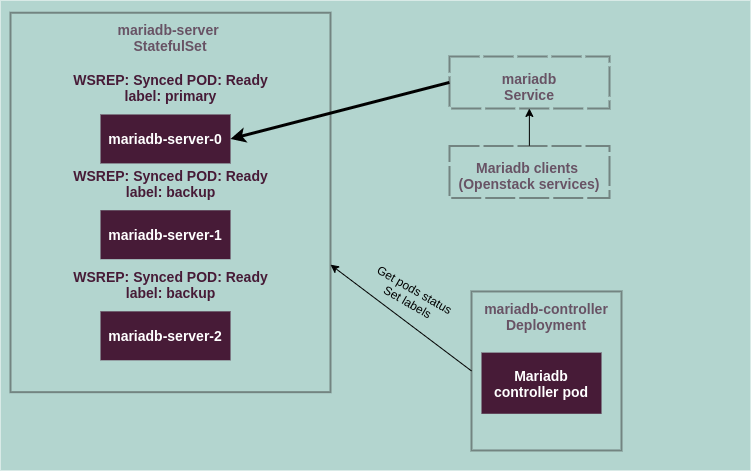

OpenStack database architecture¶

A complete setup of a MariaDB Galera cluster for OpenStack is illustrated in the following image:

MariaDB server pods run a Galera multi-master cluster. Client requests are forwarded by the Kubernetes `mariadb` service to the `mariadb-server` pod that has the `primary` label. Other pods from the `mariadb-server` StatefulSet have the `backup` label. Labels are managed by the `mariadb-controller` pod.

The MariaDB Controller periodically checks the readiness of the `mariadb-server` pods and sets the `primary` label on a pod if the following requirements are met:

- The `primary` label has not already been set on the pod.
- The pod is in the ready state.
- The pod is not being terminated.
- The pod name has the lowest integer suffix among other ready pods in the StatefulSet. For example, between `mariadb-server-1` and `mariadb-server-2`, the pod with the `mariadb-server-1` name is preferred.

Otherwise, the MariaDB Controller sets the `backup` label. This means that all SQL requests are passed to only one node, while the other two nodes are in the backup state and replicate the state from the primary node.

The MariaDB clients connect to the `mariadb` service.
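The label selection rules described above can be sketched in Python as follows. This is a minimal illustration of the documented rules and not the actual MariaDB Controller code: the `PodState` type and the function names are hypothetical, and treating the "label has not already been set" requirement as "skip pods whose label is already correct" is an assumption.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class PodState:
    """Hypothetical snapshot of a mariadb-server pod as a controller might see it."""
    name: str
    ready: bool
    terminating: bool = False
    label: Optional[str] = None  # current label: "primary", "backup", or None


def ordinal(pod_name):
    """Extract the integer suffix of a StatefulSet pod name, e.g. 1 for mariadb-server-1."""
    match = re.search(r"-(\d+)$", pod_name)
    if match is None:
        raise ValueError(f"pod name has no integer suffix: {pod_name}")
    return int(match.group(1))


def desired_labels(pods):
    """Pick the ready, non-terminating pod with the lowest suffix as primary."""
    candidates = [p for p in pods if p.ready and not p.terminating]
    primary = min(candidates, key=lambda p: ordinal(p.name), default=None)
    return {p.name: ("primary" if p is primary else "backup") for p in pods}


def label_updates(pods):
    """Return only the label changes needed (assumption: correct labels are left alone)."""
    desired = desired_labels(pods)
    return {p.name: desired[p.name] for p in pods if p.label != desired[p.name]}


if __name__ == "__main__":
    pods = [
        PodState("mariadb-server-0", ready=False),
        PodState("mariadb-server-1", ready=True),
        PodState("mariadb-server-2", ready=True),
    ]
    print(desired_labels(pods))
```

In this example `mariadb-server-0` is not ready, so `mariadb-server-1` is selected as primary and the remaining pods are labeled as backup. If no pod is ready, every pod ends up with the backup label.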