Mirantis Container Cloud (MCC) becomes part of Mirantis OpenStack for Kubernetes (MOSK)!

Now, the MOSK documentation set covers all product layers, including MOSK management (formerly Container Cloud). This means everything you need is in one place. Some legacy names may remain in the code and documentation and will be updated in future releases. The separate Container Cloud documentation site will be retired, so please update your bookmarks for continued easy access to the latest content.

Ceph overview

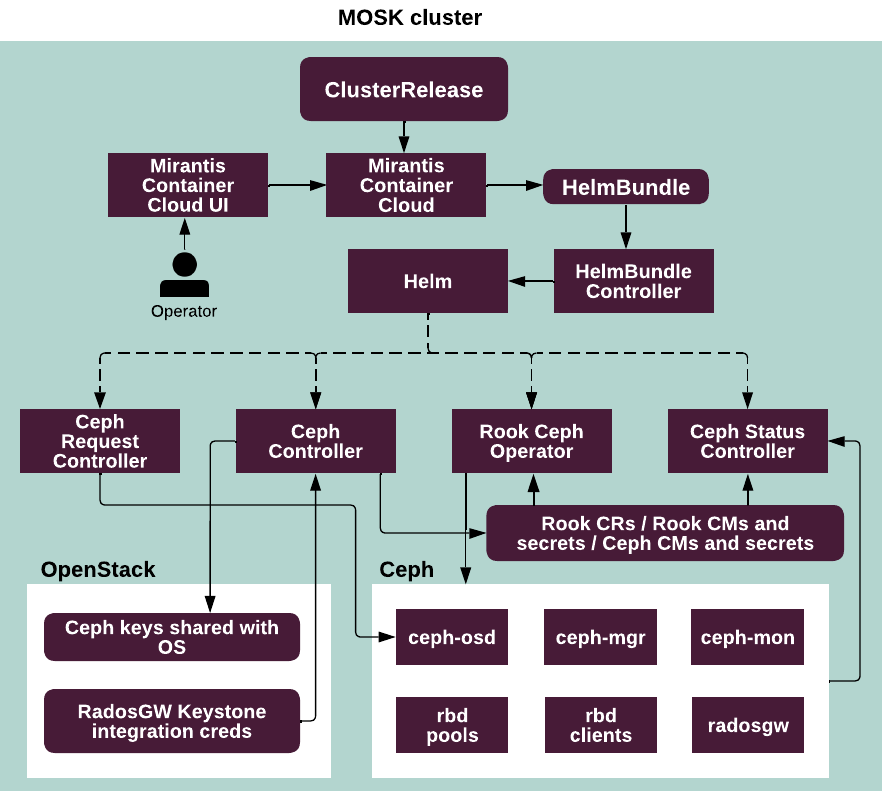

MOSK management deploys Ceph on MOSK using Helm charts with the following components:

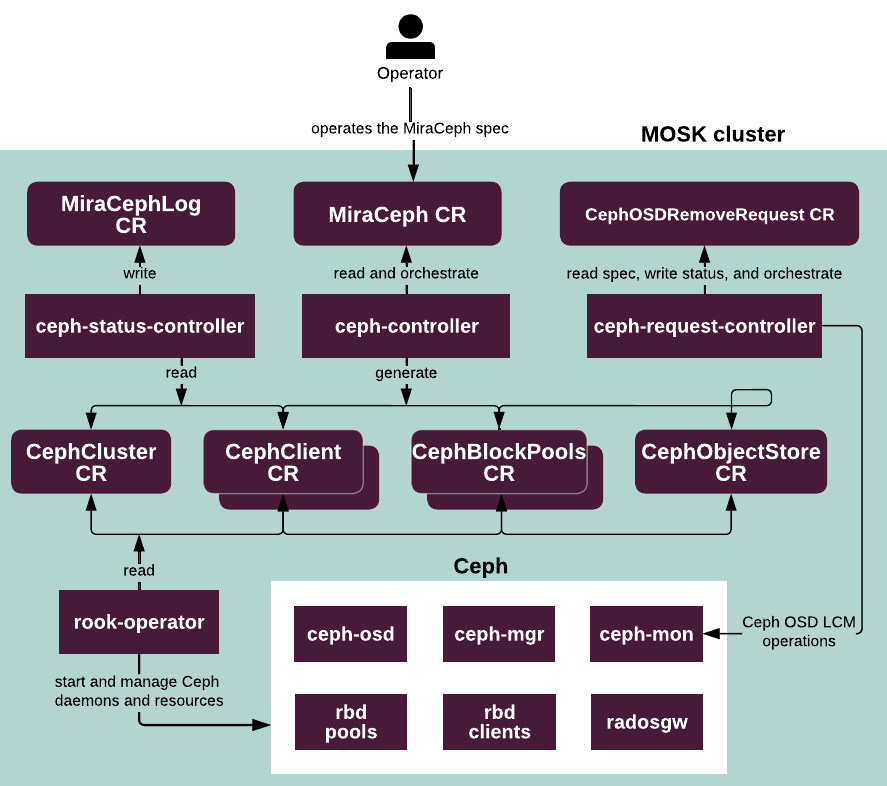

- Rook Ceph Operator

A storage orchestrator that deploys Ceph on top of a Kubernetes cluster. Also known as Rook or Rook Operator. Rook operations include:

Deploying and managing a Ceph cluster based on provided Rook CRs such as `CephCluster`, `CephBlockPool`, `CephObjectStore`, and so on.

Orchestrating the state of the Ceph cluster and all its daemons.

- Ceph cluster custom resource (Ceph cluster CR)

The `MiraCeph` CR is managed by `ceph-controller` on the MOSK cluster. It represents the customization of a Kubernetes installation and allows you to define the required Ceph configuration before deployment. For example, you can define the failure domain, Ceph pools, Ceph node roles, the number of Ceph components such as Ceph OSDs, and so on.

Warning

The `KaaSCephCluster` CR, managed by `ceph-kcc-controller` on the management cluster, is deprecated.

- Ceph Controller

A Kubernetes controller that obtains the parameters from MOSK management through a CR, creates CRs for Rook, and updates its CR status based on the Ceph cluster deployment progress. It creates users, pools, and keys for OpenStack and Kubernetes and provides the Ceph configurations and keys required to access them. Also, Ceph Controller eventually obtains data from the OpenStack Controller (Rockoon) for the Keystone integration and updates the Ceph Object Gateway service configurations to use Keystone for user authentication.

The Ceph Controller operations include:

Transforming user parameters from the MOSK Ceph CR into Rook CRs and deploying a Ceph cluster using Rook.

Providing integration of the Ceph cluster with Kubernetes.

Providing data for OpenStack to integrate with the deployed Ceph cluster.

- Ceph Status Controller

A Kubernetes controller that collects all relevant parameters from the current Ceph cluster, its daemons, and entities and exposes them in the status of the `MiraCephHealth` CR. Ceph Status Controller operations include:

Collecting all statuses from a Ceph cluster and corresponding Rook CRs.

Collecting additional information on the health of Ceph daemons.

Providing information to the `status` section of the `MiraCephHealth` CR.

- Ceph Request Controller

A Kubernetes controller that obtains the parameters from MOSK management through a CR and handles Ceph OSD lifecycle management (LCM) operations. It enables safe removal of Ceph OSDs from the Ceph cluster. Ceph Request Controller operations include:

Providing the ability to perform Ceph OSD LCM operations.

Obtaining specific CRs that request Ceph OSD removal and executing the requested operations.

Pausing the regular Ceph Controller reconciliation until all requests are completed.
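To illustrate what transforming user parameters into Rook CRs can look like, the following Python sketch converts a simplified pool definition into a Rook `CephBlockPool` manifest. It is a minimal sketch under stated assumptions, not the Ceph Controller implementation: the `PoolSpec` class and its fields, the `to_rook_block_pool` function, the `rook-ceph` namespace, and the `kubernetes-hdd` pool name are hypothetical and do not reflect the actual `MiraCeph` schema. Only the `CephBlockPool` fields used (`failureDomain`, `deviceClass`, `replicated.size`) follow the upstream Rook CRD.

```python
# Hypothetical sketch: turn a simplified pool definition into a Rook
# CephBlockPool manifest. PoolSpec and its fields are illustrative only and
# are NOT the real MiraCeph schema.
import json
from dataclasses import dataclass
from typing import Any, Dict


@dataclass
class PoolSpec:
    """Hypothetical, simplified user-facing pool parameters."""
    name: str
    device_class: str
    replicated_size: int = 3  # the replication factor described on this page
    failure_domain: str = "host"


def to_rook_block_pool(pool: PoolSpec, namespace: str = "rook-ceph") -> Dict[str, Any]:
    """Return a CephBlockPool manifest as a dict.

    apiVersion, kind, and the spec fields follow the upstream Rook
    CephBlockPool CRD; the default namespace is an assumption.
    """
    if pool.replicated_size < 1:
        raise ValueError("replicated_size must be at least 1")
    return {
        "apiVersion": "ceph.rook.io/v1",
        "kind": "CephBlockPool",
        "metadata": {"name": pool.name, "namespace": namespace},
        "spec": {
            "failureDomain": pool.failure_domain,
            "deviceClass": pool.device_class,
            "replicated": {"size": pool.replicated_size},
        },
    }


if __name__ == "__main__":
    # "kubernetes-hdd" is a hypothetical pool name.
    manifest = to_rook_block_pool(PoolSpec(name="kubernetes-hdd", device_class="hdd"))
    print(json.dumps(manifest, indent=2))
```

In this sketch the conversion is a pure function of its input, so running it repeatedly on the same pool definition always yields the same manifest.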

A typical Ceph cluster consists of the following components:

Two Ceph Managers

Three or, in rare cases, five Ceph Monitors

Ceph Object Gateway (`radosgw`). Mirantis recommends having three or more `radosgw` instances for HA.

Ceph OSDs. The number of Ceph OSDs may vary depending on deployment needs.
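The preference for three or five Ceph Monitors follows from how monitors agree on the cluster map: upstream Ceph requires a strict majority of the monitors (a quorum) to be available. The following Python sketch, in which the helper names `quorum_size` and `tolerated_failures` are hypothetical, computes the quorum size and how many monitor failures a given monitor count can tolerate.

```python
# Illustrative sketch: Ceph Monitors need a strict majority (quorum) to
# operate. The helper names below are hypothetical.


def quorum_size(monitors: int) -> int:
    """Smallest strict majority of the configured monitors."""
    if monitors < 1:
        raise ValueError("at least one monitor is required")
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """Number of monitors that can fail while a majority is still available."""
    return monitors - quorum_size(monitors)


if __name__ == "__main__":
    for n in (3, 4, 5):
        print(f"{n} monitors: quorum={quorum_size(n)}, "
              f"tolerated failures={tolerated_failures(n)}")
```

For 3, 4, and 5 monitors, the sketch reports 1, 1, and 2 tolerated failures respectively, so a fourth monitor adds no fault tolerance over three.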

Warning

A Ceph cluster with 3 Ceph nodes does not provide hardware fault tolerance and is not eligible for recovery operations, such as a disk or an entire Ceph node replacement.

A Ceph cluster uses a replication factor of 3. If the number of Ceph OSDs is less than 3, the Ceph cluster moves to the degraded state and restricts write operations until the number of alive Ceph OSDs equals the replication factor again.
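The two rules in the warning above can be expressed as a short Python sketch. It assumes a host-level failure domain, where each replica must land on a different Ceph node, and encodes the simplified write rule exactly as stated in the warning; in upstream Ceph, the actual behavior also depends on per-pool settings such as `min_size` and on the CRUSH rules in use. The constant and function names are hypothetical.

```python
# Simplified model of the warning's rules. Assumes one replica per Ceph node
# (host failure domain); real behavior also depends on pool min_size and CRUSH
# rules. All names are hypothetical.

REPLICATION_FACTOR = 3


def writes_restricted(alive_osds: int, size: int = REPLICATION_FACTOR) -> bool:
    """Writes are restricted while fewer OSDs are alive than the replication factor."""
    return alive_osds < size


def can_recover_after_node_loss(nodes: int, size: int = REPLICATION_FACTOR) -> bool:
    """After losing one node, full redundancy can be restored only if the
    remaining nodes can still hold `size` replicas on distinct nodes."""
    return nodes - 1 >= size


if __name__ == "__main__":
    print(writes_restricted(alive_osds=2))       # True
    print(can_recover_after_node_loss(nodes=3))  # False
    print(can_recover_after_node_loss(nodes=4))  # True
```

With three Ceph nodes, losing one leaves only two nodes for three replicas, which is why such a cluster cannot recover to full redundancy after a node replacement scenario.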

The placement of Ceph Monitors and Ceph Managers is defined in the `MiraCeph` CR.

The following diagram illustrates the way a Ceph cluster is deployed in MOSK:

The following diagram illustrates the processes within a deployed Ceph cluster:

See also