Mirantis Container Cloud (MCC) becomes part of Mirantis OpenStack for Kubernetes (MOSK)!

Now, the MOSK documentation set covers all product layers, including MOSK management (formerly Container Cloud). This means everything you need is in one place. Some legacy names may remain in the code and documentation and will be updated in future releases. The separate Container Cloud documentation site will be retired, so please update your bookmarks for continued easy access to the latest content.

MOSK cluster networking

Mirantis OpenStack for Kubernetes (MOSK) clusters managed by MOSK management use the following networks to serve different types of traffic:

| Network role | Description |
|---|---|
| Provisioning (PXE) network | Enables remote booting of servers through the Preboot eXecution Environment (PXE) protocol. The PXE subnet provides IP addresses for DHCP and network boot of the bare metal hosts for initial inspection and operating system provisioning using the bare metal provisioning service (Ironic). This network may not have a default gateway or a router connected to it. The operator defines the PXE subnet during bootstrap. In management clusters, the DHCP server listens on this network for host discovery and inspection. In MOSK clusters, hosts use this network only for the initial PXE boot and provisioning, and this network must not be configured on an operational MOSK node after its provisioning. For requirements, see DHCP range requirements for PXE. |
| Life-cycle management (LCM) network | Connects LCM Agents running on the hosts to the MOSK LCM API. The LCM API is provided by the management cluster. The LCM network is also used for communication between … The LCM subnets provide IP addresses that are statically allocated by the IPAM service to bare metal hosts. This network must be connected to the Kubernetes API endpoint of the management cluster through an IP router. LCM Agents running on MOSK clusters connect to the management cluster API through this router. LCM subnets may differ between MOSK clusters as long as this connection requirement is satisfied. You can use more than one LCM network segment in a MOSK cluster. In this case, separate L2 segments and interconnected L3 subnets are still used to serve LCM and API traffic. All IP subnets in the LCM networks must be connected to each other by IP routes, which must be configured on the hosts through L2 templates. All IP subnets in the LCM network must also be connected to the Kubernetes API endpoints of the management cluster through an IP router. You can manually select the load balancer IP address for external access to the cluster API and specify it in the ARP or BGP announcement. When using the ARP announcement of the IP address for the cluster API load balancer, the following limitations apply: … When using the BGP announcement of the IP address for the cluster API load balancer, which is available as Technology Preview, no segment stretching is required between Kubernetes master nodes. Also, in this scenario, the load balancer IP address is not required to match the LCM subnet CIDR address. Depending on cluster needs, an operator can select how the VIP address for the Kubernetes API is advertised. When BGP advertisement is used, or when the OpenStack control plane is deployed on separate nodes as opposed to a compact control plane, the configuration is more flexible and no compromise such as the one described below is needed. However, when using ARP advertisement on a compact control plane, the choice of network for advertising the VIP address for the Kubernetes API may depend on whether symmetry of service return traffic is required. Therefore, when using ARP advertisement on a compact control plane, select one of the options listed under "Network selection for advertising the VIP address for Kubernetes API on a compact control plane". |
| Kubernetes workloads (pods) network | Serves as an underlay network for traffic between pods in the MOSK cluster. Do not share this network between clusters. There might be more than one Kubernetes pods network segment in the cluster. In this case, they must be connected through an IP router. The Kubernetes workloads network does not need external access. The Kubernetes workloads subnets provide IP addresses that are statically allocated by the IPAM service to all nodes and that are used by Calico for cross-node communication inside a cluster. By default, VXLAN overlay is used for Calico cross-node communication. |
| Kubernetes external network | Provides access to the OpenStack endpoints in a MOSK cluster. When using the ARP announcement of the external endpoints of load-balanced services, the network must contain a VLAN segment extended to all MOSK nodes connected to this network. When using the BGP announcement of the external endpoints of load-balanced services, which is available as Technology Preview, a single VLAN segment extended to all MOSK nodes connected to this network is not required. A typical MOSK cluster has only one external network. The external network must include at least two IP address ranges defined by separate … |
| Storage access network | Carries the storage access traffic to and from Ceph OSD services. A MOSK cluster may have more than one VLAN segment and IP subnet in the storage access network. All IP subnets of this network in a single cluster must be connected by an IP router. The storage access network does not require external access unless you want to directly expose Ceph to clients outside of the MOSK cluster. Note: Direct access to Ceph by clients outside of a MOSK cluster is technically possible but not supported by Mirantis. Use it at your own risk. The IP addresses from subnets in this network are statically allocated by the IPAM service to Ceph nodes. The Ceph OSD services bind to these addresses on their respective nodes. This is a public network in Ceph terms.[1] |
| Storage replication network | Carries the storage replication traffic between Ceph OSD services. A MOSK cluster may have more than one VLAN segment and IP subnet in this network as long as the subnets are connected by an IP router. This network does not require external access. The IP addresses from subnets in this network are statically allocated by the IPAM service to Ceph nodes. The Ceph OSD services bind to these addresses on their respective nodes. This is a cluster network in Ceph terms.[1] |
| Out-of-Band (OOB) network | Connects Baseboard Management Controllers (BMCs) of the bare metal hosts. Must not be accessible from a MOSK cluster. |

[1] For more details about Ceph networks, see Ceph Network Configuration Reference.
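As a rough illustration of the Ceph terms used in the storage rows, the following Python sketch maps the subnets of the storage access network to Ceph's `public_network` option and those of the storage replication network to `cluster_network`. Ceph accepts a comma-separated list of subnets for both options, which fits the possibility of having several IP subnets per network. The helper name `ceph_network_options` and the example subnets are hypothetical, and MOSK configures Ceph itself, so this is not how you set these values in a MOSK cluster. The overlap check is an added assumption rather than a documented MOSK requirement.

```python
import ipaddress
from typing import Dict, Iterable


def ceph_network_options(
    storage_access: Iterable[str], storage_replication: Iterable[str]
) -> Dict[str, str]:
    """Map MOSK storage subnets onto Ceph network options (illustrative only).

    storage_access: CIDRs of the storage access network (Ceph "public network").
    storage_replication: CIDRs of the storage replication network (Ceph "cluster network").
    """
    public = [ipaddress.ip_network(cidr) for cidr in storage_access]
    cluster = [ipaddress.ip_network(cidr) for cidr in storage_replication]

    # Assumption: the two roles use non-overlapping subnets.
    for pub in public:
        for clu in cluster:
            if pub.overlaps(clu):
                raise ValueError(
                    f"storage access subnet {pub} overlaps replication subnet {clu}"
                )

    # Ceph accepts several subnets per option as a comma-separated list.
    return {
        "public_network": ", ".join(str(net) for net in public),
        "cluster_network": ", ".join(str(net) for net in cluster),
    }


if __name__ == "__main__":
    # Hypothetical subnets: two storage access subnets connected by an IP router,
    # and one storage replication subnet.
    print(ceph_network_options(["10.10.0.0/24", "10.10.1.0/24"], ["10.20.0.0/24"]))
    # → {'public_network': '10.10.0.0/24, 10.10.1.0/24', 'cluster_network': '10.20.0.0/24'}
```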

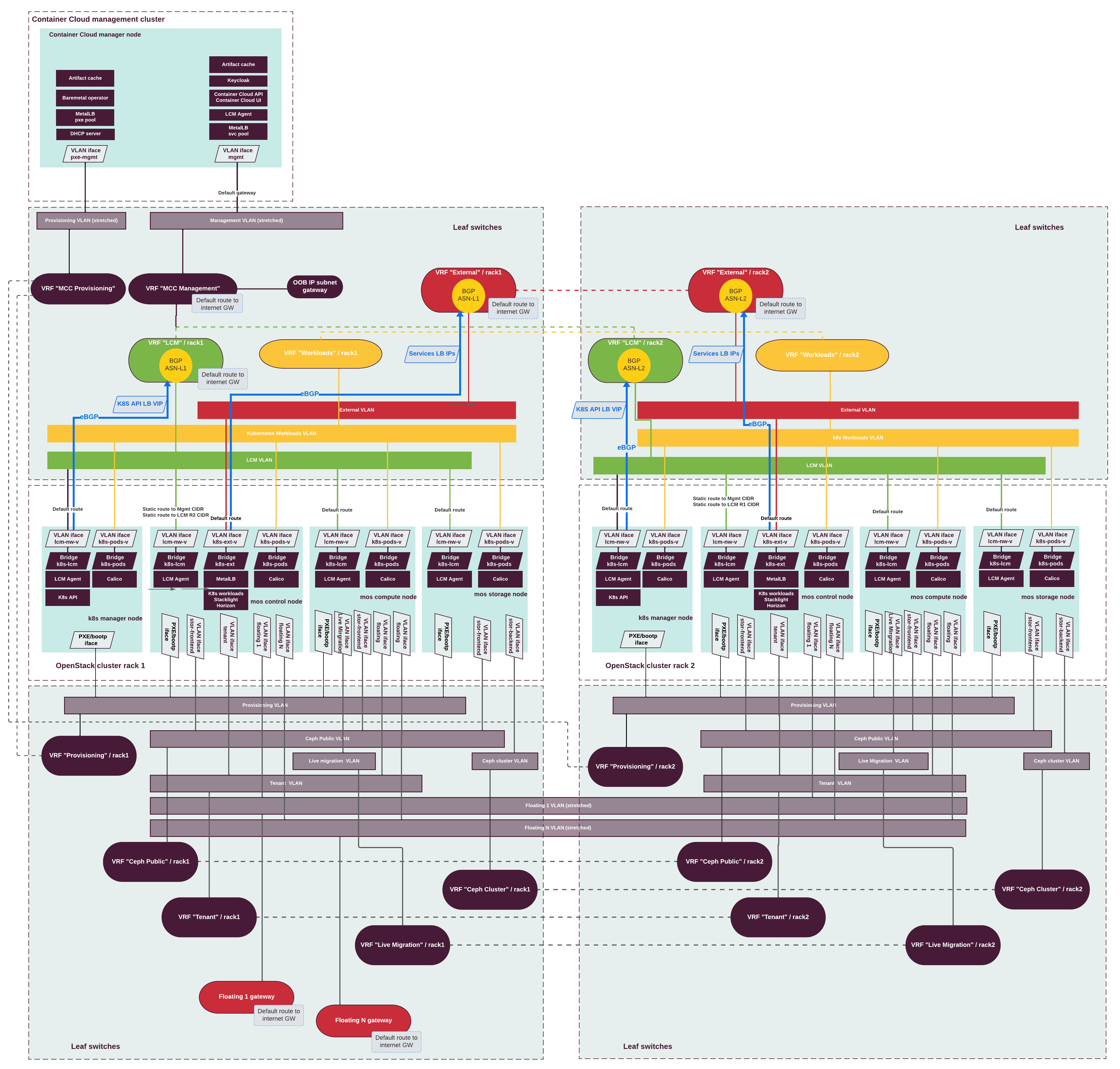

The following diagram illustrates the networking schema of a management cluster deployment on bare metal with a MOSK cluster using ARP announcements:

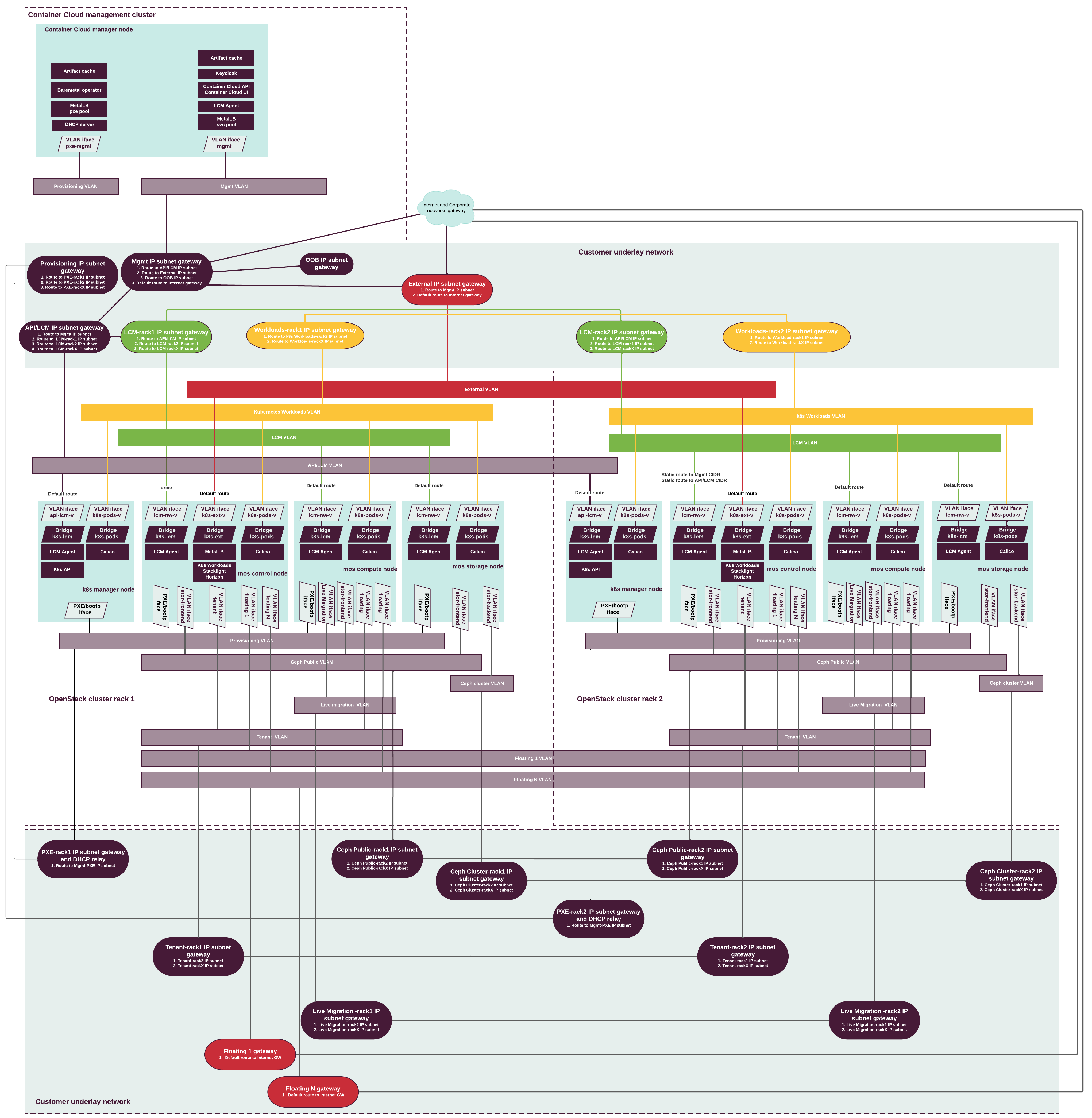
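The Kubernetes API VIP rules from the LCM network description can be expressed as a pre-deployment check. The following Python sketch assumes that with ARP announcement the VIP must fall inside the LCM subnet CIDR and that all Kubernetes master nodes must share one stretched L2 segment. Both constraints are inferred from the statement that BGP announcement removes them, and the full list of ARP limitations may contain more. The `MasterNode` type, the `check_api_vip` helper, and the segment labels are hypothetical and not part of MOSK.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class MasterNode:
    name: str
    l2_segment: str  # hypothetical label of the L2 segment used by the node's LCM interface


def check_api_vip(
    vip: str, lcm_subnet: str, masters: Iterable[MasterNode], announcement: str
) -> List[str]:
    """Return problems with the Kubernetes API VIP placement (empty list if none)."""
    if announcement not in ("arp", "bgp"):
        raise ValueError(f"unknown announcement type: {announcement!r}")

    # BGP (Technology Preview): no segment stretching between master nodes
    # and no need for the VIP to match the LCM subnet CIDR.
    if announcement == "bgp":
        return []

    problems = []
    subnet = ipaddress.ip_network(lcm_subnet)

    # ARP (inferred): the VIP must belong to the LCM subnet CIDR.
    if ipaddress.ip_address(vip) not in subnet:
        problems.append(f"VIP {vip} is outside LCM subnet {subnet}")

    # ARP (inferred): all master nodes must share one stretched L2 segment.
    segments = sorted({m.l2_segment for m in masters})
    if len(segments) > 1:
        problems.append(f"master nodes span several L2 segments: {segments}")
    return problems


if __name__ == "__main__":
    masters = [
        MasterNode("master-1", "rack-a"),
        MasterNode("master-2", "rack-b"),
        MasterNode("master-3", "rack-a"),
    ]
    for mode in ("arp", "bgp"):
        print(mode, check_api_vip("10.0.12.5", "10.0.11.0/24", masters, mode))
```

With BGP the helper returns an empty list for any input, mirroring the more flexible configuration described for BGP advertisement.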

MOSK supports full L3 networking topology in the Technology Preview scope. The following diagram illustrates the networking schema of a management cluster deployment on bare metal with a MOSK cluster using BGP announcements: